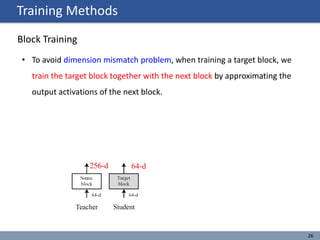

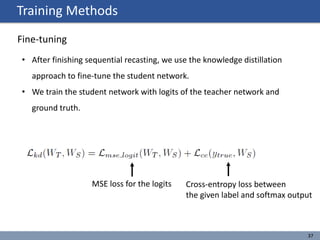

The document discusses 'network recasting,' a method for transforming neural network architectures to improve their efficiency and performance by replacing pretrained blocks with new target blocks. It outlines various training methods, including sequential recasting to mitigate the vanishing gradient problem, and demonstrates how the technique effectively reduces filter counts and accelerates inference times. Experiments indicate that network recasting achieves significant speed-ups and reduces errors compared to previous methods, validating its potential in deep learning optimization.

![Related Works

5

Hardware architecture

Intel Skylake architecture [1]

NVIDIA Turing architecture [2]

• Traditional computer architectures are not efficient for DNNs.

• NVDIA introduced Tensor cores to accelerate DNNs.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-5-320.jpg)

![Related Works

6

DL accelerator

DianNao architecture [3] ZeNa architecture [4]

• To accelerate neural network, several accelerators are also introduced.

• DNNs consists of simple operations (MAC), so it is easy to accelerate.

• In addition, conditional memory access is also possible thanks to pruning.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-6-320.jpg)

![Related Works

7

Network architecture

Big-Little architecture [5]

ShuffleNet v2 architecture [6]

• Many network architectures are introduced to improve performance.

• In addition, many research also focus on light-weight

and light-computation CNN architecture.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-7-320.jpg)

![Related Works

8

Compression (pruning)

Example of pruning method: ThiNet [7]

• Pruning-based network compression methods were introduced.

• After training, we can remove weak weights or filters.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-8-320.jpg)

![Related Works

9

Compression (distillation)

Knowledge distillation [8]

• By distilling knowledge from cumbersome model, small network can

achieve higher accuracy compared with conventional training method.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-9-320.jpg)

![Related Works

10

Compression (distillation)

Deep mutual learning [9]

• By distilling knowledge from cumbersome model, small network can

achieve higher accuracy compared with conventional training method.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-10-320.jpg)

![Related Works

11

Compression (distillation)

Deep mutual learning [10]

• In addition, knowledge distillation enables reducing network depth and

architecture transformation.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-11-320.jpg)

![Reference

60

• [1] https://wccftech.com/idf15-intel-skylake-analysis-cpu-gpu-microarchitecture-ddr4-memory-impact/3/

• [2] https://devblogs.nvidia.com/nvidia-turing-architecture-in-depth/

• [3] Chen, Tianshi, et al. Diannao: A small-footprint high-throughput accelerator for ubiquitous machine-learning. In ASPLOS,

2014.

• [4] Kim, Dongyoung, et al. Zena: Zero-aware neural network accelerator. IEEE Design & Test 35.1 (2018): 39-46.

• [5] Chen, Chun-Fu, et al. Big-Little Net: An Efficient Multi-Scale Feature Representation for Visual and Speech Recognition.

In ICLR, 2019.

• [6] Ma, Ningning, et al. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In ECCV. 2018.

• [7] Luo, J.-H., et al. ThiNet: A filter level pruning method for deep neural network compression. In ICCV, 2017.

• [8] Hinton, G. et al. Distilling the knowledge in a neural network. In NIPS Deep Learning and Representation Learning

Workshop, 2014.

• [9] Zhang, Ying, et al. Deep mutual learning. IN CVPR. 2018.

• [10] Yim, Junho, et al. A gift from knowledge distillation: Fast optimization, network minimization and transfer learning. In

CVPR. 2017.](https://image.slidesharecdn.com/networkrecasting-190329011421/85/Network-recasting-60-320.jpg)