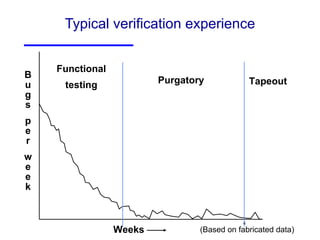

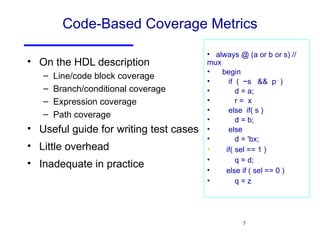

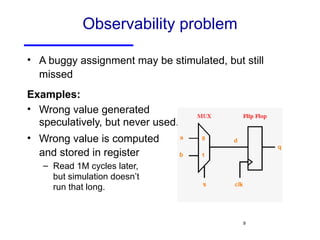

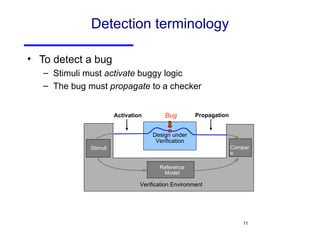

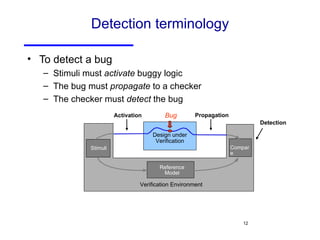

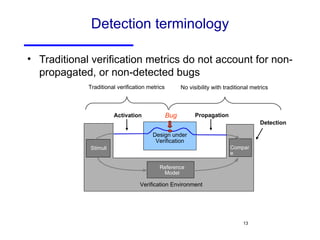

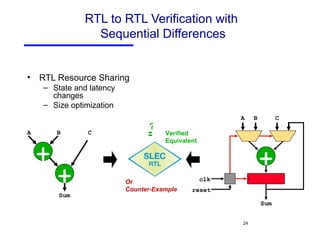

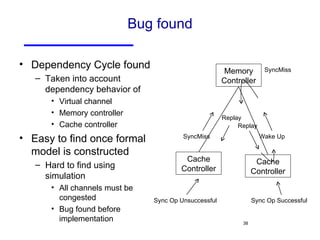

The document discusses three major problems in verification: specifying the properties to check, specifying the environment, and computational complexity. It then presents several approaches to these problems: coverage metrics tailored to detection ability, sequential equivalence checking to avoid writing testbenches, and "perspective-based verification," which uses minimal abstract models, each focused on a specific class of properties. Working with such abstract models allows verification earlier in the design process, when changes are more tractable, so that bugs are caught before implementation.
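To make the idea of sequential equivalence checking concrete, here is a minimal sketch, not the method from the slides. It assumes two small designs modeled as Python step functions of the form `step(state, inp) -> (next_state, output)` and explores their product machine breadth-first, so no testbench or hand-written stimulus is needed. All names here (`seq_equivalent`, `counter_int`, `counter_bits`, `counter_buggy`) are hypothetical and chosen only for illustration; production tools work symbolically rather than by explicit enumeration.

```python
from collections import deque


def seq_equivalent(init_a, step_a, init_b, step_b, inputs):
    """Explore the product machine of two designs breadth-first.

    Returns (True, None) if no reachable state pair disagrees on an
    output, else (False, trace), where trace is a shortest input
    sequence that exposes the mismatch.
    """
    start = (init_a, init_b)
    seen = {start}
    queue = deque([(start, [])])
    while queue:
        (sa, sb), trace = queue.popleft()
        for inp in inputs:
            na, out_a = step_a(sa, inp)
            nb, out_b = step_b(sb, inp)
            if out_a != out_b:
                return False, trace + [inp]
            nxt = (na, nb)
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, trace + [inp]))
    return True, None


# Hypothetical design A: integer counter modulo 4, output 1 on wrap-around.
def counter_int(state, enable):
    if not enable:
        return state, 0
    nxt = (state + 1) % 4
    return nxt, int(nxt == 0)


# Hypothetical design B: the same counter as two explicit bits (hi, lo).
def counter_bits(state, enable):
    hi, lo = state
    if not enable:
        return (hi, lo), 0
    carry = hi & lo  # wraps only when both bits are set
    return (hi ^ lo, lo ^ 1), carry


# Hypothetical buggy variant: carry computed with OR instead of AND.
def counter_buggy(state, enable):
    hi, lo = state
    if not enable:
        return (hi, lo), 0
    return (hi ^ lo, lo ^ 1), hi | lo


print(seq_equivalent(0, counter_int, (0, 0), counter_bits, (0, 1)))
print(seq_equivalent(0, counter_int, (0, 0), counter_buggy, (0, 1)))
```

The first call reports the two counters as equivalent; the second returns a counterexample trace of two enabled cycles, after which the buggy carry fires early. Explicit enumeration like this only scales to tiny state spaces, which is one face of the computational complexity problem mentioned above.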