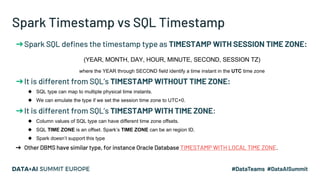

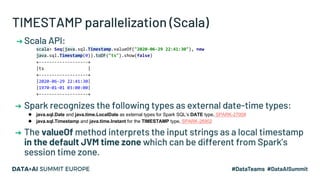

The document provides an in-depth analysis of dates and timestamps in Apache Spark 3.0, discussing the transition from the Julian to the Proleptic Gregorian calendar and the implications for date type handling. It covers how date and timestamp values are structured, created, and manipulated, including functions introduced in Spark 3.0 like make_date and make_timestamp. Additionally, the document addresses the complexities of time zone management, including resolving overlapping timestamps and differences in handling between Spark and SQL timestamps.

![Constructing a DATE column

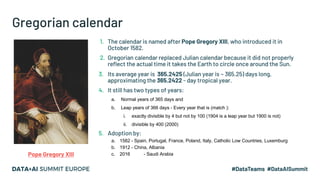

▪ MAKE_DATE - new function in Spark 3.0, SPARK-28432

>>> spark.createDataFrame([(2020, 6, 26), (1000, 2, 29), (-44, 1, 1)], ['Y', 'M', 'D']).createTempView('YMD')

>>> df = sql('select MAKE_DATE(Y, M, D) as date from YMD')

▪ Parallelization of java.time.LocalDate, SPARK-27008

scala> Seq(java.time.LocalDate.of(2020, 2, 29), java.time.LocalDate.now).toDF("date")

▪ Casting from strings or typed literal, SPARK-26618

SELECT DATE '2019-01-14'

SELECT CAST('2019-01-14' AS DATE)

▪ Parsing by to_date() (in JSON and CSV datasource)

Spark 3.0 uses new parser but if it fails you can switch to old one by set

spark.sql.legacy.timeParserPolicy to LEGACY, SPARK-30668

▪ CURRENT_DATE - catch the current date at start of a query, SPARK-33015](https://image.slidesharecdn.com/134maximgekk-201129184839/85/Comprehensive-View-on-Date-time-APIs-of-Apache-Spark-3-0-9-320.jpg)

![Constructing a TIMESTAMP column

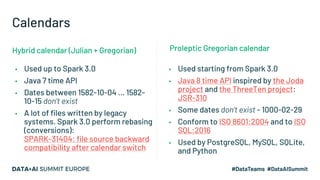

▪ MAKE_TIMESTAMP - new function in Spark 3.0, SPARK-28459

>>> df = spark.createDataFrame([(2020, 6, 28, 10, 31, 30.123456), (1582, 10, 10, 0, 1, 2.0001), (2019, 2, 29, 9, 29, 1.0)],

... ['YEAR', 'MONTH', 'DAY', 'HOUR', 'MINUTE', 'SECOND'])

ts = df.selectExpr("make_timestamp(YEAR, MONTH, DAY, HOUR, MINUTE, SECOND) as MAKE_TIMESTAMP")

▪ Parallelization of java.time.Instant, SPARK-26902

scala> Seq(java.time.Instant.ofEpochSecond(-12219261484L), java.time.Instant.EPOCH).toDF("ts").show

▪ Casting from strings or typed literal, SPARK-26618

SELECT TIMESTAMP '2019-01-14 20:54:00.000'

SELECT CAST('2019-01-14 20:54:00.000' AS TIMESTAMP)

▪ Parsing by to_timestamp() (in JSON and CSV datasource)

Spark 3.0 uses new parser but if it fails you can switch to old one by set

spark.sql.legacy.timeParserPolicy to LEGACY, SPARK-30668

▪ CURRENT_TEMESTAMP - the timestamp at start of a query, SPARK-27035](https://image.slidesharecdn.com/134maximgekk-201129184839/85/Comprehensive-View-on-Date-time-APIs-of-Apache-Spark-3-0-21-320.jpg)

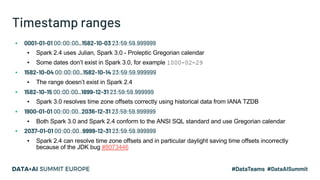

![TIMESTAMP parallelization (Python)

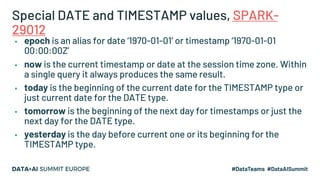

➔ PySpark:

>>> import datetime

>>> df = spark.createDataFrame([(datetime.datetime(2020, 7, 1, 0, 0, 0),

... datetime.date(2020, 7, 1))], ['timestamp', 'date'])

>>> df.show()

+-------------------+----------+

| timestamp| date|

+-------------------+----------+

|2020-07-01 00:00:00|2020-07-01|

+-------------------+----------+

➔ PySpark converts Python’s datetime objects to internal Spark SQL

representations at the driver side using the system time zone

➔ The system time zone can be different from Spark’s session time

zone settings spark.sql.session.timeZone](https://image.slidesharecdn.com/134maximgekk-201129184839/85/Comprehensive-View-on-Date-time-APIs-of-Apache-Spark-3-0-22-320.jpg)

![Collecting dates and timestamps

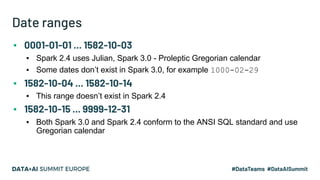

➔ Spark transfers internal values of dates and timestamps columns as

time instants in the UTC time zone from executors to the driver:

>>> df.collect()

[Row(timestamp=datetime.datetime(2020, 7, 1, 0, 0), date=datetime.date(2020, 7, 1))]

➔ And performs conversions to Python datetime objects in the system

time zone at the driver, NOT using Spark SQL session time zone

➔ In Java and Scala APIs, Spark performs the following conversions by

default:

◆ Spark SQL’s DATE values are converted to instances of java.sql.Date.

◆ Timestamps are converted to instances of java.sql.Timestamp.

➔ When spark.sql.datetime.java8API.enabled is true:

◆ java.time.LocalDate for Spark SQL’s DATE type, SPARK-27008

◆ java.time.Instant for Spark SQL’s TIMESTAMP type, SPARK-26902](https://image.slidesharecdn.com/134maximgekk-201129184839/85/Comprehensive-View-on-Date-time-APIs-of-Apache-Spark-3-0-26-320.jpg)