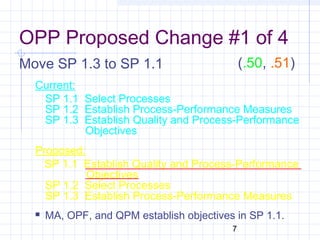

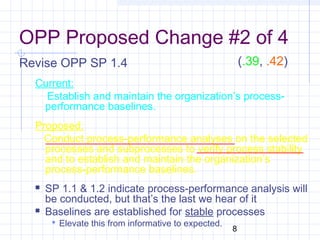

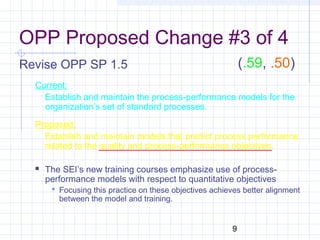

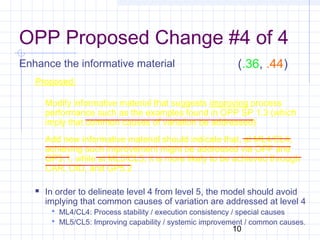

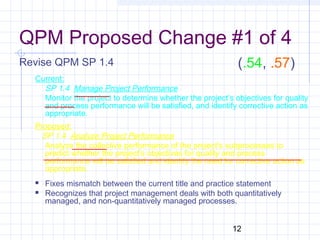

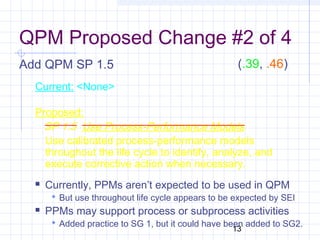

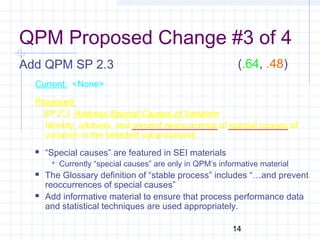

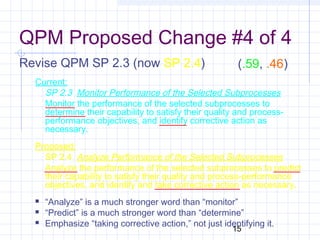

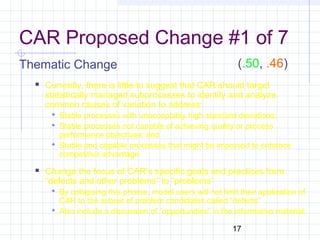

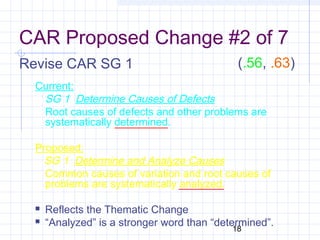

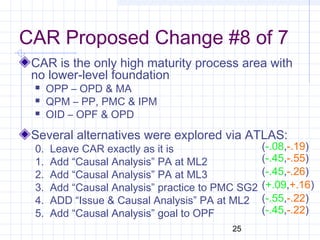

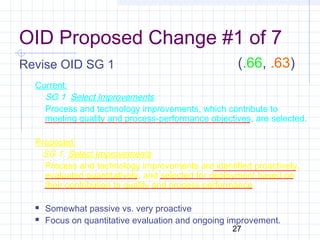

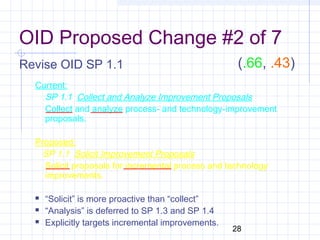

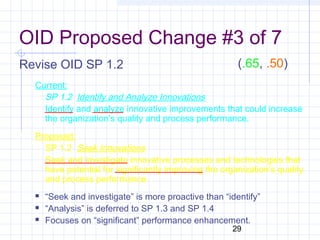

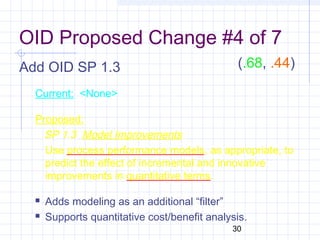

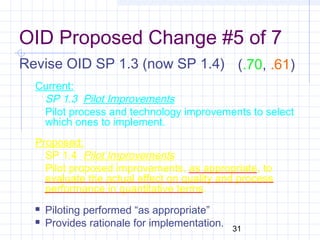

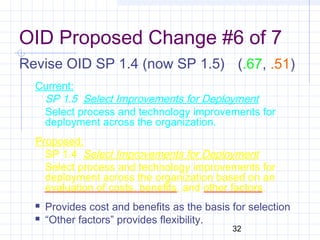

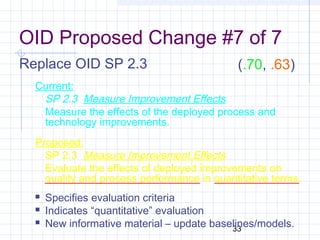

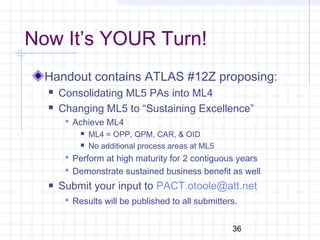

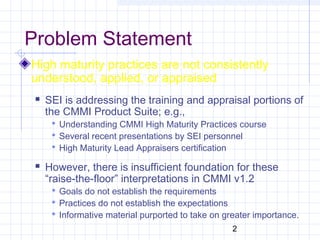

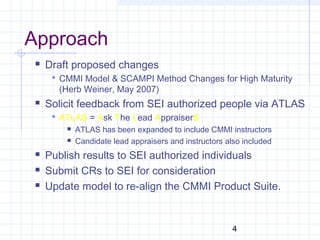

This document proposes changes to the CMMI model to better align it with high maturity practices as understood by the SEI. It describes proposed changes to specific goals and practices in the Organizational Process Performance (OPP), Quantitative Project Management (QPM), Causal Analysis and Resolution (CAR), and Organizational Innovation and Deployment (OID) process areas, based on feedback from CMMI instructors and appraisers. The changes aim to make high maturity practices more consistently understood, applied, and appraised. They do this by emphasizing quantitative process management, the use of process performance models, attention to both common and special causes of variation, and continual incremental and innovative process improvement.

![5

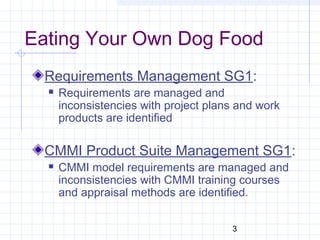

ATLAS Feedback

For each proposed change, respondents indicated:

Strongly support (It’s perfect!)

Support (It’s better)

Are ambivalent (It’s OK either way)

Disagree (It’s worse)

Strongly disagree (What were you thinking?)

Ratings were determined on a +1 to -1 scale as follows:

Strongly support = +1.0

Support = +0.5

Ambivalent = 0.0

Disagree = -0.5

Strongly disagree = -1.0

For each change, the average rating will be displayed for:

[High Maturity Lead Appraisers, Other SEI authorized individuals]](https://image.slidesharecdn.com/cmmihm2008sepgmodelchangesforhighmaturity1v011-150525024656-lva1-app6892/85/Cmmi-hm-2008-sepg-model-changes-for-high-maturity-1v01-1-5-320.jpg)
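To make the averaging concrete, here is a minimal sketch of how per-group average ratings could be computed from the +1 to -1 scale above. The function names `average_rating` and `average_by_group` and the sample responses are hypothetical and are used for illustration only. They are not the ATLAS survey's actual tooling or data.

```python
from statistics import mean

# Score for each response option, following the +1 to -1 scale described above.
RATING_SCALE = {
    "Strongly support": 1.0,
    "Support": 0.5,
    "Ambivalent": 0.0,
    "Disagree": -0.5,
    "Strongly disagree": -1.0,
}


def average_rating(responses):
    """Return the mean score of a list of response labels for one change."""
    if not responses:
        raise ValueError("no responses to average")
    return mean(RATING_SCALE[r] for r in responses)


def average_by_group(responses_by_group):
    """Map each respondent group to its average rating for one change."""
    return {group: average_rating(rs) for group, rs in responses_by_group.items()}


# Hypothetical responses for a single proposed change (illustrative only).
example = {
    "High Maturity Lead Appraisers": ["Strongly support", "Support", "Disagree"],
    "Other SEI authorized individuals": ["Support", "Ambivalent"],
}

print(average_by_group(example))
```

Keeping the two respondent groups separate, rather than pooling every response, lets a reader see whether high maturity lead appraisers and other authorized individuals differ in their view of a given change.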