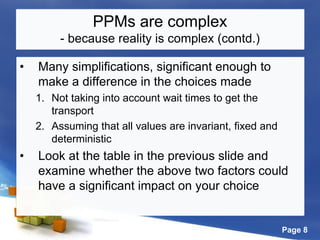

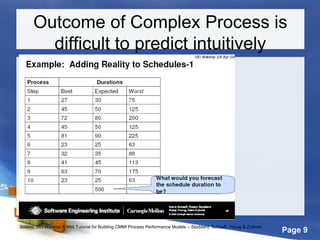

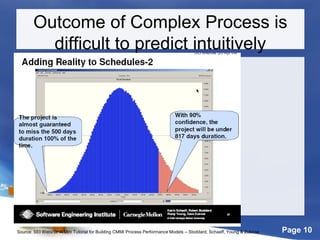

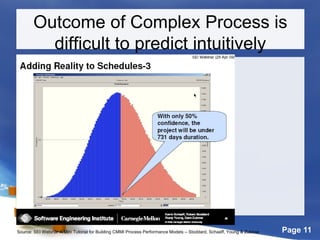

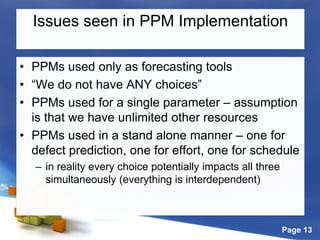

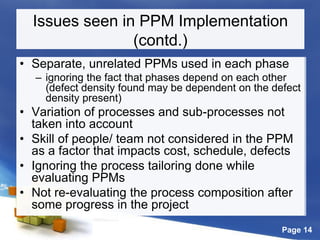

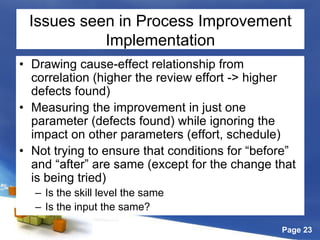

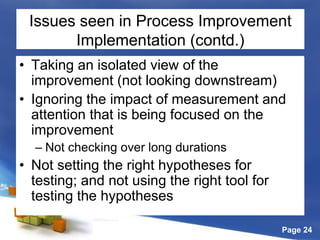

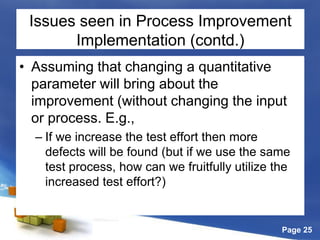

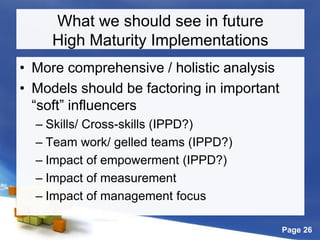

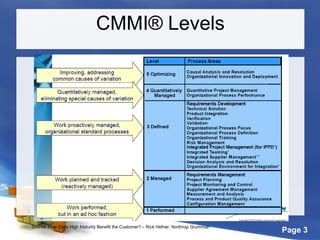

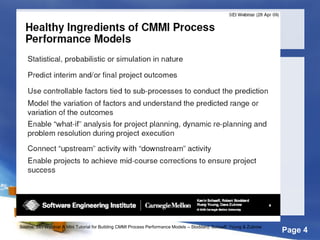

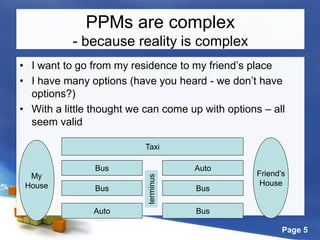

This document discusses high maturity process implementation and common pitfalls. It begins by outlining the agenda, which includes process performance models, sub-process control, managing process improvements, and typical misconceptions and pitfalls. It then discusses how process performance models are complex because reality is complex, and outlines simplifications commonly made. It also notes that outcomes of complex processes are difficult to intuitively predict. The document concludes by identifying common issues seen in implementing high maturity practices and what should be seen in future high maturity implementations to address these issues.

![Page 6

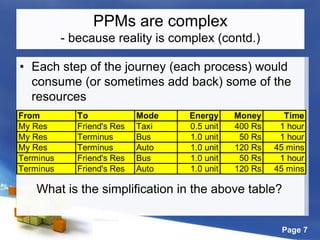

• There are combination of resources that I would

like to optimize

– Energy level (physical, emotional) [Quality]

– Money [Cost/ effort]

– Elapsed time [Schedule]

(some may be more important than others, some may

start pinching when they cross a threshold)

• I may also have constraints on some of the

resources (e.g., I can spend a max of 3 hours

elapsed time; or I don’t want to spend more than

Rs 500 on the journey)

PPMs are complex

- because reality is complex (contd.)](https://image.slidesharecdn.com/hmmisconceptionspitfalls-100718095213-phpapp02/85/CMMI-High-Maturity-Misconceptions-and-Pitfalls-6-320.jpg)
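The trade-off on this slide can be sketched as a small constrained selection problem. This is a minimal illustration, not the presenter's model. The travel options, the weights, the energy scale, and the "pinch" threshold are all hypothetical values invented for the example. Only the two constraints (at most 3 hours elapsed, at most Rs 500) come from the slide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    energy: float  # energy drain on a hypothetical 0-10 scale [Quality]
    money: float   # cost in Rs [Cost/effort]
    hours: float   # elapsed time in hours [Schedule]


# Hypothetical journey options; the numbers are made up for illustration.
OPTIONS = [
    Option("bus", energy=7, money=60, hours=3.5),
    Option("train", energy=5, money=150, hours=2.5),
    Option("taxi", energy=2, money=900, hours=1.5),
    Option("shared cab", energy=3, money=400, hours=2.0),
]

# Hard constraints taken from the slide.
MAX_HOURS = 3.0
MAX_MONEY = 500.0

# Assumed relative importance of each resource (some matter more than others).
WEIGHTS = {"energy": 10.0, "money": 0.1, "hours": 20.0}

# Assumed threshold above which energy drain "starts pinching".
ENERGY_THRESHOLD = 6.0
PINCH_PENALTY = 50.0


def feasible(option: Option) -> bool:
    """An option is feasible only if it respects both hard constraints."""
    return option.hours <= MAX_HOURS and option.money <= MAX_MONEY


def score(option: Option) -> float:
    """Weighted cost of an option; lower is better."""
    total = (
        WEIGHTS["energy"] * option.energy
        + WEIGHTS["money"] * option.money
        + WEIGHTS["hours"] * option.hours
    )
    # Extra penalty once energy drain crosses the threshold.
    if option.energy > ENERGY_THRESHOLD:
        total += PINCH_PENALTY * (option.energy - ENERGY_THRESHOLD)
    return total


def best_option(options):
    """Return the lowest-scoring feasible option, or None if none is feasible."""
    candidates = [o for o in options if feasible(o)]
    return min(candidates, key=score) if candidates else None


if __name__ == "__main__":
    choice = best_option(OPTIONS)
    print(choice.name if choice else "no feasible option")
```

With these assumed numbers, the bus and the taxi are ruled out by the constraints before any scoring happens, and the remaining options are compared on the weighted sum. Changing the weights or the threshold can change the winner, which is the point of the slide: even a simple everyday decision already has several interacting factors.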