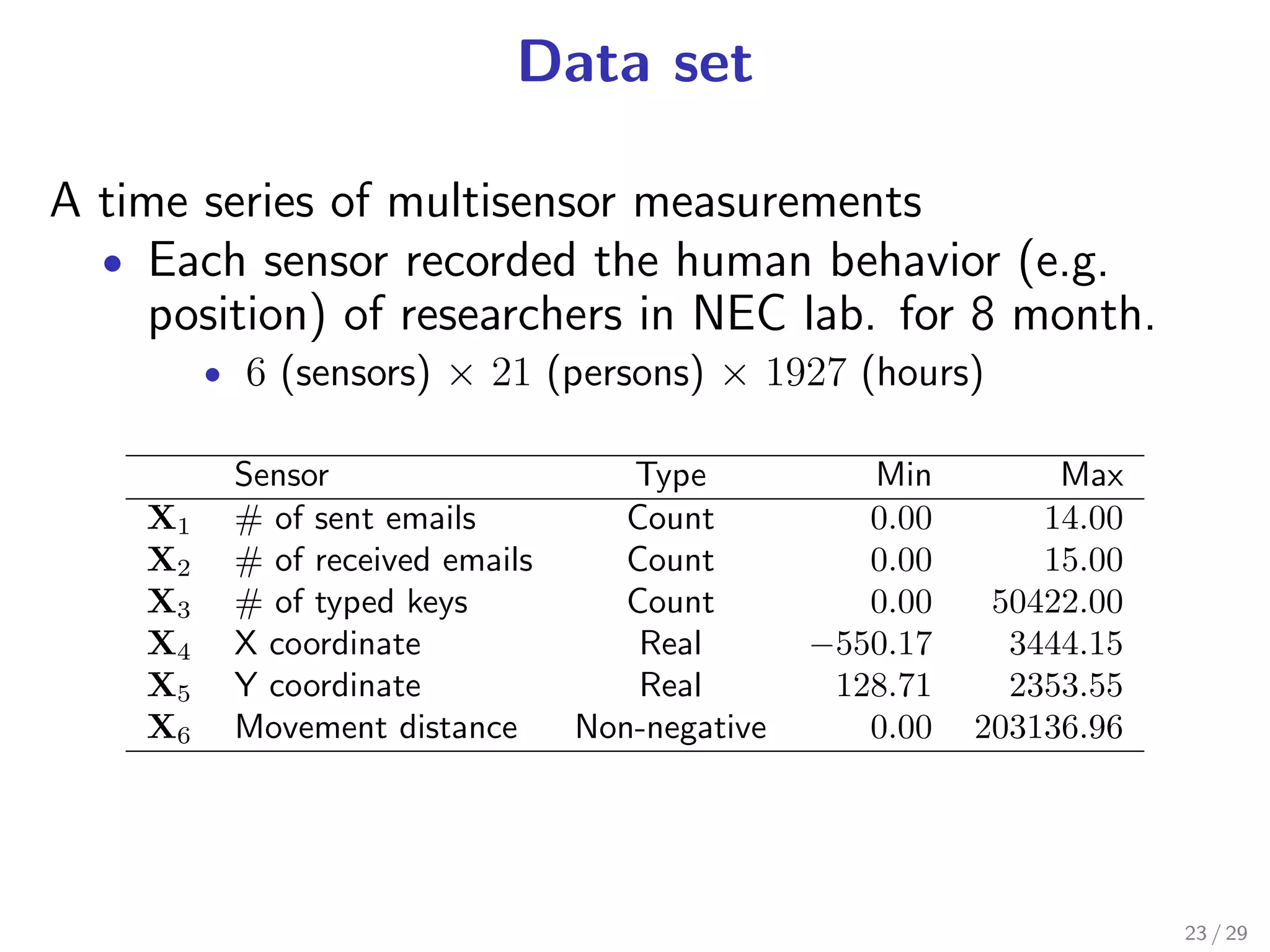

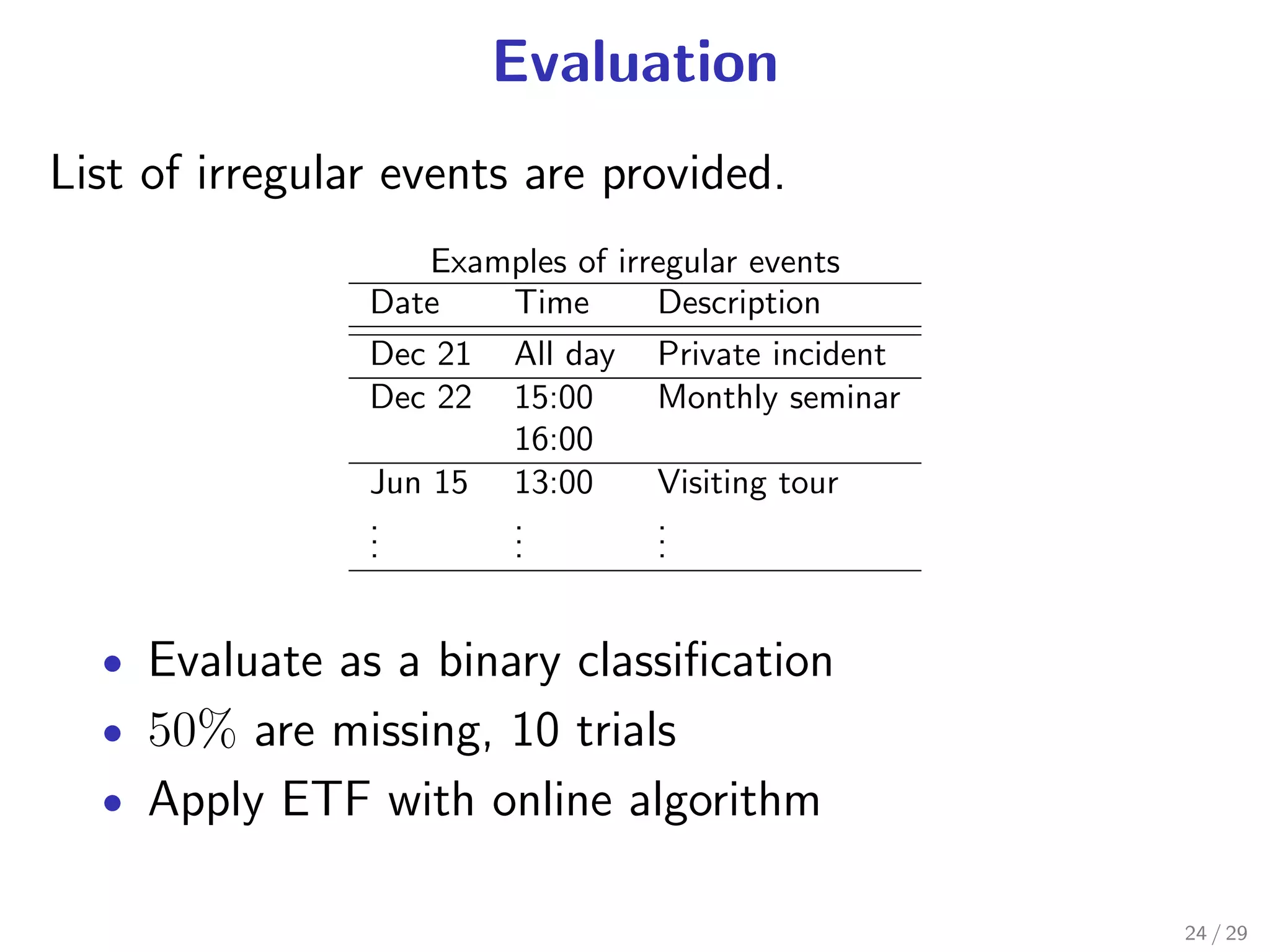

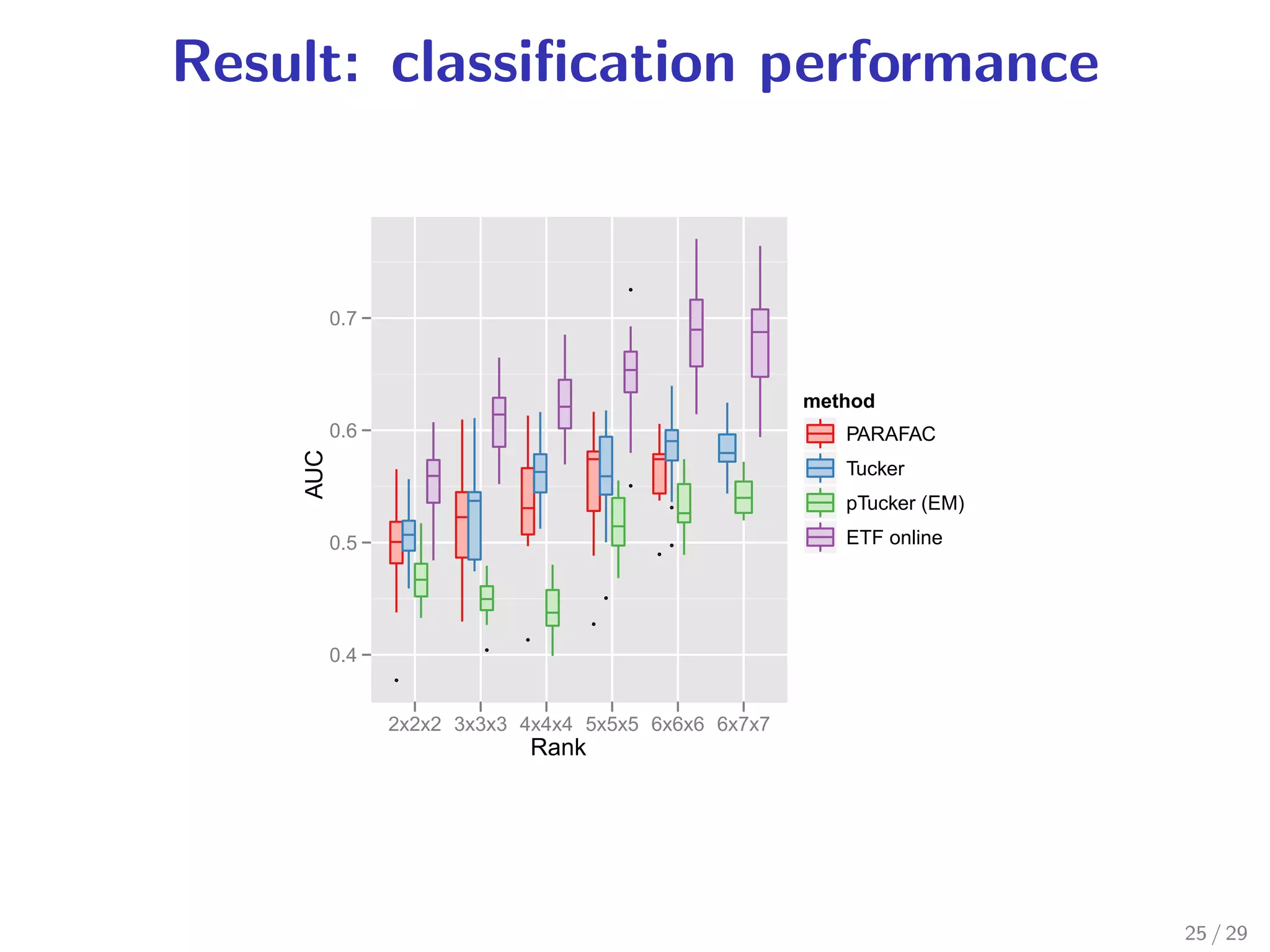

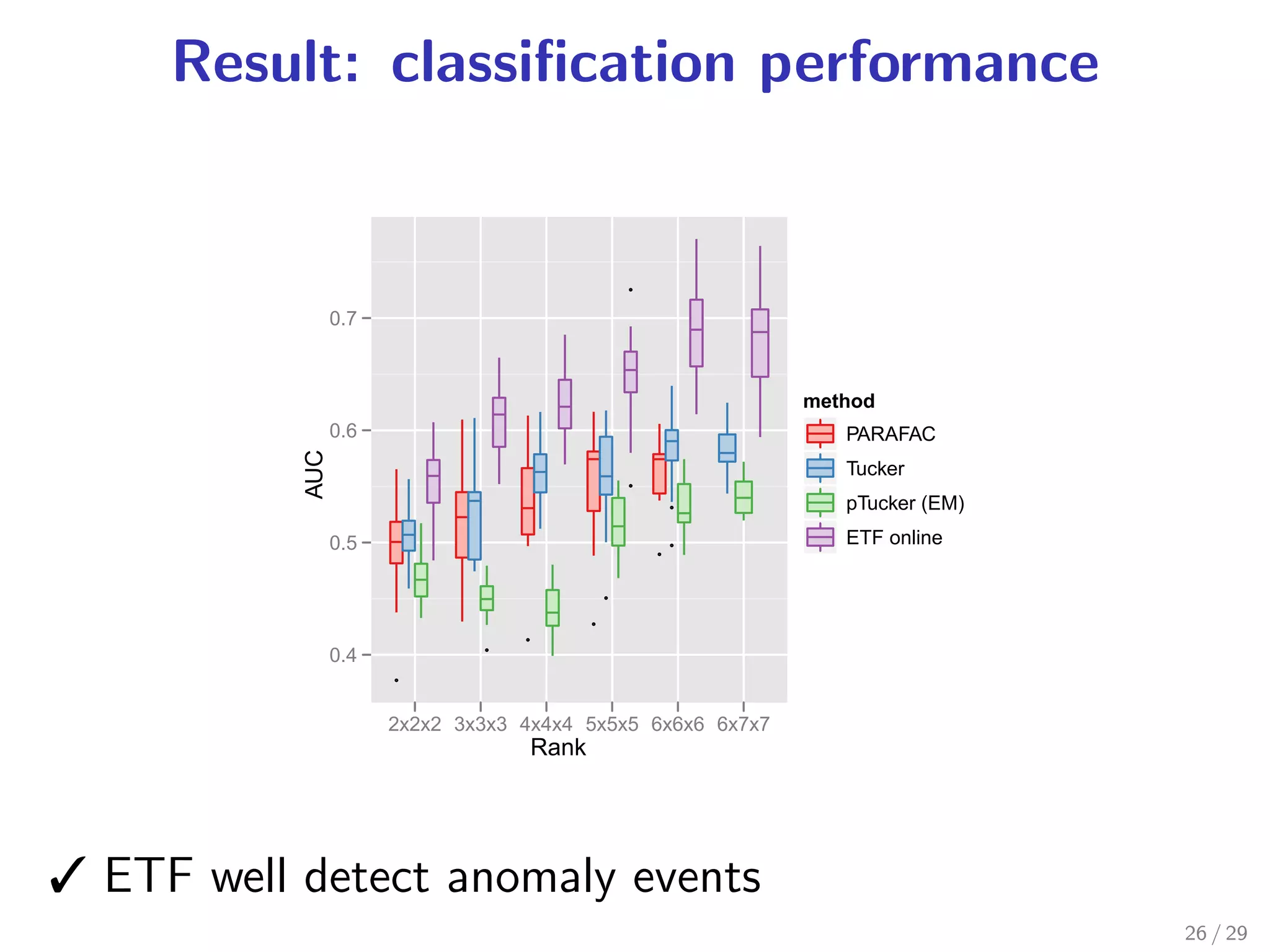

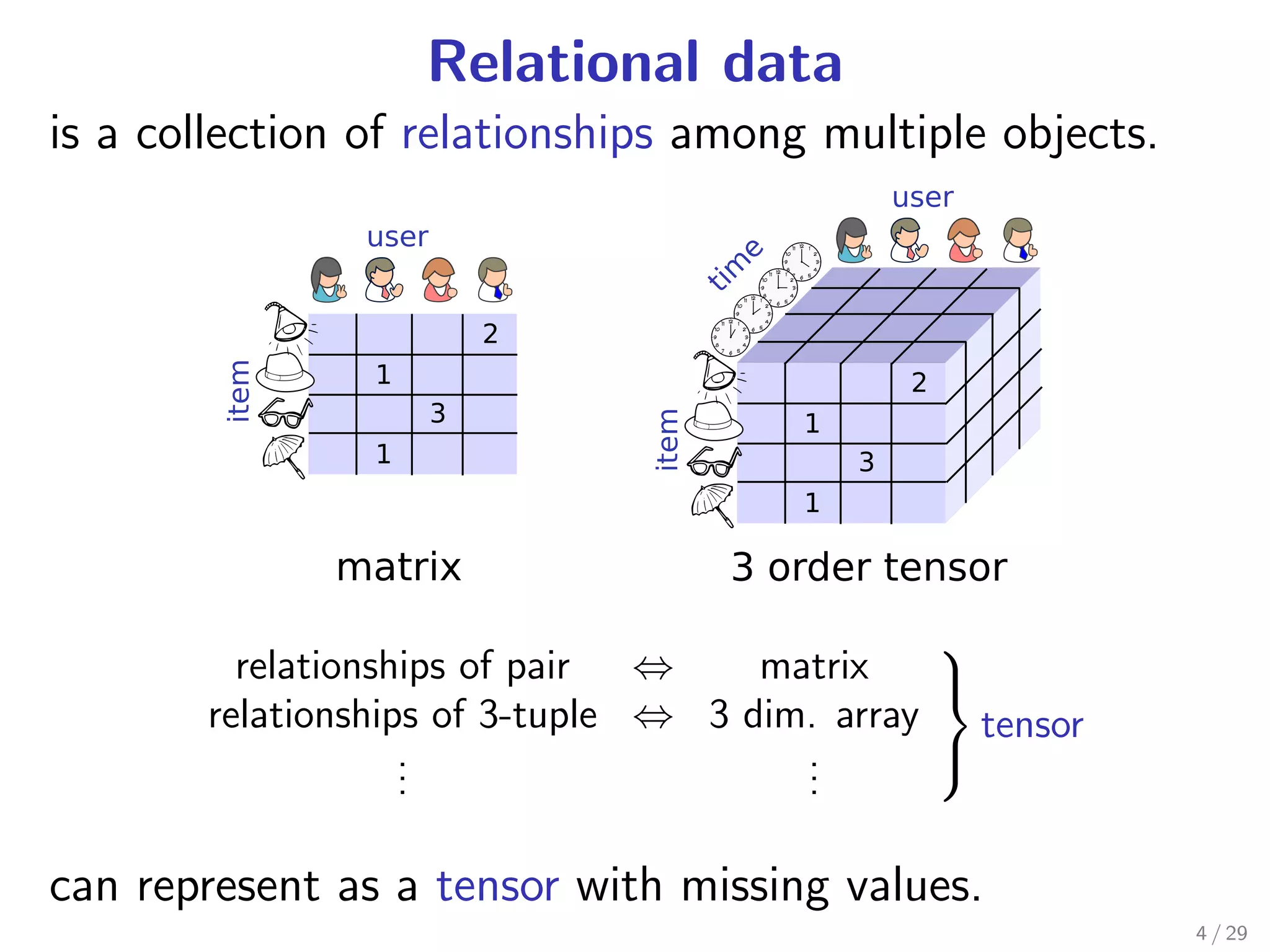

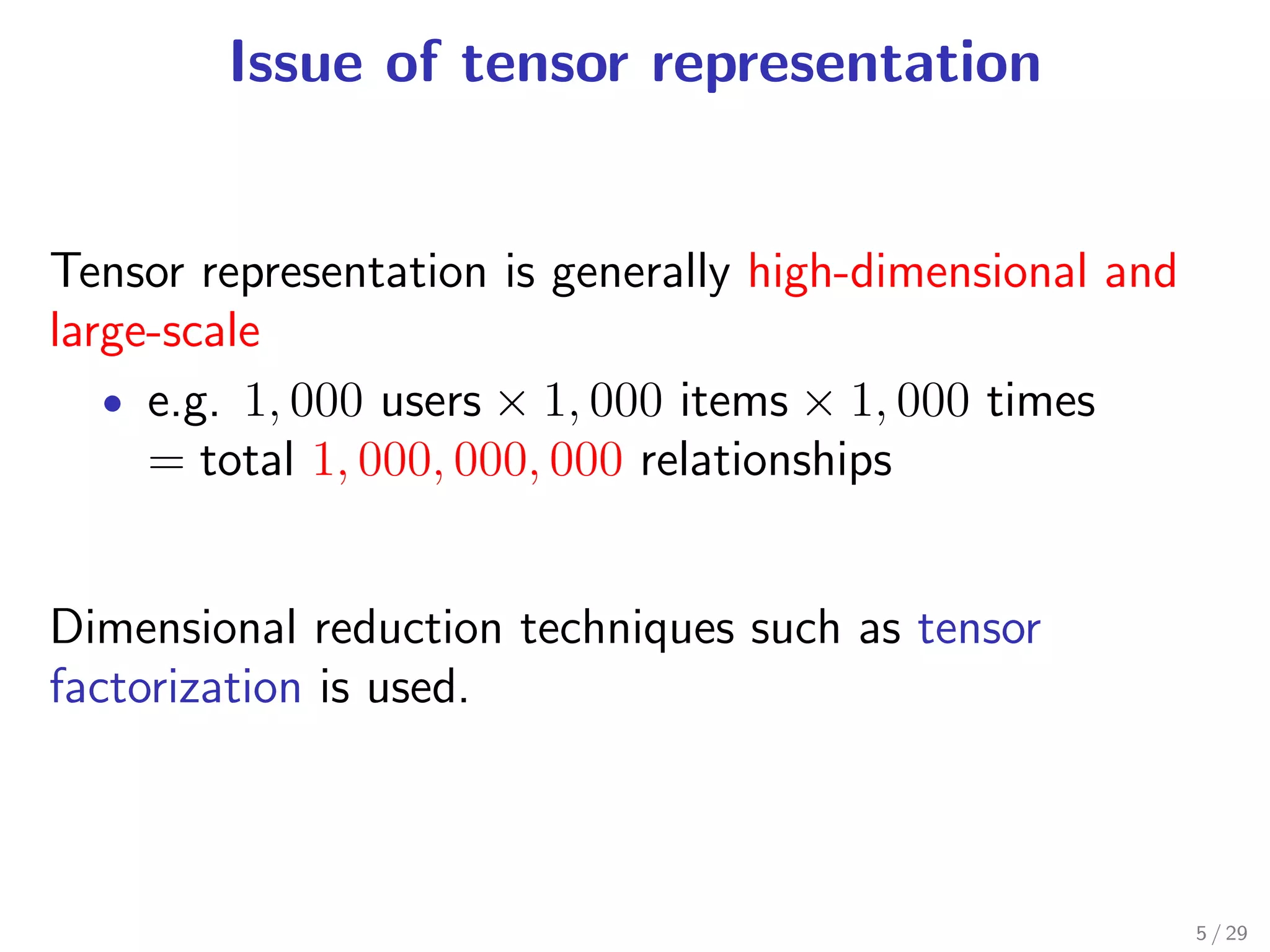

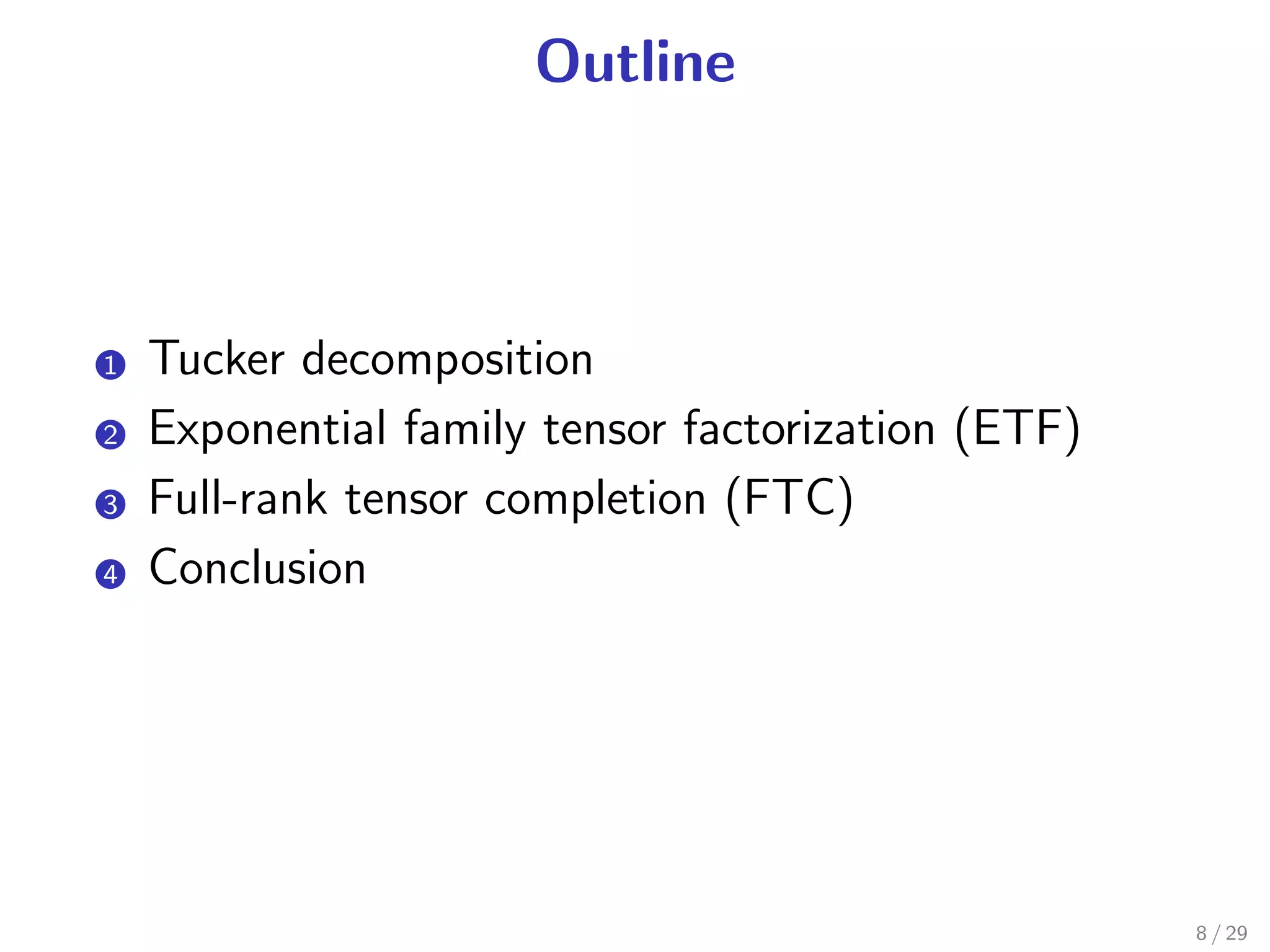

This document presents two tensor factorization methods: Exponential Family Tensor Factorization (ETF) and Full-Rank Tensor Completion (FTC). ETF generalizes Tucker decomposition by allowing for different noise distributions in the tensor and handles mixed discrete and continuous values. FTC completes missing tensor values without reducing dimensionality by kernelizing Tucker decomposition. The document outlines these methods and their motivations, discusses Tucker decomposition, and provides an example applying ETF to anomaly detection in time series sensor data.

![Tensor factorization

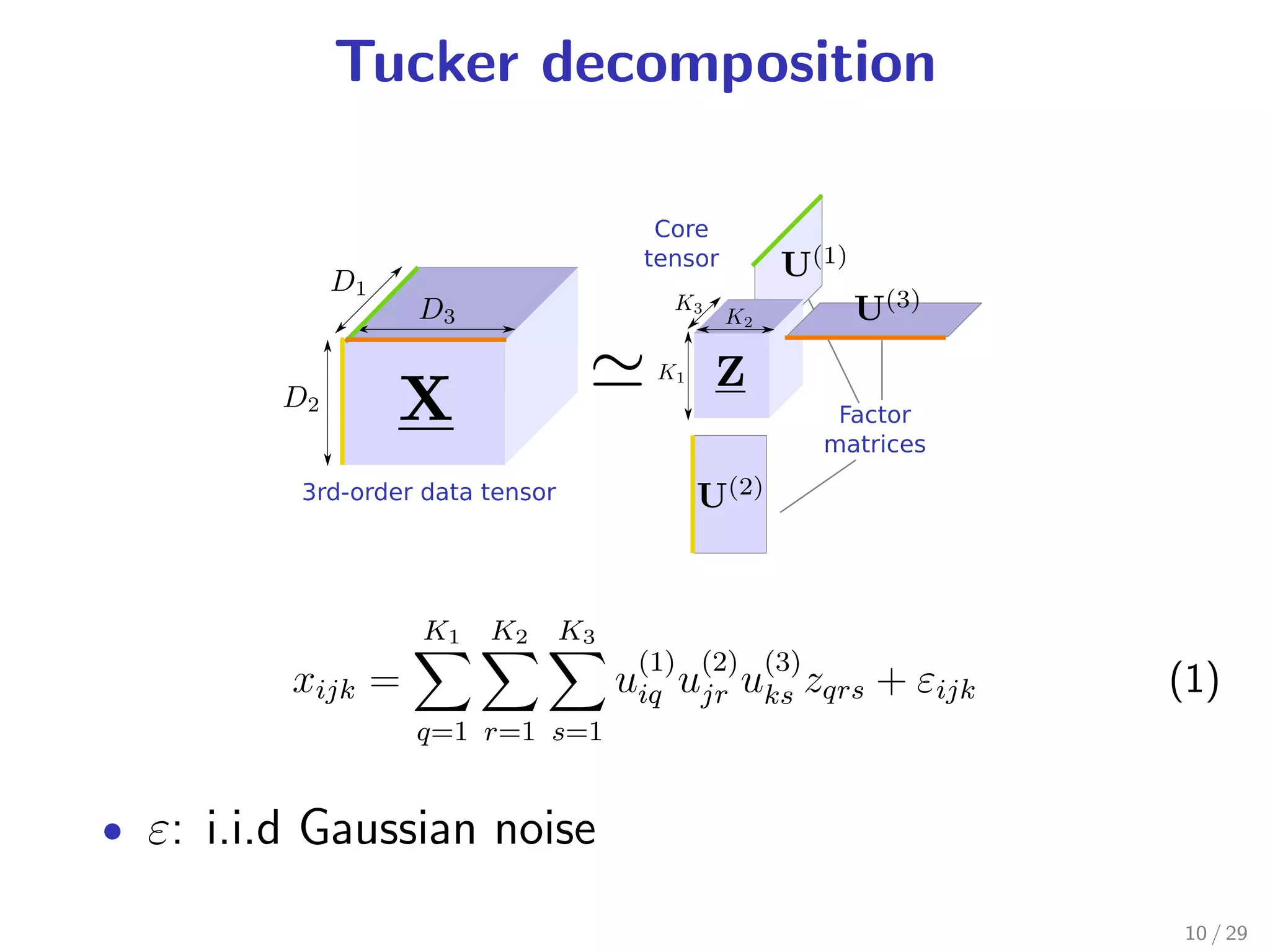

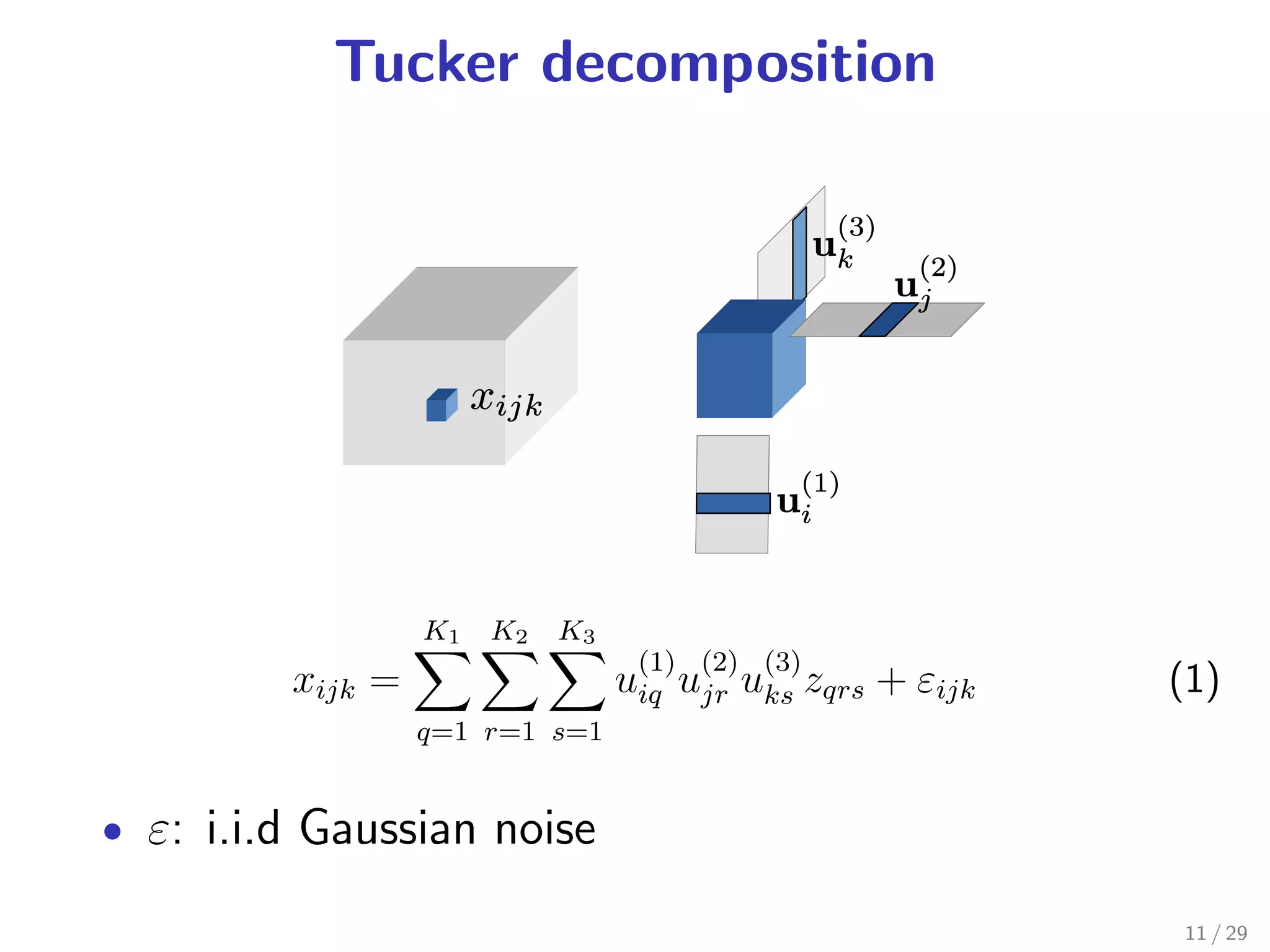

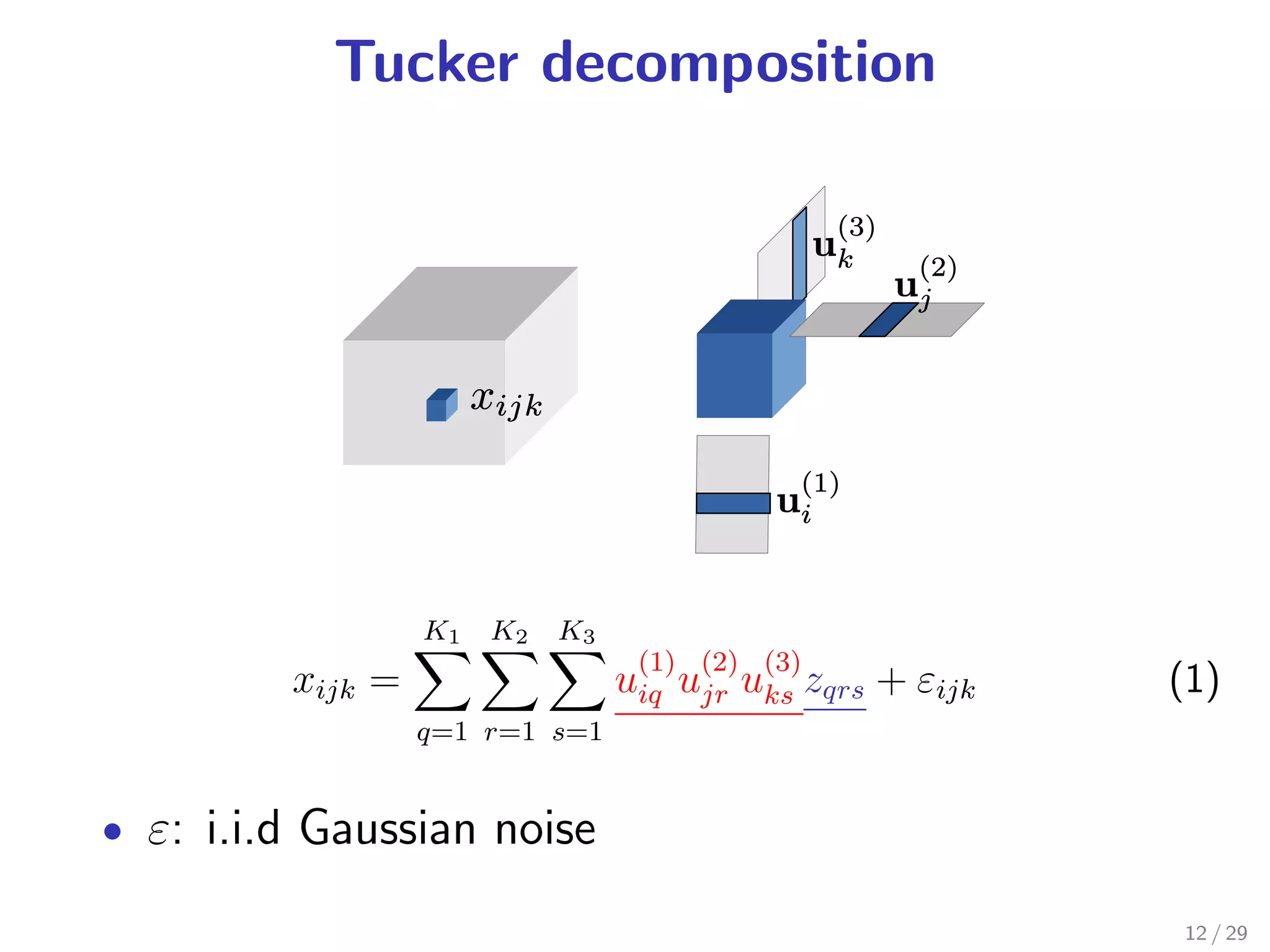

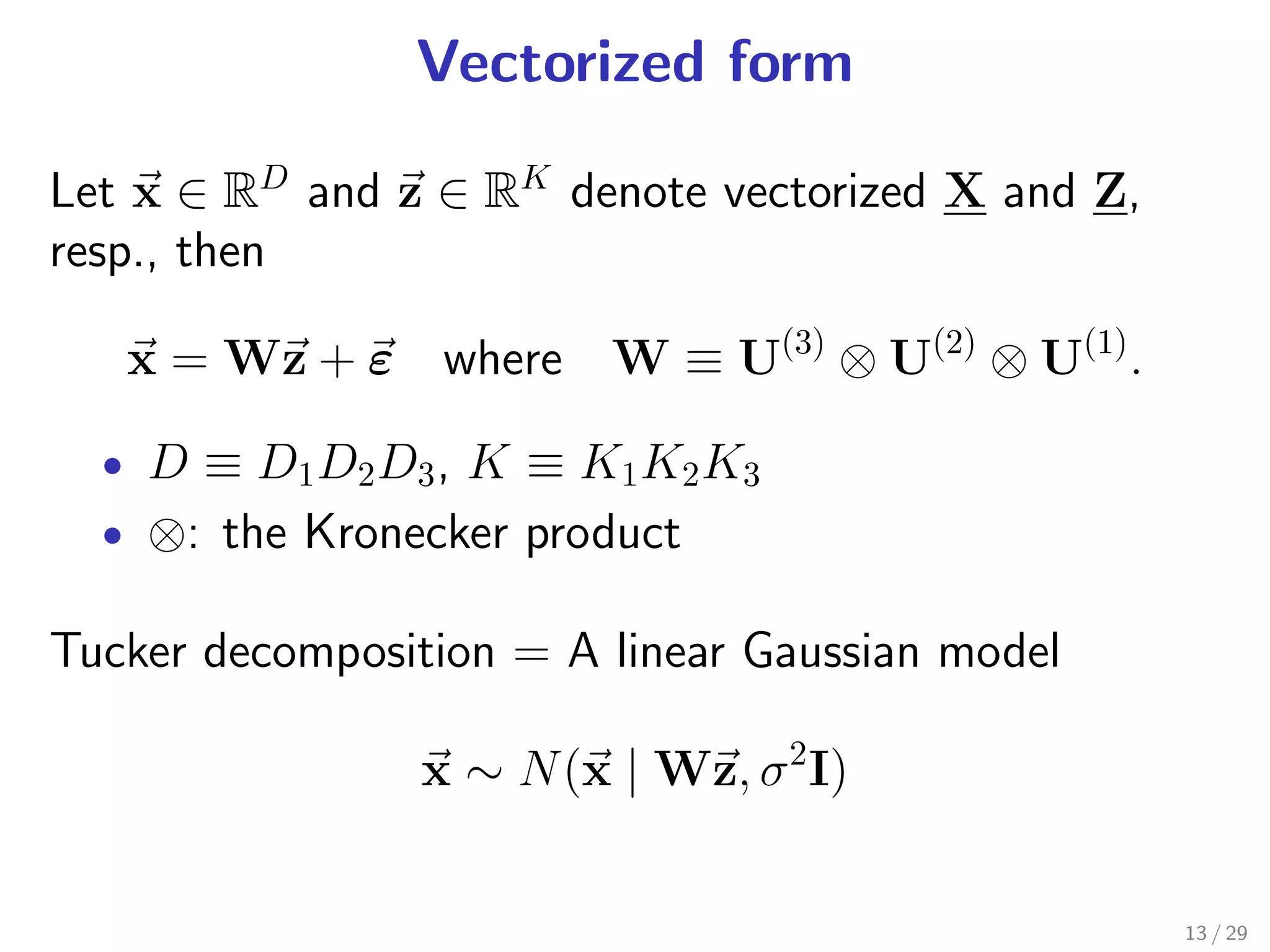

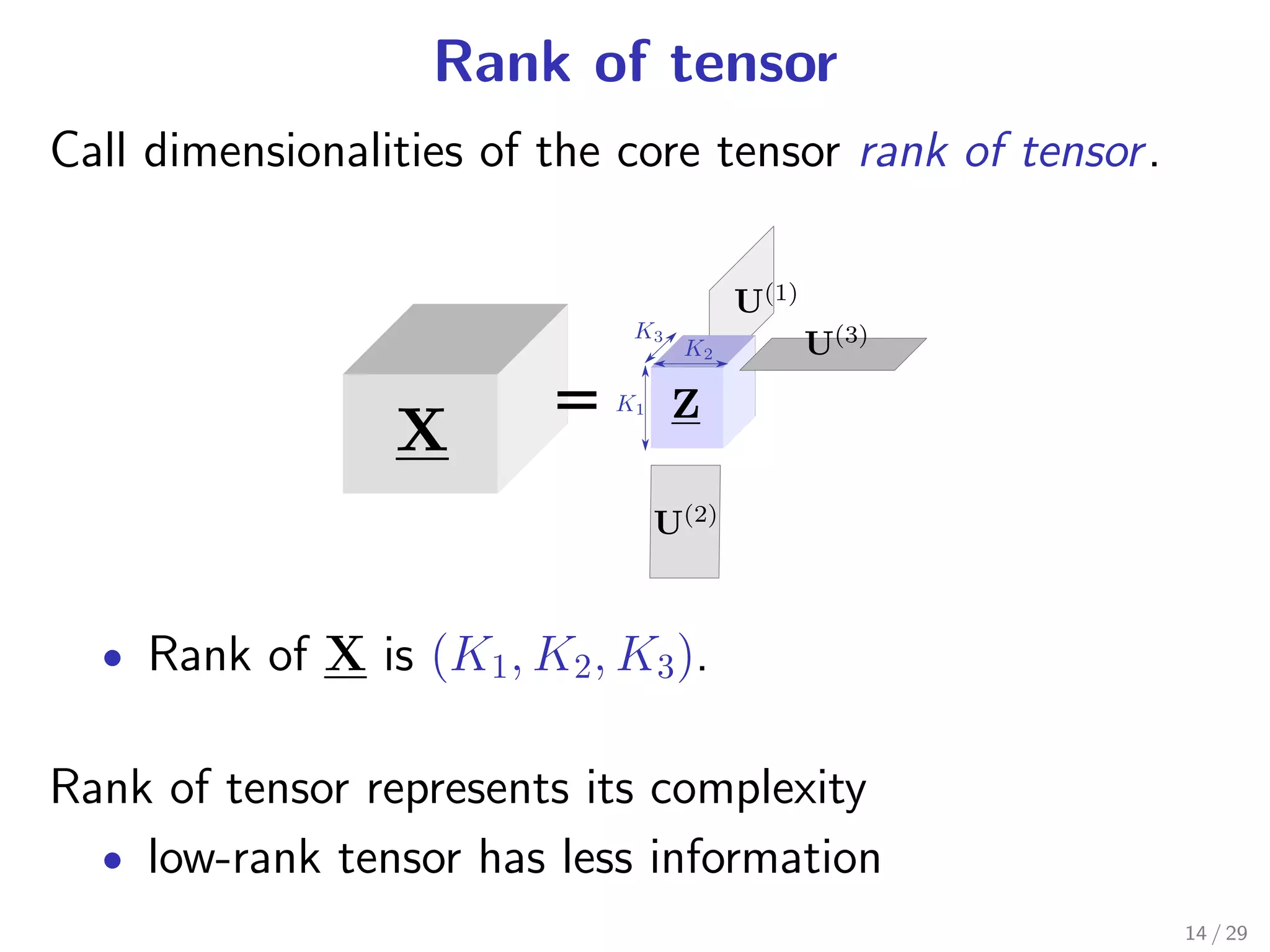

Tucker decomposition [Tucker 1966]: a tensor factorization method assuming

1. observation noise is Gaussian.

2. underlying tensor is low-dimensional.

These assumptions are general ... but are not always true.

6 / 29](https://image.slidesharecdn.com/short-120518210704-phpapp01/75/Generalization-of-Tensor-Factorization-and-Applications-6-2048.jpg)
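To make the two assumptions concrete, here is a minimal NumPy sketch of a Tucker decomposition computed by truncated higher-order SVD (HOSVD). HOSVD is one common way to fit a Tucker model and is swapped in here for illustration; the slides do not say which algorithm is used. The helper names (`unfold`, `mode_product`, `hosvd`, `reconstruct`) and the synthetic data are assumptions.

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: bring axis `mode` to the front, flatten the rest."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def mode_product(tensor, matrix, mode):
    """Multiply `tensor` by `matrix` (shape J x I_mode) along axis `mode`."""
    moved = np.moveaxis(tensor, mode, -1)       # (..., I_mode)
    return np.moveaxis(moved @ matrix.T, -1, mode)

def hosvd(tensor, ranks):
    """Truncated HOSVD: returns a core tensor and one factor matrix per mode."""
    factors = []
    for mode, r in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :r])                # leading r left singular vectors
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)    # project onto each subspace
    return core, factors

def reconstruct(core, factors):
    """Rebuild the full tensor from the core and factor matrices."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out

# Hypothetical data: a low-dimensional tensor observed with Gaussian noise.
rng = np.random.default_rng(0)
true_core = rng.normal(size=(2, 3, 2))
true_factors = [rng.normal(size=(n, r)) for n, r in [(10, 2), (8, 3), (6, 2)]]
clean = reconstruct(true_core, true_factors)
noisy = clean + 0.01 * rng.normal(size=clean.shape)

core, factors = hosvd(noisy, ranks=(2, 3, 2))
rel_err = np.linalg.norm(reconstruct(core, factors) - clean) / np.linalg.norm(clean)
print(f"relative reconstruction error: {rel_err:.4f}")
```

When the data really are low-dimensional with Gaussian noise, as in this synthetic case, the reconstruction error stays small. The models on the following slides target the cases where one of the two assumptions fails.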

![Contributions

Generalize Tucker decomposition and propose two new models:

1. Exponential family tensor factorization (ETF)

[Joint work with Takenouchi, Shibata, Kamiya, Kunieda, Yamada, and Ikeda]

• Generalize the noise distribution.

• Can handle a tensor containing mixed discrete and continuous values.

2. Full-rank tensor completion (FTC)

[Joint work with Tomioka and Kashima]

• Kernelize Tucker decomposition.

• Complete missing values without reducing the dimensionality.

7 / 29](https://image.slidesharecdn.com/short-120518210704-phpapp01/75/Generalization-of-Tensor-Factorization-and-Applications-7-2048.jpg)

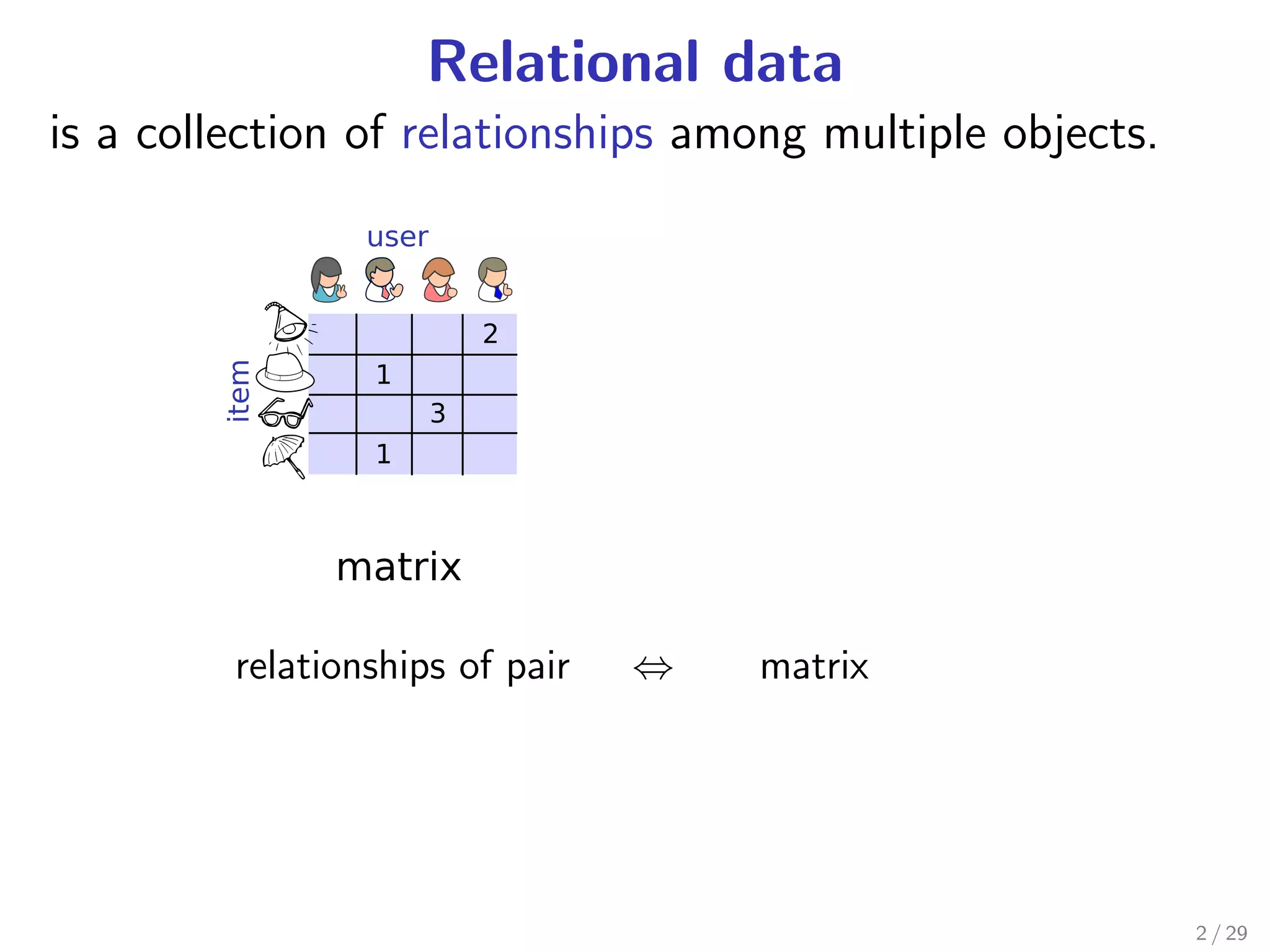

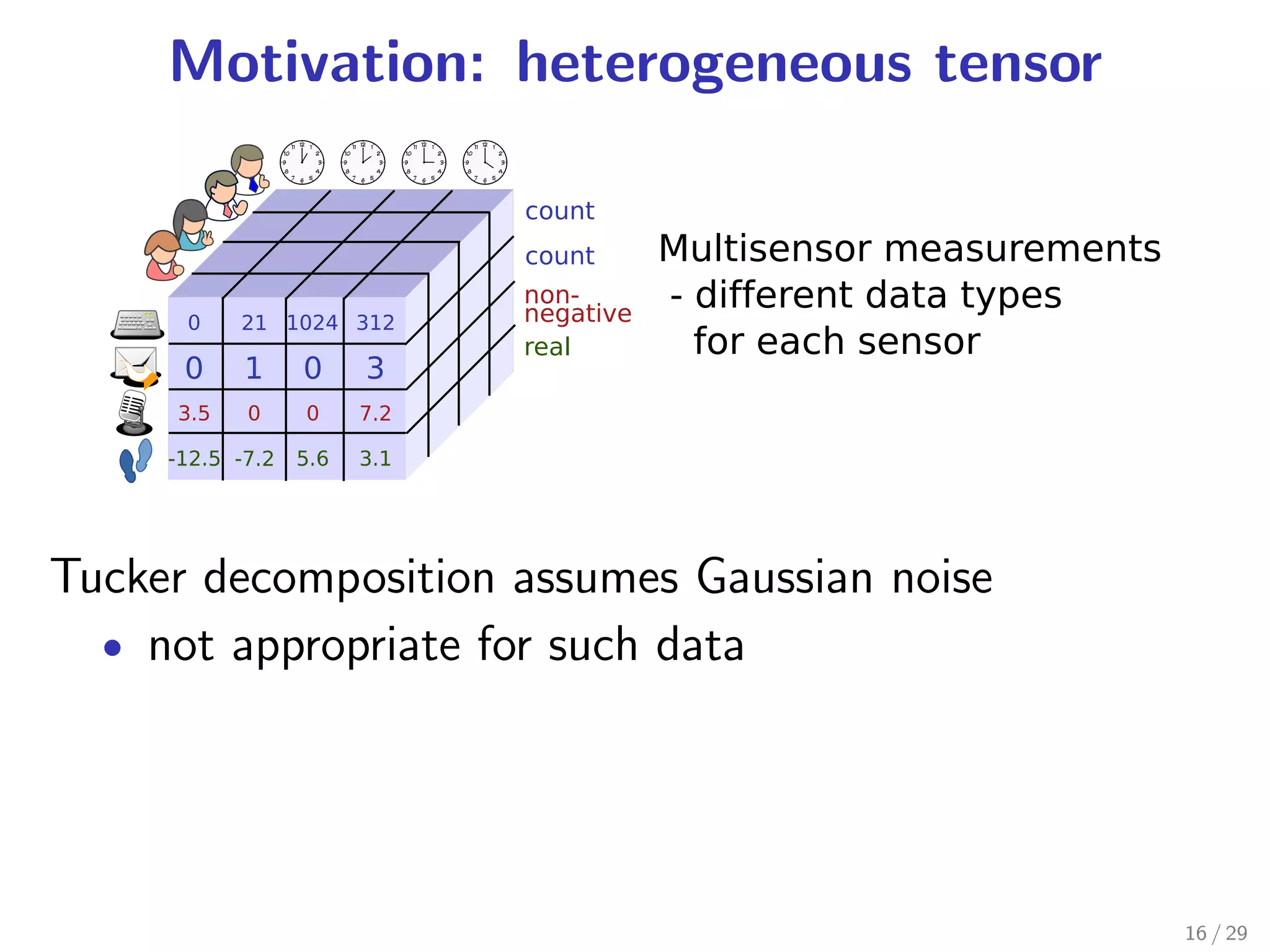

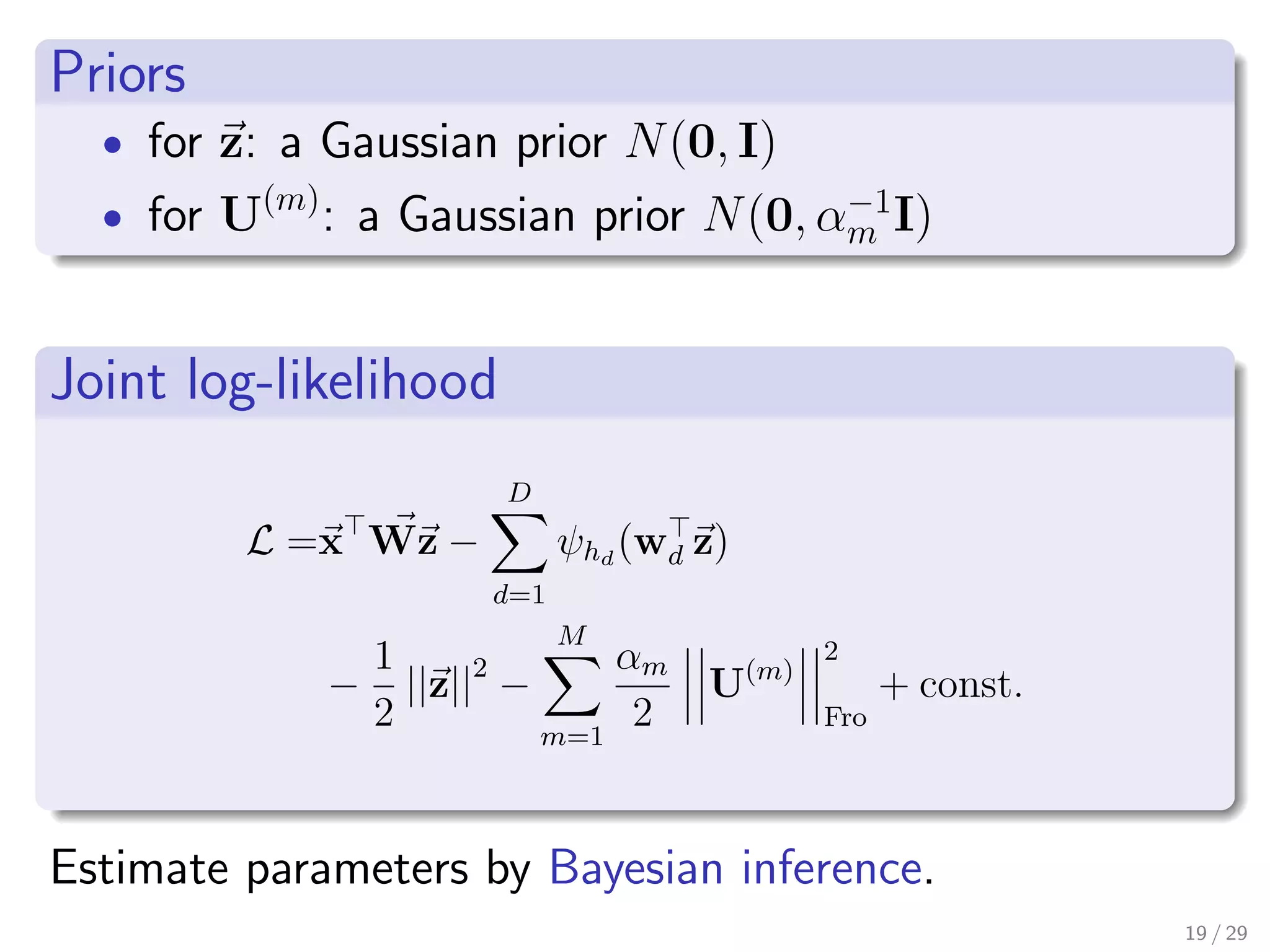

![Exponential family tensor factorization

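Likelihood

$$x \sim \prod_{d=1}^{D} \underbrace{\mathrm{Expon}_d(x_d \mid \theta_d)}_{\text{exp. family}}, \qquad \underbrace{\theta \equiv Wz}_{\text{Tucker decomp.}}$$

where $\mathrm{Expon}(x \mid \theta) \equiv \exp[x\theta - \psi(\theta) + F(x)]$

Exponential family: a class of distributions

• Gaussian: $\psi(\theta) = \theta^2/2$

• Poisson: $\psi(\theta) = \exp[\theta]$

• Bernoulli: $\psi(\theta) = \ln(1 + \exp[\theta])$

Figure: density plots over x for Gaussian, Poisson, and Bernoulli (annotated θ = 0).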

18 / 29](https://image.slidesharecdn.com/short-120518210704-phpapp01/75/Generalization-of-Tensor-Factorization-and-Applications-18-2048.jpg)
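The exponential-family form above can be checked numerically. The sketch below evaluates $\exp[x\theta - \psi(\theta) + F(x)]$ for the three distributions on the slide. The $\psi$ functions come from the slide; the base-measure terms $F(x)$ are not given there, so the standard forms used here (unit-variance Gaussian, Poisson with $-\ln x!$, Bernoulli with zero) are assumptions, as are the function names.

```python
import math

# Log-partition functions psi(theta), as listed on the slide.
PSI = {
    "gaussian": lambda t: 0.5 * t * t,
    "poisson": lambda t: math.exp(t),
    "bernoulli": lambda t: math.log1p(math.exp(t)),
}

# Base-measure terms F(x): assumed standard forms, not stated on the slide.
F = {
    "gaussian": lambda x: -0.5 * x * x - 0.5 * math.log(2 * math.pi),
    "poisson": lambda x: -math.lgamma(x + 1),   # -ln(x!)
    "bernoulli": lambda x: 0.0,
}

def expon_logpdf(x, theta, family):
    """ln Expon(x | theta) = x*theta - psi(theta) + F(x)."""
    return x * theta - PSI[family](theta) + F[family](x)

def mixed_loglik(xs, thetas, families):
    """Log-likelihood of a vector whose elements follow different families."""
    return sum(expon_logpdf(x, t, f) for x, t, f in zip(xs, thetas, families))

# Densities at theta = 0: N(0, 1) at x = 0, Poisson(1) at x = 0, Bernoulli(0.5) at x = 1.
for family, x in [("gaussian", 0.0), ("poisson", 0), ("bernoulli", 1)]:
    print(family, math.exp(expon_logpdf(x, 0.0, family)))

# A mixed observation: one continuous, one count, one binary element.
print(mixed_loglik([0.3, 2, 1], [0.1, 0.5, -0.2],
                   ["gaussian", "poisson", "bernoulli"]))
```

Because every element shares the same functional form and differs only in $\psi$ and $F$, a tensor with mixed discrete and continuous entries can be scored with a single likelihood, which is the property ETF relies on.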

![Bayesian inference

Marginal-MAP estimator:

$$\operatorname*{argmax}_{U^{(1)},\dots,U^{(M)}} \int \exp[L(z, U^{(1)},\dots,U^{(M)})]\,dz$$

• The integral is not analytical.

Develop an efficient yet accurate approximation with Laplace approximation and Gaussian process.

• Computational cost is still higher than Tucker decomp.

• For a long and thin tensor (e.g. time series), an online algorithm is applicable (see thesis).

20 / 29](https://image.slidesharecdn.com/short-120518210704-phpapp01/75/Generalization-of-Tensor-Factorization-and-Applications-20-2048.jpg)
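As a rough illustration of the Laplace step only, the sketch below approximates $\int \exp[L(z)]\,dz$ for a scalar $z$ and compares it with brute-force quadrature. This is a toy under stated assumptions, not the method from the slides: the data `w` and `x` are hypothetical, the likelihood is Poisson with $\theta_i = w_i z$, the prior on $z$ is assumed standard normal, constants independent of $z$ are dropped, and the Gaussian process component is not modelled.

```python
import numpy as np

# Hypothetical toy data: Poisson counts x_i with natural parameters theta_i = w_i * z.
w = np.array([0.5, -0.3, 0.8])
x = np.array([2.0, 1.0, 3.0])

def log_joint(z):
    """L(z): Poisson log-likelihood plus N(0, 1) log-prior, up to constants."""
    z = np.asarray(z, dtype=float)
    theta = np.multiply.outer(z, w)              # shape (..., 3)
    return np.sum(x * theta - np.exp(theta), axis=-1) - 0.5 * z**2

def grad(z):
    """First derivative of L at scalar z."""
    return np.sum(w * (x - np.exp(w * z))) - z

def hess(z):
    """Second derivative of L at scalar z (always negative here)."""
    return -np.sum(w**2 * np.exp(w * z)) - 1.0

def laplace_integral(z0=0.0, iters=50):
    """Find the mode by Newton's method, then integrate the fitted Gaussian."""
    z = z0
    for _ in range(iters):
        z = z - grad(z) / hess(z)
    value = np.exp(log_joint(z)) * np.sqrt(2 * np.pi / -hess(z))
    return float(value), float(z)

approx, z_hat = laplace_integral()

# Reference value: Riemann sum on a fine grid (feasible only in low dimension).
grid = np.linspace(-10.0, 10.0, 20001)
numeric = float(np.sum(np.exp(log_joint(grid))) * (grid[1] - grid[0]))

print(f"mode z_hat = {z_hat:.4f}")
print(f"Laplace = {approx:.5f}, quadrature = {numeric:.5f}")
```

The Laplace approximation replaces the integrand by a Gaussian centred at its mode, so it needs only the mode and the curvature there. That is what makes it usable when $z$ is high-dimensional and grid quadrature is not.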

![Anomaly detection by ETF

Purpose: Find irregular parts of tensor data

Method: Apply distance-based outlier DB(p, D) [Knorr+ VLDB’00] to the estimated factor matrix $U^{(m)}$.

Definition of DB(p, D)

“An object O is a DB(p, D) outlier if at least fraction p of the objects lies at a distance greater than D from O.”

22 / 29](https://image.slidesharecdn.com/short-120518210704-phpapp01/75/Generalization-of-Tensor-Factorization-and-Applications-22-2048.jpg)
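The DB(p, D) definition translates directly into a pairwise-distance check over the rows of a factor matrix. The sketch below is a brute-force version under two assumptions: distance is Euclidean (the definition allows any distance function), and the fraction is taken over all objects including O itself. The function name `db_outliers` and the synthetic factor matrix are hypothetical.

```python
import numpy as np

def db_outliers(U, p, D):
    """Return a boolean mask marking rows of U that are DB(p, D) outliers.

    Row i is an outlier if at least a fraction p of all rows lie at
    Euclidean distance greater than D from it.
    """
    diffs = U[:, None, :] - U[None, :, :]        # pairwise differences
    dist = np.sqrt((diffs ** 2).sum(axis=-1))    # pairwise distance matrix
    frac_far = (dist > D).sum(axis=1) / len(U)   # a row is never far from itself
    return frac_far >= p

# Hypothetical factor matrix: 20 regular rows near the origin, one irregular row.
rng = np.random.default_rng(0)
regular = 0.1 * rng.normal(size=(20, 2))
irregular = np.array([[10.0, 10.0]])
U = np.vstack([regular, irregular])

flags = db_outliers(U, p=0.9, D=5.0)
print(np.flatnonzero(flags))   # indices of rows flagged as outliers
```

For a time-series tensor, each row of the factor matrix for the time mode corresponds to one time step, so flagged rows point to candidate anomalous periods. The O(N²) distance matrix is fine for a sketch but would need an index structure or sampling for long series.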