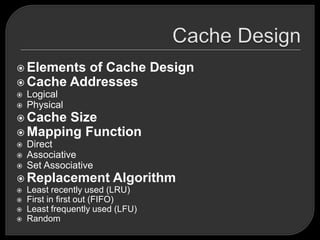

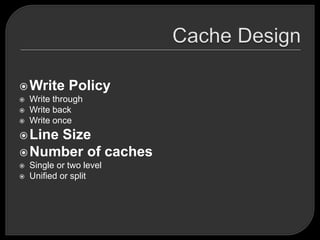

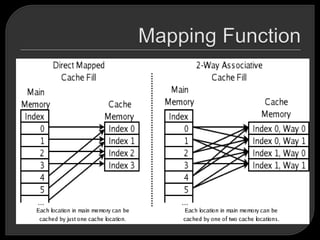

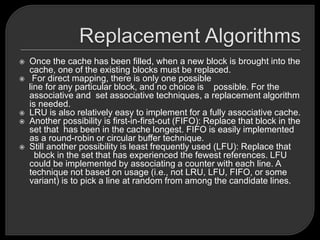

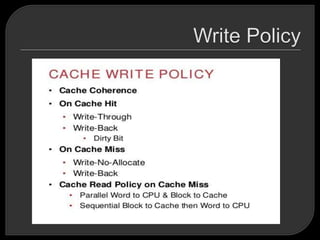

Caches are widely used in high-performance computing to reduce effective memory access time. However, high-performance computing applications often perform poorly on cache-based architectures unless the software is specifically tuned to exploit the caches, for example by improving data locality. Several basic design elements differentiate cache architectures: cache size, mapping function, replacement algorithm, write policy, line size, and number of cache levels. The replacement algorithm determines which block of data to evict from the cache when a new block must be brought in and no free line is available.
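To make the role of the replacement algorithm concrete, here is a minimal sketch of one common policy, least recently used (LRU), applied to a toy fully associative cache. The text does not name a specific policy, so the choice of LRU is an assumption for illustration, and the names `LRUCache`, `access`, and `simulate` are hypothetical. Real hardware typically uses set-associative mapping and approximations of LRU.

```python
from collections import OrderedDict


class LRUCache:
    """Toy fully associative cache with LRU replacement (illustrative only)."""

    def __init__(self, num_lines):
        self.num_lines = num_lines   # cache size, measured in lines (blocks)
        self.lines = OrderedDict()   # block number -> None, least recent first
        self.hits = 0
        self.misses = 0

    def access(self, block):
        """Touch a block; return True on a hit, False on a miss."""
        if block in self.lines:
            # Hit: mark the block as most recently used.
            self.lines.move_to_end(block)
            self.hits += 1
            return True
        # Miss: if the cache is full, evict the least recently used block.
        self.misses += 1
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)
        self.lines[block] = None
        return False


def simulate(trace, num_lines):
    """Run a sequence of block numbers through the cache; return (hits, misses)."""
    cache = LRUCache(num_lines)
    for block in trace:
        cache.access(block)
    return cache.hits, cache.misses
```

For example, `simulate([1, 2, 3, 1, 4, 2], 3)` returns `(1, 5)`: the second access to block 1 hits, the access to block 4 evicts block 2 (the least recently used at that point), and so the final access to block 2 misses again.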