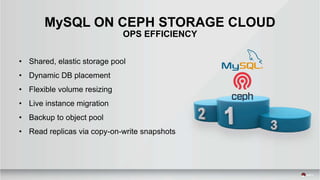

This document summarizes a presentation by Kyle Bader of Red Hat on software-defined storage and on performance testing of MySQL on Red Hat Ceph Storage compared with AWS EBS. Key points:

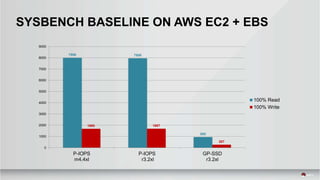

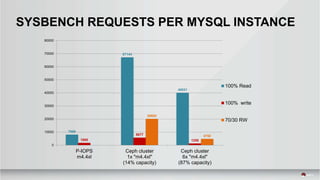

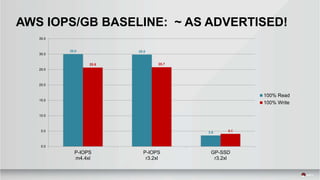

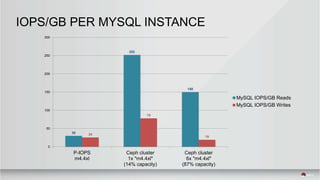

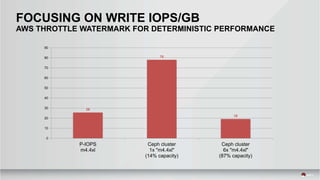

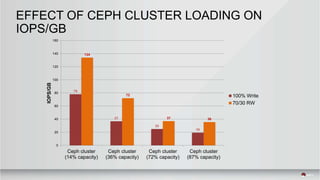

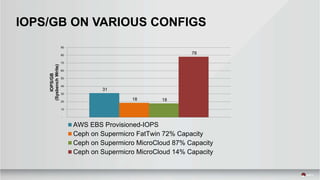

- Performance testing showed that Red Hat Ceph Storage could deliver over 78 IOPS/GB for MySQL workloads, well above the 30 IOPS/GB target of AWS EBS provisioned IOPS.

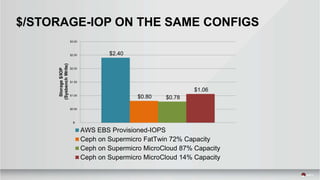

- The price per IOPS of Red Hat Ceph Storage on a Supermicro cluster was $0.78, well below the $2.50 target price per IOPS of AWS EBS provisioned IOPS storage.

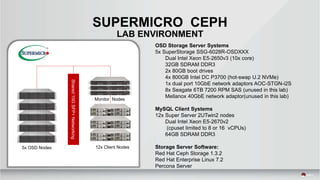

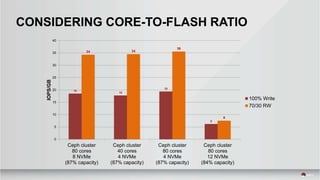

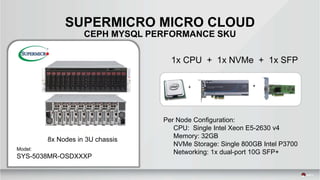

- Hardware configuration, especially the core-to-flash ratio (CPU cores per flash device), affected Ceph Storage performance.
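The headline figures can be put side by side with a little arithmetic. The sketch below is only an illustration: it uses the four numbers quoted above, treats "over 78 IOPS/GB" as a lower bound, and assumes that a volume's IOPS scale linearly with its capacity. The 100 GB volume size and the helper names `iops_for_volume` and `ratio` are hypothetical and do not come from the presentation. The presentation's time basis for price per IOPS is not stated here, so only the ratio of the two prices is computed.

```python
# Figures quoted in the summary; everything else here is a hypothetical illustration.
CEPH_IOPS_PER_GB = 78.0     # Red Hat Ceph Storage, measured ("over 78", so a lower bound)
EBS_PIOPS_PER_GB = 30.0     # AWS EBS provisioned IOPS target
CEPH_PRICE_PER_IOPS = 0.78  # USD, Supermicro cluster
EBS_PRICE_PER_IOPS = 2.50   # USD, EBS provisioned IOPS target


def iops_for_volume(size_gb: float, iops_per_gb: float) -> float:
    """IOPS for a volume, assuming IOPS scale linearly with capacity."""
    return size_gb * iops_per_gb


def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


if __name__ == "__main__":
    size_gb = 100  # hypothetical MySQL volume size
    print(f"Ceph: {iops_for_volume(size_gb, CEPH_IOPS_PER_GB):.0f} IOPS")
    print(f"EBS provisioned IOPS: {iops_for_volume(size_gb, EBS_PIOPS_PER_GB):.0f} IOPS")
    print(f"IOPS density advantage: {ratio(CEPH_IOPS_PER_GB, EBS_PIOPS_PER_GB):.2f}x")
    print(f"Price per IOPS advantage: {ratio(EBS_PRICE_PER_IOPS, CEPH_PRICE_PER_IOPS):.2f}x")
```

For the hypothetical 100 GB volume this prints 7800 IOPS for Ceph against 3000 IOPS for the EBS target, a density advantage of 2.60x, and a price-per-IOPS advantage of about 3.21x.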