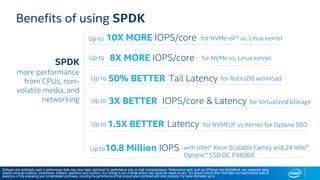

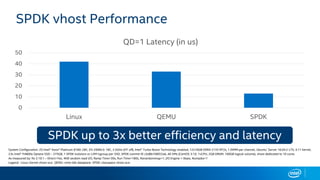

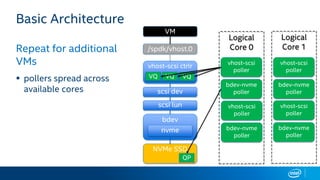

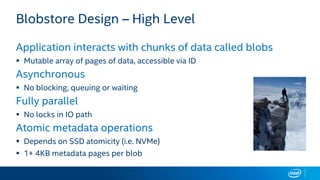

The document covers Intel's storage software products and highlights the performance of its technologies, including the Storage Performance Development Kit (SPDK). It notes that performance varies with system configuration and advises consulting additional benchmarks and sources before drawing conclusions about performance. It also outlines the benefits of using SPDK to maximize IOPS and reduce latency in storage applications.