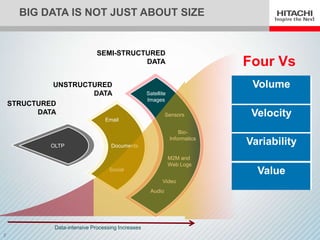

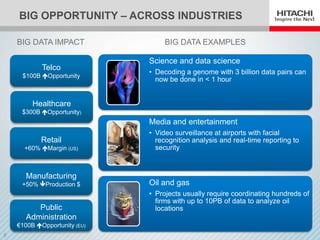

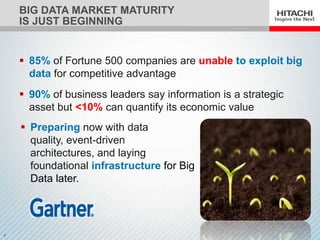

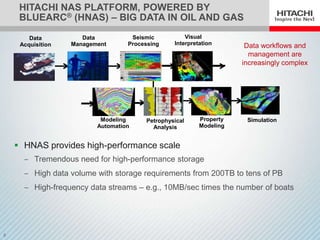

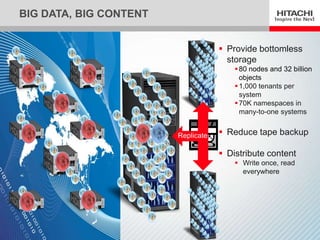

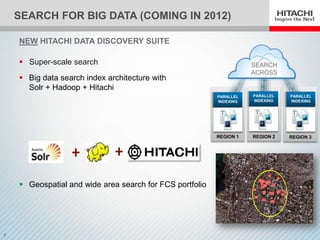

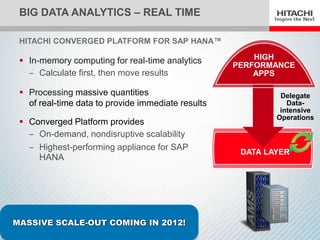

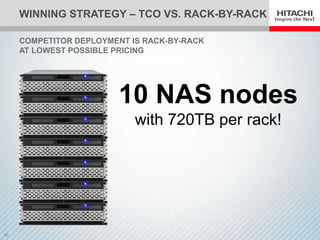

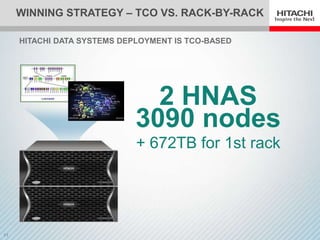

The document discusses the implications and opportunities of big data across industries, noting that many Fortune 500 companies cannot yet fully leverage it for competitive advantage. It gives examples of big data applications in sectors such as healthcare, media, and oil and gas, and outlines the technology needed for data management and analytics. It also introduces Hitachi's solutions for big data storage and analytics, emphasizing the need for high-performance infrastructure.