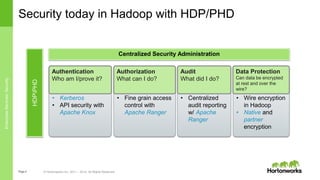

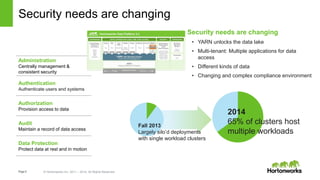

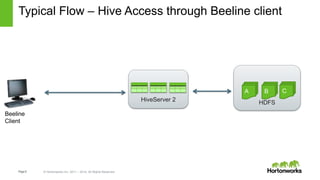

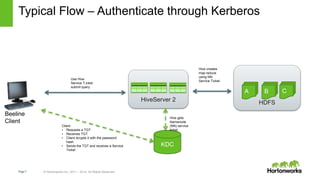

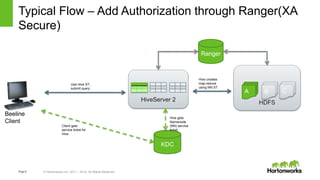

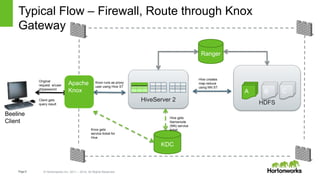

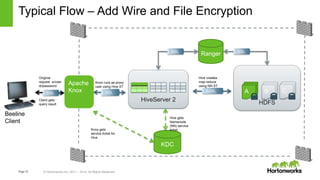

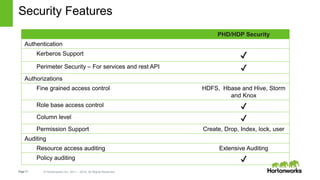

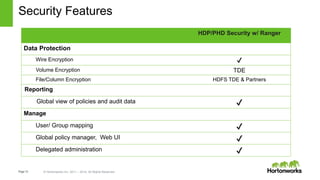

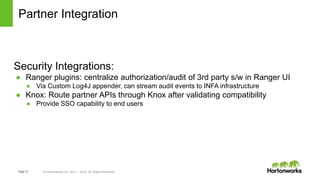

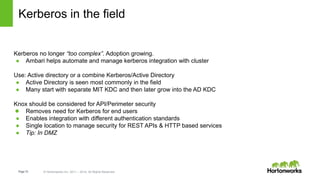

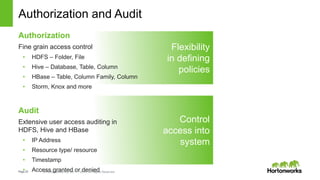

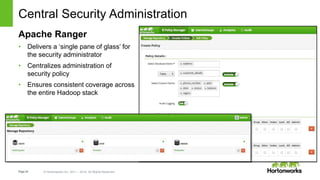

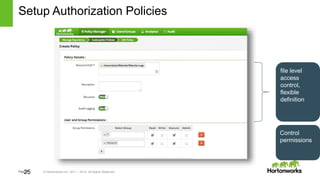

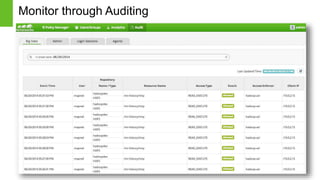

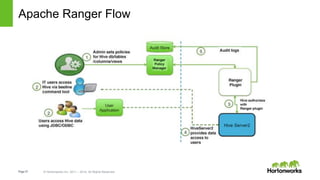

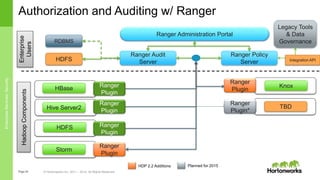

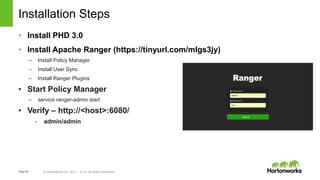

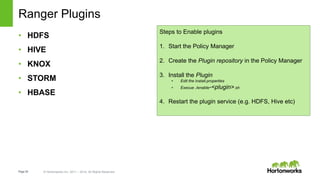

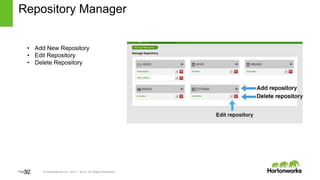

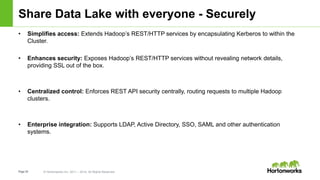

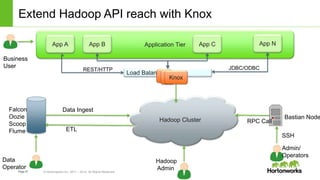

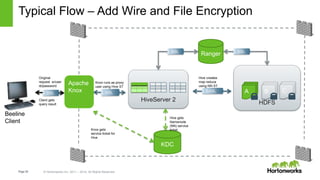

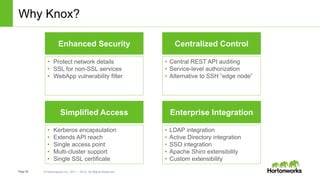

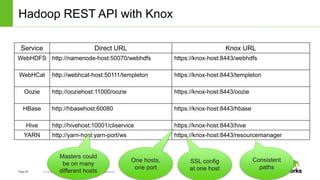

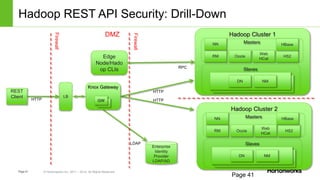

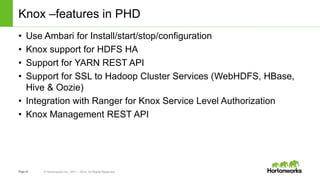

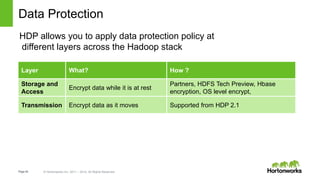

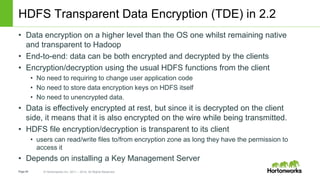

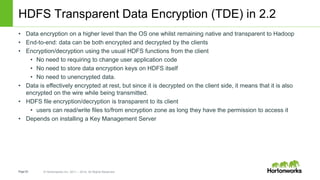

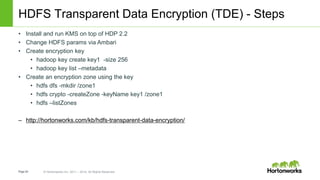

The document discusses security features in Hortonworks Data Platform (HDP) and Pivotal HD. It covers four areas: authentication with Kerberos, authorization and auditing with Apache Ranger, perimeter security with Apache Knox, and data encryption at rest and in transit. It illustrates several security flows, including typical access to Hive through Beeline and the same access with authorization, firewall routing, and encryption added. It also outlines the installation and configuration of Ranger and Knox.
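
To make the Beeline flows more concrete, the following is a minimal sketch of how the HiveServer2 JDBC connection string passed to Beeline typically differs between direct Kerberos access and access routed through a Knox gateway. It is not taken from the slides: the host names, the realm `EXAMPLE.COM`, the Knox topology name `default`, and the helper `hive_jdbc_url` are all hypothetical, and a real deployment would usually also need truststore settings for TLS.

```python
def hive_jdbc_url(host, port, database="default", **session_params):
    """Build a HiveServer2 JDBC URL with ';key=value' session parameters.

    Hypothetical helper for illustration only; it does no validation.
    """
    params = "".join(f";{key}={value}" for key, value in session_params.items())
    return f"jdbc:hive2://{host}:{port}/{database}{params}"


# Direct access inside the cluster, authenticated with Kerberos.
# Host and realm are placeholder values.
kerberos_url = hive_jdbc_url(
    "hs2.example.com",
    10000,
    principal="hive/_HOST@EXAMPLE.COM",
)

# Access from outside the firewall through a Knox gateway over HTTPS.
# The gateway host and the topology name "default" are assumptions.
knox_url = hive_jdbc_url(
    "knox.example.com",
    8443,
    database="",
    ssl="true",
    transportMode="http",
    httpPath="gateway/default/hive",
)

if __name__ == "__main__":
    print(kerberos_url)
    print(knox_url)
```

Either string would be passed to Beeline with `beeline -u "<url>"`. In both cases the Ranger authorization check happens server-side, so it does not appear in the client connection string.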