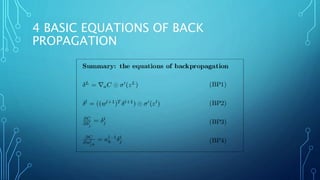

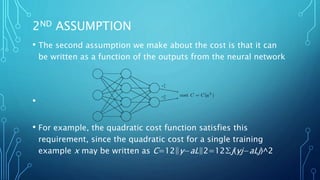

The back propagation method is a key technique for training artificial neural networks, involving a cycle of propagation and weight updates. It calculates partial derivatives of a cost function with respect to weights and biases by using a quadratic cost function and averaging over training examples. The algorithm relies on linear algebra operations, particularly the Hadamard product for elementwise vector multiplication.

![THE HADAMARD PRODUCT

• The back propagation algorithm is based on common linear

algebraic operations - things like vector addition, multiplying a

vector by a matrix, and so on.

• In particular, suppose s and t are two vectors of the same

dimension. Then we use s⊙t to denote the elementwise product

of the two vectors.

• Thus the components of s⊙t are just (s⊙t)j=sjtj. As an example,

[12]⊙[34]=[1∗32∗4]=[38]

• This kind of elementwise multiplication is sometimes called the

Hadamard product](https://image.slidesharecdn.com/backpropagationmethod-170418044200/85/Back-propagation-method-11-320.jpg)