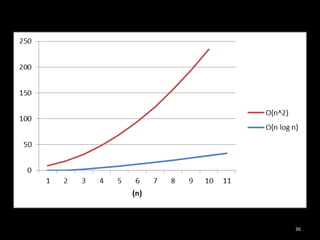

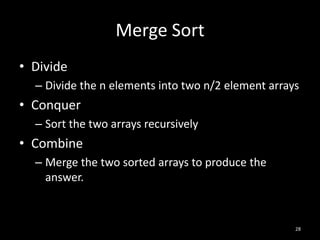

This document summarizes a presentation on algorithm performance and asymptotic analysis. It introduces common analysis techniques such as Big O notation and the master method for solving recurrences, and it analyzes two specific algorithms: insertion sort, which has a quadratic worst-case running time, and merge sort, which achieves a linearithmic (n log n) running time through a divide-and-conquer approach. Overall, the presentation aims to show how asymptotic analysis can provide insight into algorithm optimization.

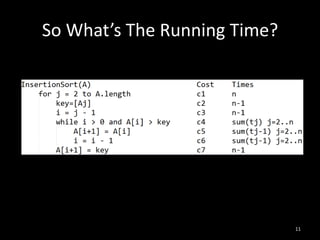

![Analysis of Insertion Sort](https://image.slidesharecdn.com/algorithms-130123054628-phpapp02/85/Algorithms-Rocksolid-Tour-2013-9-320.jpg)

InsertionSort(A)

for j = 2 to A.length

key = A[j]

i = j - 1

while i > 0 and A[i] > key

A[i+1] = A[i]

i = i - 1

A[i+1] = key
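For readers who want to run the procedure, here is a minimal Python sketch of the InsertionSort pseudocode above. It is a translation, not code from the presentation, and it assumes Python's 0-based lists, so the outer loop starts at index 1 and the while condition becomes `i >= 0` instead of `i > 0`.

```python
def insertion_sort(a: list) -> list:
    """Sort `a` in place following the InsertionSort pseudocode
    (shifted from 1-based to 0-based indexing) and return it."""
    for j in range(1, len(a)):          # pseudocode: for j = 2 to A.length
        key = a[j]
        i = j - 1
        # Shift elements of the sorted prefix a[0..j-1] that exceed key one slot right
        while i >= 0 and a[i] > key:    # pseudocode: i > 0 with 1-based indices
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key                  # drop key into the gap left by the shifts
    return a


print(insertion_sort([5, 2, 4, 6, 1, 3]))  # [1, 2, 3, 4, 5, 6]
```

The list is modified in place, matching the pseudocode; returning it is only a convenience for the usage line.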

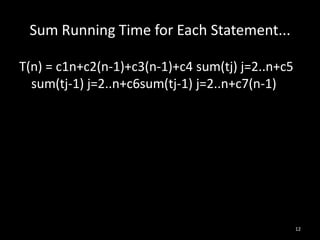

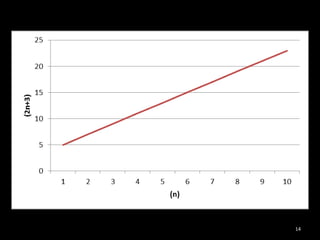

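![Best Case Running Time](https://image.slidesharecdn.com/algorithms-130123054628-phpapp02/85/Algorithms-Rocksolid-Tour-2013-13-320.jpg)

If the input A is already sorted, then A[i] <= key as soon as i has its initial value of j - 1, so the while-loop test runs exactly once for each j; that is, $t_j = 1$, where $t_j$ is the number of times the while test is executed for that value of j. The loop body never runs, so no $c_5$ or $c_6$ terms appear.

And so:

$$T(n) = c_1 n + c_2(n-1) + c_3(n-1) + c_4(n-1) + c_7(n-1) = (c_1+c_2+c_3+c_4+c_7)n - (c_2+c_3+c_4+c_7)$$

This can be expressed as $an + b$ for constants $a$ and $b$ that depend on the costs $c_i$, so $T(n)$ is a linear function of $n$.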
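To check the claim that $t_j = 1$ on sorted input, the sketch below instruments the same loop and records how many times the while condition is evaluated for each j. The helper name `while_test_counts` is hypothetical, and it uses 0-based indexing, so the k-th entry of the result corresponds to j = k + 2 in the slide's 1-based notation.

```python
def while_test_counts(a: list) -> list:
    """Insertion-sort a copy of `a` and return t_j for each outer iteration:
    the number of times the while-loop condition is evaluated."""
    a = list(a)                     # leave the caller's list untouched
    counts = []
    for j in range(1, len(a)):      # 0-based version of "for j = 2 to A.length"
        key = a[j]
        i = j - 1
        t = 1                       # the condition is always evaluated at least once
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]
            i -= 1
            t += 1                  # one more evaluation after each shift
        a[i + 1] = key
        counts.append(t)
    return counts


counts = while_test_counts([1, 2, 3, 4, 5, 6])
print(counts, sum(counts))  # [1, 1, 1, 1, 1] 5
```

On sorted input of length n every $t_j$ is 1, so the while test contributes only $c_4(n-1)$ to $T(n)$, which is what keeps the total linear.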

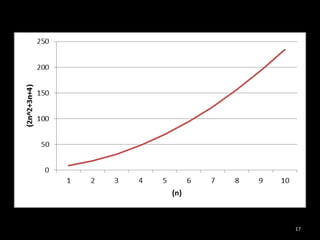

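![Worst Case Scenario](https://image.slidesharecdn.com/algorithms-130123054628-phpapp02/85/Algorithms-Rocksolid-Tour-2013-16-320.jpg)

If the input A is in reverse sorted order, then we have to compare each A[j] with every element in the subarray A[1..j-1], so $t_j = j$. Using $\sum_{j=2}^{n} j = \frac{n(n+1)}{2} - 1$ and $\sum_{j=2}^{n} (j-1) = \frac{n(n-1)}{2}$:

$$T(n) = \left(\frac{c_4}{2}+\frac{c_5}{2}+\frac{c_6}{2}\right)n^2 + \left(c_1+c_2+c_3+\frac{c_4}{2}-\frac{c_5}{2}-\frac{c_6}{2}+c_7\right)n - (c_2+c_3+c_4+c_7)$$

This can be expressed as $an^2 + bn + c$ for constants $a$, $b$ and $c$ that depend on the costs $c_i$, so $T(n)$ is a quadratic function of $n$.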
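As a sanity check on the worst-case formula, the sketch below counts line executions of InsertionSort with every cost set to 1 (a simplifying assumption, not something the slides state) and compares the count with the formula above at $c_1 = \dots = c_7 = 1$, which reduces to $(3n^2 + 7n - 8)/2$. It also assumes, consistently with the coefficients above, that $c_k$ is the cost of the k-th line after the InsertionSort(A) header. Both function names are hypothetical.

```python
def unit_cost_steps(a: list) -> int:
    """Count line executions of InsertionSort on a copy of `a`,
    i.e. T(n) with every cost c_1..c_7 assumed to be 1."""
    a = list(a)
    steps = 0
    for j in range(1, len(a)):
        steps += 1                  # c_1: loop test that enters the body
        key = a[j]
        steps += 1                  # c_2: key = A[j]
        i = j - 1
        steps += 1                  # c_3: i = j - 1
        steps += 1                  # c_4: first evaluation of the while test
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]
            steps += 1              # c_5: A[i+1] = A[i]
            i -= 1
            steps += 1              # c_6: i = i - 1
            steps += 1              # c_4: re-evaluation of the while test
        a[i + 1] = key
        steps += 1                  # c_7: A[i+1] = key
    steps += 1                      # c_1: final loop test that exits the loop
    return steps


def worst_case_formula(n: int) -> int:
    """The worst-case T(n) above with c_1 = ... = c_7 = 1."""
    return (3 * n * n + 7 * n - 8) // 2


for n in range(1, 8):
    reversed_input = list(range(n, 0, -1))
    print(n, unit_cost_steps(reversed_input), worst_case_formula(n))
```

The two columns agree for reverse-sorted input. Running the same counter on sorted input yields $5n - 4$, the best-case expression with unit costs.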

![Analysis of Merge Sort](https://image.slidesharecdn.com/algorithms-130123054628-phpapp02/85/Algorithms-Rocksolid-Tour-2013-29-320.jpg)

MergeSort(A, p, r)

if p < r

q = ⌊(p + r)/2⌋

MergeSort(A, p, q)

MergeSort(A, q + 1, r)

Merge(A, p, q, r)

Initial call: MergeSort(A, 1, A.length)
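The slide calls Merge(A, p, q, r) without showing it. The Python sketch below fills it in with a standard two-pointer merge (an assumption; the presentation may use a different variant, such as a sentinel-based one) and follows the MergeSort pseudocode with 0-based, inclusive indices.

```python
def merge(a: list, p: int, q: int, r: int) -> None:
    """Merge the sorted runs a[p..q] and a[q+1..r] (inclusive, 0-based) in place."""
    left = a[p:q + 1]
    right = a[q + 1:r + 1]
    i = j = 0
    k = p
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:     # <= takes from the left run on ties, keeping the sort stable
            a[k] = left[i]
            i += 1
        else:
            a[k] = right[j]
            j += 1
        k += 1
    # Copy whichever run still has elements left
    while i < len(left):
        a[k] = left[i]
        i += 1
        k += 1
    while j < len(right):
        a[k] = right[j]
        j += 1
        k += 1


def merge_sort(a: list, p: int, r: int) -> None:
    """Sort a[p..r] (inclusive) following the MergeSort(A, p, r) pseudocode."""
    if p < r:
        q = (p + r) // 2            # floor of (p + r) / 2
        merge_sort(a, p, q)
        merge_sort(a, q + 1, r)
        merge(a, p, q, r)


data = [5, 2, 4, 7, 1, 3, 2, 6]
merge_sort(data, 0, len(data) - 1)  # 0-based version of MergeSort(A, 1, A.length)
print(data)  # [1, 2, 2, 3, 4, 5, 6, 7]
```

Because Merge does work proportional to r - p + 1 and each call splits the range in half, the running time satisfies $T(n) = 2T(n/2) + \Theta(n)$, which solves to $\Theta(n \lg n)$.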