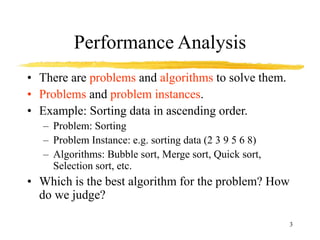

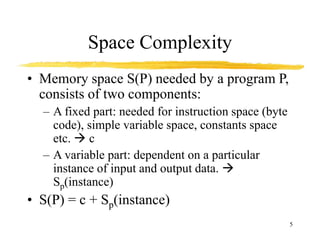

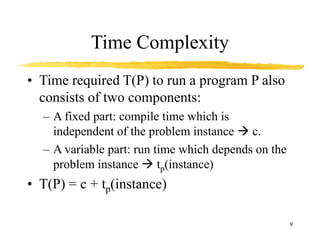

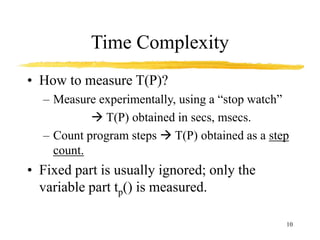

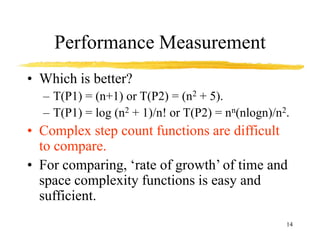

This document discusses performance analysis of algorithms. It introduces key concepts like time complexity, which measures the amount of CPU time an algorithm needs to run, and space complexity, which measures the amount of memory needed. Common time complexities include constant O(1), linear O(n), quadratic O(n^2), and exponential O(2^n). Big O notation is used to analyze the rate of growth of these complexities and simplify comparisons between algorithms.
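
To make the difference between these growth rates concrete, here is a small sketch that tabulates each one for a few input sizes. The function name `growth_table` and the chosen sizes are illustrative assumptions, not part of the original slides.

```python
def growth_table(sizes):
    """Return one row (n, 1, n, n^2, 2^n) per input size n."""
    return [(n, 1, n, n ** 2, 2 ** n) for n in sizes]


# Print the constant, linear, quadratic and exponential columns side by side.
for row in growth_table([1, 10, 20, 30]):
    print(row)
```

Even at n = 30 the exponential column is already above one billion, while the quadratic column is only 900.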

![Slide 7](https://image.slidesharecdn.com/algorithm-analysis-230309123427-92a78653/85/ALGORITHM-ANALYSIS-ppt-7-320.jpg)

Space Complexity: Example 2

    Algorithm Sum(a[], n)
    {
        s := 0.0;
        for i := 1 to n do
            s := s + a[i];
        return s;
    }
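
The Sum pseudocode on this slide can be written as runnable Python roughly as follows. This is a minimal sketch: the name `sum_array` is an assumption, and the pseudocode's 1-based index is shifted to Python's 0-based indexing.

```python
def sum_array(a, n):
    """Sum the first n elements of a, mirroring the Sum pseudocode."""
    s = 0.0
    for i in range(n):  # pseudocode i = 1..n becomes 0..n-1 here
        s = s + a[i]
    return s


print(sum_array([1.5, 2.5, 4.0], 3))  # 8.0
```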

![Slide 8](https://image.slidesharecdn.com/algorithm-analysis-230309123427-92a78653/85/ALGORITHM-ANALYSIS-ppt-8-320.jpg)

Space Complexity: Example 2

- Every instance needs to store the array a[] and n.
  - Space needed to store n = 1 word.
  - Space needed to store a[] = n floating-point words (or at least n words).
  - Space needed to store i and s = 2 words.
- S_P(n) = n + 3. Hence S(P) = n + 3.
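
The word count on this slide can be tallied term by term. The sketch below assumes the slide's cost model (one word per scalar and one word per array element); the function name `sum_space_words` is hypothetical.

```python
def sum_space_words(n):
    """Words needed by Sum under the slide's model of one word per value."""
    words_for_n = 1        # the parameter n
    words_for_a = n        # the array a[], one word per element
    words_for_i_and_s = 2  # loop index i and accumulator s
    return words_for_n + words_for_a + words_for_i_and_s


print(sum_space_words(10))  # 13, i.e. n + 3 with n = 10
```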

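![Slide 12](https://image.slidesharecdn.com/algorithm-analysis-230309123427-92a78653/85/ALGORITHM-ANALYSIS-ppt-12-320.jpg)

Time Complexity: Example 1

| Line | Statement | S/E (steps per execution) | Freq. | Total |
| --- | --- | --- | --- | --- |
| 1 | `Algorithm Sum(a[], n)` | 0 | - | 0 |
| 2 | `{` | 0 | - | 0 |
| 3 | `s = 0.0;` | 1 | 1 | 1 |
| 4 | `for i = 1 to n do` | 1 | n+1 | n+1 |
| 5 | `s = s + a[i];` | 1 | n | n |
| 6 | `return s;` | 1 | 1 | 1 |
| 7 | `}` | 0 | - | 0 |
| | Total | | | 2n+3 |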
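
The 2n+3 total can be checked by instrumenting the loop with a counter. This is a sketch under the table's counting convention (one step per executed statement, and the loop test counted n+1 times); `sum_with_steps` is a hypothetical name.

```python
def sum_with_steps(a, n):
    """Return (sum, step count) using the table's counting convention."""
    steps = 0
    s = 0.0
    steps += 1          # line 3: s = 0.0, executed once
    i = 1
    while True:
        steps += 1      # line 4: loop test, executed n + 1 times
        if i > n:
            break
        s = s + a[i - 1]
        steps += 1      # line 5: loop body, executed n times
        i += 1
    steps += 1          # line 6: return s, executed once
    return s, steps


total, steps = sum_with_steps([1.0, 2.0, 3.0, 4.0], 4)
print(total, steps)  # 10.0 11, and 2*4 + 3 = 11
```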

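![Slide 13](https://image.slidesharecdn.com/algorithm-analysis-230309123427-92a78653/85/ALGORITHM-ANALYSIS-ppt-13-320.jpg)

Time Complexity: Example 2

| Line | Statement | S/E (steps per execution) | Freq. | Total |
| --- | --- | --- | --- | --- |
| 1 | `Algorithm Sum(a[], n, m)` | 0 | - | 0 |
| 2 | `{` | 0 | - | 0 |
| 3 | `for i = 1 to n do` | 1 | n+1 | n+1 |
| 4 | `for j = 1 to m do` | 1 | n(m+1) | n(m+1) |
| 5 | `s = s + a[i][j];` | 1 | nm | nm |
| 6 | `return s;` | 1 | 1 | 1 |
| | Total | | | 2nm+2n+2 |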
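
The same instrumentation idea confirms the 2nm+2n+2 total for the nested loops. This is a sketch, not the slide author's code: `matrix_sum_with_steps` is a hypothetical name, and `s` is initialised here without being counted as a step because the slide's table has no such line.

```python
def matrix_sum_with_steps(a, n, m):
    """Return (sum, step count) for an n-by-m array, per the table's convention."""
    steps = 0
    s = 0.0  # assumption: not on the slide, so not counted as a step
    i = 1
    while True:
        steps += 1          # line 3: outer loop test, n + 1 times
        if i > n:
            break
        j = 1
        while True:
            steps += 1      # line 4: inner loop test, n(m + 1) times
            if j > m:
                break
            s = s + a[i - 1][j - 1]
            steps += 1      # line 5: inner body, nm times
            j += 1
        i += 1
    steps += 1              # line 6: return s, once
    return s, steps


grid = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
print(matrix_sum_with_steps(grid, 2, 3))  # (21.0, 18), and 2*2*3 + 2*2 + 2 = 18
```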

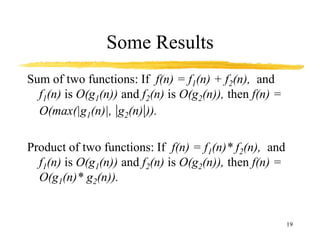

![Slide 15](https://image.slidesharecdn.com/algorithm-analysis-230309123427-92a78653/85/ALGORITHM-ANALYSIS-ppt-15-320.jpg)

Big O Notation

- The Big O of a function gives the ‘rate of growth’ of the step count function f(n) in terms of a simple function g(n) that is easy to compare.
- Definition [Big O]: f(n) = O(g(n)) (read "big oh of g of n") iff there exist positive constants c and n0 such that f(n) <= c*g(n) for all n >= n0. See graph on next slide.
- Example: f(n) = 3n+2 is O(n) because 3n+2 <= 4n for all n >= 2. Here c = 4, n0 = 2, and g(n) = n.
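
The witnesses c = 4 and n0 = 2 from the example can be spot-checked numerically. The sketch below only tests a finite range, so it illustrates the definition rather than proving it; `holds_from` is a hypothetical helper name.

```python
def holds_from(f, g, c, n0, upto):
    """Check f(n) <= c * g(n) for every integer n with n0 <= n <= upto."""
    return all(f(n) <= c * g(n) for n in range(n0, upto + 1))


def f(n):
    return 3 * n + 2  # step count function from the example


def g(n):
    return n          # comparison function from the example


print(holds_from(f, g, c=4, n0=2, upto=1000))  # True
print(holds_from(f, g, c=4, n0=1, upto=1000))  # False, because f(1) = 5 > 4
```

The second call shows why n0 matters: the bound 3n+2 <= 4n fails at n = 1 and holds from n = 2 onward.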