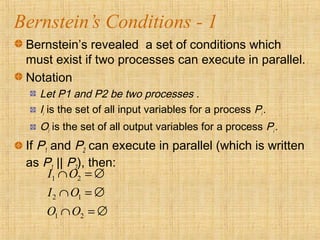

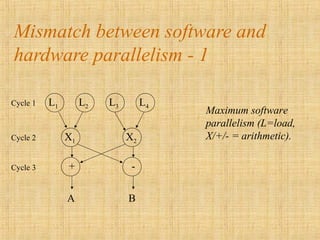

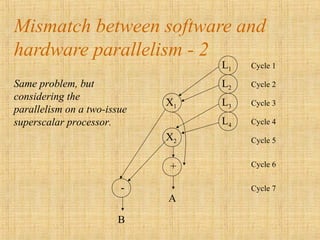

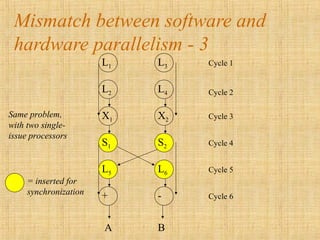

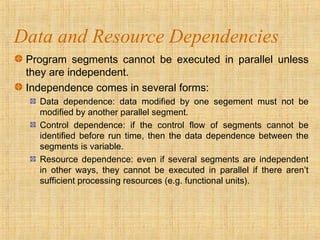

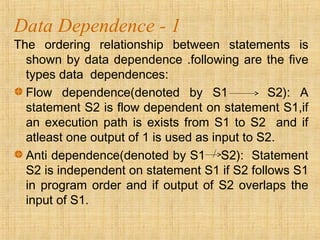

The document discusses the conditions necessary for exploiting parallelism in computer architecture, emphasizing data, control, and resource dependencies that affect parallel execution. It explains various types of data dependencies, the importance of independence among program segments, and Bernstein's conditions for parallel execution. Additionally, it covers hardware and software parallelism, the mismatch between them, and the critical role of compilers in optimizing parallel performance.
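
Bernstein's conditions, mentioned above, can be stated compactly: two program segments P1 and P2 with input sets I1, I2 and output sets O1, O2 may run in parallel when I1 ∩ O2, I2 ∩ O1, and O1 ∩ O2 are all empty. The sketch below is only an illustration of that test. The `Segment` type, the bitmask encoding of variables, and the function name are assumptions made for this example, not something taken from the slides.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical encoding: bit k set means variable k belongs to the set. */
typedef struct {
    uint32_t in;   /* input set I: variables the segment reads   */
    uint32_t out;  /* output set O: variables the segment writes */
} Segment;

/* Returns true when p1 and p2 satisfy all three Bernstein conditions. */
bool bernstein_parallel(Segment p1, Segment p2) {
    bool no_flow   = (p1.out & p2.in)  == 0;  /* O1 and I2 are disjoint */
    bool no_anti   = (p1.in  & p2.out) == 0;  /* I1 and O2 are disjoint */
    bool no_output = (p1.out & p2.out) == 0;  /* O1 and O2 are disjoint */
    return no_flow && no_anti && no_output;
}
```

With variables A, B, C, D mapped to bits 0 to 3, the segments `C = A + B` (in = 0x3, out = 0x4) and `D = A * 2` (in = 0x1, out = 0x8) pass the test, while `C = A + B` and `A = C + 1` (in = 0x4, out = 0x1) fail it because each one writes a variable the other reads.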

![Control Dependence](https://image.slidesharecdn.com/unit-1-conditionsofparallelism-180209071619/85/advanced-computer-architesture-conditions-of-parallelism-11-320.jpg)

Control dependence may also exist between operations performed in successive iterations of a looping procedure. The successive iterations of the following loop are control-independent. Example:

    for (i = 0; i < n; i++) {
        a[i] = c[i];
        if (a[i] < 0) a[i] = 1;
    }
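
One way to see why this loop is control-independent: the branch in iteration `i` tests only `a[i]`, which that same iteration has just set from `c[i]`, so no iteration's branch outcome depends on another iteration. The following is a minimal sketch, assuming `a` and `c` do not overlap in memory. The function names are made up for this illustration.

```c
#include <stddef.h>

/* Loop body from the slide: iteration i touches only a[i] and c[i]. */
static void clamp_iteration(int *a, const int *c, size_t i) {
    a[i] = c[i];
    if (a[i] < 0) a[i] = 1;
}

/* Original order: i = 0 .. n-1. */
void clamp_forward(int *a, const int *c, size_t n) {
    for (size_t i = 0; i < n; i++) clamp_iteration(a, c, i);
}

/* Reversed order: i = n-1 .. 0. The result is the same array, because
   no iteration reads a value that another iteration writes. */
void clamp_reverse(int *a, const int *c, size_t n) {
    for (size_t i = n; i-- > 0; ) clamp_iteration(a, c, i);
}
```

For `c = {3, -2, 0, -7, 5}`, both orders produce `{3, 1, 0, 1, 5}`. Because the order of iterations does not matter, they are candidates for parallel execution.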

![Control Dependence](https://image.slidesharecdn.com/unit-1-conditionsofparallelism-180209071619/85/advanced-computer-architesture-conditions-of-parallelism-12-320.jpg)

The successive iterations of the following loop are control-dependent, because the branch in iteration `i` tests `a[i-1]`, which iteration `i-1` may have modified. Example:

    for (i = 1; i < n; i++) {
        if (a[i-1] < 0) a[i] = 1;
    }

Compiler techniques are needed to get around control dependence limitations.
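
A loop whose branch tests `a[i-1]` carries a control dependence from one iteration to the next: whether iteration `i` writes `a[i]` depends on a value that iteration `i-1` may have changed. The sketch below runs such a loop in both directions to make the order sensitivity visible. The function names are made up for this illustration.

```c
#include <stddef.h>

/* Loop body from the slide: the branch in iteration i reads a[i-1]. */
static void propagate_iteration(int *a, size_t i) {
    if (a[i - 1] < 0) a[i] = 1;
}

/* Original order: i = 1 .. n-1. */
void propagate_forward(int *a, size_t n) {
    for (size_t i = 1; i < n; i++) propagate_iteration(a, i);
}

/* Reversed order: i = n-1 .. 1. Each branch now sees a[i-1] before
   iteration i-1 has had a chance to overwrite it. */
void propagate_reverse(int *a, size_t n) {
    for (size_t i = n; i-- > 1; ) propagate_iteration(a, i);
}
```

Starting from `a = {-1, -2, 5}`, the forward loop yields `{-1, 1, 5}`: iteration 1 overwrites `a[1]` with 1, so the branch in iteration 2 is not taken. The reversed loop yields `{-1, 1, 1}`, because iteration 2 still sees the original `a[1] = -2`. The differing results show that these iterations cannot be reordered or run in parallel without further analysis.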