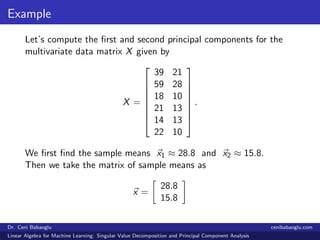

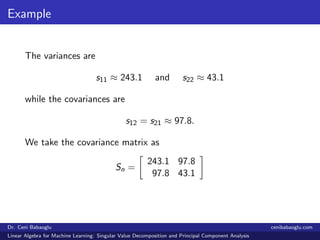

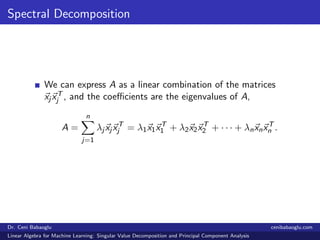

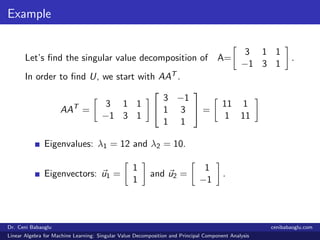

This document discusses the concepts of singular value decomposition (SVD) and principal component analysis (PCA) within the context of linear algebra for machine learning. It provides detailed explanations, mathematical formulations, and examples of spectral decomposition, SVD, and PCA, emphasizing their applications in data analysis. References for further reading on linear algebra are also included.

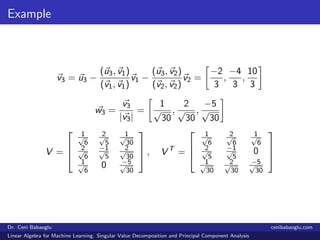

![Example

Using Gram-Schmidt Process

v1 = u1 w1 =

v1

|v1|

=

1/

√

2

1/

√

2

v2 = u2 −

(u2, v1)

(v1, v1)

v1

v2 =

1

−1

− 0

1

1

=

1

−1

− [0, 0] =

1

−1

w2 =

v2

|v2|

=

1

√

2

,

−1

√

2

U =

1/

√

2 1/

√

2

1/

√

2 −1/

√

2

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Singular Value Decomposition and Principal Component Analysis](https://image.slidesharecdn.com/5-190527200756/85/5-Linear-Algebra-for-Machine-Learning-Singular-Value-Decomposition-and-Principal-Component-Analysis-11-320.jpg)

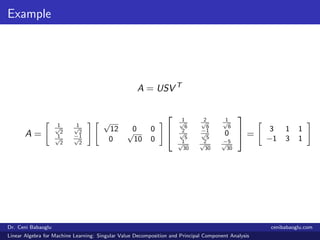

![Example

v1 = u1, w1 =

v1

|v1|

=

1

√

6

,

2

√

6

,

1

√

6

v2 = u2 −

(u2, v1)

(v1, v1)

v1 = [2, −1, 0]

w2 =

v2

|v2|

=

2

√

5

,

−1

√

5

, 0

Dr. Ceni Babaoglu cenibabaoglu.com

Linear Algebra for Machine Learning: Singular Value Decomposition and Principal Component Analysis](https://image.slidesharecdn.com/5-190527200756/85/5-Linear-Algebra-for-Machine-Learning-Singular-Value-Decomposition-and-Principal-Component-Analysis-13-320.jpg)