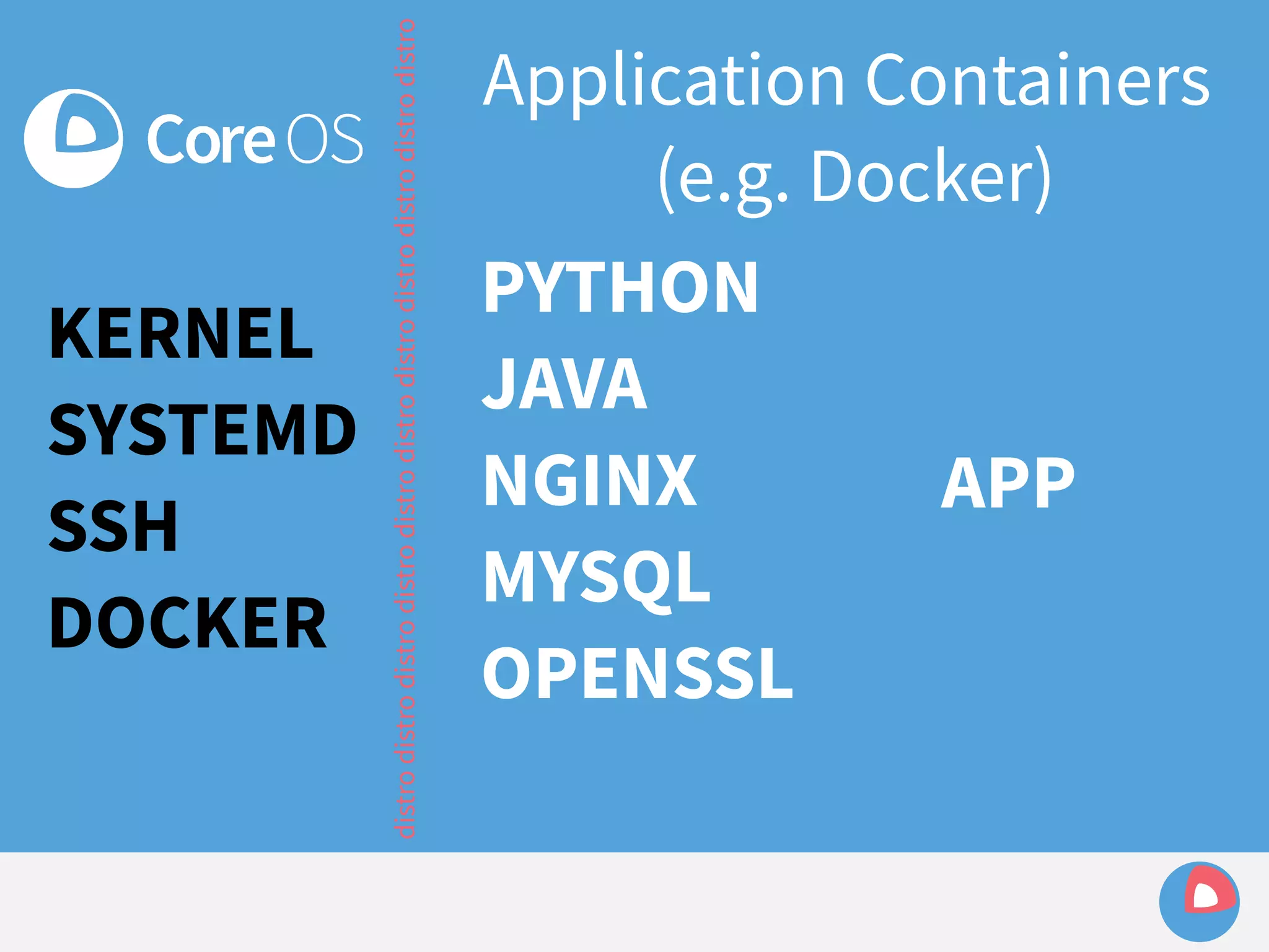

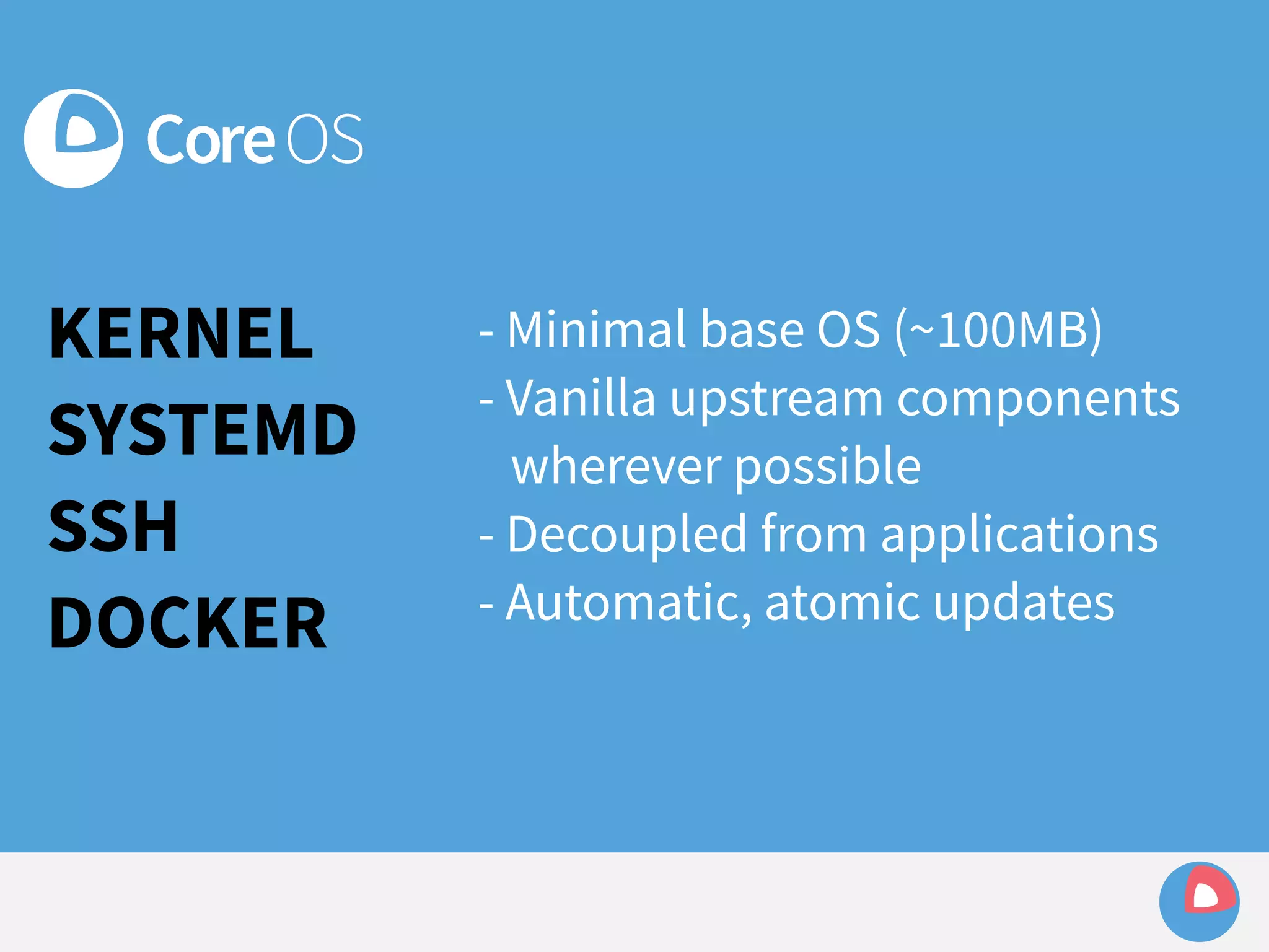

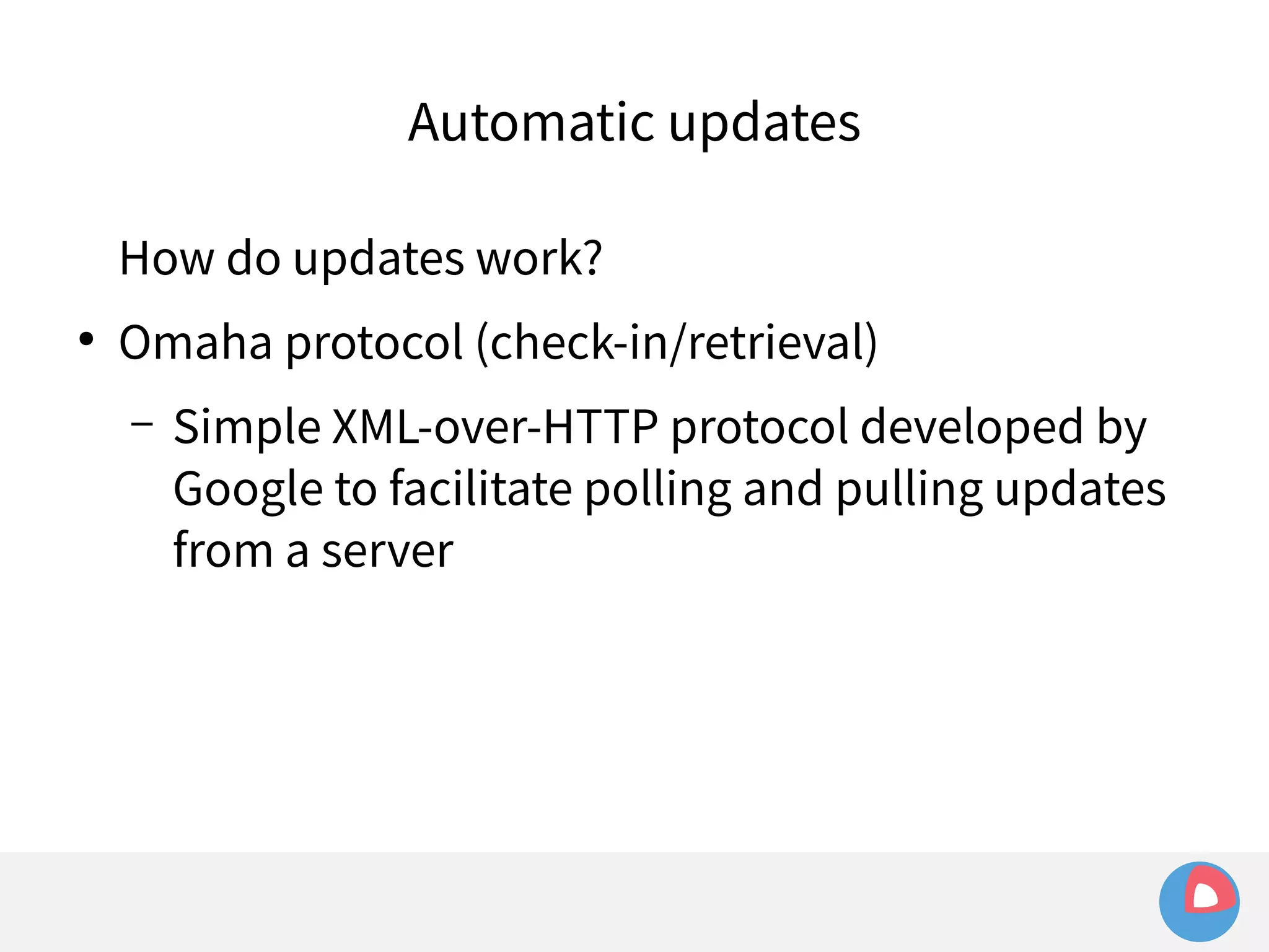

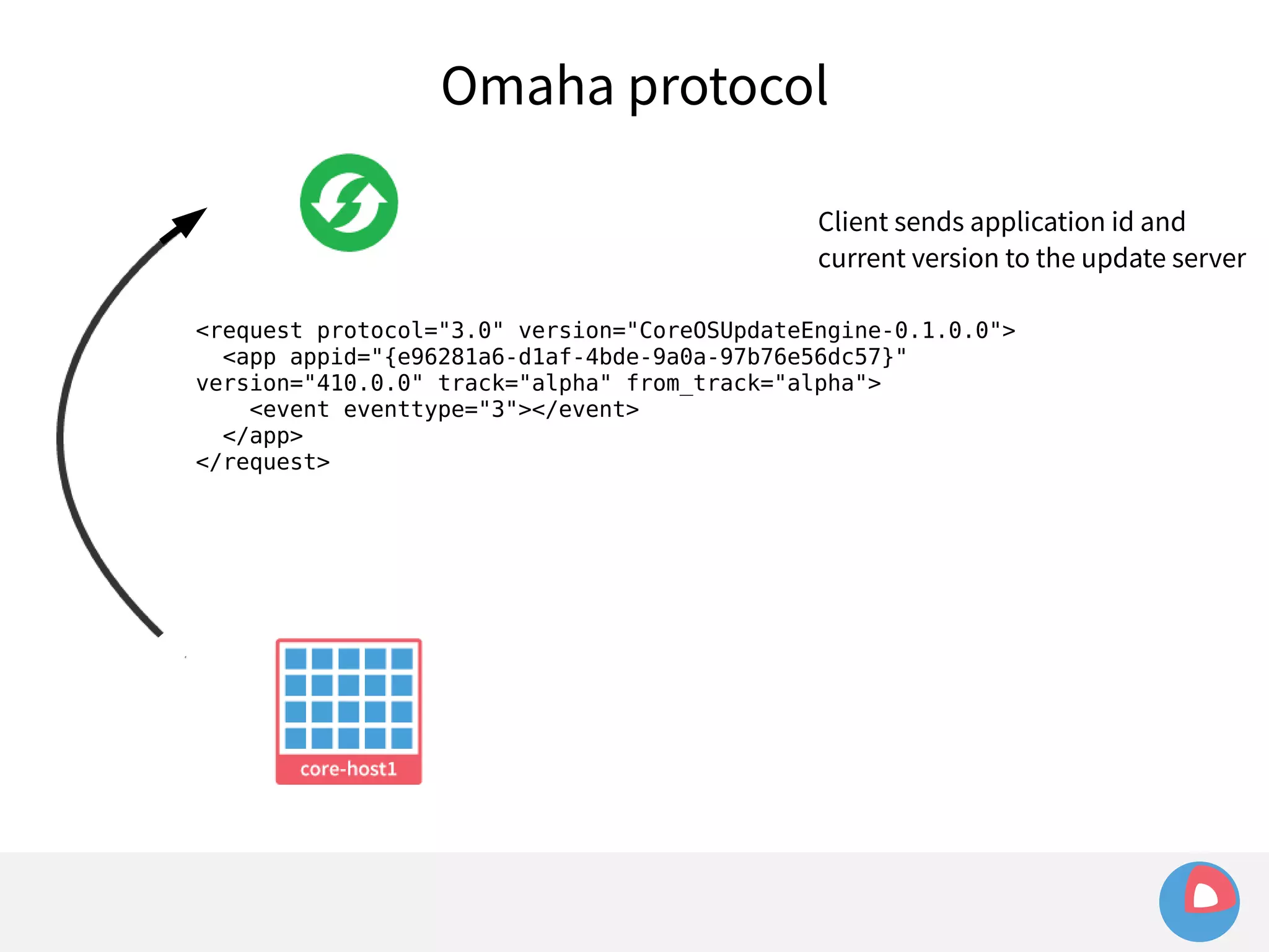

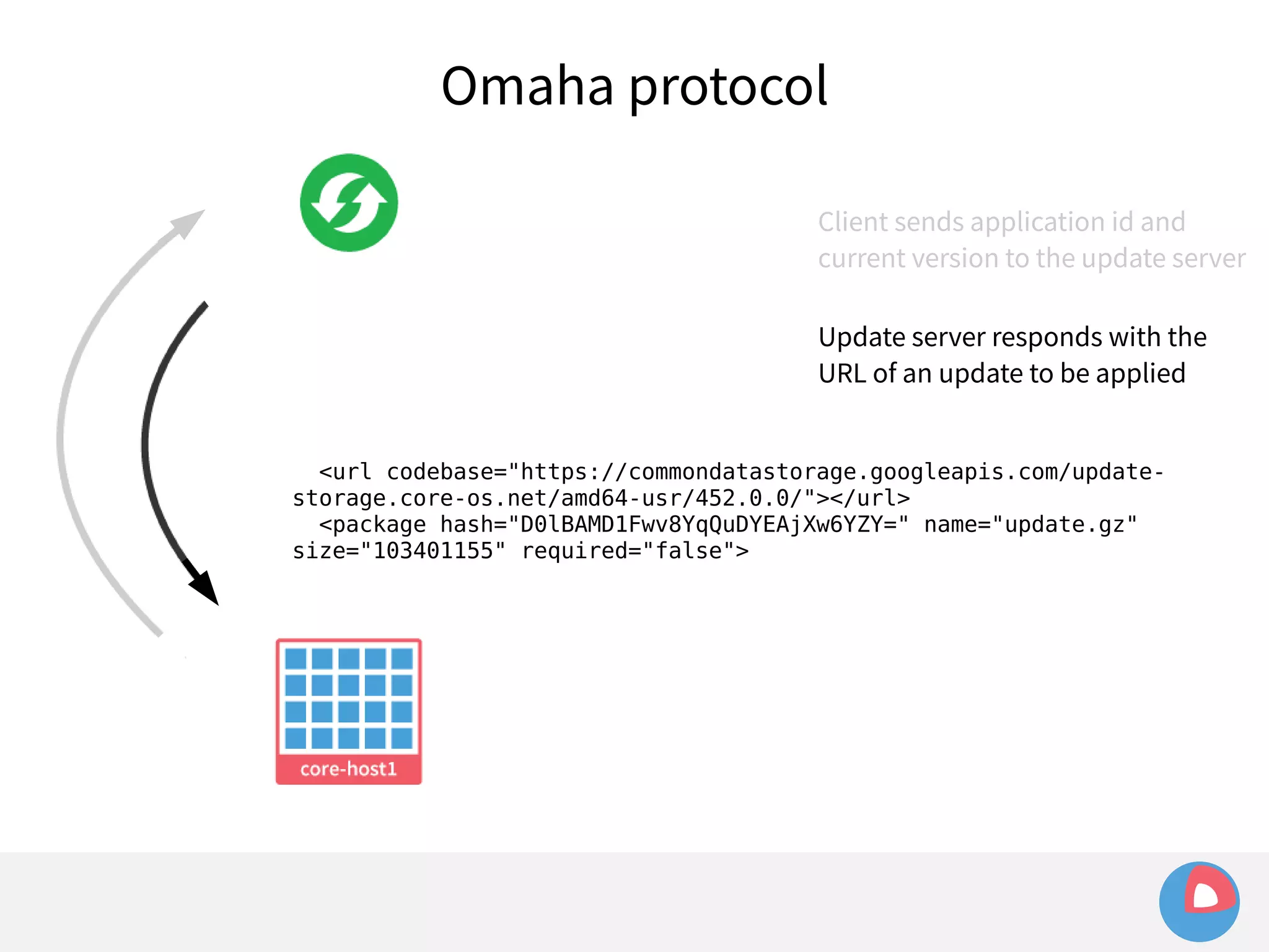

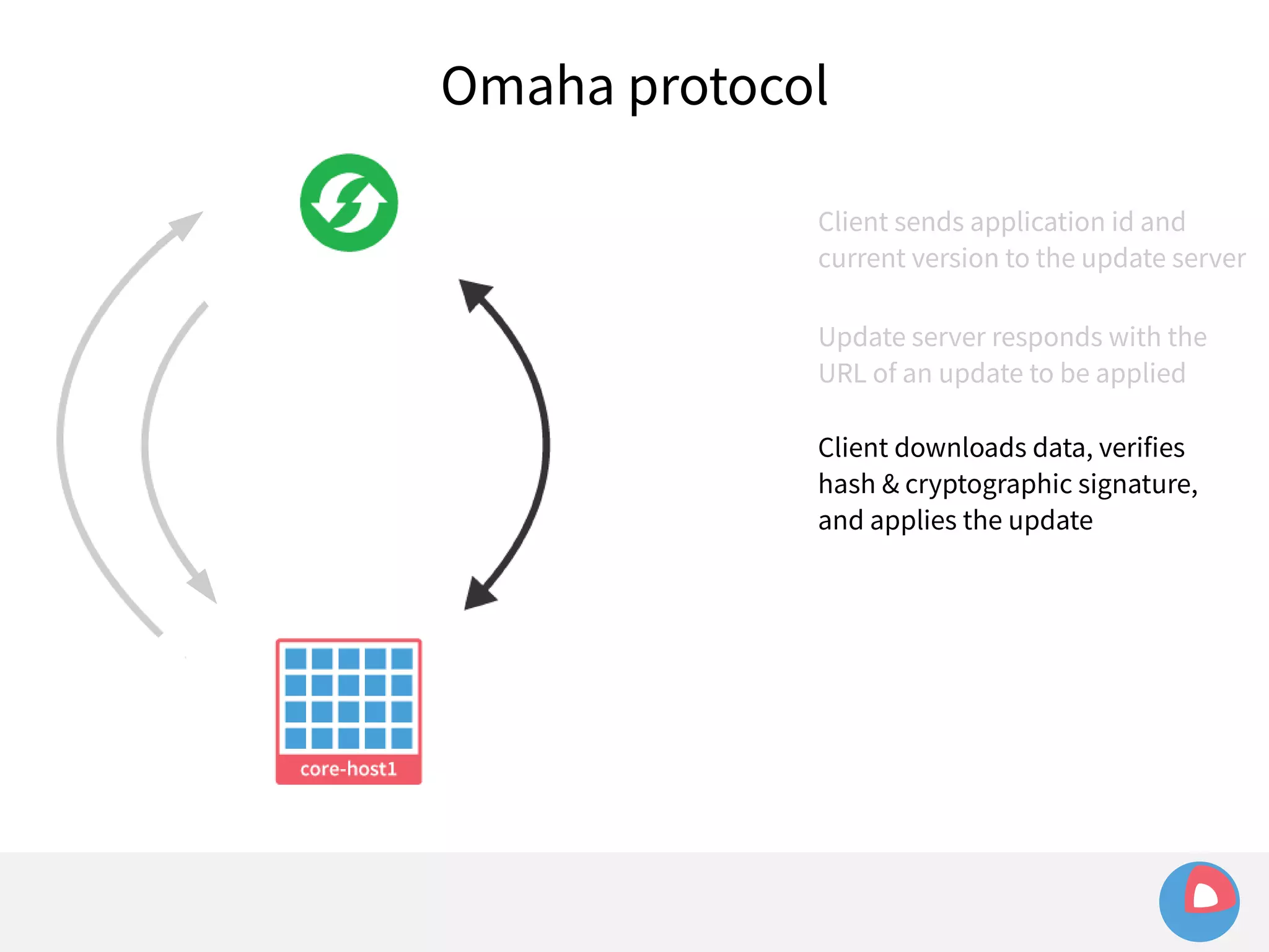

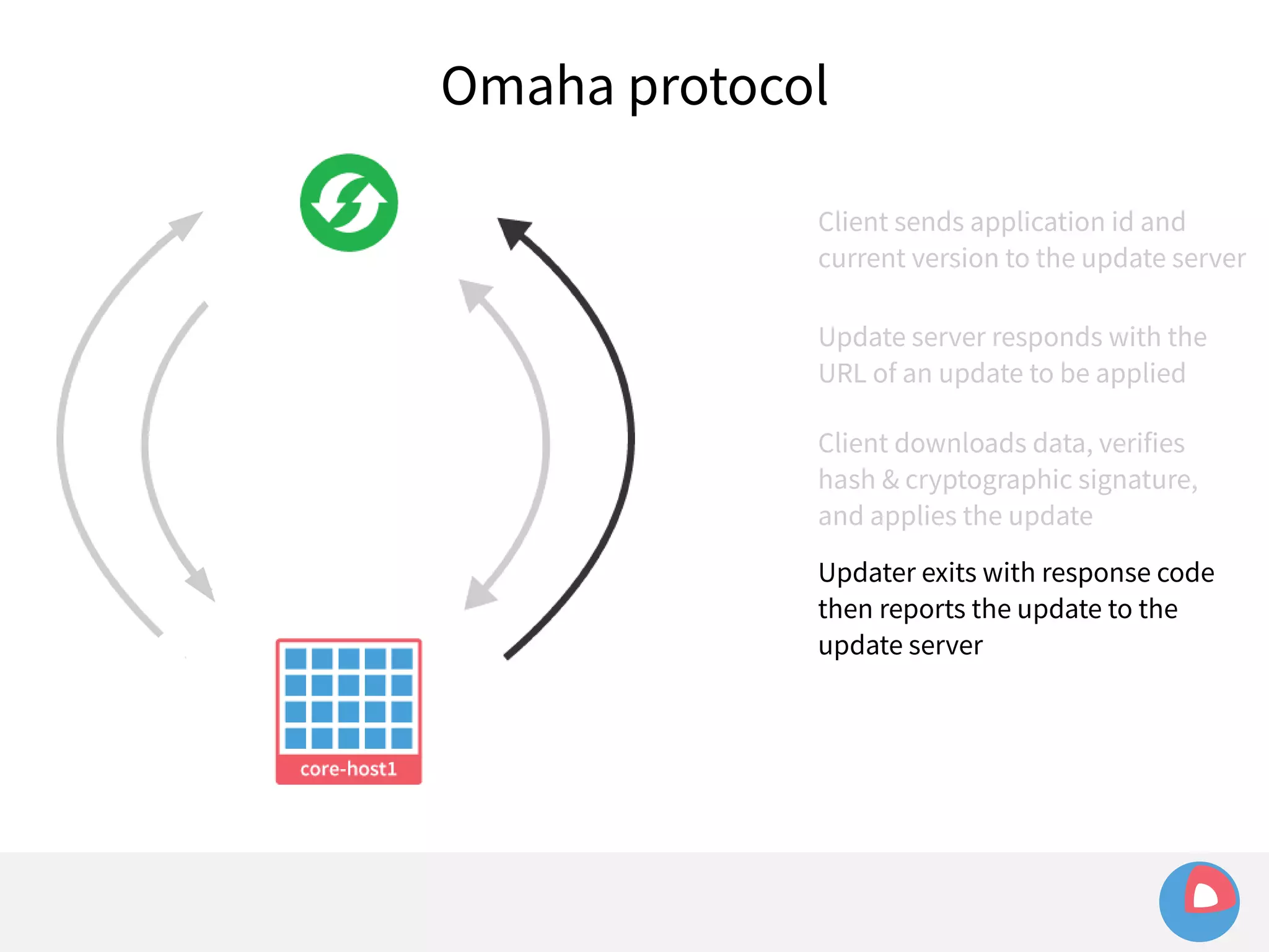

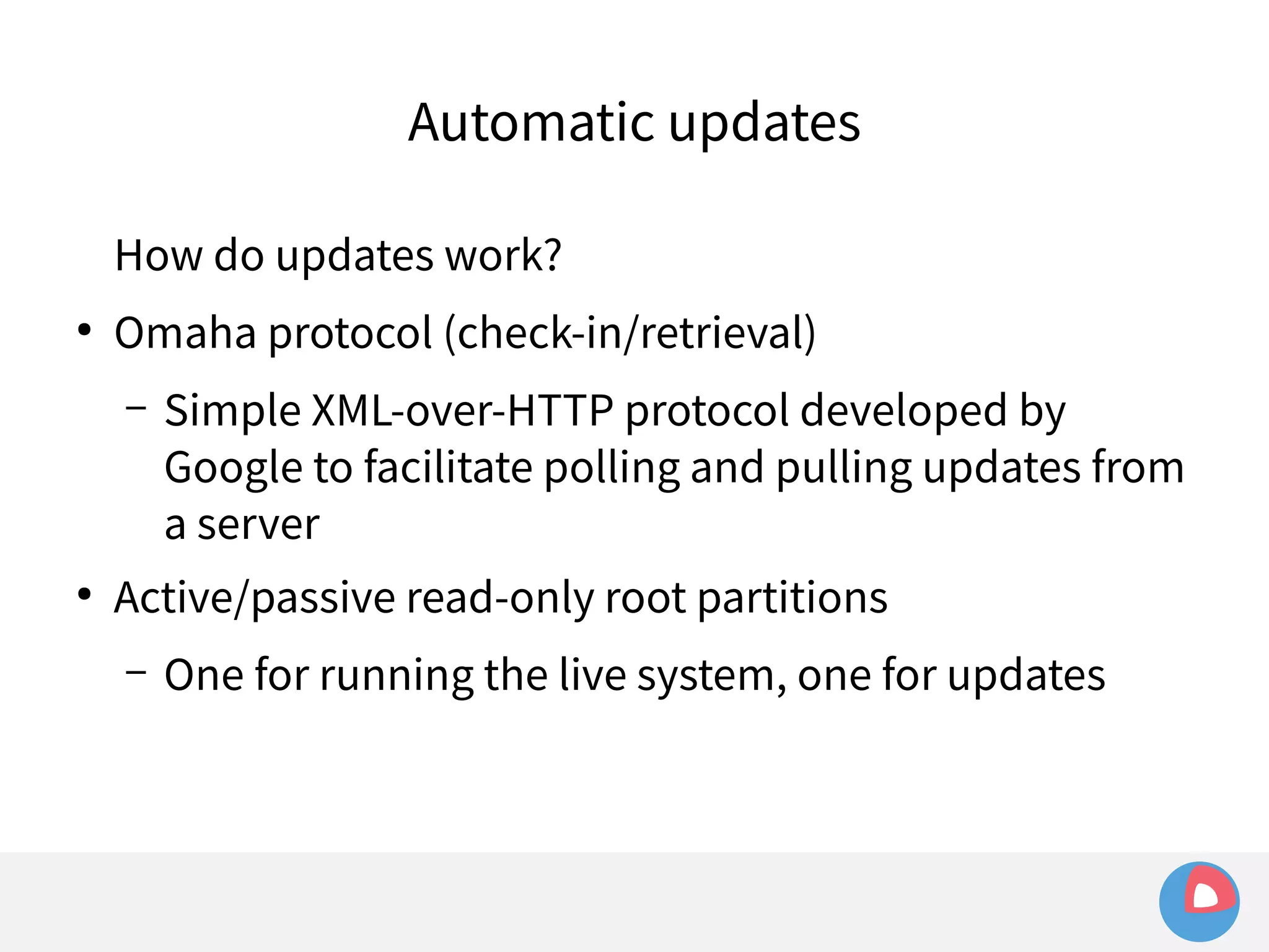

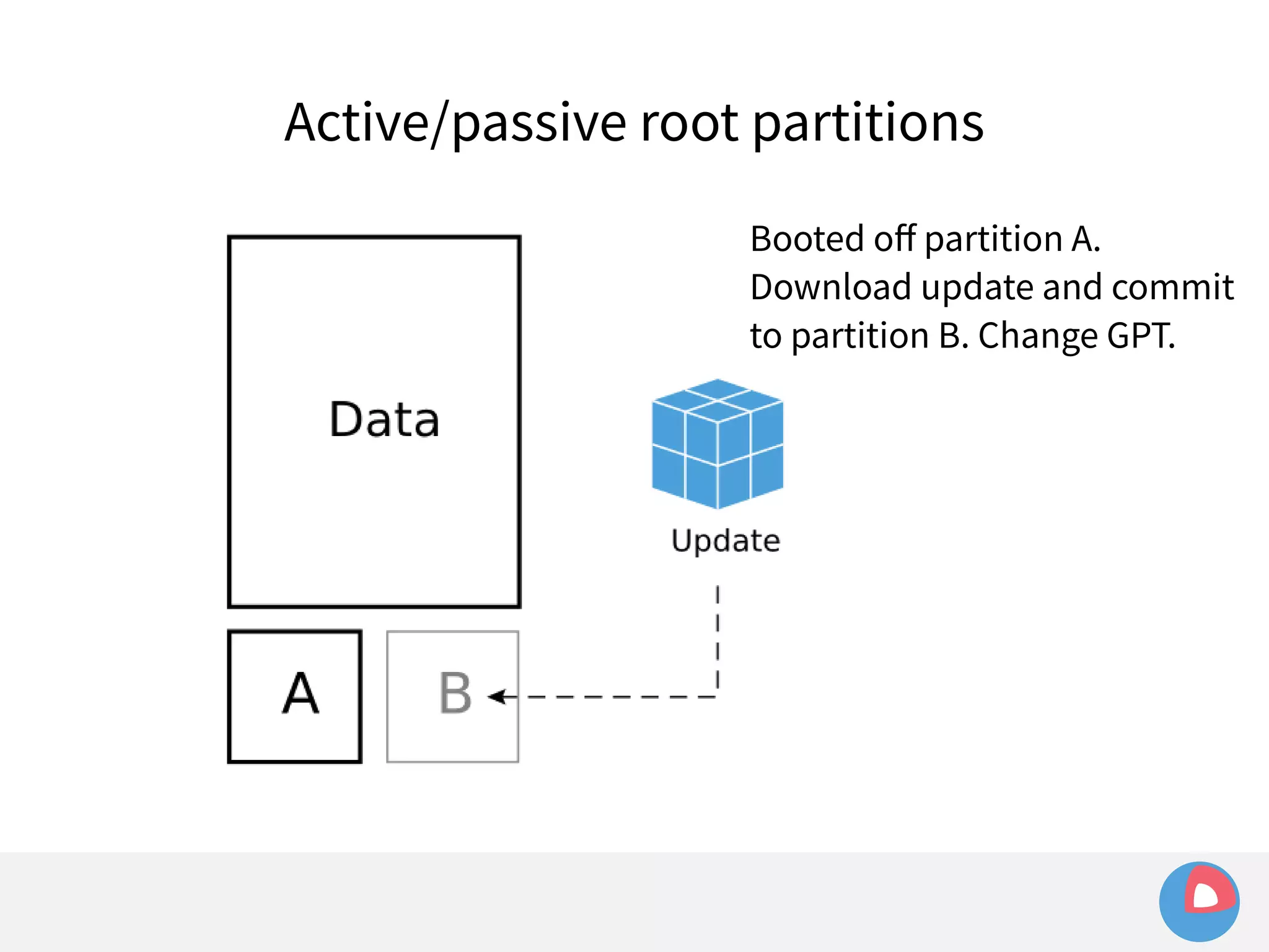

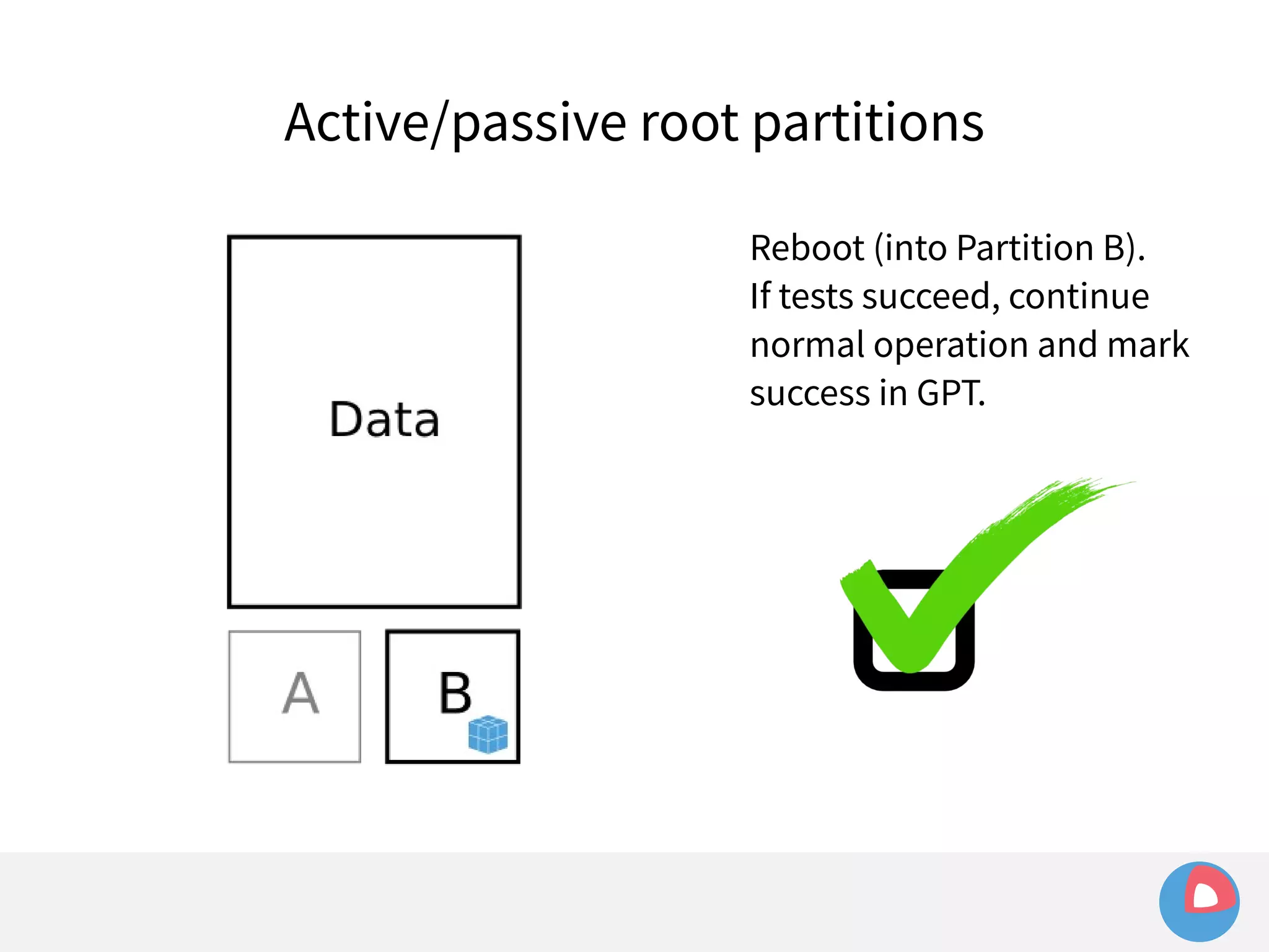

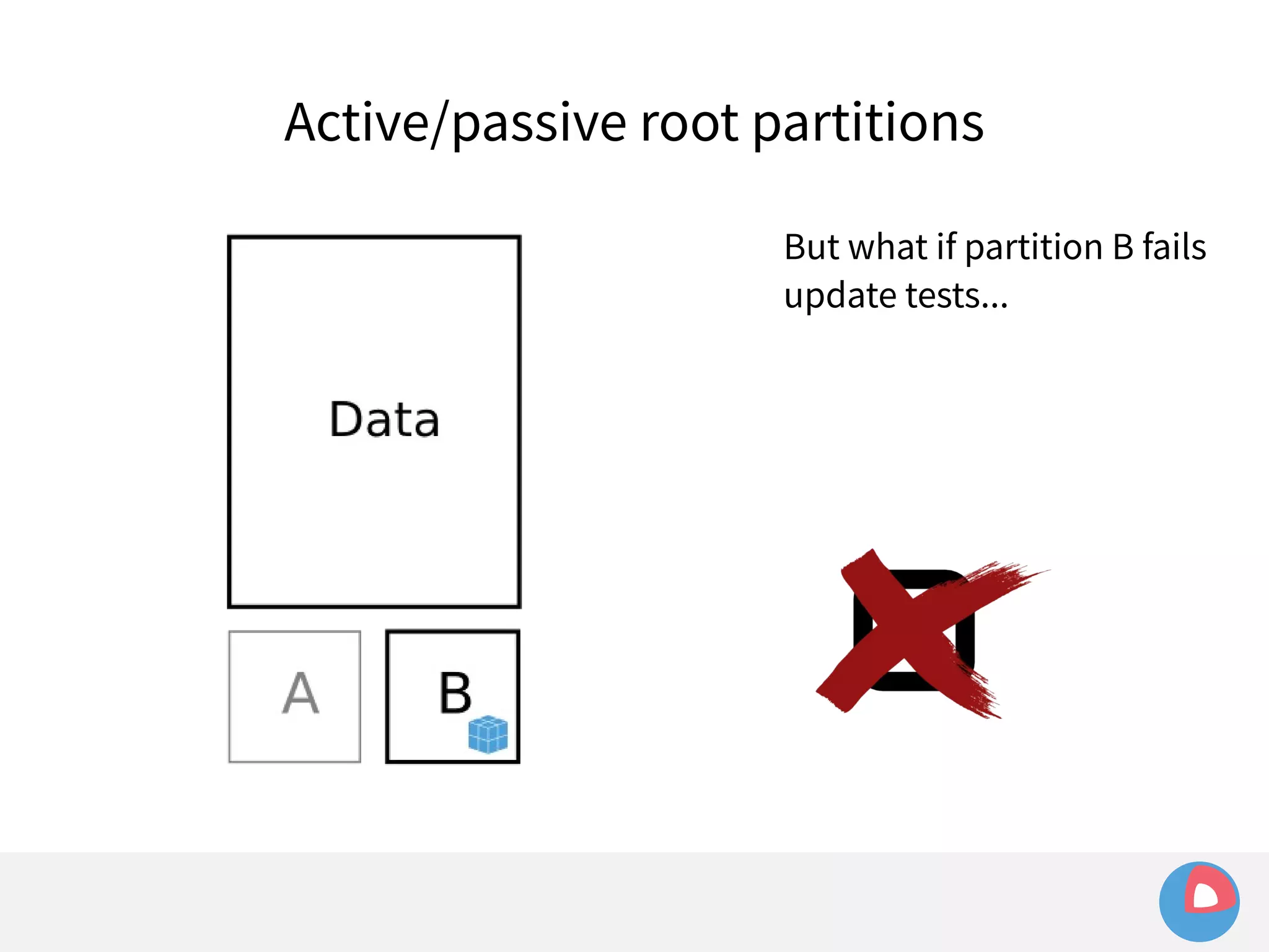

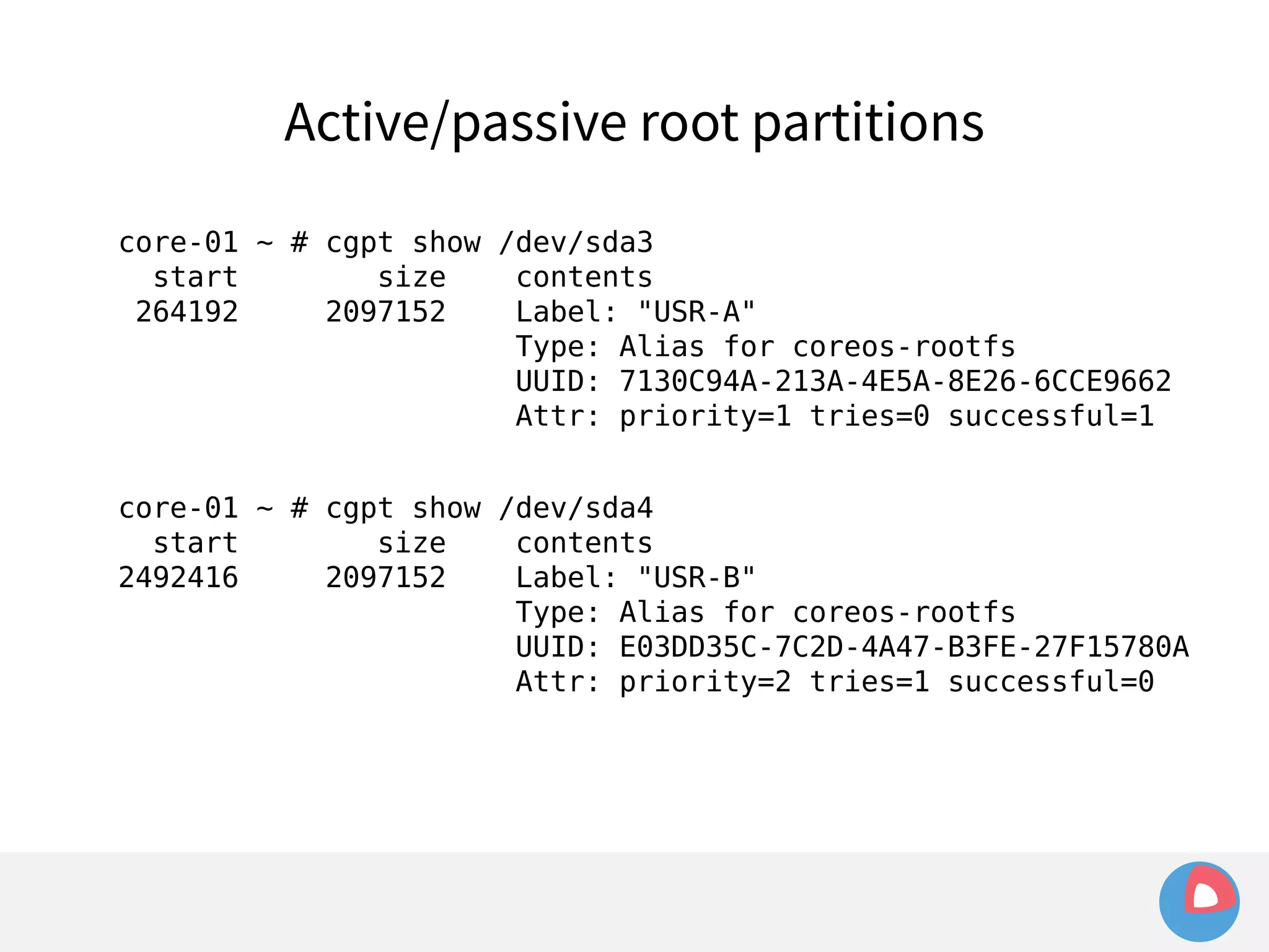

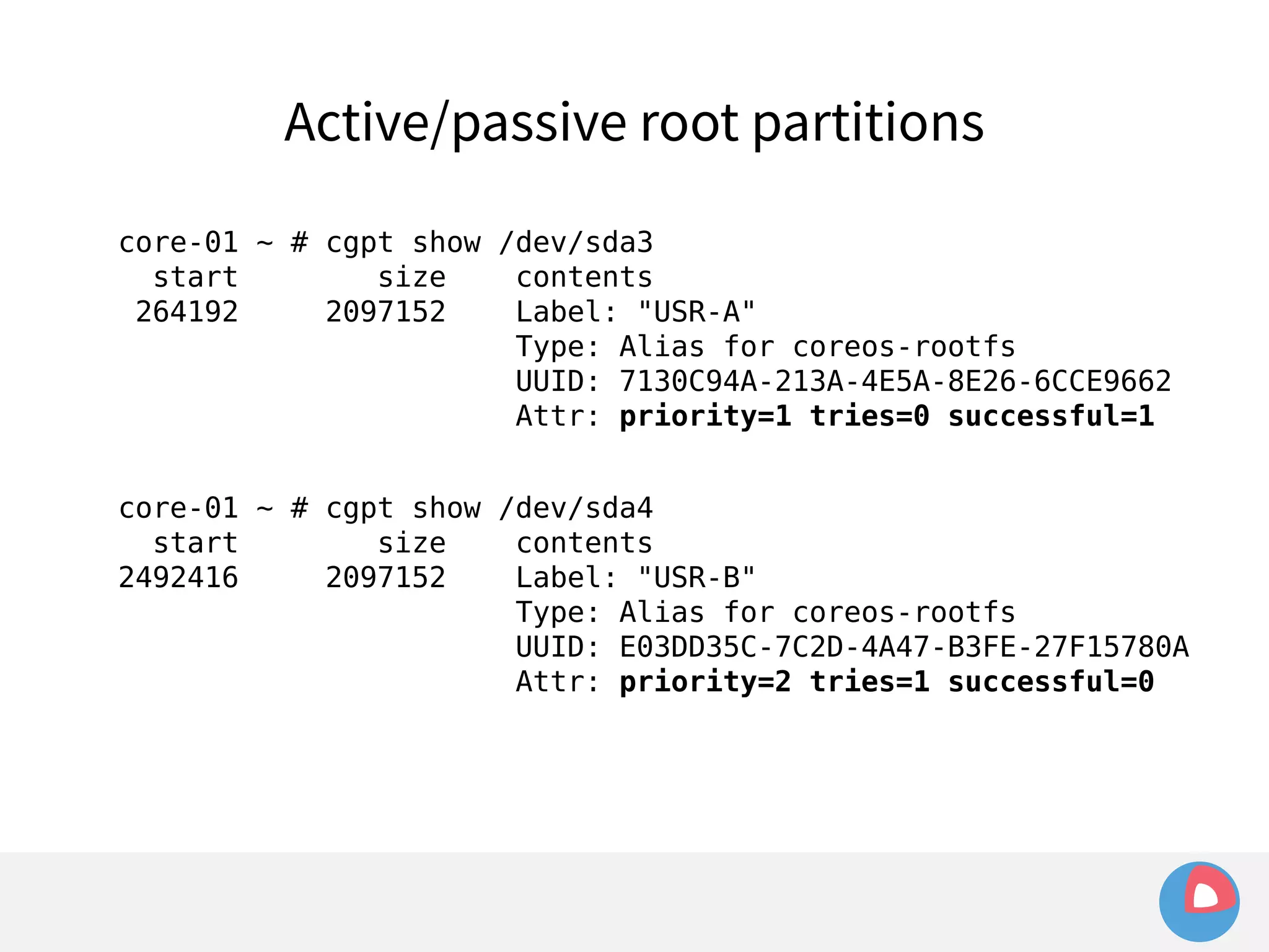

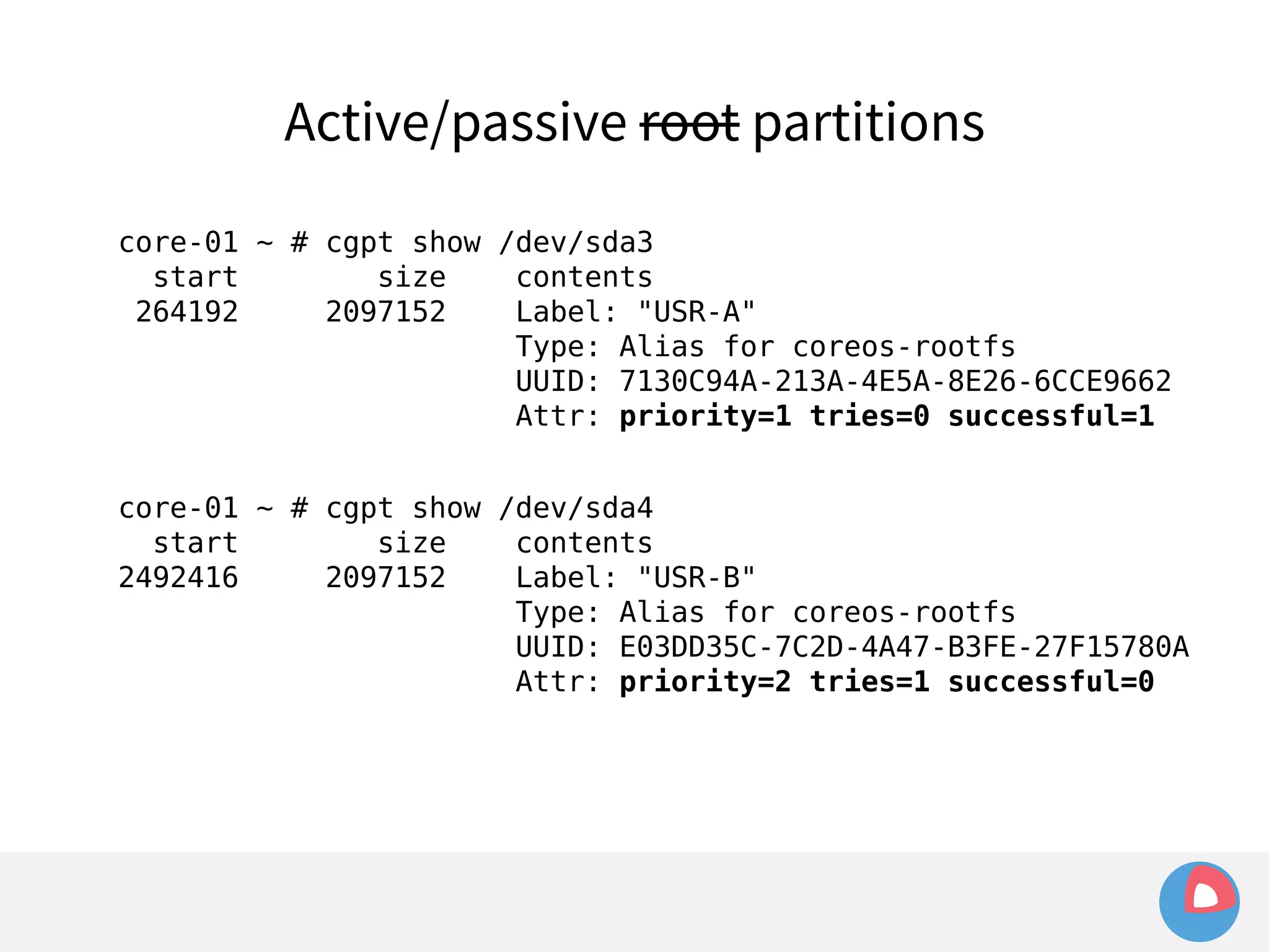

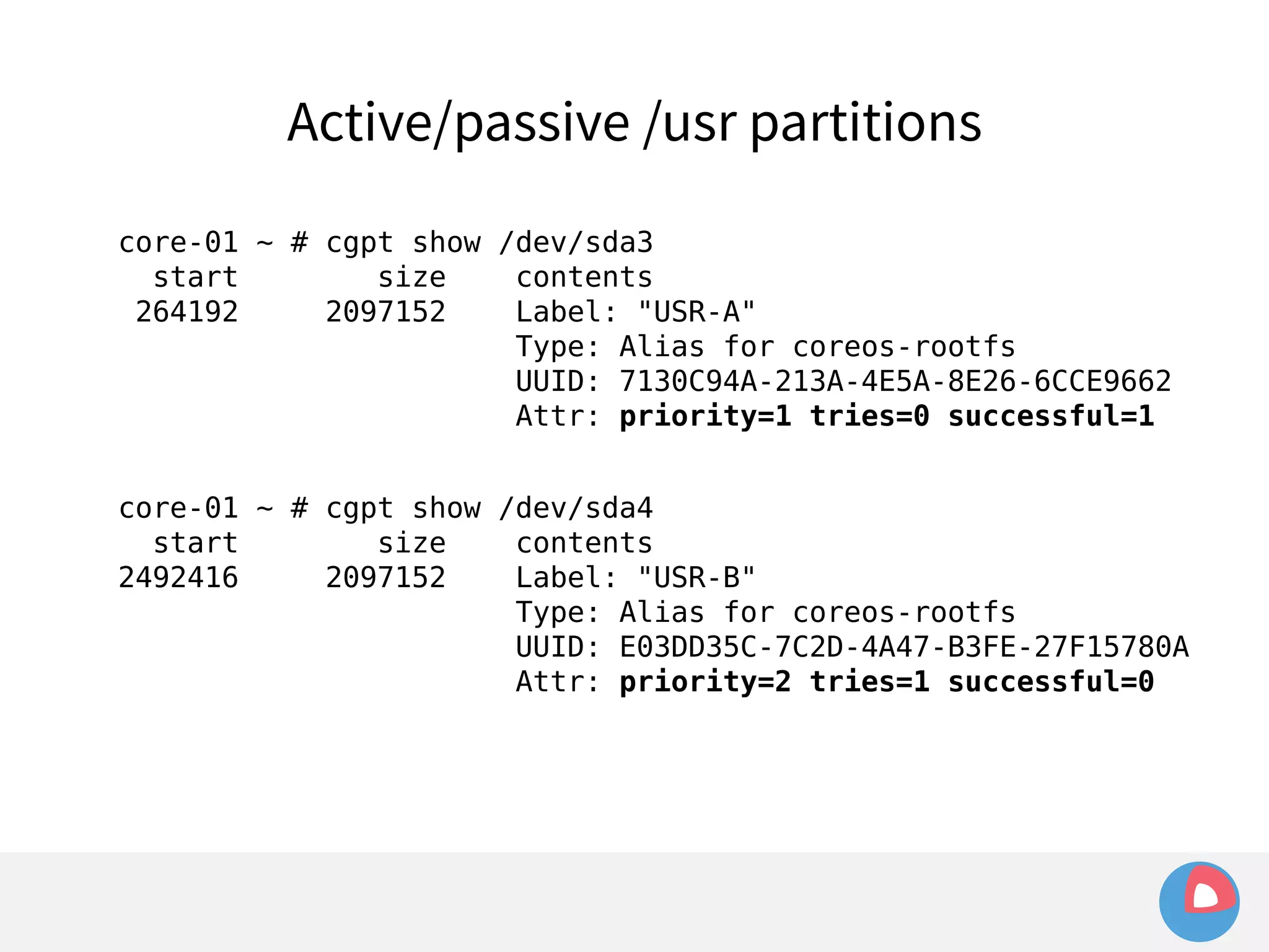

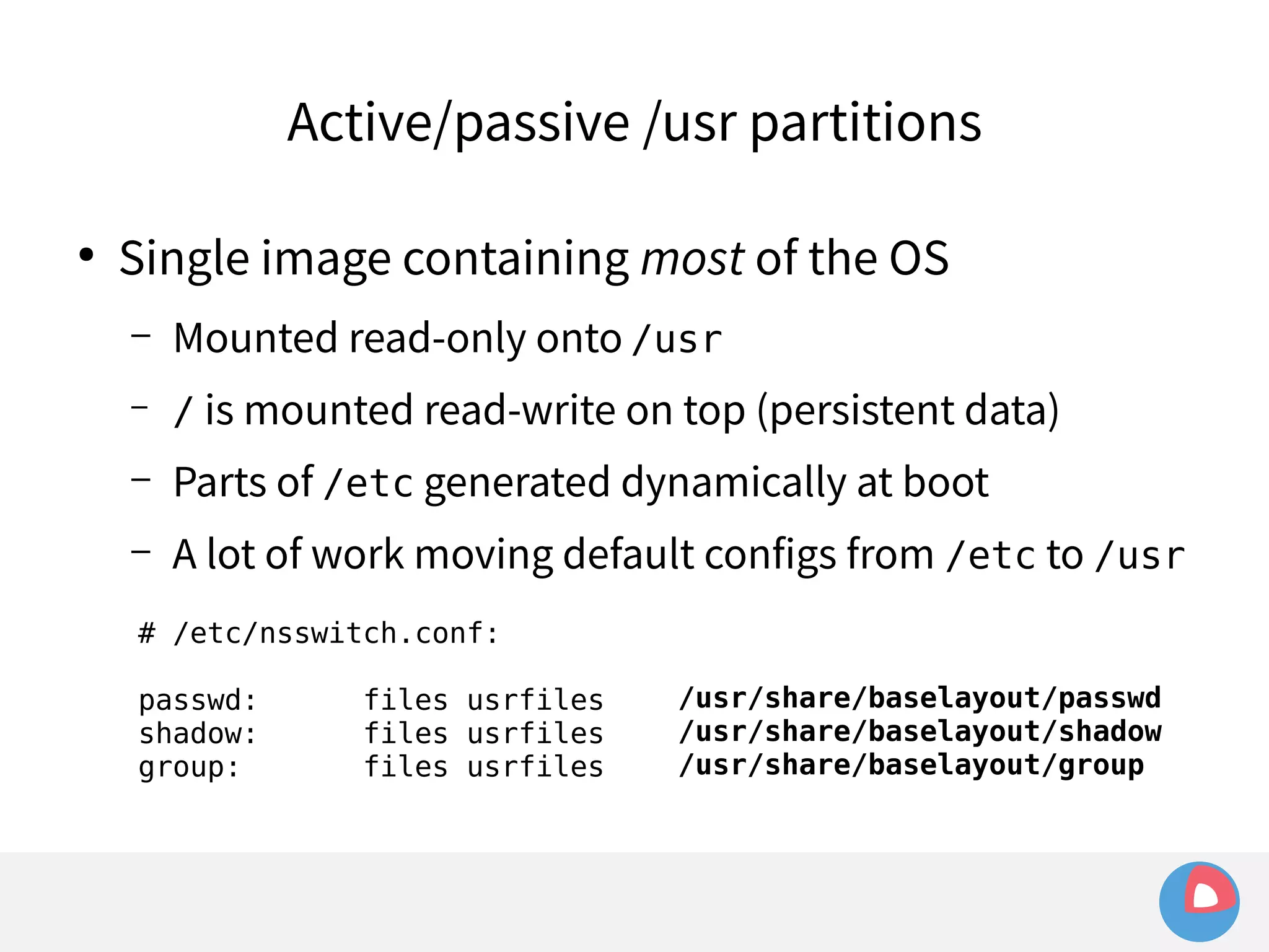

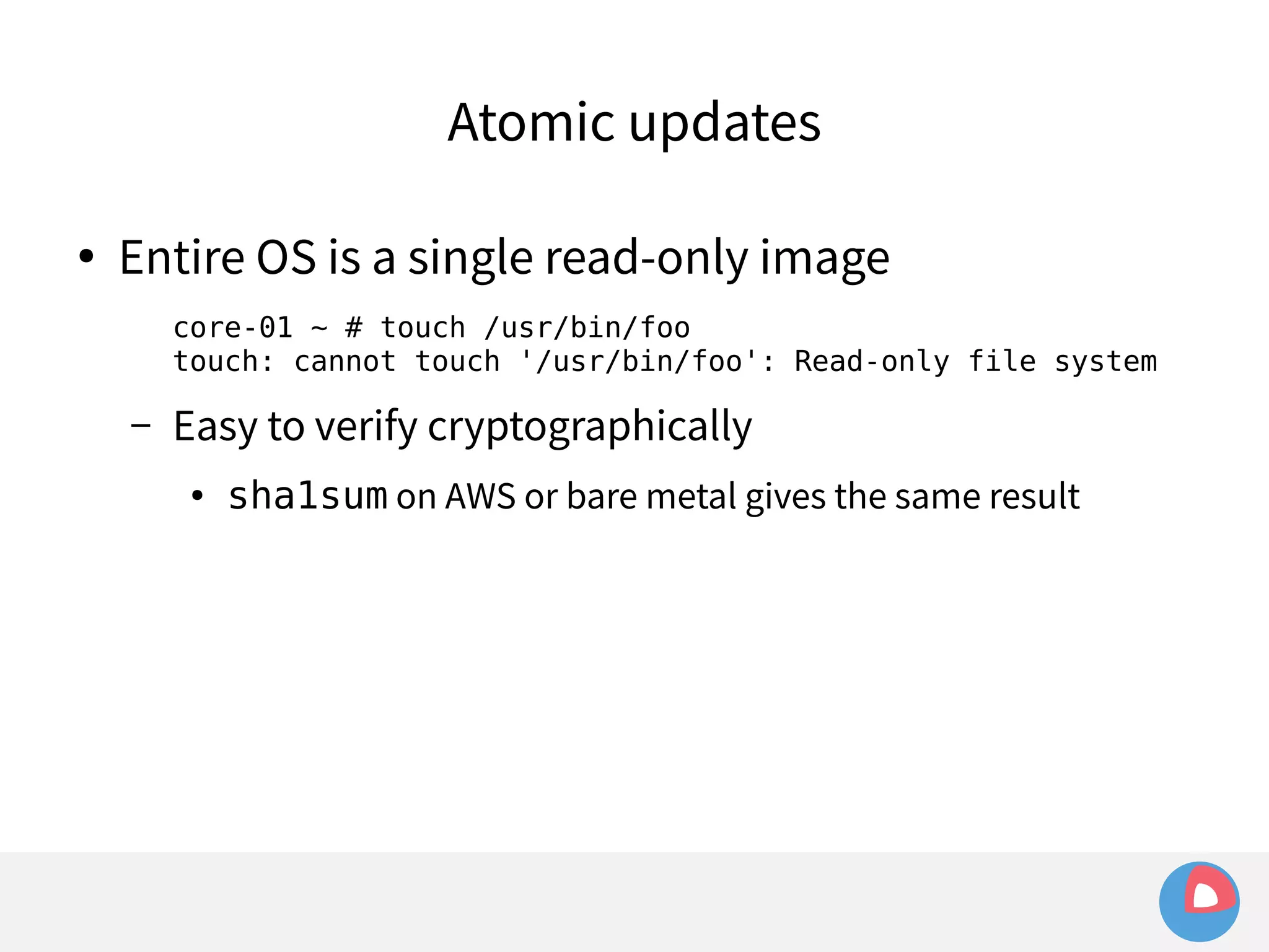

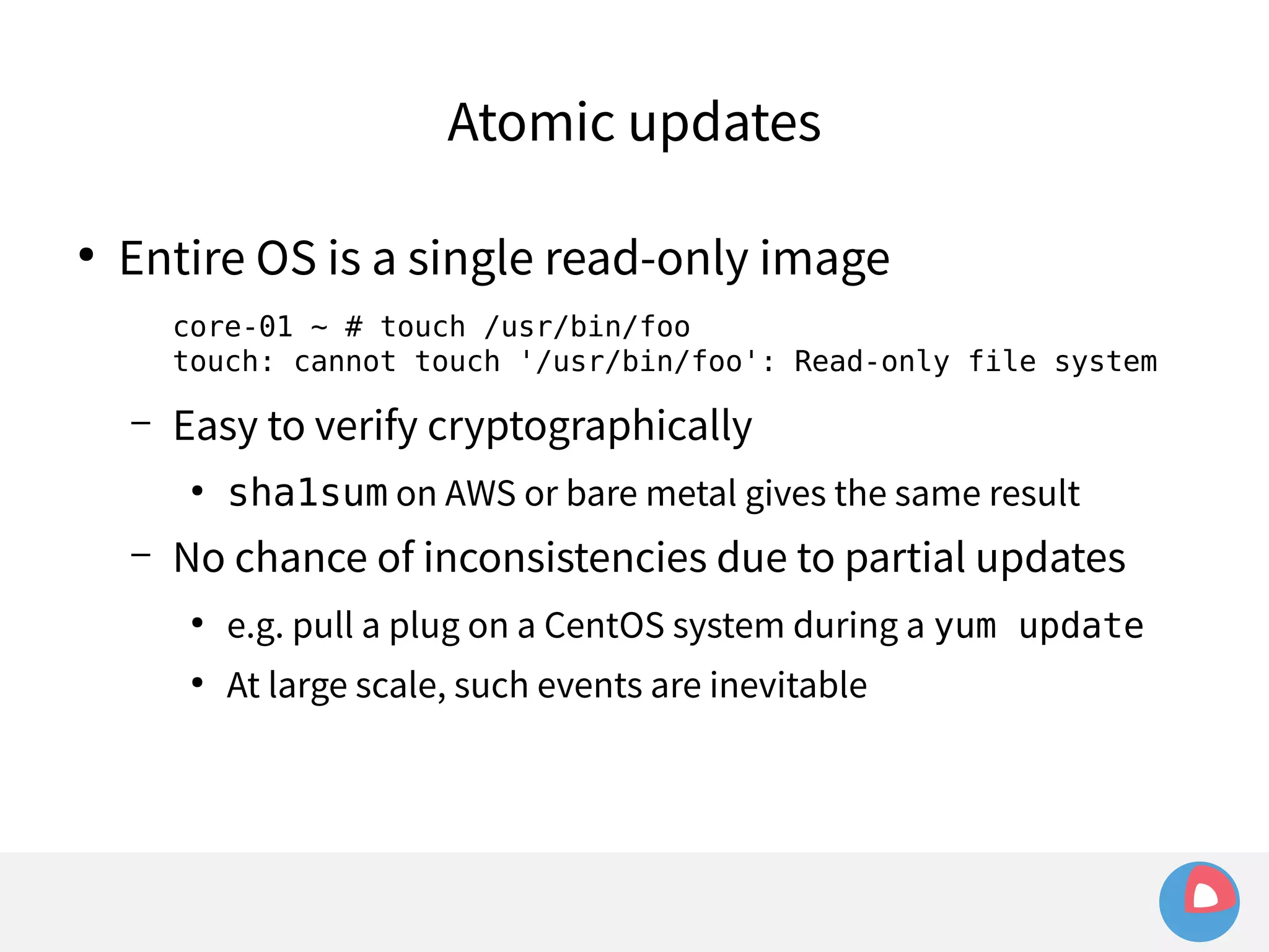

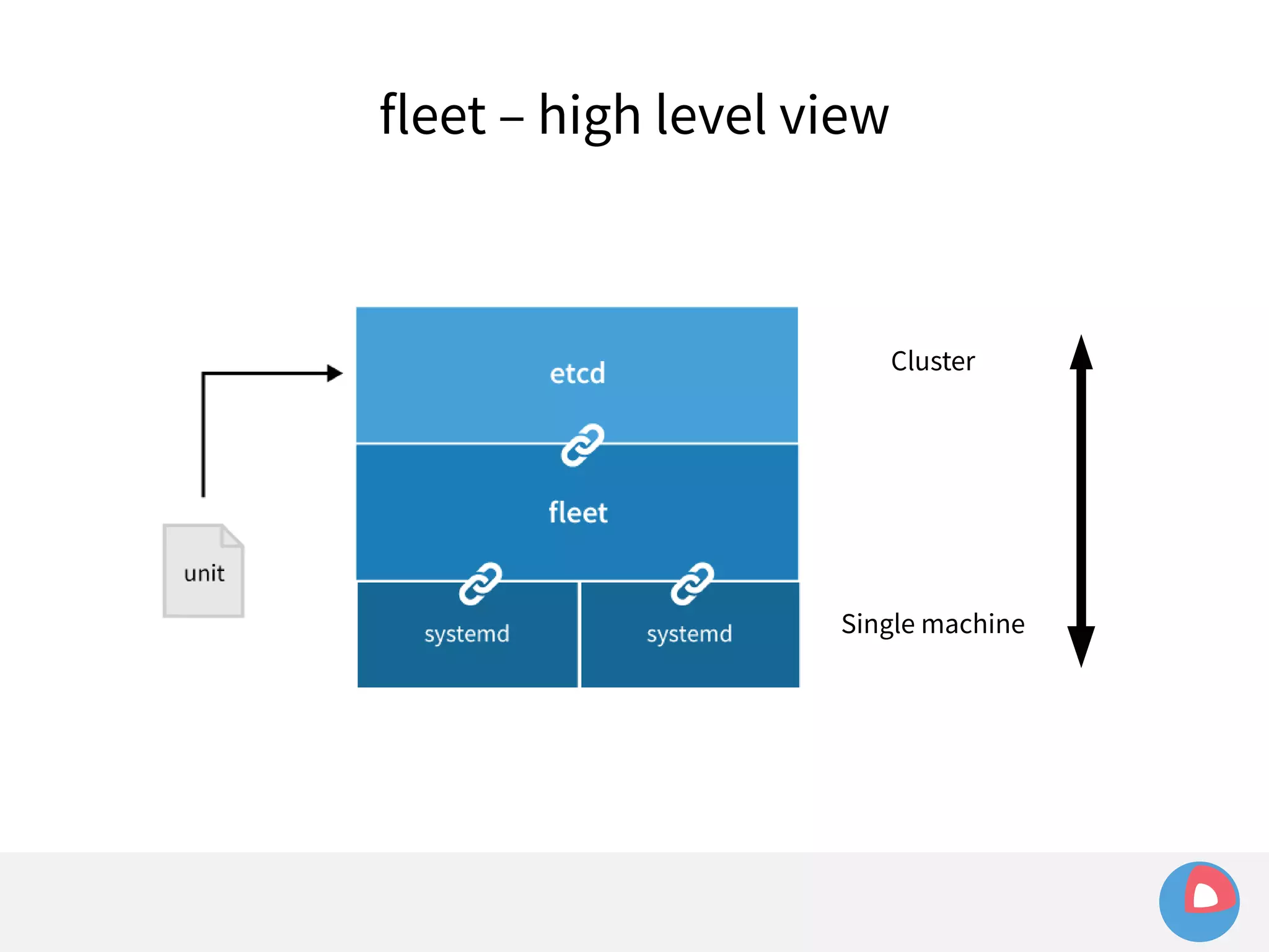

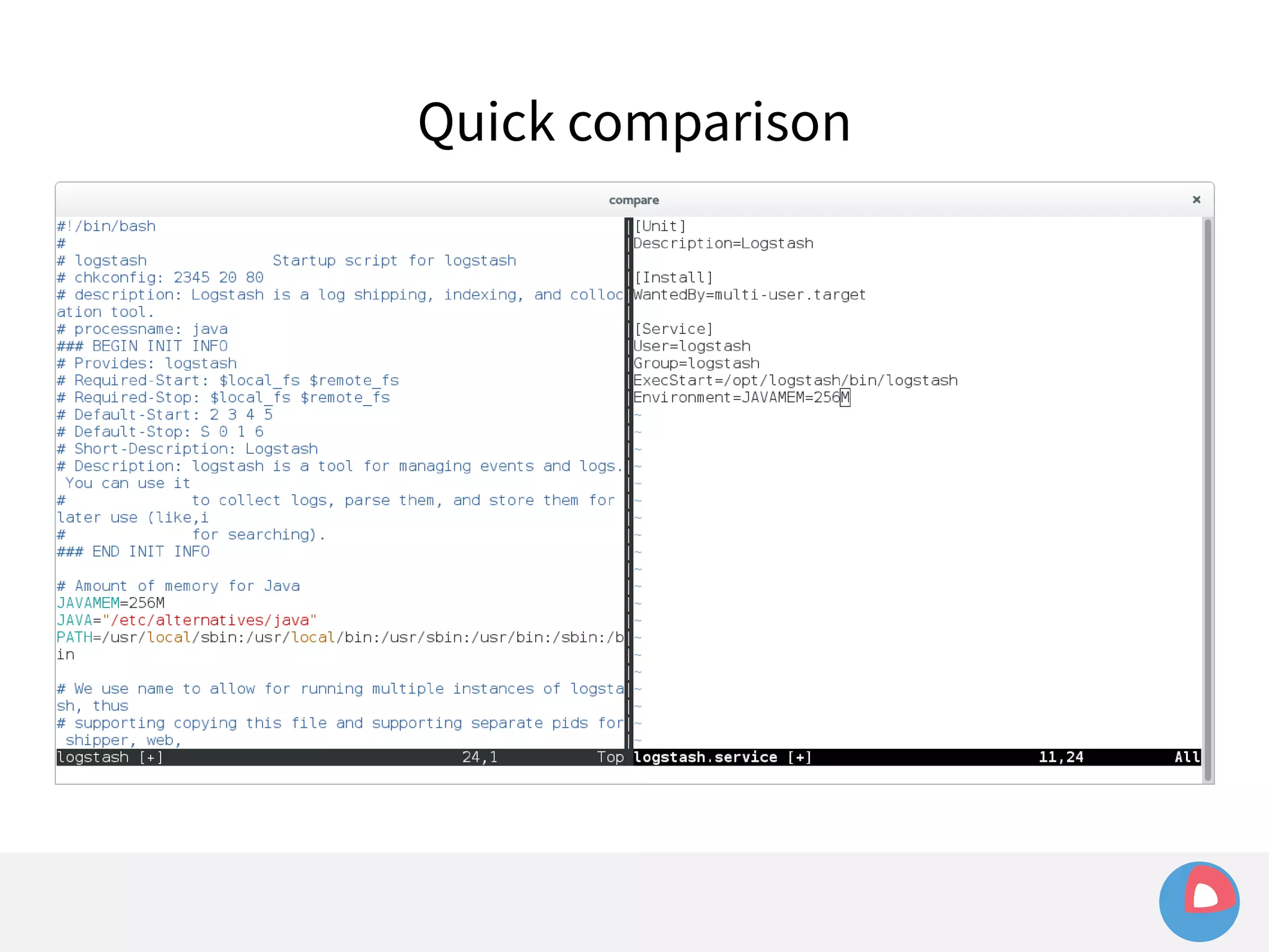

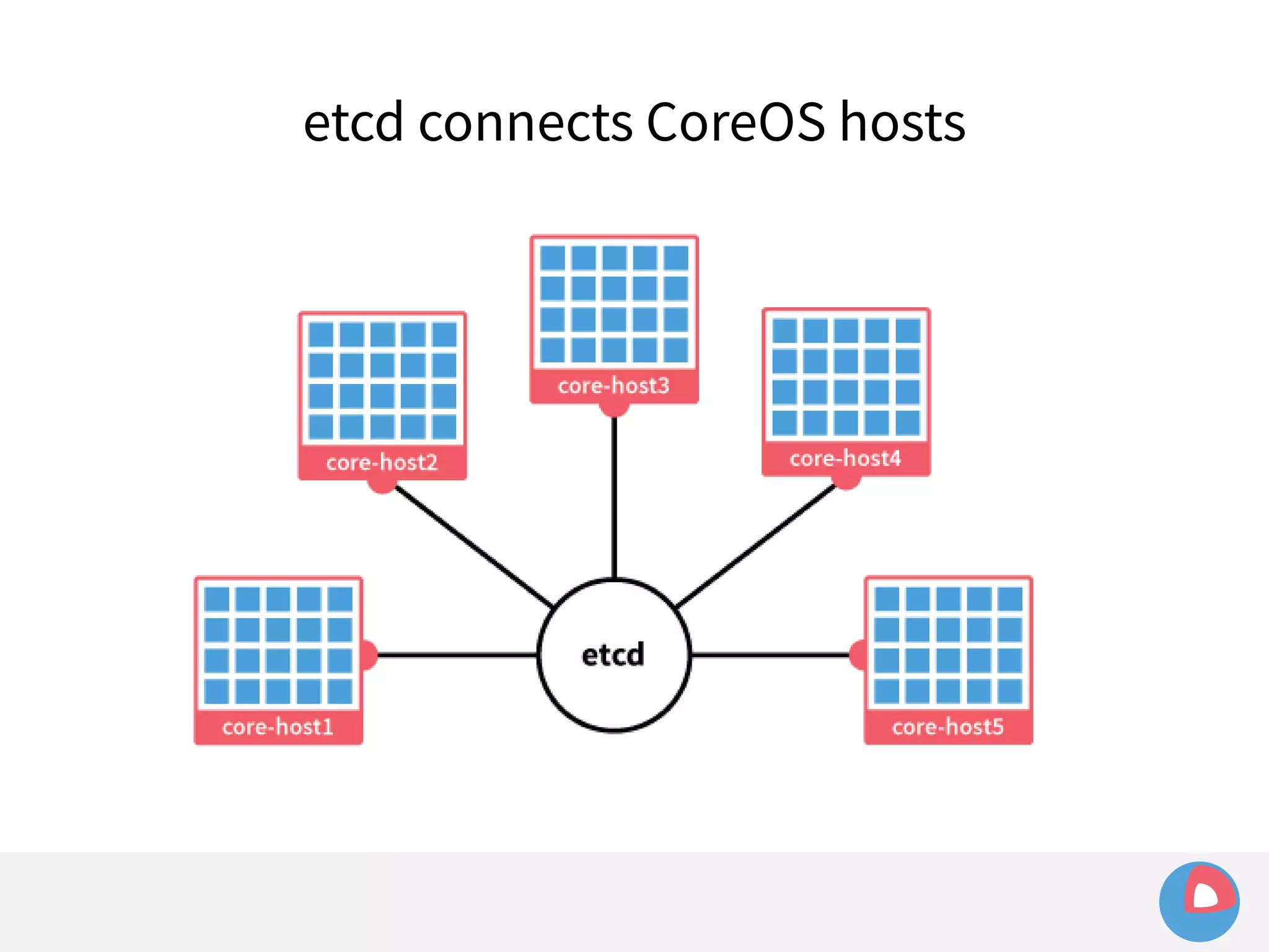

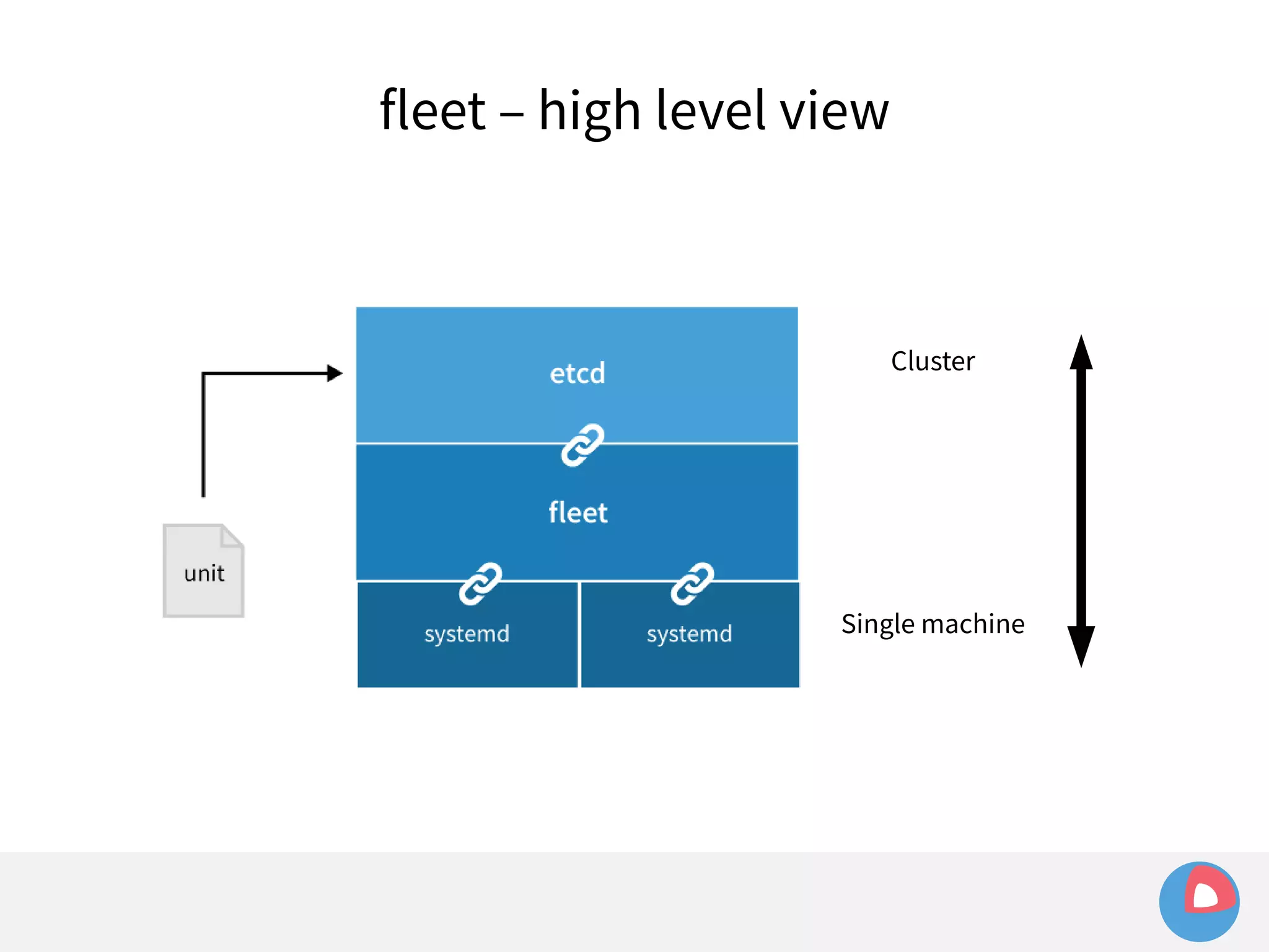

This document is a presentation on clustered computing with CoreOS, fleet and etcd, given by Jonathan Boulle of CoreOS. It begins by introducing the speaker and his background. The bulk of the presentation covers CoreOS Linux, its self-updating operating system design, and its use of application containers. It also discusses fleet, CoreOS's cluster management system, and how it keeps applications highly available while machines are updated, relying on CoreOS's atomic operating system updates with active/passive root filesystems.

![fleet + systemd](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-122-2048.jpg)

- systemd takes care of things so we don't have to
- fleet configuration is just systemd unit files
- fleet extends systemd to the cluster level, and adds some features of its own (using `[X-Fleet]`)
  - Template units (run n identical copies of a unit)
  - Global units (run a unit everywhere in the cluster)
  - Machine metadata (run only on certain machines)
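The three `[X-Fleet]` features can be illustrated with a small, self-contained model. This is a hypothetical sketch, not fleet's scheduler: the `Machine` and `UnitOptions` types and the `instances` and `schedule` functions are invented for illustration, loosely mirroring the real `Global=` and `MachineMetadata=` options and the systemd template naming convention (`web@.service` instantiated as `web@1.service`, `web@2.service`, ...). As simplifications, it treats every metadata requirement as mandatory and, for non-global units, takes the first eligible machine where fleet applies its own placement policy.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Machine is a hypothetical view of a cluster member and its metadata.
type Machine struct {
	ID       string
	Metadata map[string]string
}

// UnitOptions models a few [X-Fleet] settings; field names are illustrative.
type UnitOptions struct {
	Global          bool     // like Global=true
	MachineMetadata []string // like MachineMetadata=key=value, one entry each
}

// instances expands a template such as "web@.service" into n instance names.
// A name without "@" is not a template and is returned unchanged.
func instances(template string, n int) []string {
	prefix, suffix, ok := strings.Cut(template, "@")
	if !ok {
		return []string{template}
	}
	names := make([]string, 0, n)
	for i := 1; i <= n; i++ {
		names = append(names, fmt.Sprintf("%s@%d%s", prefix, i, suffix))
	}
	return names
}

// matches reports whether m satisfies every key=value requirement.
func matches(m Machine, reqs []string) bool {
	for _, r := range reqs {
		k, v, _ := strings.Cut(r, "=")
		if m.Metadata[k] != v {
			return false
		}
	}
	return true
}

// schedule returns the machines a unit would run on: every eligible machine
// for a Global unit, otherwise only the first eligible one (a placeholder
// for fleet's real scheduling policy).
func schedule(machines []Machine, opts UnitOptions) []string {
	var ids []string
	for _, m := range machines {
		if matches(m, opts.MachineMetadata) {
			ids = append(ids, m.ID)
		}
	}
	sort.Strings(ids)
	if !opts.Global && len(ids) > 1 {
		ids = ids[:1]
	}
	return ids
}

func main() {
	machines := []Machine{
		{ID: "m1", Metadata: map[string]string{"region": "us-east"}},
		{ID: "m2", Metadata: map[string]string{"region": "us-west"}},
		{ID: "m3", Metadata: map[string]string{"region": "us-east"}},
	}
	fmt.Println(instances("web@.service", 3))
	fmt.Println(schedule(machines, UnitOptions{Global: true}))
	fmt.Println(schedule(machines, UnitOptions{MachineMetadata: []string{"region=us-east"}}))
}
```

Running it prints three instance names, all three machines for the global unit, and only `m1` for the metadata-constrained unit.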

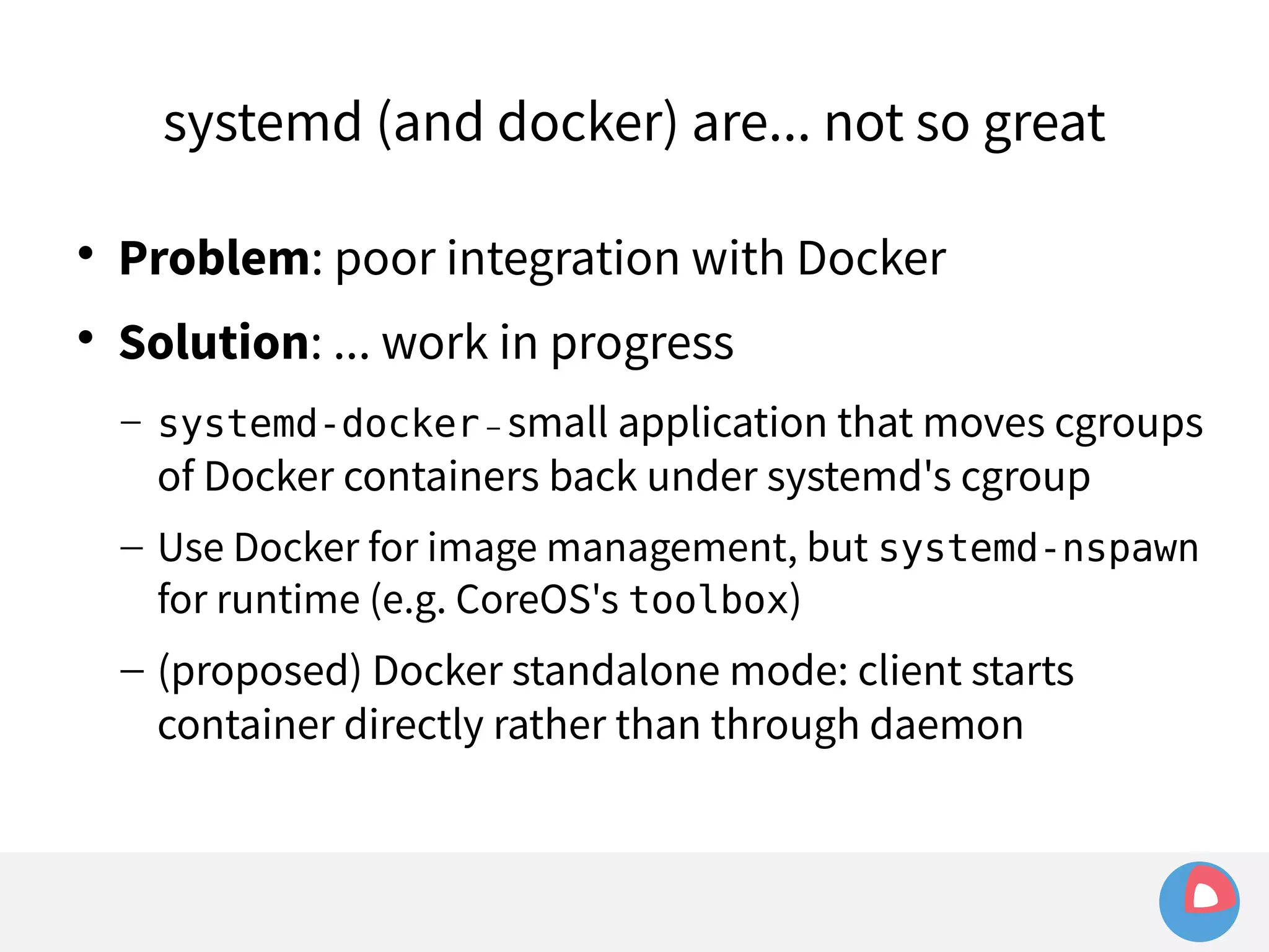

![systemd (and docker) are... not so great](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-146-2048.jpg)

Example: sending signals to a container

Given a simple container:

```ini
[Service]
ExecStart=/usr/bin/docker run busybox /bin/sh -c "while true; do echo Hello World; sleep 1; done"
```

- Try to kill it with `systemctl kill hello.service`.
- ... Nothing happens.
- `systemctl kill` sends SIGTERM, which `docker run` forwards into the container. There the shell runs as PID 1, and PID 1 ignores any signal it has not installed a handler for, so the SIGTERM is silently dropped.

![systemd (and docker) are... not so great](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-151-2048.jpg)

Example: sending signals to a container

OK, SIGTERM didn't work, so escalate to SIGKILL:

```
systemctl kill -s SIGKILL hello.service
```

Now the systemd service is gone:

```
hello.service: main process exited, code=killed, status=9/KILL
```

But... the Docker container still exists?

```console
# docker ps
CONTAINER ID   COMMAND                STATUS          NAMES
7c7cf8ffabb6   /bin/sh -c 'while tr   Up 31 seconds   hello
# ps -ef|grep '[d]ocker run'
root     24231     1  0 03:49 ?        00:00:00 /usr/bin/docker run -name hello ...
```

![systemd (and docker) are... not so great](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-157-2048.jpg)

```console
# systemctl cat hello.service
[Service]
ExecStart=/bin/bash -c 'while true; do echo Hello World; sleep 1; done'
# systemd-cgls
...
├─hello.service
│ ├─23201 /bin/bash -c while true; do echo Hello World; sleep 1; done
│ └─24023 sleep 1
```

![systemd (and docker) are... not so great](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-158-2048.jpg)

```console
# systemctl cat hello.service
[Service]
ExecStart=/usr/bin/docker run -name hello busybox /bin/sh -c "while true; do echo Hello World; sleep 1; done"
# systemd-cgls
...
│ ├─hello.service
│ │ └─24231 /usr/bin/docker run -name hello busybox /bin/sh -c while true; do echo Hello World; sleep 1; done
...
│ ├─docker-51a57463047b65487ec80a1dc8b8c9ea14a396c7a49c1e23919d50bdafd4fefb.scope
│ │ ├─24240 /bin/sh -c while true; do echo Hello World; sleep 1; done
│ │ └─24553 sleep 1
```
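The two `systemd-cgls` listings show the root cause: with plain bash, every process sits inside `hello.service`; with Docker, only the `docker run` client is in `hello.service`, while the container's processes live in a separate `docker-<id>.scope`, so signals aimed at the service never reach them. A hypothetical helper that reads `/proc/<pid>/cgroup` can show which unit a given process belongs to. It is a sketch that assumes systemd's cgroup layout, checking the cgroup v1 `name=systemd` hierarchy first and falling back to the cgroup v2 unified `0::` entry.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"path"
	"strings"
)

// unitFromCgroup scans the contents of a /proc/<pid>/cgroup file and returns
// the last path element of the systemd-managed cgroup, for example
// "hello.service" or "docker-<id>.scope".
func unitFromCgroup(contents string) (string, bool) {
	var fallback string
	sc := bufio.NewScanner(strings.NewReader(contents))
	for sc.Scan() {
		// Each line has the form hierarchy-ID:controller-list:cgroup-path.
		parts := strings.SplitN(sc.Text(), ":", 3)
		if len(parts) != 3 {
			continue
		}
		unit := path.Base(parts[2])
		if !strings.HasSuffix(unit, ".service") && !strings.HasSuffix(unit, ".scope") {
			continue
		}
		if parts[1] == "name=systemd" { // cgroup v1 systemd hierarchy
			return unit, true
		}
		if parts[0] == "0" && parts[1] == "" { // cgroup v2 unified hierarchy
			fallback = unit
		}
	}
	return fallback, fallback != ""
}

func main() {
	data, err := os.ReadFile("/proc/self/cgroup")
	if err != nil {
		fmt.Println("cannot read cgroup file:", err)
		return
	}
	if unit, ok := unitFromCgroup(string(data)); ok {
		fmt.Println("running in", unit)
	} else {
		fmt.Println("not inside a systemd service or scope")
	}
}
```

Pointed at PID 24231 and PID 24240 from the listing above, the same check would report two different units.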

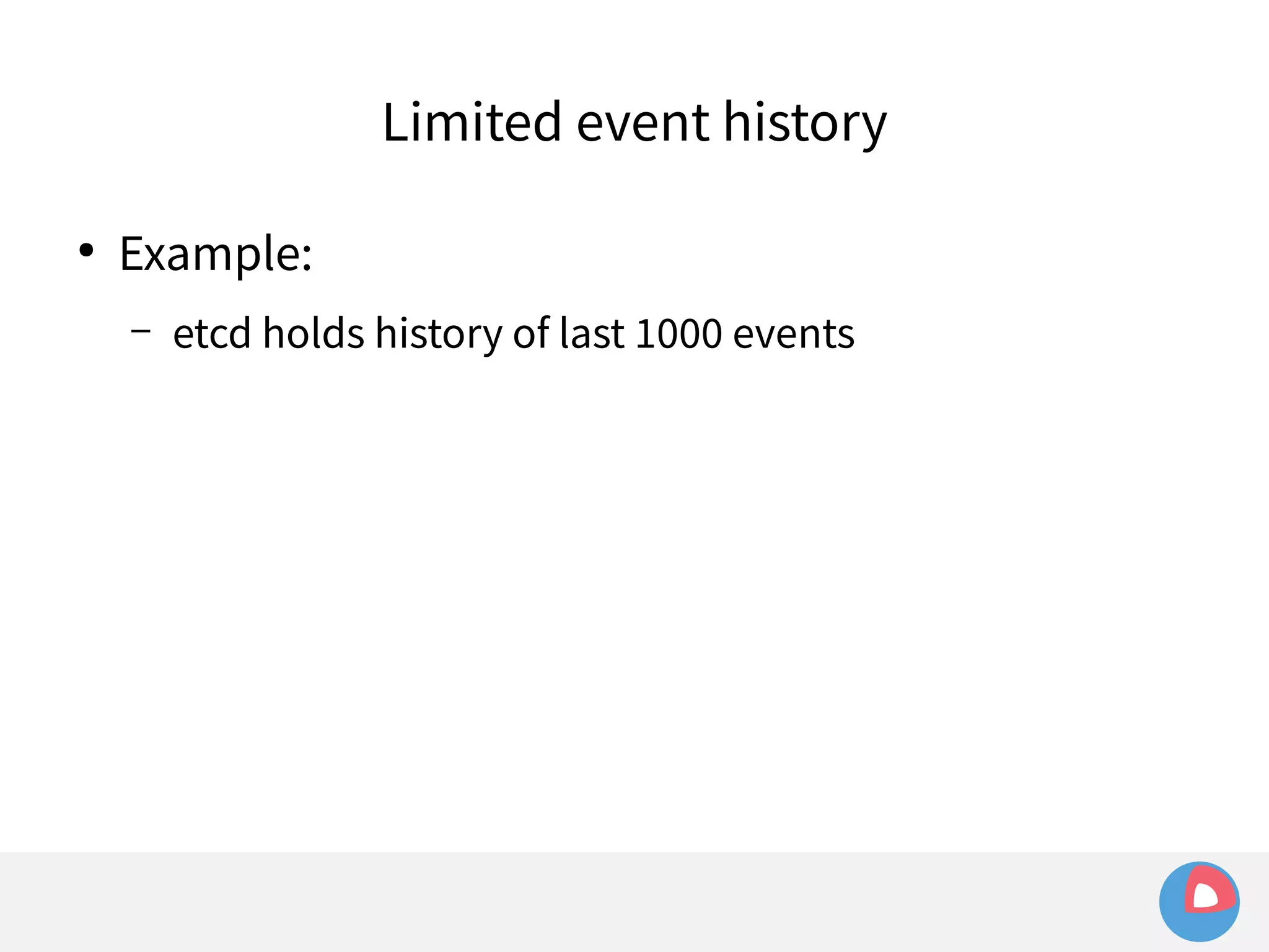

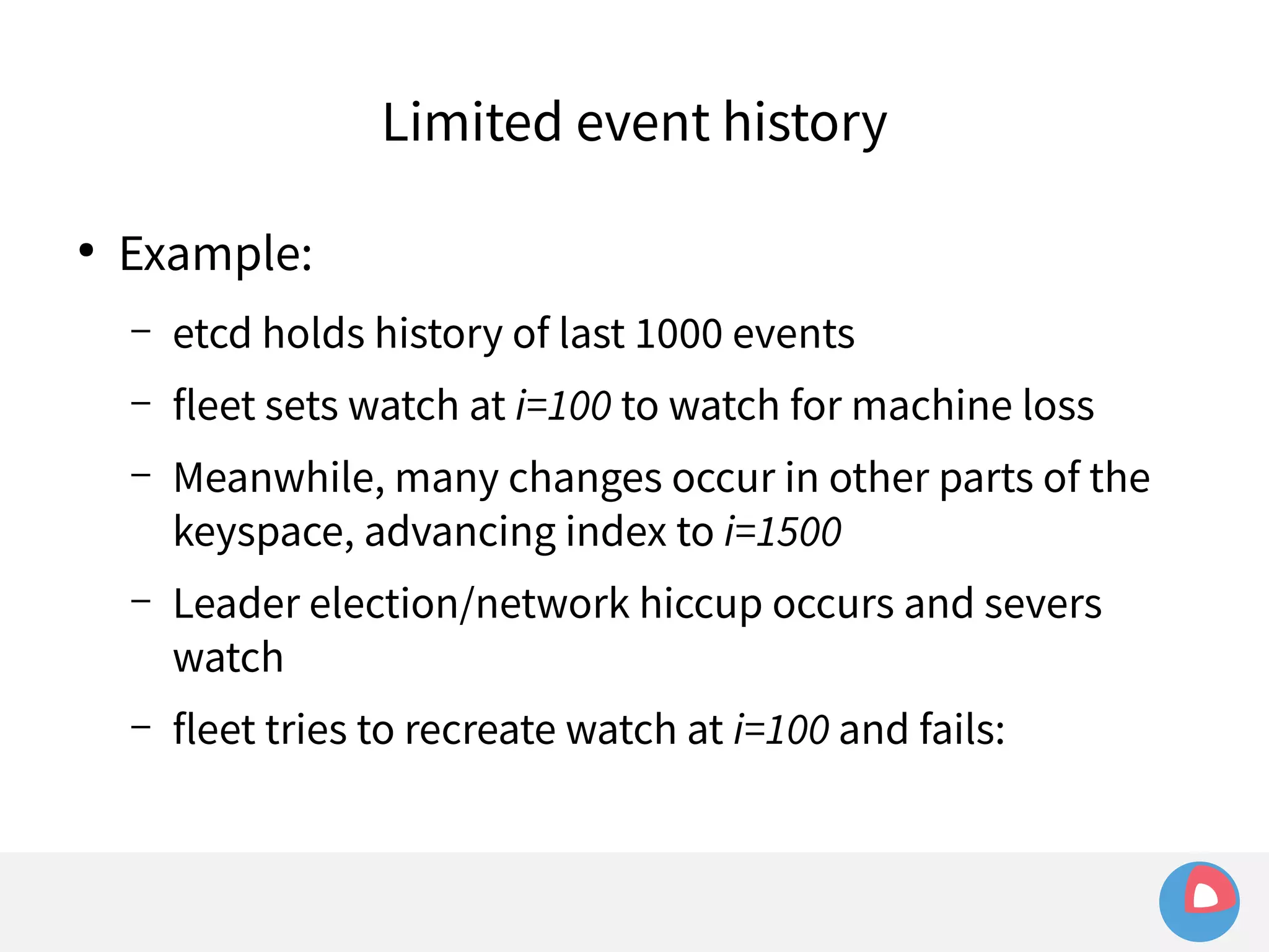

![Example: limited event history](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-214-2048.jpg)

- etcd holds a history of the last 1000 events
- fleet sets a watch at i=100 to watch for machine loss
- Meanwhile, many changes occur in other parts of the keyspace, advancing the index to i=1500
- A leader election or network hiccup occurs and severs the watch
- fleet tries to recreate the watch at i=100 and fails:

```
err="401: The event in requested index is outdated and cleared (the requested history has been cleared [1500/100])"
```

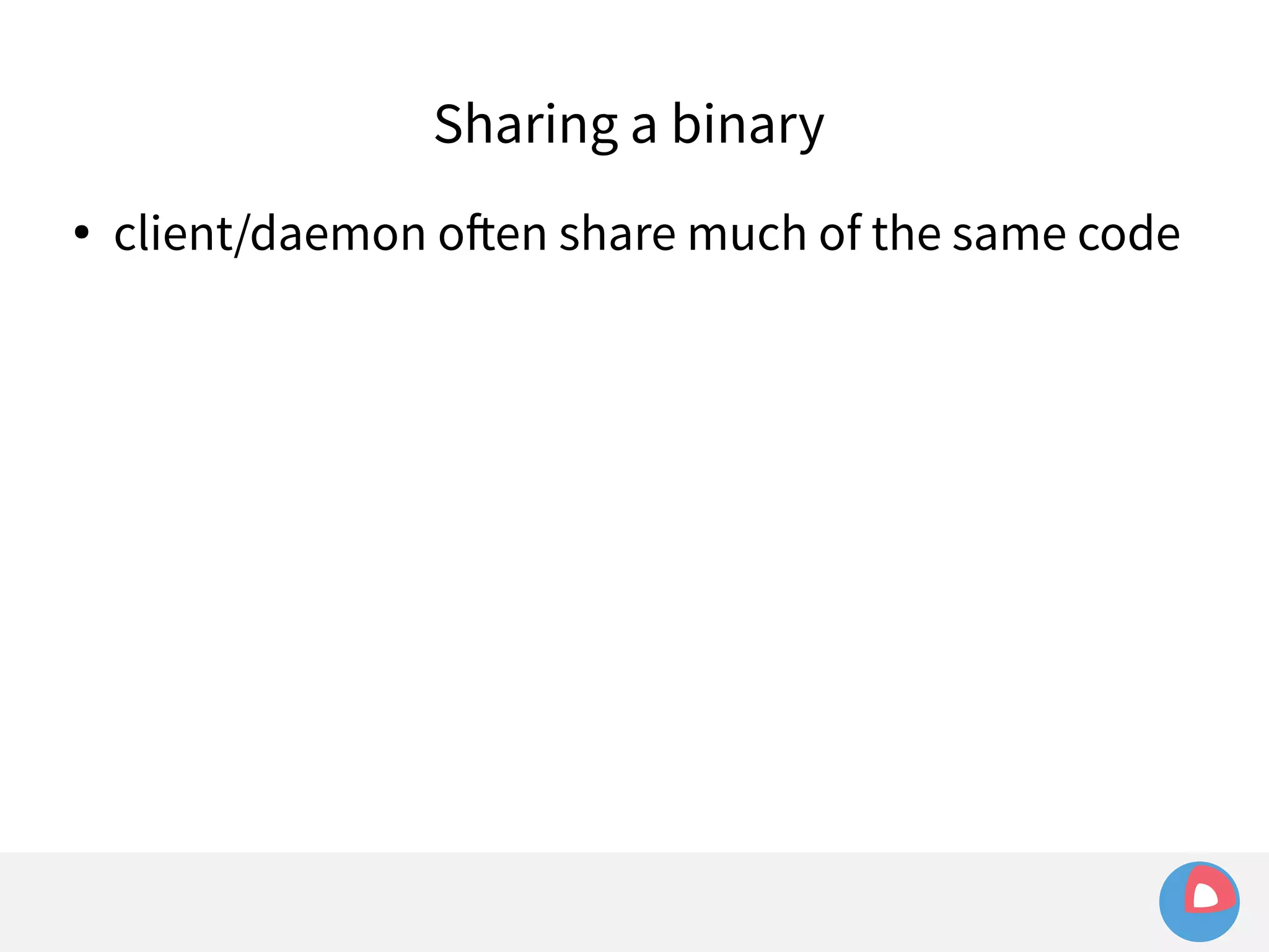

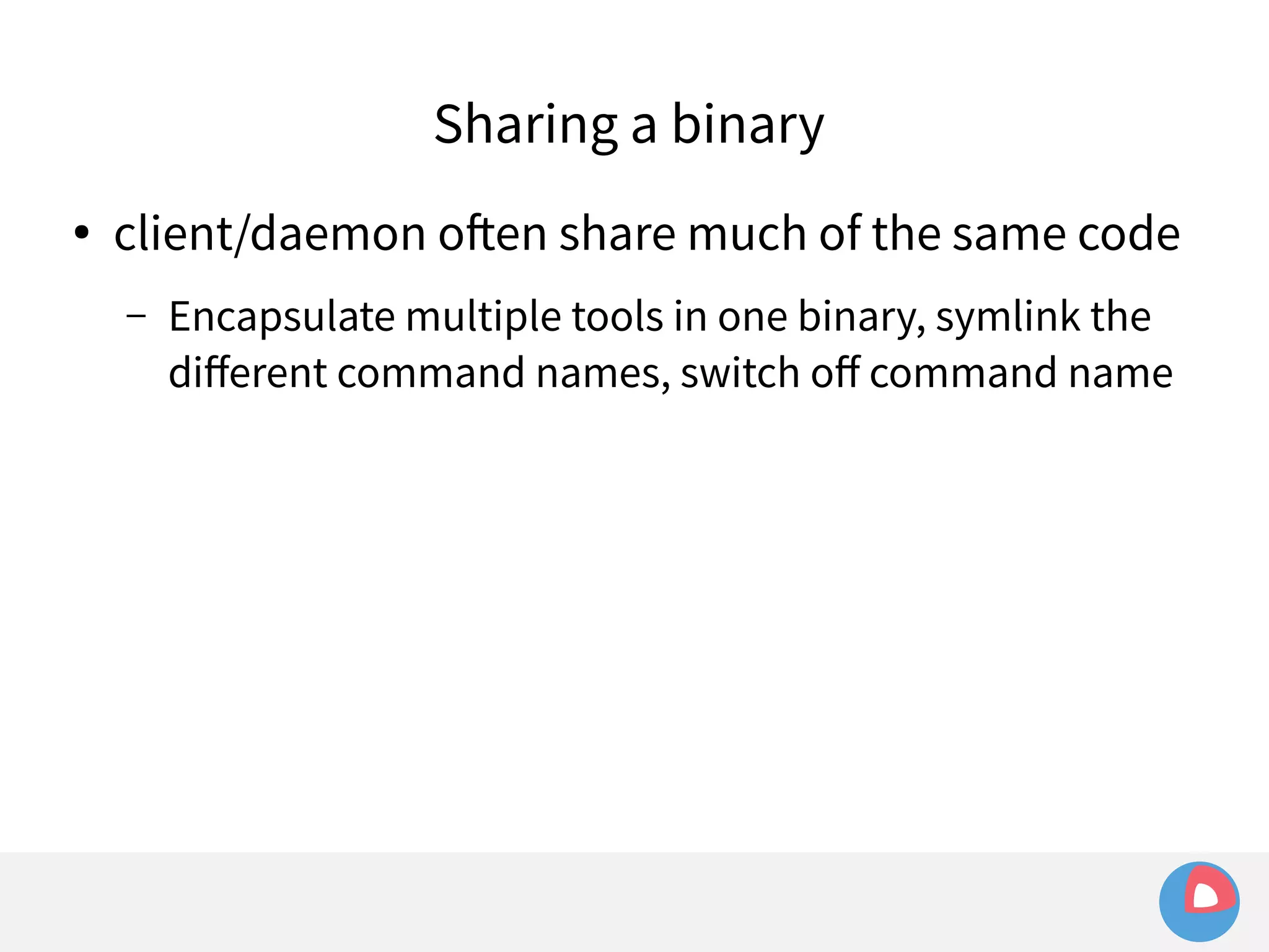

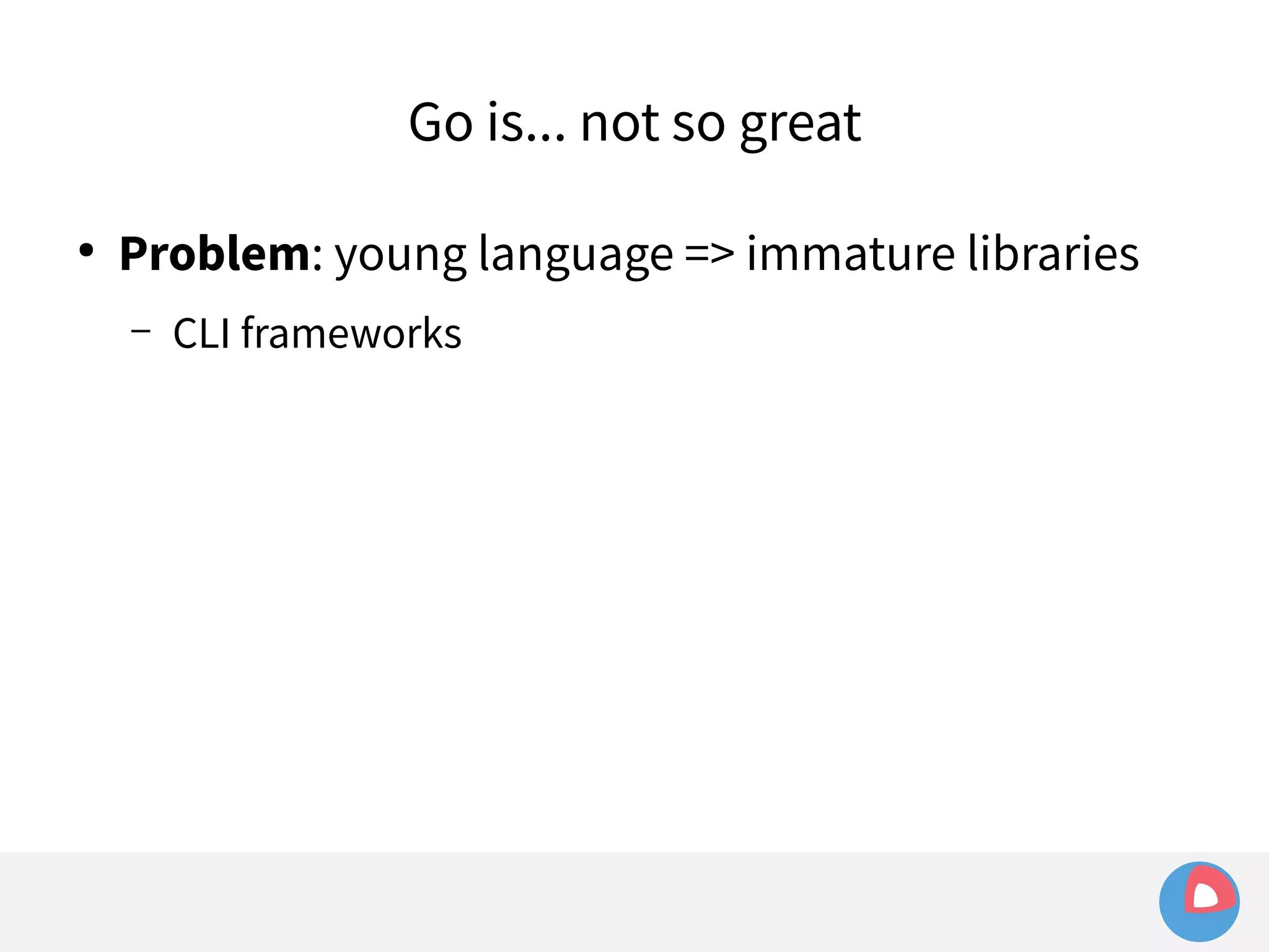

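![Sharing a binary](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-270-2048.jpg)

- client/daemon often share much of the same code
  - Encapsulate multiple tools in one binary, symlink the different command names, and switch on the command name
  - Example: fleetd/fleetctl

```go
package main

import (
	"os"
	"path/filepath"
)

func main() {
	// os.Args[0] may be a full path (e.g. /usr/bin/fleetd), so match on the base name.
	switch filepath.Base(os.Args[0]) {
	case "fleetctl":
		Fleetctl() // entry points defined elsewhere in the program
	case "fleetd":
		Fleetd()
	}
}
```

Binary sizes in bytes:

|        | fleetctl | fleetd                           |
|--------|----------|----------------------------------|
| Before | 9150032  | 8567416                          |
| After  | 11052256 | 8 (symlink: fleetd -> fleetctl)  |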
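Read as byte counts, the slide's numbers show the trade: two binaries totalling 17,717,448 bytes (9,150,032 + 8,567,416) become one 11,052,256-byte binary plus an 8-byte symlink, a saving of 6,665,184 bytes. Below is a runnable sketch of the same multi-call pattern; `runClient`, `runDaemon`, and `dispatch` are hypothetical stand-ins for fleet's real entry points, split out so the dispatch logic can be tested without touching `os.Args`.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// Hypothetical stand-ins for the real client and daemon entry points.
func runClient(args []string) int { fmt.Println("client:", args); return 0 }
func runDaemon(args []string) int { fmt.Println("daemon:", args); return 0 }

// dispatch chooses an entry point from the name the binary was invoked as.
func dispatch(argv0 string, args []string) int {
	switch filepath.Base(argv0) {
	case "fleetctl":
		return runClient(args)
	case "fleetd":
		return runDaemon(args)
	default:
		fmt.Fprintf(os.Stderr, "unknown command name %q\n", argv0)
		return 2
	}
}

func main() {
	os.Exit(dispatch(os.Args[0], os.Args[1:]))
}
```

To try it, build once with `go build -o fleetctl`, then create the second name with `ln -s fleetctl fleetd`; invoking either name runs the matching entry point.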

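![fleetctl CLI](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-278-2048.jpg)

```go
package main

import "flag"

// Command describes one fleetctl subcommand.
type Command struct {
	Name        string                  // subcommand name as typed on the command line
	Summary     string                  // one-line description
	Usage       string                  // argument synopsis
	Description string                  // longer help text
	Flags       flag.FlagSet            // flags specific to this subcommand
	Run         func(args []string) int // entry point; returns the exit status
}
```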
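A struct like this lets the CLI keep a table of subcommands and dispatch to them generically. The sketch below shows one plausible way to do that; the `echo` command, its `--upper` flag, and the `runCommand` helper are hypothetical and are not part of fleetctl.

```go
package main

import (
	"flag"
	"fmt"
	"os"
	"strings"
)

// Command mirrors the struct on the slide.
type Command struct {
	Name        string
	Summary     string
	Usage       string
	Description string
	Flags       flag.FlagSet
	Run         func(args []string) int
}

// upper backs the hypothetical echo command's --upper flag.
var upper bool

// cmdEcho is a hypothetical example command, not part of fleetctl.
var cmdEcho = &Command{
	Name:    "echo",
	Summary: "Print the given words",
	Usage:   "[--upper] WORD...",
	Run: func(args []string) int {
		out := strings.Join(args, " ")
		if upper {
			out = strings.ToUpper(out)
		}
		fmt.Println(out)
		return 0
	},
}

// commands is the table the dispatcher searches.
var commands = []*Command{cmdEcho}

func init() {
	// The zero FlagSet is usable and defaults to ContinueOnError.
	cmdEcho.Flags.BoolVar(&upper, "upper", false, "print in upper case")
}

// runCommand looks up args[0], parses that command's own flags, and runs it,
// returning the exit status.
func runCommand(args []string) int {
	if len(args) == 0 {
		fmt.Fprintln(os.Stderr, "usage: COMMAND [ARGS...]")
		return 2
	}
	for _, c := range commands {
		if c.Name != args[0] {
			continue
		}
		if err := c.Flags.Parse(args[1:]); err != nil {
			return 2
		}
		return c.Run(c.Flags.Args())
	}
	fmt.Fprintf(os.Stderr, "unknown command %q\n", args[0])
	return 2
}

func main() {
	os.Exit(runCommand(os.Args[1:]))
}
```

Giving each command its own `FlagSet` keeps flags scoped to the subcommand that defines them, so adding a command means adding one table entry rather than touching a shared parser.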

![Wrap up/recap](https://image.slidesharecdn.com/2c4clusteredcomputingwithcoreosfleetandetcd-140929235711-phpapp01/75/2C4-Clustered-computing-with-CoreOS-fleet-and-etcd-286-2048.jpg)

- CoreOS Linux
  - Minimal OS with cluster capabilities built-in
  - Containerized applications --> a[u]tom[at]ic updates
- fleet
  - Simple, powerful cluster-level application manager
  - Glue between local init system (systemd) and cluster-level awareness (etcd)
  - golang++