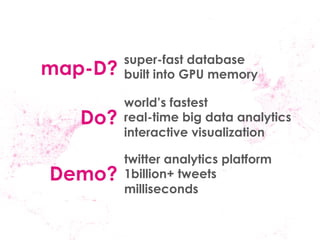

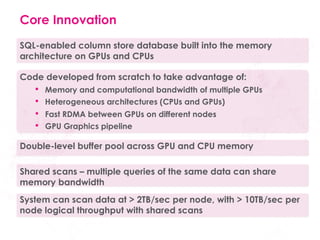

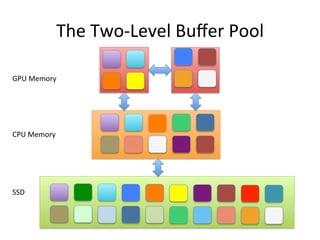

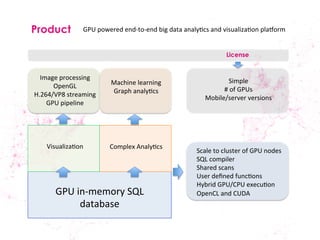

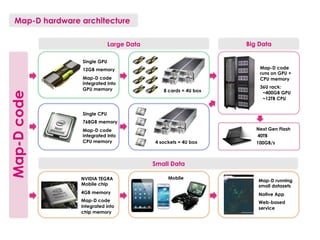

Map-D is a SQL-enabled columnar database that keeps its data in GPU memory to support real-time analytics and interactive visualization of big data. It is optimized to take advantage of GPU memory bandwidth and computational power. By leveraging the parallel processing capabilities of GPUs, Map-D can scan data at over 2 TB/s per node and answer queries over more than 1 billion records within milliseconds, which makes interactive analysis of large datasets practical.
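As a rough sanity check on how the scan rate and the latency claim fit together, the following back-of-envelope sketch estimates how long it takes to stream one column of a billion rows at the quoted rate. The 4-byte value width, the single-column query, and the decimal definition of a terabyte (10^12 bytes) are assumptions made for illustration, not figures from Map-D; `scan_time_ms` is a hypothetical helper, not part of any Map-D API.

```python
def scan_time_ms(num_rows: int, bytes_per_value: int, bandwidth_tb_per_s: float) -> float:
    """Estimate the time (in ms) to stream one column once at a given scan rate.

    Assumes a pure bandwidth-bound scan: no compression, no filtering cost,
    and 1 TB = 10**12 bytes.
    """
    column_bytes = num_rows * bytes_per_value
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12
    return column_bytes / bandwidth_bytes_per_s * 1e3


if __name__ == "__main__":
    # Assumed workload: 1 billion rows, one 4-byte column, 2 TB/s scan rate.
    estimate = scan_time_ms(1_000_000_000, 4, 2.0)
    print(f"Estimated scan time: {estimate:.1f} ms")
```

Under these assumptions a single 4 GB column streams in about 2 ms, which is consistent with the "within milliseconds" claim. Queries that touch several columns or wider values scale the estimate proportionally.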