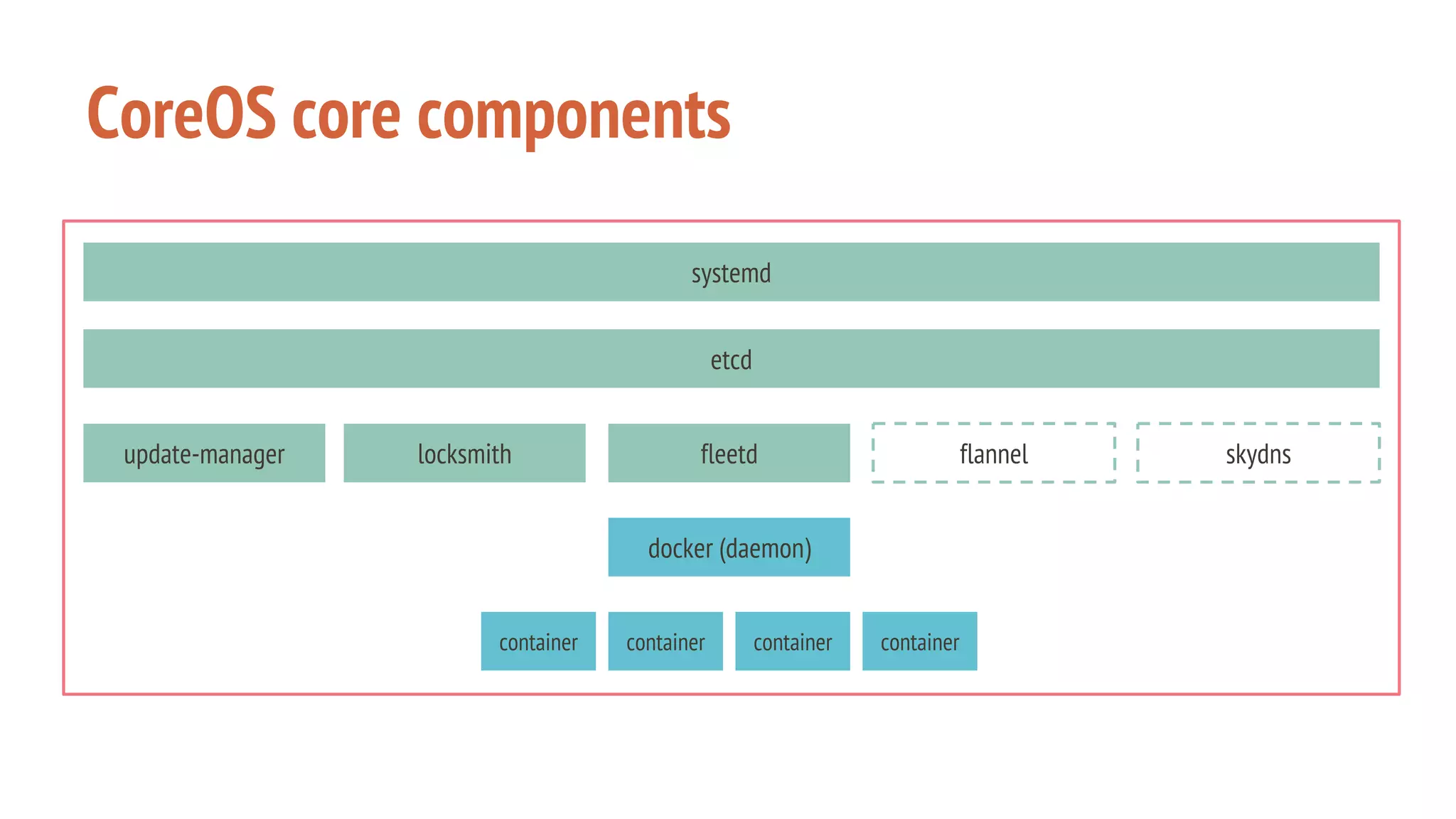

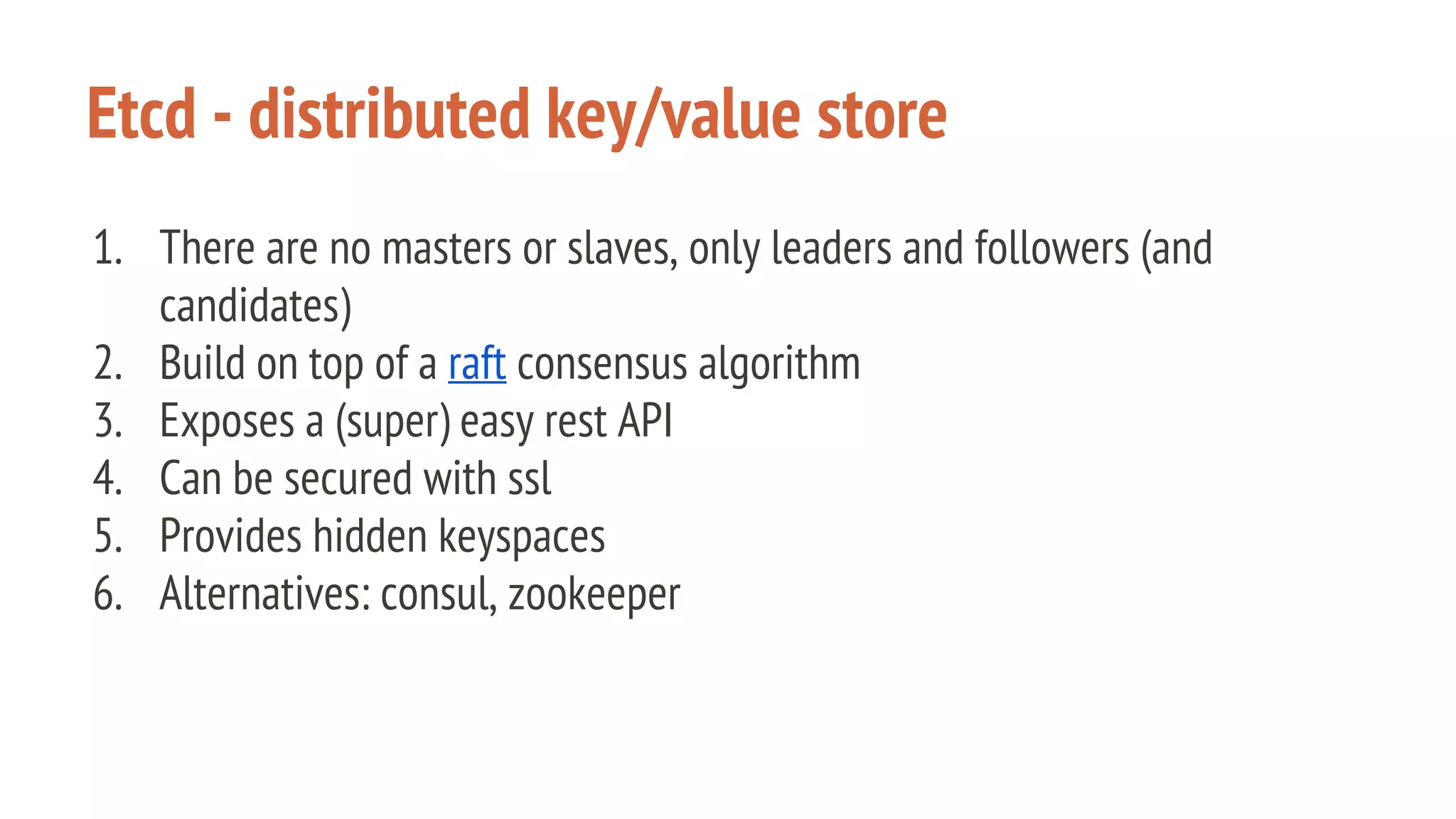

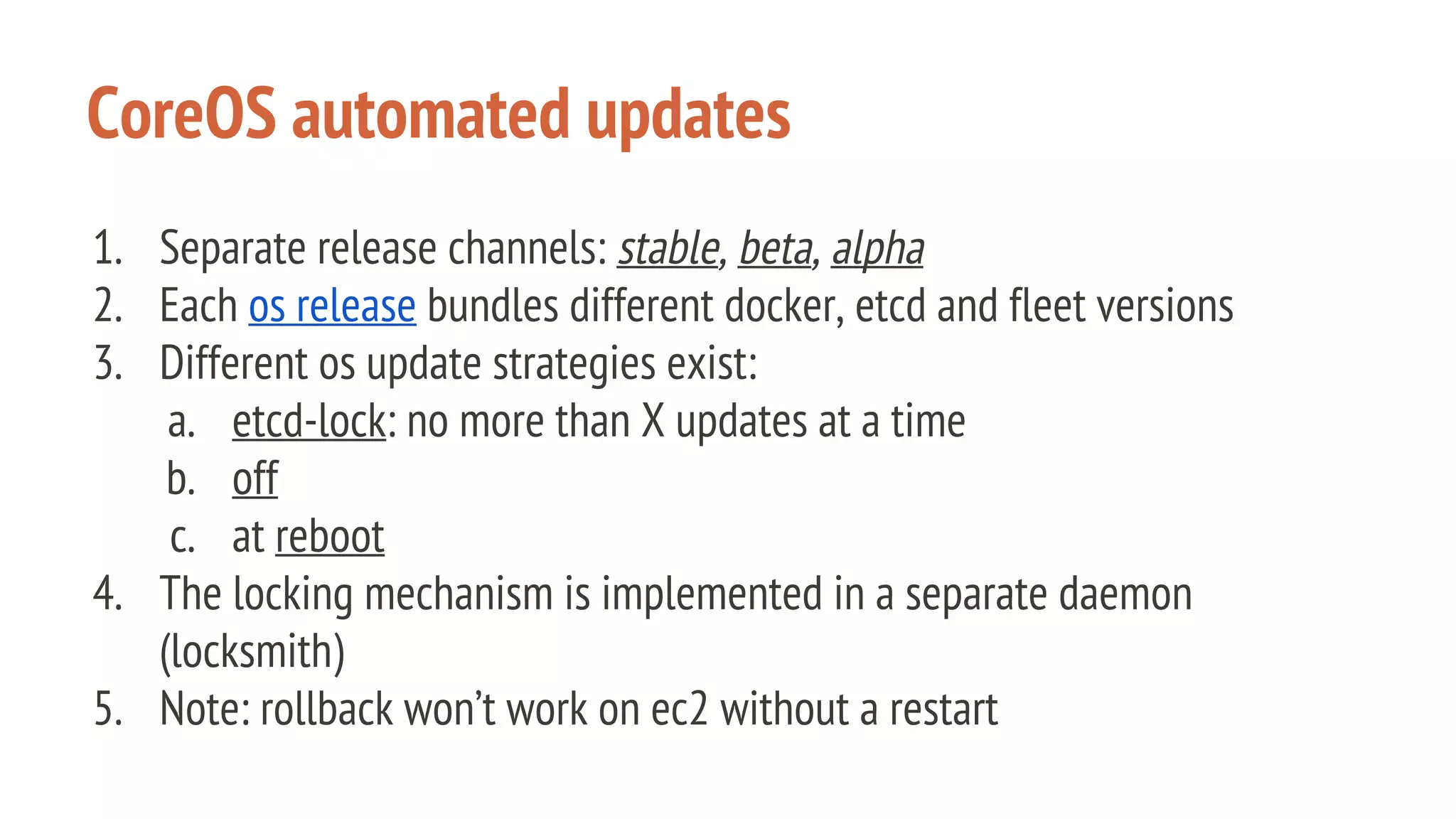

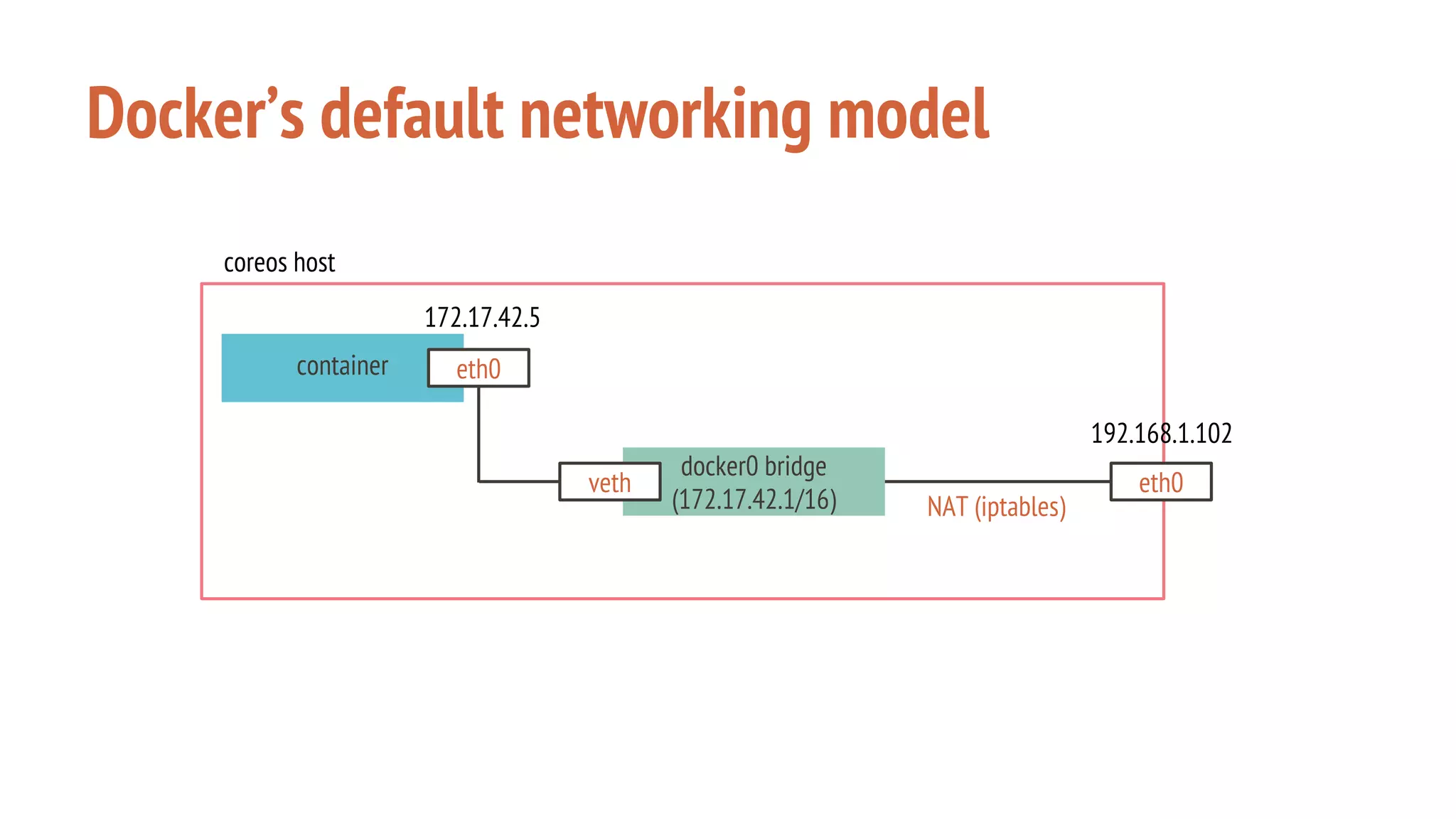

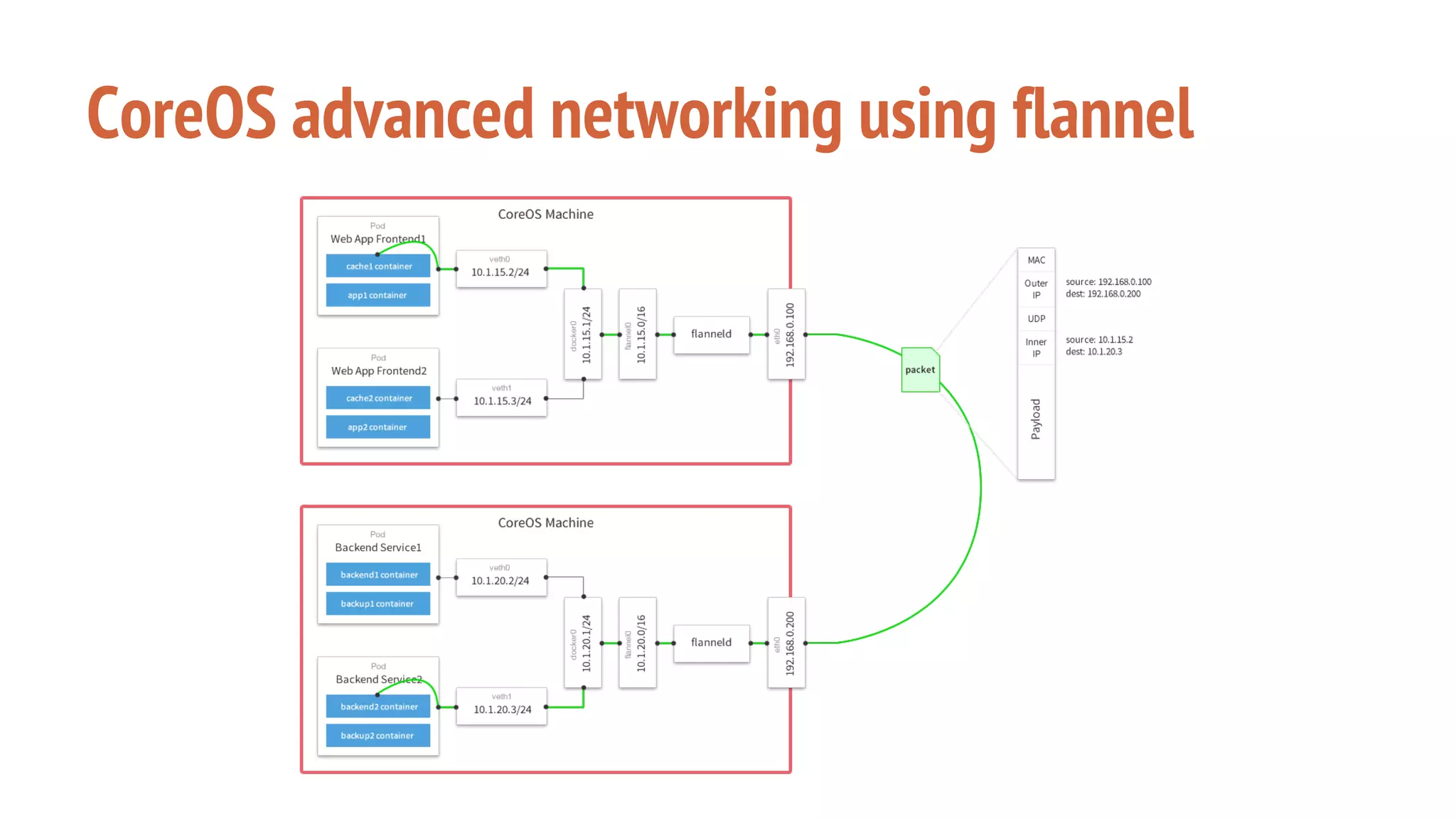

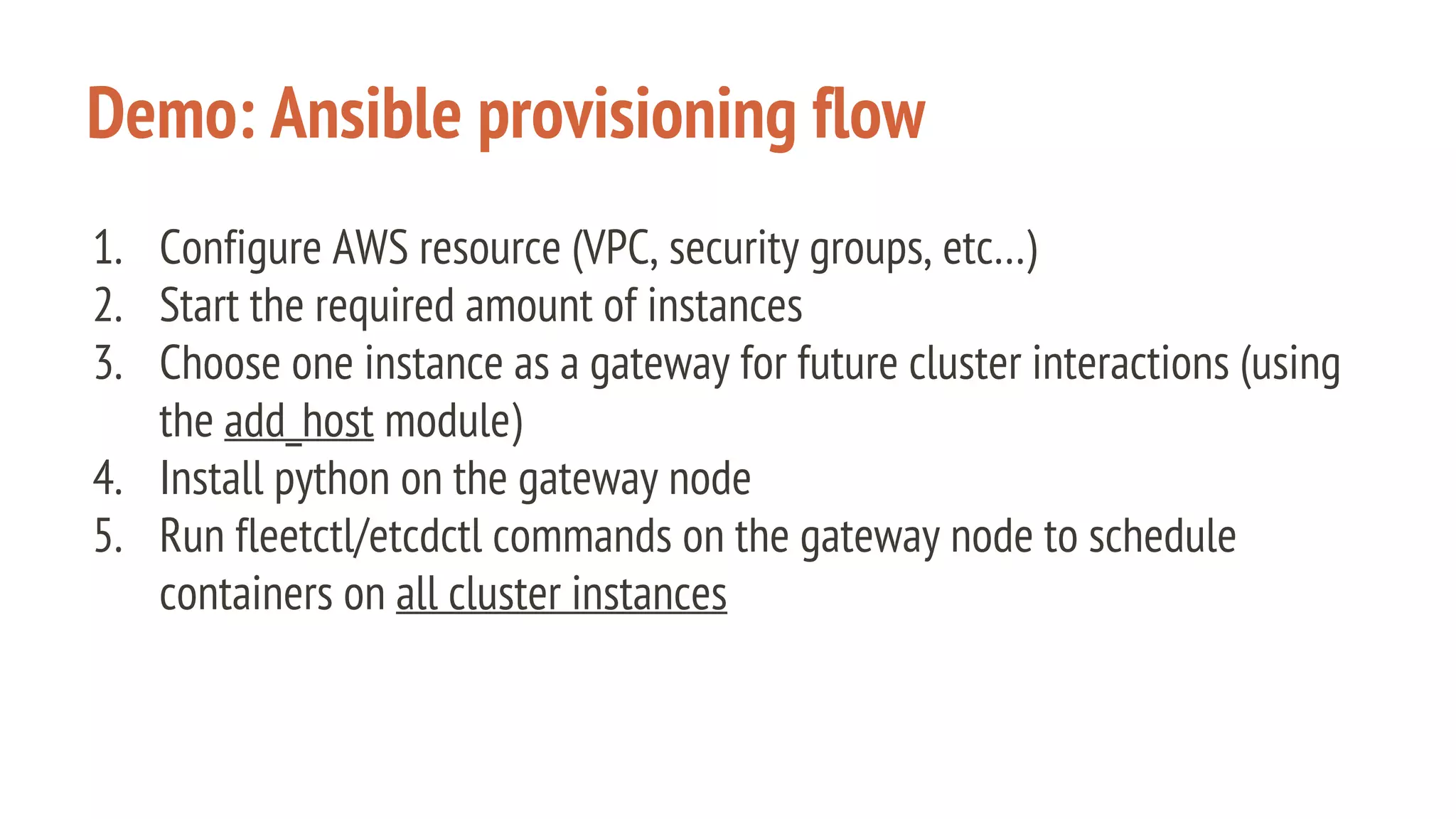

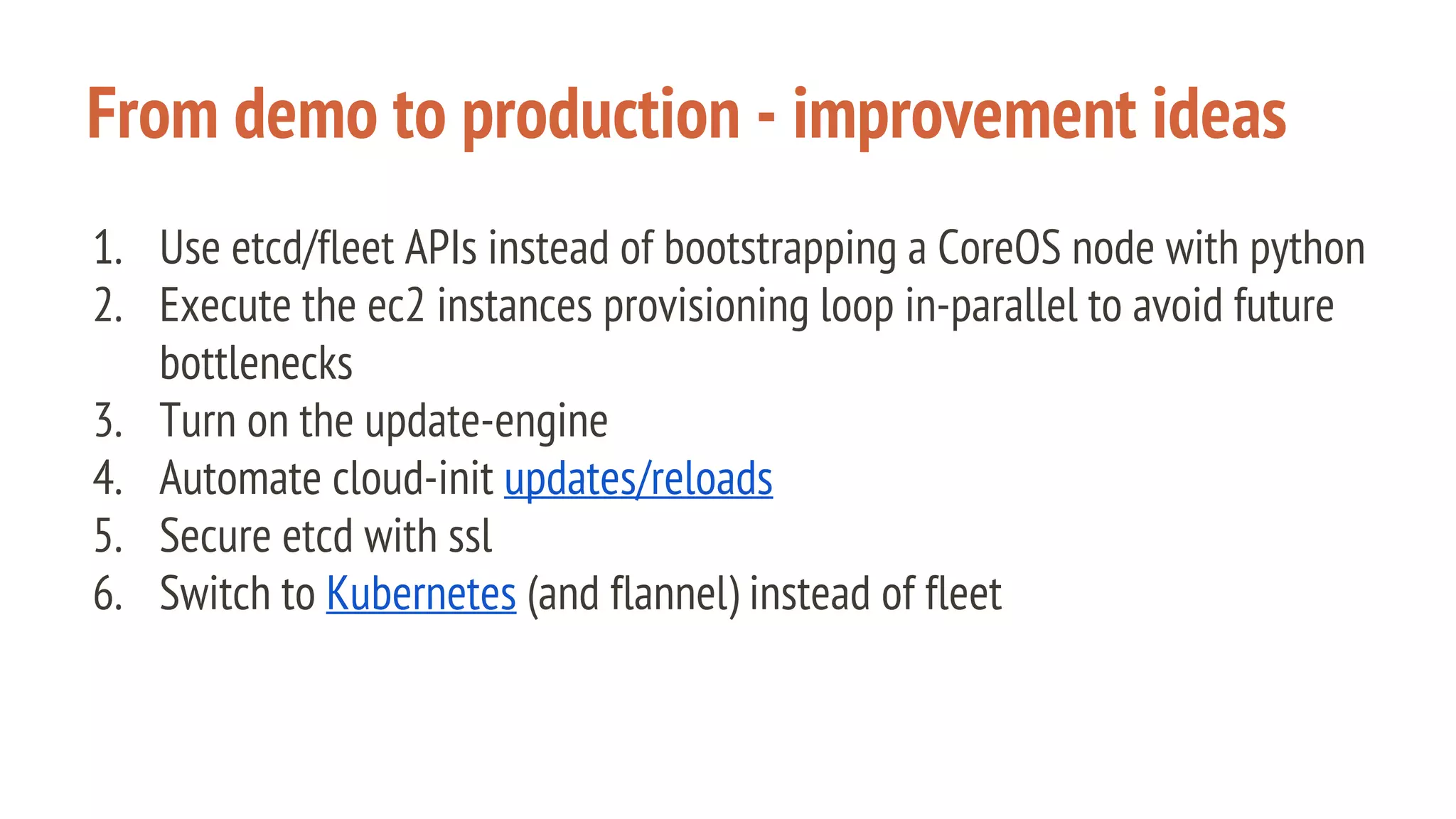

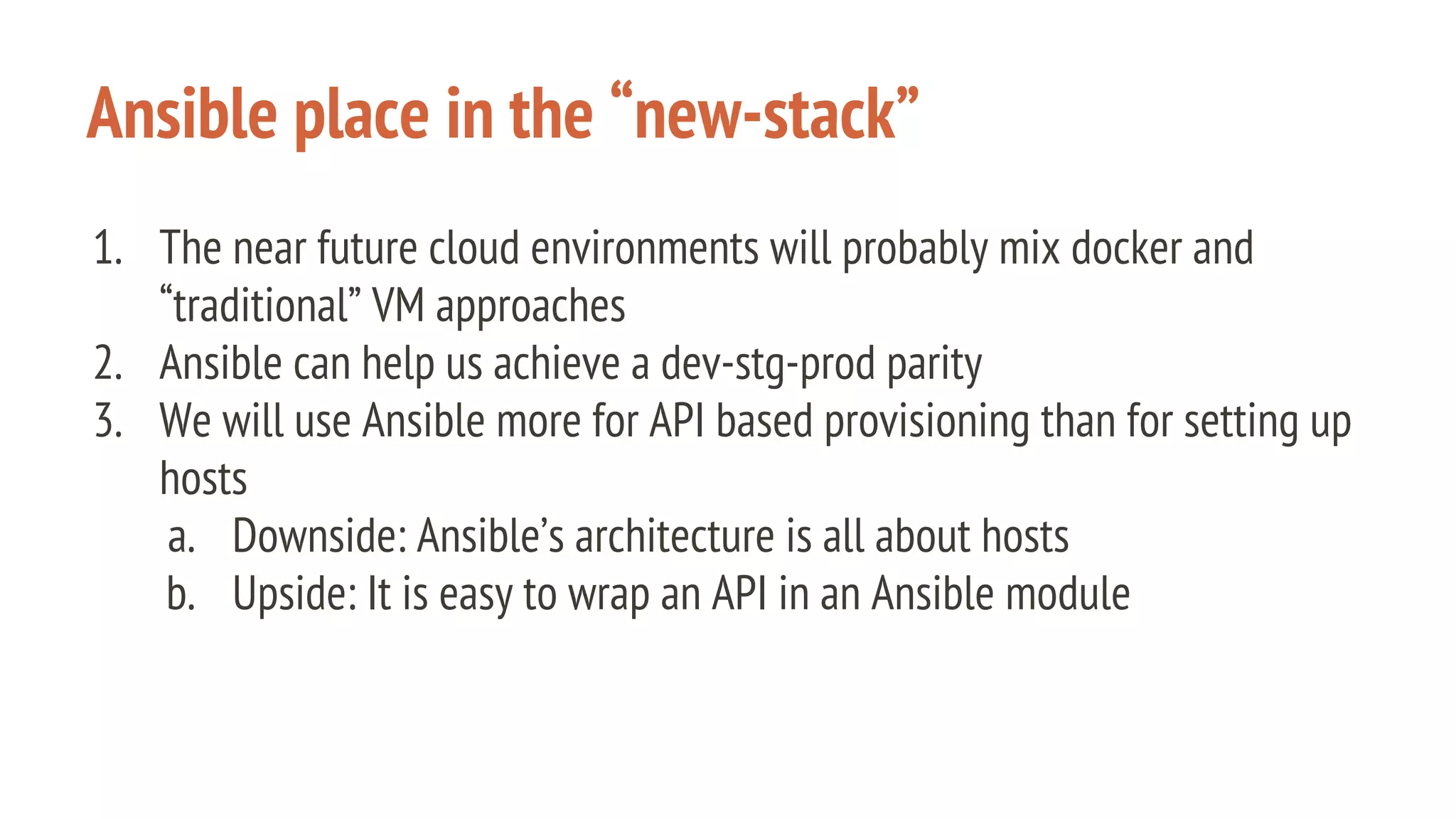

This presentation covers Docker orchestration with Ansible and CoreOS and highlights the author's background and experience in DevOps. It describes CoreOS's architecture, components, and networking solutions, emphasizing the advantages of Flannel for container communication. It also discusses the current state of Ansible for AWS and improvements for production deployments, and looks ahead to a future that blends cloud environments with traditional VMs.