- Tsuyoshi Murata from the Tokyo Institute of Technology discusses deep learning approaches for complex networks, in particular network embedding and graph neural networks.

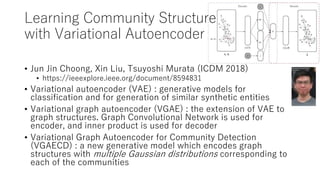

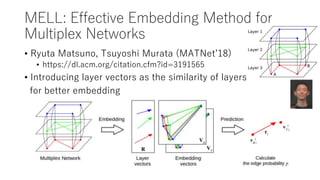

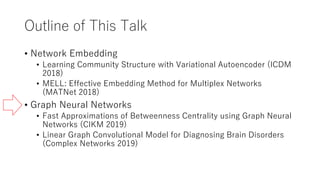

- He summarizes recent work on network embedding, including a paper on learning community structure with variational autoencoders and another on embedding multiplex networks.

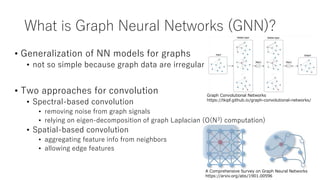

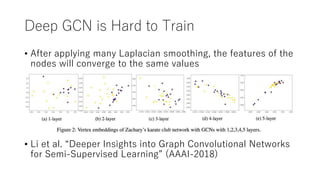

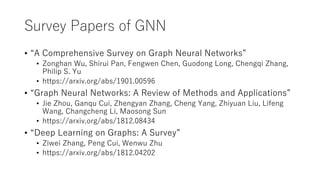

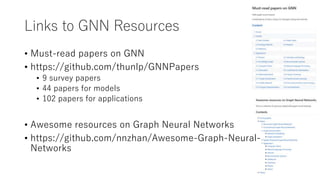

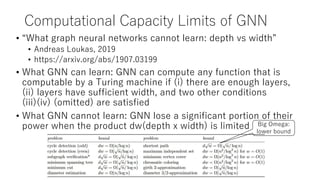

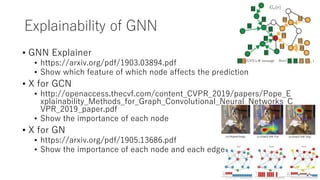

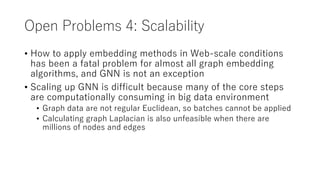

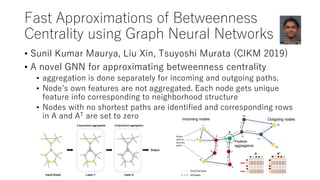

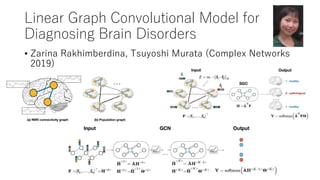

- Murata then discusses applications of graph neural networks, challenges in training deep GCNs, the representational power and limitations of GNNs, and open problems in the field such as shallow structures, dynamic graphs, and scalability.

![Complex Networks + Deep Learning

• Neural networks as complex networks

• Deep compression [Han, ICLR 2016] : compressing neural networks

by pruning, quantization and Huffman coding

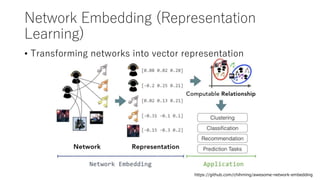

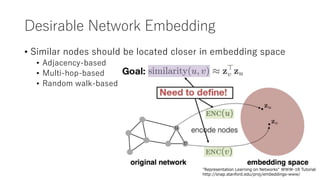

• Using neural networks for the tasks of complex networks

• Network Embedding

• Graph Neural Networks](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-4-320.jpg)
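The three stages named for deep compression (pruning, quantization, Huffman coding) can be illustrated on a toy weight vector. The sketch below is only an illustration under simplifying assumptions: the weights, the threshold, and the uniform quantization step are made up, and uniform bins stand in for whatever codebook a real compression pipeline would learn.

```python
import heapq
from collections import Counter


def prune(weights, threshold):
    """Magnitude pruning: zero out weights whose absolute value is below threshold."""
    return [w if abs(w) >= threshold else 0.0 for w in weights]


def quantize(weights, step):
    """Uniform quantization (a simplification): map each weight to an integer bin index."""
    return [round(w / step) for w in weights]


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code built from symbol counts."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): 1}
    # Heap entries are (count, unique tiebreak, {symbol: depth so far}).
    heap = [(c, i, {s: 0}) for i, (s, c) in enumerate(counts.items())]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        c1, _, d1 = heapq.heappop(heap)
        c2, _, d2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        merged = {s: depth + 1 for s, depth in {**d1, **d2}.items()}
        heapq.heappush(heap, (c1 + c2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


# Hypothetical weights, chosen so that most values are near zero.
weights = [0.02, -0.51, 0.48, 0.01, -0.03, 0.52, 0.0, -0.49]
pruned = prune(weights, threshold=0.1)
codes = quantize(pruned, step=0.5)
lengths = huffman_code_lengths(codes)
total_bits = sum(lengths[c] for c in codes)
print(codes, lengths, total_bits)
```

Because pruning makes the zero bin the most frequent symbol, the Huffman code gives it the shortest codeword, which is where the extra saving over a fixed-width encoding comes from.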

![Challenges of Network Embedding](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-8-320.jpg)

- Complex topological structure
  - No spatial locality like grids
  - Methods for images do not work
- Defining similarity is not easy
- Combination of attributes and structure
- Several types of networks
  - Signed networks (SIDE [Kim 2018], StEM [Rahaman 2018], SiNE [Wang 2018])
  - Directed networks ([Perrault-Joncas 2011], ATP [Sun 2018])
  - Multilayer networks (MTNE [Xu 2017], MELL [Matsuno 2018])
  - Temporal networks ([Singer 2019])

A Comprehensive Survey on Graph Neural Networks: https://arxiv.org/abs/1901.00596

![Survey, Tutorial, Link](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-9-320.jpg)

- A Survey on Network Embedding [Cui et al., 2017]
  - https://arxiv.org/abs/1711.08752
- WWW-18 Tutorial: Representation Learning on Networks
  - http://snap.stanford.edu/proj/embeddings-www/
- Awesome network embedding (links to papers and code)
  - https://github.com/chihming/awesome-network-embedding

![Towards Deep and Large GNNs](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-21-320.jpg)

- "Cluster-GCN: An Efficient Algorithm for Training Deep and Large Graph Convolutional Networks", Wei-Lin Chiang, Xuanqing Liu, Si Si, Yang Li, Samy Bengio, Cho-Jui Hsieh [KDD 2019]
  - https://arxiv.org/abs/1905.07953
  - Neighborhood expansion is restricted within the clusters
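The point that neighborhood expansion stays inside a cluster can be made concrete with a small sketch. This is not the Cluster-GCN implementation: the partition is written by hand rather than produced by a graph clustering algorithm, and `cluster_subgraph` and `propagate` are hypothetical helper names. The propagation step is a plain mean over neighbors with no learned weights.

```python
def cluster_subgraph(edges, cluster_of, cluster_id):
    """Build an adjacency list that keeps only edges with both endpoints in one cluster."""
    nodes = sorted(n for n, c in cluster_of.items() if c == cluster_id)
    adj = {n: [n] for n in nodes}  # each node starts with a self-loop
    for u, v in edges:
        if cluster_of[u] == cluster_id and cluster_of[v] == cluster_id:
            adj[u].append(v)
            adj[v].append(u)
    return adj


def propagate(adj, features):
    """One mean-aggregation step over the restricted neighborhood (no weights, no nonlinearity)."""
    return {n: sum(features[m] for m in nbrs) / len(nbrs) for n, nbrs in adj.items()}


# A path graph 0-1-2-3-4-5 with a hypothetical two-cluster partition.
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
cluster_of = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
features = {n: float(n) for n in range(6)}

batch = cluster_subgraph(edges, cluster_of, cluster_id=0)
hidden = propagate(batch, features)
print(batch)   # edge (2, 3) crosses clusters, so node 3 never enters the batch
print(hidden)
```

However many such steps are stacked, the set of nodes touched for this batch never grows beyond the three nodes of cluster 0, which is what keeps the memory cost of deeper models bounded in this scheme.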

![Semi-supervised learning on network using structure features and graph convolution](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-35-320.jpg)

- Semi-supervised learning on network using structure features and graph convolution [Tachibana et al., 2018]
  - GCN uses local info only (such as 2-hop neighborhood info)
  - Info about global structure is expected to improve the performance
- JSAI incentive award 2018
  - https://jsai.ixsq.nii.ac.jp/ej/?action=pages_view_main&active_action=repository_view_main_item_detail&item_id=9541&item_no=1&page_id=13&block_id=23
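The remark that a GCN sees only local information follows from how layers stack: each layer lets a node read from its direct neighbors, so k layers reach at most k hops. The sketch below assumes a two-layer model to match the 2-hop example; `receptive_field` is a hypothetical helper and the path graph is made up for illustration.

```python
def receptive_field(adj, node, num_layers):
    """Nodes whose features can influence `node` after num_layers rounds of neighbor aggregation."""
    field = {node}
    for _ in range(num_layers):
        # Each layer extends the field by one hop.
        field |= {m for n in field for m in adj[n]}
    return field


# Path graph 0-1-2-3-4.
adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}

two_hop = receptive_field(adj, 0, num_layers=2)
print(two_hop)  # nodes 3 and 4 are invisible to node 0 in a two-layer model
```

Anything about nodes 3 and 4, or about the overall shape of the graph, has to reach node 0 some other way, which is the motivation for feeding in global structure features alongside graph convolution.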

![Complex Networks + Deep Learning: Future Directions](https://image.slidesharecdn.com/20191107deeplearningapproachesfornetworks-191104084129/85/20191107-deeplearningapproachesfornetworks-36-320.jpg)

- Explainable AI (XAI)
  - Interpretable Machine Learning
    - https://christophm.github.io/interpretable-ml-book/index.html
- Scene graph
  - Interactions among objects can be easily modeled as a graph
  - "LinkNet: Relational Embedding for Scene Graph" [NIPS 2018]
- Neural networks as complex networks
  - Deep compression [Han, ICLR 2016]
  - Deep learning systems as complex networks [Testolin, 2018]
    - https://arxiv.org/abs/1809.10941