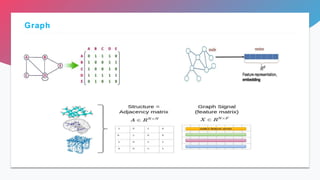

Graph neural networks (GNNs) are neural network architectures that operate on graph-structured data. GNNs iteratively update node representations by aggregating neighbor representations and can be used for tasks like node classification. There are many frontiers for GNN research, including graph generation/transformation, dynamic/heterogeneous graphs, and applications in domains that can be modeled with graphs like social networks and drug discovery. Automated machine learning techniques are also being applied to GNNs.
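
The neighbor aggregation described above can be sketched in a few lines. This is a minimal illustration, not any particular GNN: the function name `mean_aggregate_step`, the toy graph, and the choice of a plain mean are assumptions, and the learned weight matrices and nonlinearities that real GNN layers apply are deliberately left out.

```python
from typing import Dict, List


def mean_aggregate_step(
    features: Dict[str, List[float]],
    neighbors: Dict[str, List[str]],
) -> Dict[str, List[float]]:
    """One aggregation round: each node's new vector is the element-wise
    mean of its own vector and its neighbors' vectors."""
    updated = {}
    for node, own in features.items():
        # Include the node itself so isolated nodes keep their features.
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        updated[node] = [
            sum(vec[i] for vec in group) / len(group) for i in range(len(own))
        ]
    return updated


# Hypothetical toy graph: "a" is connected to "b" and "c".
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
neighbors = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}

h1 = mean_aggregate_step(features, neighbors)  # representations after one round
h2 = mean_aggregate_step(h1, neighbors)        # a second round reaches 2-hop neighbors
```

Calling the step repeatedly is what "iteratively update" refers to: after k rounds a node's vector depends on nodes up to k hops away.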

![Graph classification. Graph pooling layers are needed to compute the graph-level representation, which summarizes the key characteristics of the input graph structure and is the critical component for graph classification. These methods can generally be categorized into four groups: simple flat pooling, attention-based pooling, cluster-based pooling, and other types of pooling. Graph Classification and Link Prediction.](https://image.slidesharecdn.com/chapter3-240210150827-40514cdd/85/Chapter-3-pptx-14-320.jpg)
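
As a rough illustration of the simplest of the four groups, flat pooling collapses all node embeddings into one graph-level vector with a permutation-invariant reduction. The sketch below assumes the node embeddings have already been computed by some GNN; the function name `flat_pool` and the sample values are hypothetical.

```python
from typing import List


def flat_pool(node_embeddings: List[List[float]], mode: str = "mean") -> List[float]:
    """Reduce a list of node vectors to a single graph-level vector."""
    if not node_embeddings:
        raise ValueError("graph has no nodes")
    # Transpose so each tuple holds one feature dimension across all nodes.
    columns = list(zip(*node_embeddings))
    if mode == "sum":
        return [sum(col) for col in columns]
    if mode == "mean":
        return [sum(col) / len(col) for col in columns]
    if mode == "max":
        return [max(col) for col in columns]
    raise ValueError(f"unknown pooling mode: {mode}")


# Three nodes with 2-dimensional embeddings (made-up values).
nodes = [[1.0, 2.0], [3.0, 0.0], [2.0, 4.0]]

graph_sum = flat_pool(nodes, "sum")    # [6.0, 6.0]
graph_mean = flat_pool(nodes, "mean")  # [2.0, 2.0]
graph_max = flat_pool(nodes, "max")    # [3.0, 4.0]
```

Because each reduction ignores node order, the result is the same however the nodes are numbered. Attention-based and cluster-based pooling replace this fixed reduction with learned weighting or learned coarsening.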

![Graph generation. There are three representative GNN-based learning paradigms for graph generation: GraphVAE approaches, GraphGAN approaches, and deep autoregressive methods. Graph transformation. Graph transformation aims to learn a translation mapping between the input source graph and the output target graph. This problem can generally be grouped into four categories: node-level transformation, edge-level transformation, node-edge co-transformation, and graph-involved transformation. Graph Generation and Graph Transformation.](https://image.slidesharecdn.com/chapter3-240210150827-40514cdd/85/Chapter-3-pptx-16-320.jpg)

![Dynamic graph neural networks. In real-world applications, graph nodes (entities) and graph edges (relations) often evolve over time. Many GNNs designed for static graphs cannot be applied directly to dynamic graphs. A simple approach is to convert dynamic graphs into static graphs, at the cost of potentially losing information. There are two major categories of GNN-based methods: GNNs for discrete-time dynamic graphs and GNNs for continuous-time dynamic graphs. Dynamic Graph Neural Networks and Heterogeneous Graph Neural Networks](https://image.slidesharecdn.com/chapter3-240210150827-40514cdd/85/Chapter-3-pptx-18-320.jpg)
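
The information loss from converting a dynamic graph into a static one can be made concrete with a small sketch. It assumes a dynamic graph is given as a list of timestamped edge events; the type alias `Edge`, the helper names, and the sample events are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str, float]  # (source, target, timestamp)


def to_static(edges: List[Edge]) -> Set[Tuple[str, str]]:
    """Collapse all events into one static edge set; timestamps are discarded."""
    return {(u, v) for u, v, _ in edges}


def to_snapshots(edges: List[Edge], window: float) -> Dict[int, Set[Tuple[str, str]]]:
    """Discrete-time view: bucket events into fixed-width time windows."""
    snapshots: Dict[int, Set[Tuple[str, str]]] = defaultdict(set)
    for u, v, t in edges:
        snapshots[int(t // window)].add((u, v))
    return dict(snapshots)


events = [("a", "b", 0.5), ("b", "c", 1.2), ("a", "b", 2.7), ("c", "a", 2.9)]

static_graph = to_static(events)
snapshots = to_snapshots(events, window=1.0)
```

Here `static_graph` holds three edges with no record of their order or of the repeated `a -> b` interaction, while `snapshots` keeps one edge set per window. Continuous-time methods go further and work on the raw event list itself.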

![Heterogeneous graph neural networks. Heterogeneous graphs consist of different types of nodes and edges. GNNs designed for homogeneous graphs are not directly applicable to heterogeneous graphs. A new line of research has been devoted to developing heterogeneous graph neural networks: message-passing-based methods, encoder-decoder-based methods, and adversarial-based methods. Dynamic Graph Neural Networks and Heterogeneous Graph Neural Networks](https://image.slidesharecdn.com/chapter3-240210150827-40514cdd/85/Chapter-3-pptx-19-320.jpg)
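
One way to picture message passing on a heterogeneous graph is to aggregate incoming messages separately per edge type before combining them. The sketch below is a simplified illustration under stated assumptions: the function name `relation_wise_aggregate`, the toy author/paper/venue graph, and the use of a single scalar weight per relation are all hypothetical. Actual heterogeneous GNNs typically learn a weight matrix (and sometimes attention) per node or edge type.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

TypedEdge = Tuple[str, str, str]  # (source, relation, target)


def relation_wise_aggregate(
    features: Dict[str, List[float]],
    edges: List[TypedEdge],
    relation_weight: Dict[str, float],
) -> Dict[str, List[float]]:
    """Add to each node the weighted mean of its incoming messages,
    computed separately for every relation type."""
    dim = len(next(iter(features.values())))
    # incoming[target][relation] -> list of source feature vectors
    incoming = defaultdict(lambda: defaultdict(list))
    for src, rel, dst in edges:
        incoming[dst][rel].append(features[src])

    updated = {}
    for node, own in features.items():
        new_vec = list(own)
        for rel, vecs in incoming[node].items():
            w = relation_weight[rel]  # stand-in for a learned per-relation transform
            for i in range(dim):
                new_vec[i] += w * sum(v[i] for v in vecs) / len(vecs)
        updated[node] = new_vec
    return updated


features = {
    "alice": [1.0, 0.0],
    "bob": [0.0, 1.0],
    "paper1": [0.0, 0.0],
    "venue1": [2.0, 2.0],
}
edges = [
    ("alice", "writes", "paper1"),
    ("bob", "writes", "paper1"),
    ("venue1", "publishes", "paper1"),
]
relation_weight = {"writes": 1.0, "publishes": 0.5}

out = relation_wise_aggregate(features, edges, relation_weight)
```

Keeping the `writes` and `publishes` messages apart is what a homogeneous GNN cannot do, since it would average authors and venues together as if they were the same kind of neighbor.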

![After expressing the input data as a graph, the next step is to apply GNNs to learn graph representations. Some works directly use typical GNNs, such as GCN, GAT, GGNN, and GraphSAGE, which generalize across application tasks. Some special tasks need additional design of the GNN architecture to better handle the specific problem, for example PinSage, KGCN, and KGAT. Graph Representation Learning](https://image.slidesharecdn.com/chapter3-240210150827-40514cdd/85/Chapter-3-pptx-25-320.jpg)
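
For reference, the layer-wise propagation rule commonly given for GCN, the first of the typical GNNs named above, is shown below. The notation is not taken from the slides and follows the usual convention: $A$ is the adjacency matrix, $\tilde{A}$ adds self-loops, $\tilde{D}$ is the degree matrix of $\tilde{A}$, $H^{(l)}$ holds the node representations at layer $l$, $W^{(l)}$ is a learned weight matrix, and $\sigma$ is a nonlinearity such as ReLU.

```latex
\tilde{A} = A + I, \qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}

H^{(l+1)} = \sigma\!\left( \tilde{D}^{-1/2} \, \tilde{A} \, \tilde{D}^{-1/2} \, H^{(l)} \, W^{(l)} \right)
```

The product $\tilde{D}^{-1/2} \tilde{A} \tilde{D}^{-1/2} H^{(l)}$ is a degree-normalized sum over each node's neighbors and itself. GAT, GGNN, and GraphSAGE differ mainly in how this aggregation and update step is defined.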