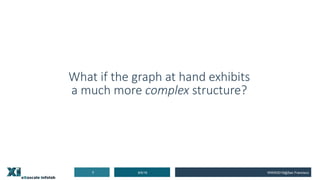

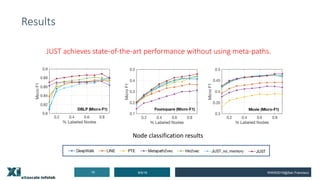

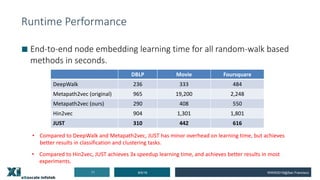

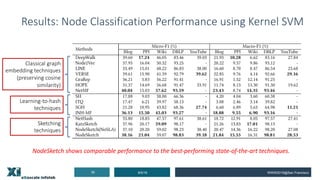

This talk covers advances in representation learning on graphs, focusing on techniques for embedding heterogeneous graphs and complex structures without relying on meta-paths. It presents several methods, including JUST for embedding heterogeneous graphs and NodeSketch for efficient graph embeddings via recursive sketching, and highlights their performance advantages in node classification and clustering tasks. It concludes with future directions for graph representation learning, such as attributed and dynamic graphs.

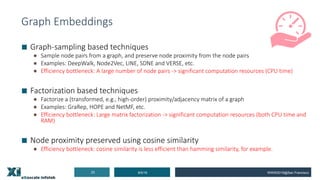

![Outlines

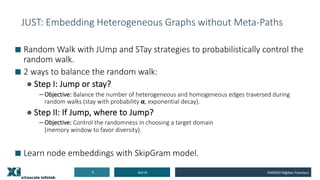

■ JUST: Embedding heterogeneous graphs without meta-paths

[CIKM’18]

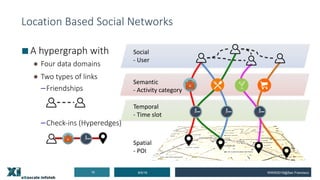

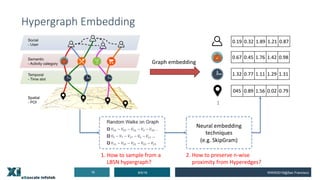

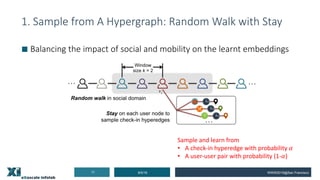

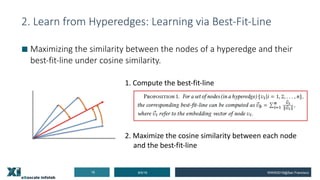

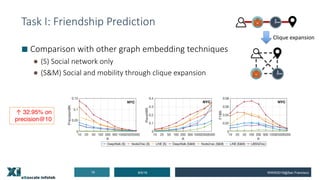

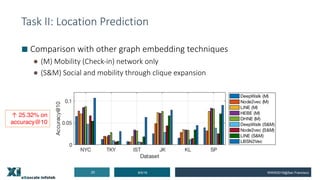

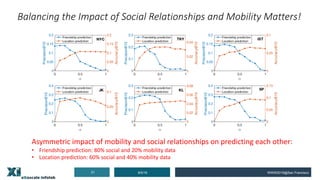

■ LBSN2Vec: Embedding heterogeneous hypergraphs from LBSNs

[WWW’19]

■ NodeSketch: Highly-efficient graph embeddings via recursive

sketching [KDD’19]

8/5/194 WWW2019@San Francisco](https://image.slidesharecdn.com/invitedtalkdl4gpcm-190805132752/85/Representation-Learning-on-Complex-Graphs-4-320.jpg)

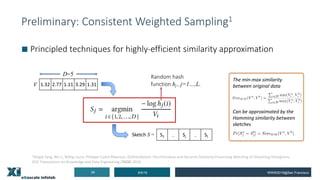

![NodeSketch: High-Order Node Embeddings

Inputs: the SLA adjacency matrix $\tilde{A}$ and the $(k-1)$-order node embeddings $S(k-1)$. Output: the $k$-order embeddings $S(k)$.

Worked example for node 1 (values as shown on the slide):

■ SLA adjacency vector $\tilde{V}^r$: 1, 1

■ $(k-1)$-order sketches $S^n(k-1)$ of the neighbors $n \in \Gamma(r)$: node 2 with sketch (2, 3, 1)

■ Sketch element distribution $\frac{1}{L}\sum_{j=1}^{L}\mathbb{1}[S_j^n(k-1) = i]$, $i = 1, \dots, D$: 0.33, 0.33, 0.33

■ Weight $\alpha = 0.2$, then merge: approximate $k$-order SLA adjacency vector $\tilde{V}^r(k)$: 1.066, 1.066, 0.066

■ Sketching using Eq. 3 yields the $k$-order embedding

$(k-1)$-order node embeddings $S(k-1)$, rows in slide order: (2, 1, 1), (2, 3, 1), (2, 3, 4), (4, 3, 4), (5, 3, 5)

$k$-order embeddings $S(k)$, rows in slide order: (2, 1, 3), (2, 3, 4), (2, 3, 4), (2, 3, 4), (4, 3, 5)

Uniformity of the generated samples: The foundation of our recursive sketching process](https://image.slidesharecdn.com/invitedtalkdl4gpcm-190805132752/85/Representation-Learning-on-Complex-Graphs-29-320.jpg)
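The merge step on the NodeSketch slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation. The function names `merge_neighbor_sketches` and `sketch_vector` are hypothetical. The slide's values are assumed to sit in the first positions of length-5 vectors, with the blanks read as zeros. The slide does not reproduce Eq. 3, so the sketching step below assumes a consistent-weighted-sampling form that picks, for each sketch position, the element minimizing `-log(h) / weight`.

```python
import math
import random


def merge_neighbor_sketches(sla_vector, neighbor_sketches, alpha):
    """Add each neighbor's sketch element distribution, weighted by alpha,
    to the node's SLA adjacency vector (element ids are 1-based)."""
    merged = [float(v) for v in sla_vector]
    for sketch in neighbor_sketches:
        for element in sketch:
            # Each sketch position contributes 1/L to its element's frequency.
            merged[element - 1] += alpha / len(sketch)
    return merged


def sketch_vector(vector, hashes):
    """Assumed form of the sketching step (not taken from the slide):
    for each row of shared uniform hash values, keep the element with
    the smallest -log(h) / weight among elements with positive weight."""
    result = []
    for row in hashes:
        candidates = [i for i, v in enumerate(vector) if v > 0]
        best = min(candidates, key=lambda i: -math.log(row[i]) / vector[i])
        result.append(best + 1)  # report 1-based element ids
    return result


D, L, ALPHA = 5, 3, 0.2  # vector size, sketch length, weight from the slide

# Hash values in (0, 1], shared by all nodes so that sketches are comparable.
rng = random.Random(0)
hashes = [[1.0 - rng.random() for _ in range(D)] for _ in range(L)]

sla_vector_node1 = [1, 1, 0, 0, 0]  # assumed layout of the slide's "1 1"
neighbor_sketches = [[2, 3, 1]]     # (k-1)-order sketch of node 2

merged = merge_neighbor_sketches(sla_vector_node1, neighbor_sketches, ALPHA)
new_sketch = sketch_vector(merged, hashes)

print([round(v, 3) for v in merged])
print(new_sketch)
```

The merged vector's first three entries are 1 + 0.2/3, 1 + 0.2/3, and 0.2/3, which match the slide's 1.066, 1.066, 0.066 up to rounding. The resulting sketch depends on the random hash values, so it will not necessarily equal the embedding shown on the slide.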

![Take-Away Messages

■ JUST: Meta-path-free heterogeneous graph embedding can achieve state-of-the-art performance efficiently. [CIKM’18]

■ LBSN2Vec: Social relationships and user mobility have an asymmetric impact on each other. [WWW’19]

■ NodeSketch: High-quality node embeddings can be generated via highly efficient sketching techniques. [KDD’19]

[CIKM’18] Rana Hussein, Dingqi Yang, and Philippe Cudré-Mauroux. “Are Meta-Paths Necessary?: Revisiting Heterogeneous Graph Embeddings.” CIKM’18.

[WWW’19] Dingqi Yang, Bingqing Qu, Jie Yang, and Philippe Cudré-Mauroux. “Revisiting User Mobility and Social Relationships in LBSNs: A Hypergraph Embedding Approach.” WWW’19.

[KDD’19] Dingqi Yang, Paolo Rosso, Bin Li, and Philippe Cudré-Mauroux. “NodeSketch: Highly-Efficient Graph Embeddings via Recursive Sketching.” KDD’19.](https://image.slidesharecdn.com/invitedtalkdl4gpcm-190805132752/85/Representation-Learning-on-Complex-Graphs-32-320.jpg)