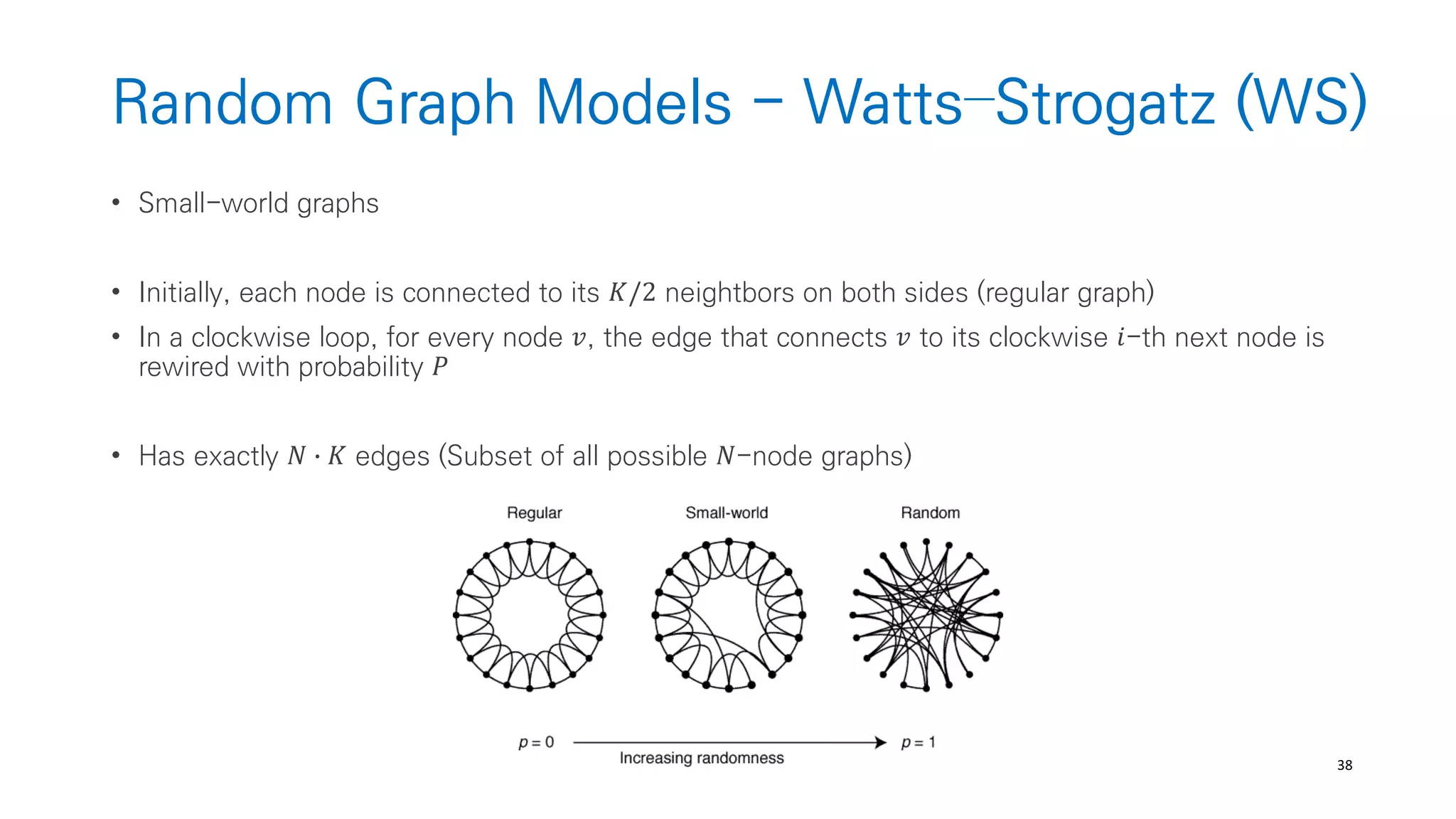

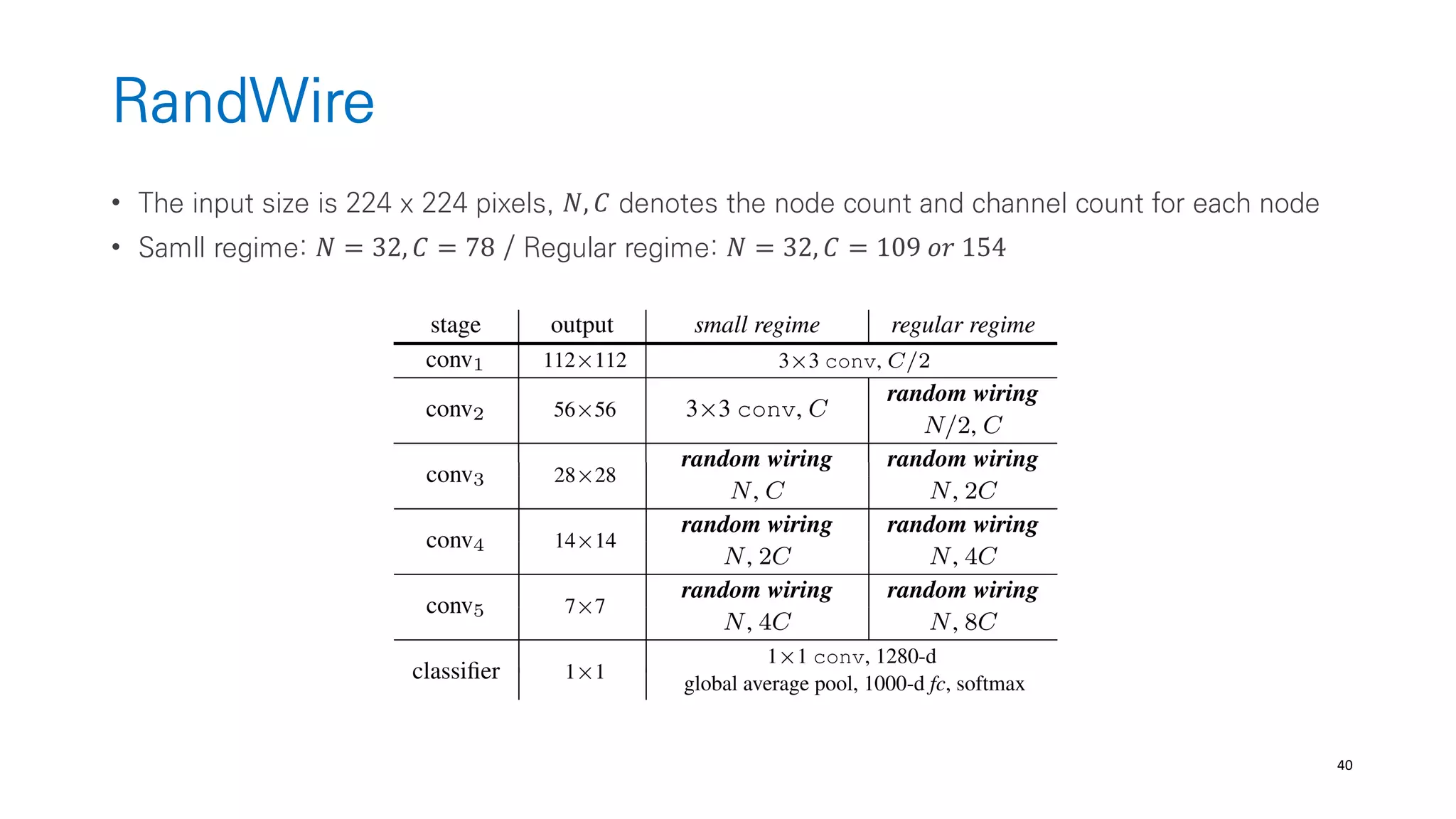

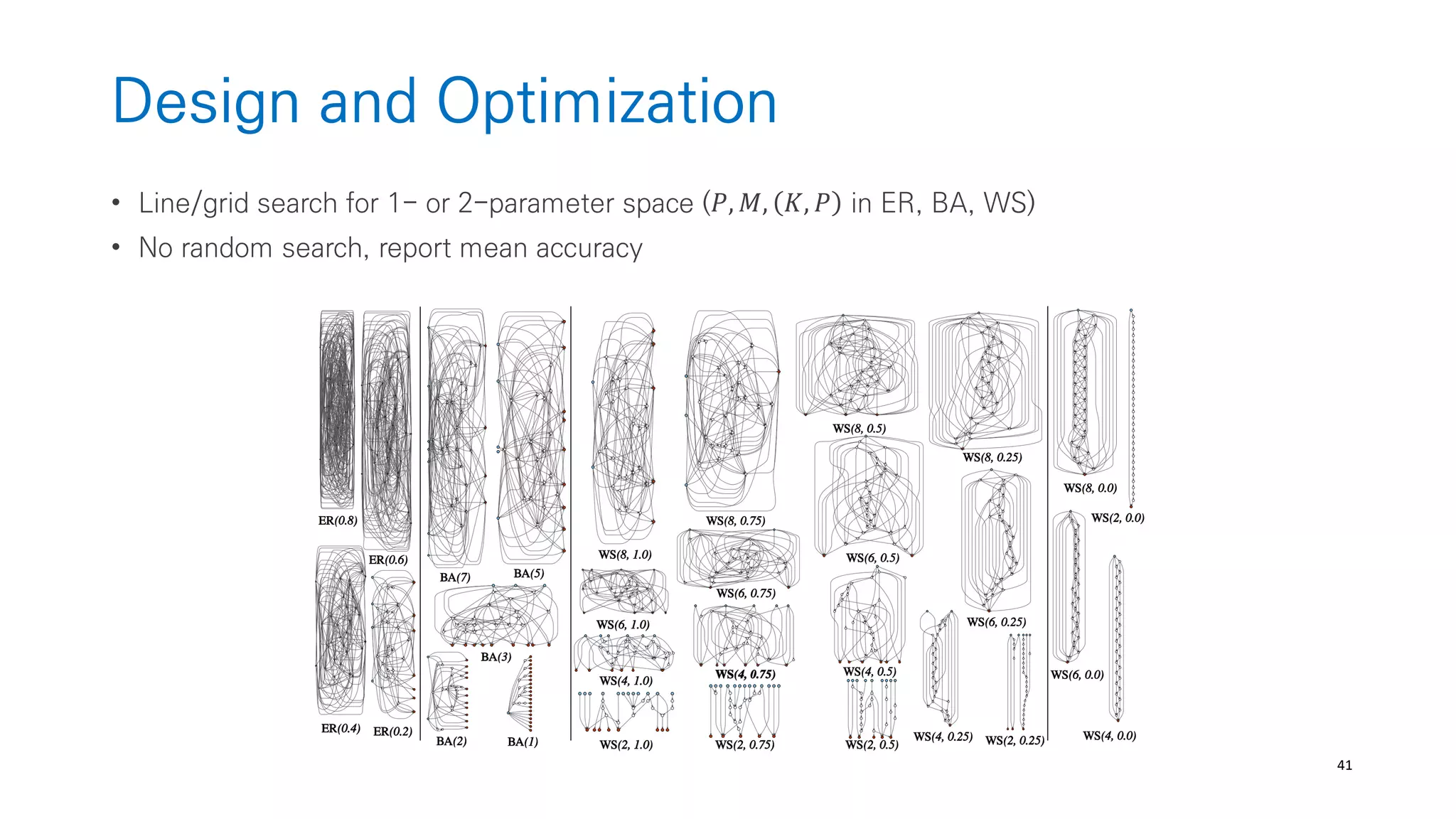

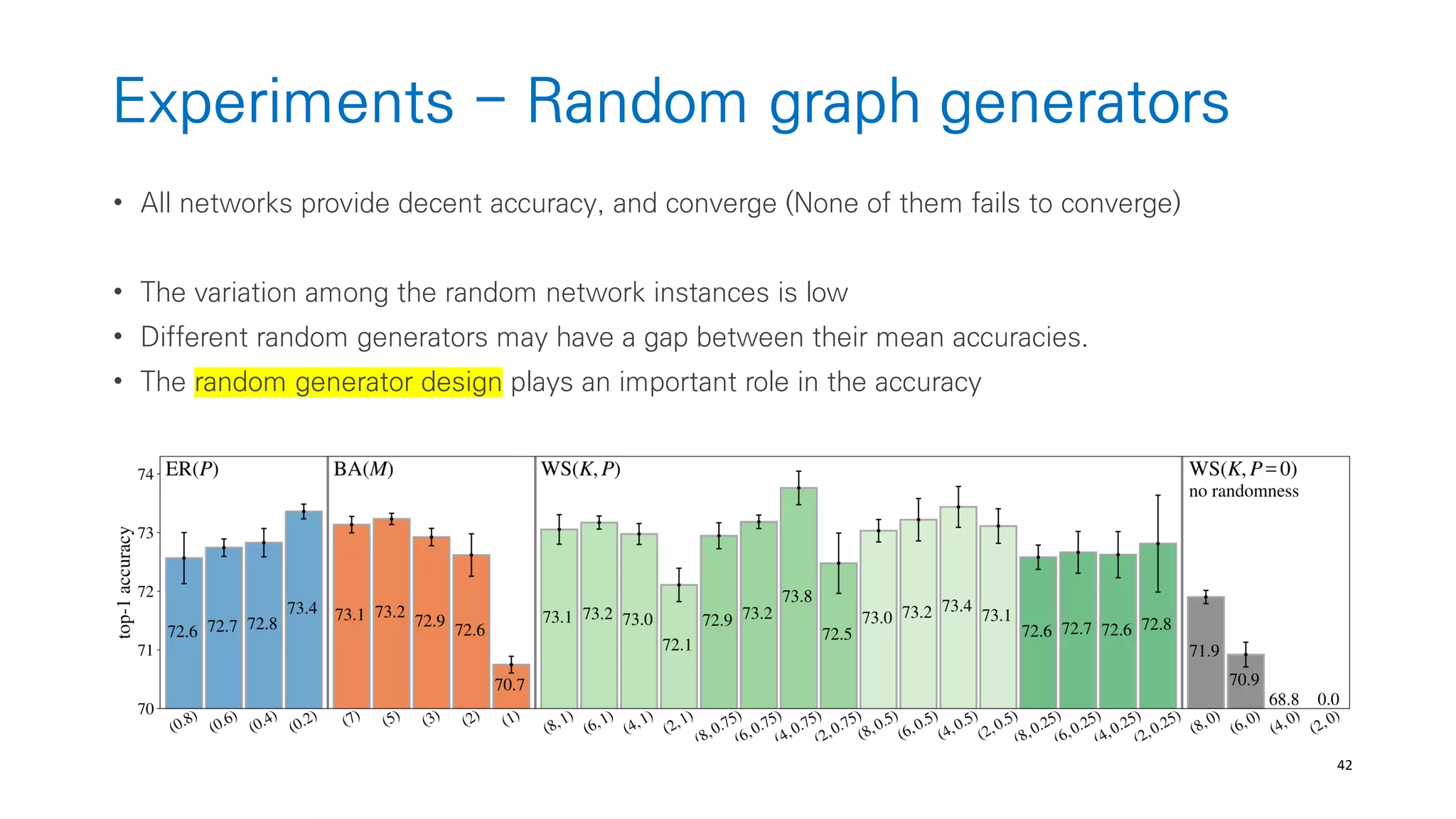

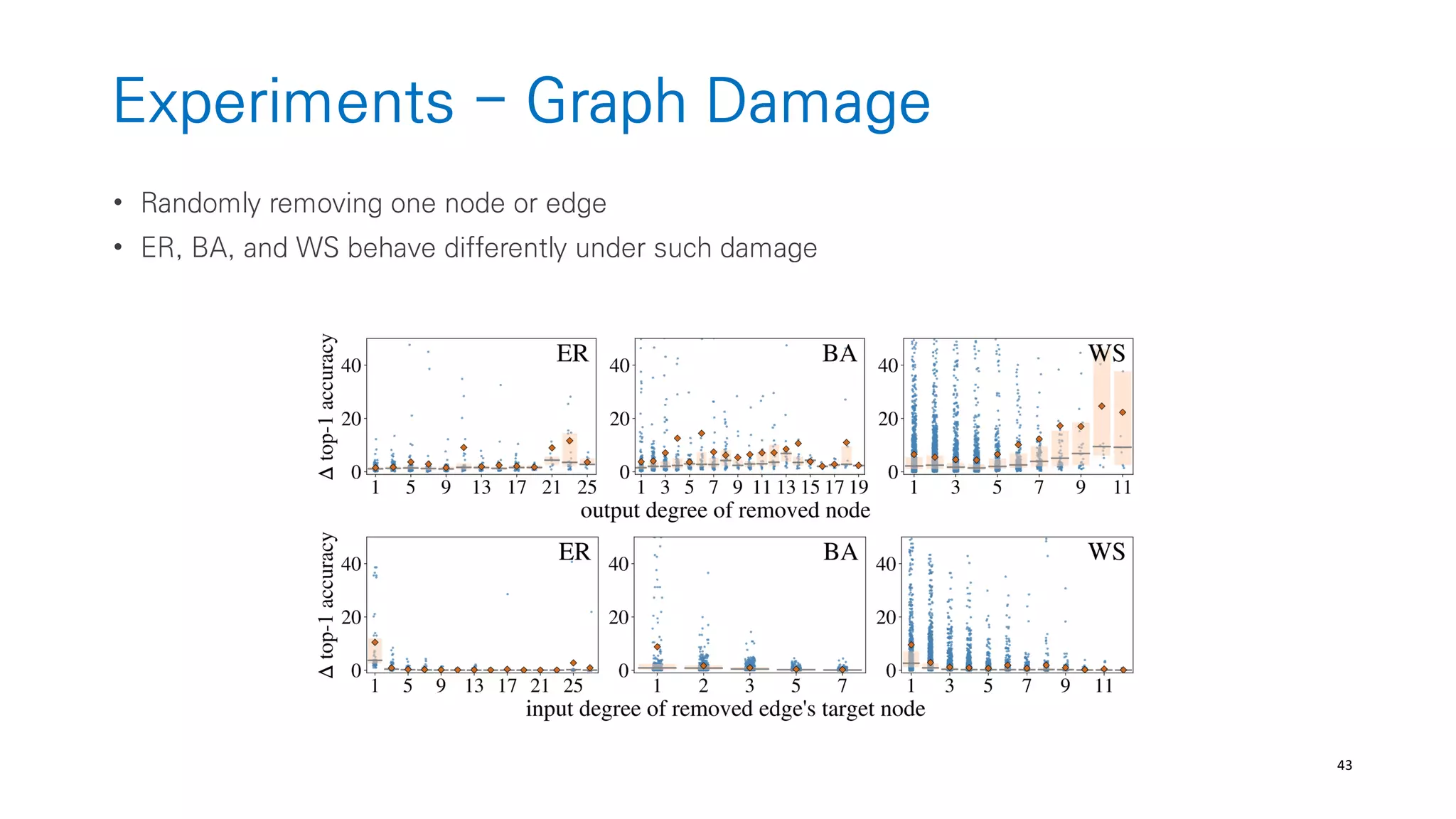

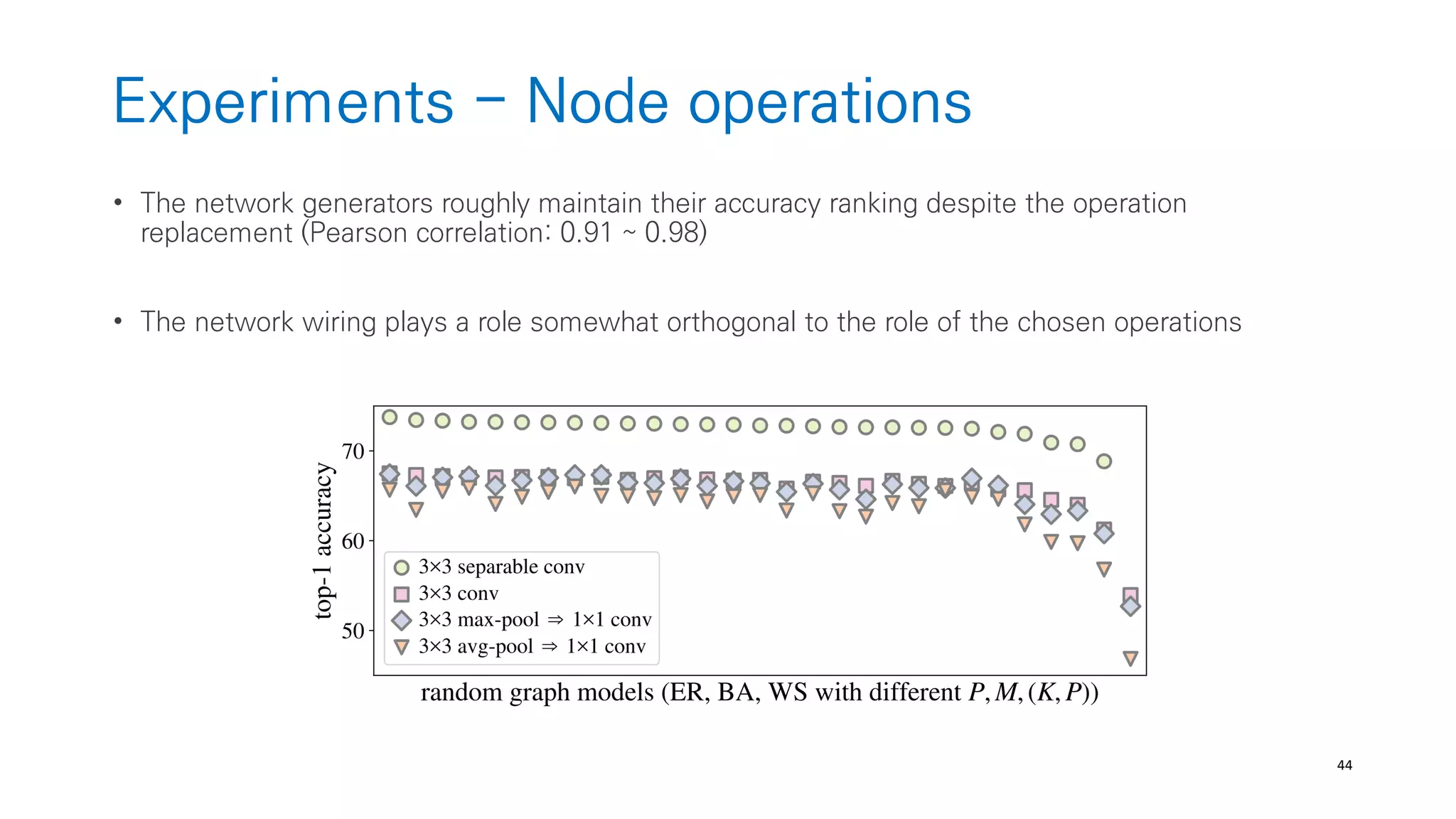

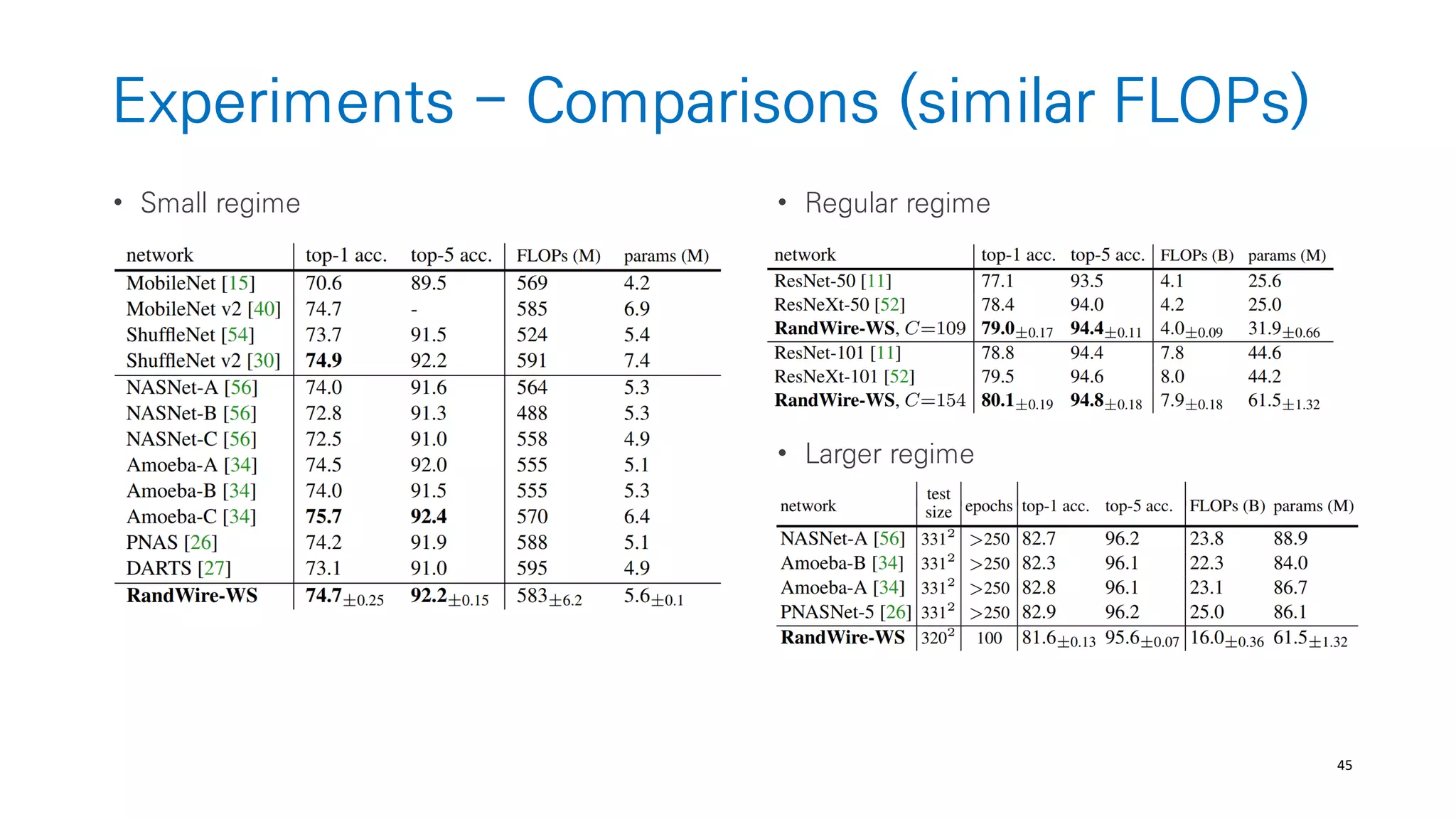

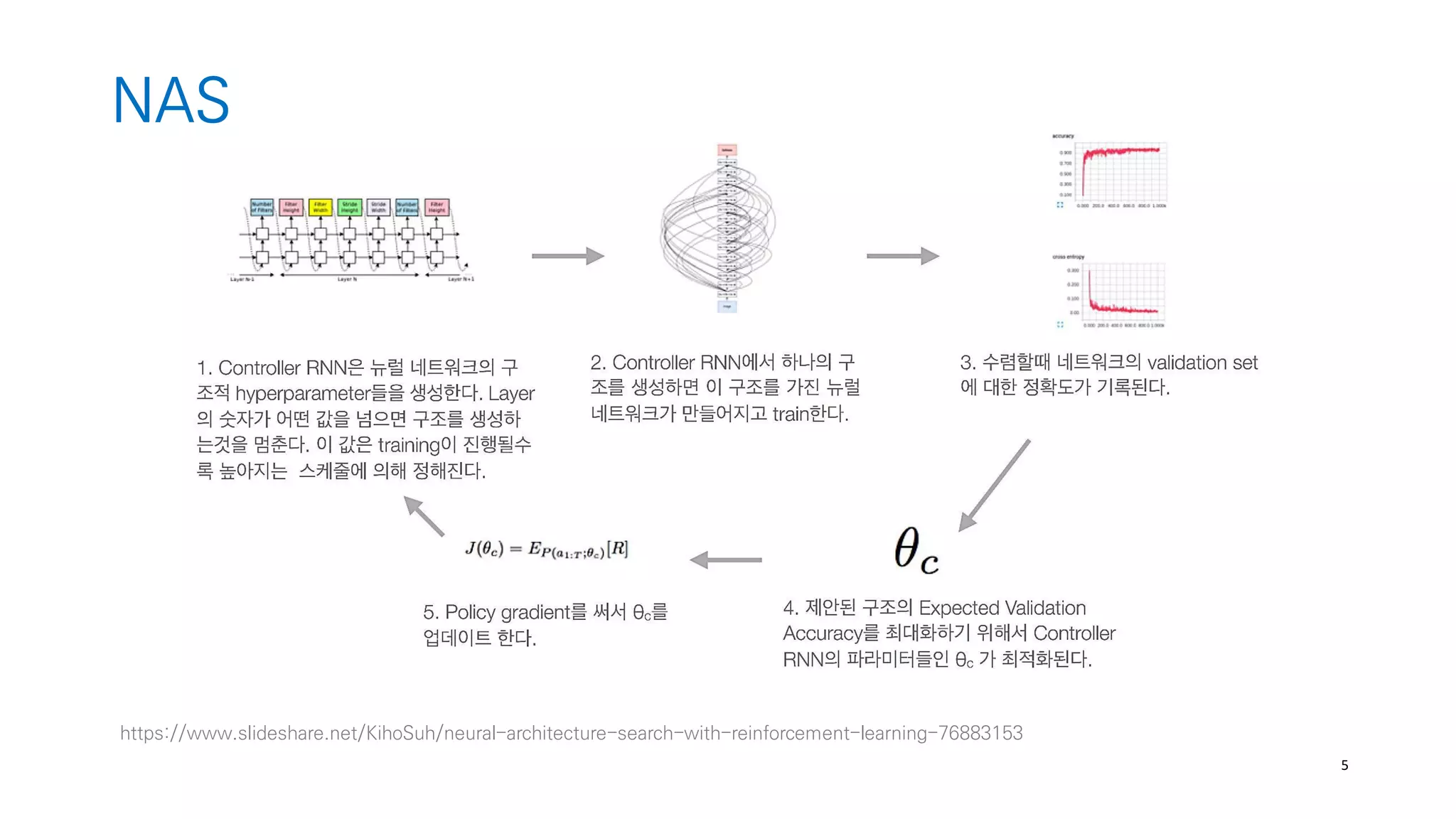

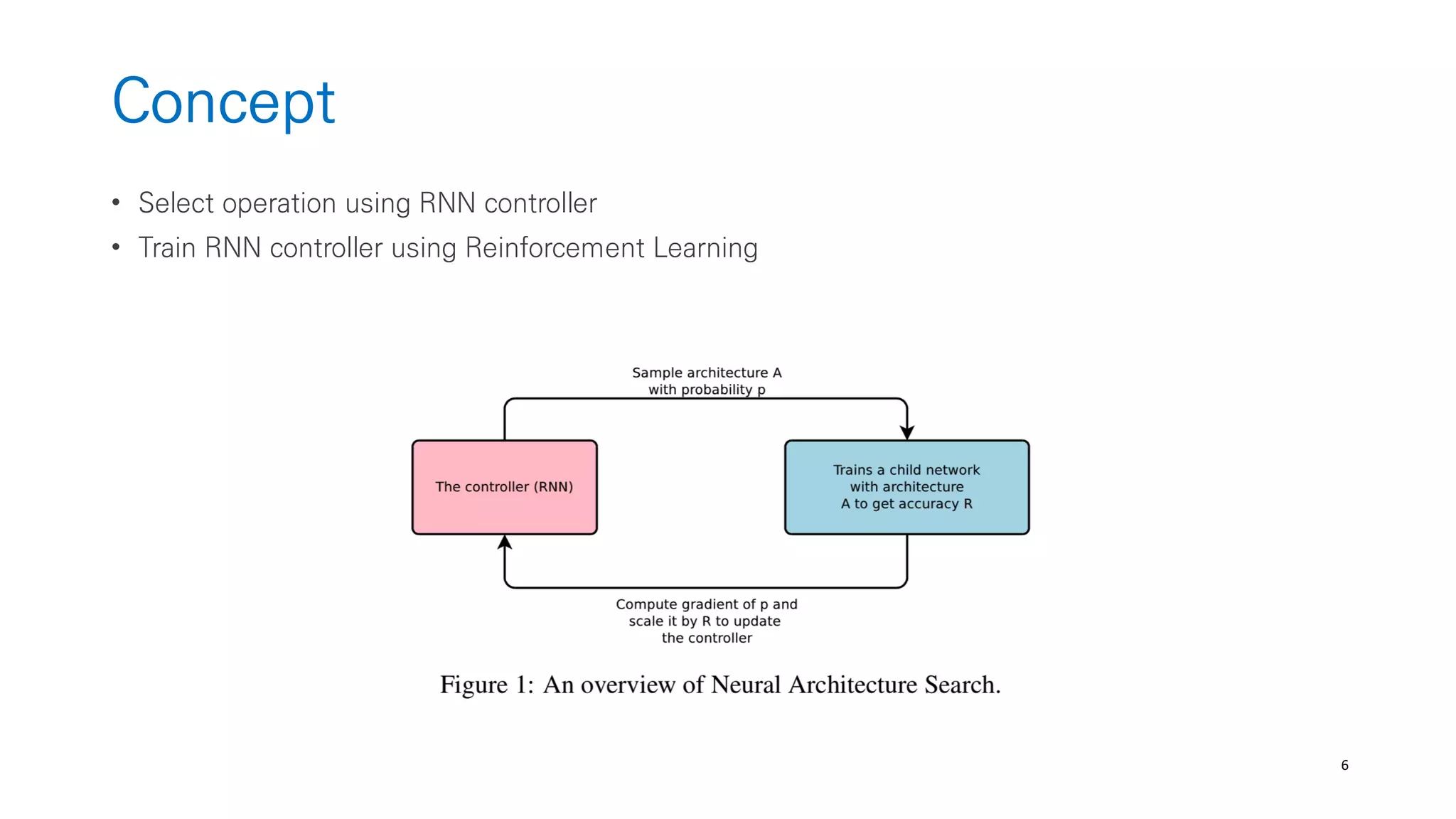

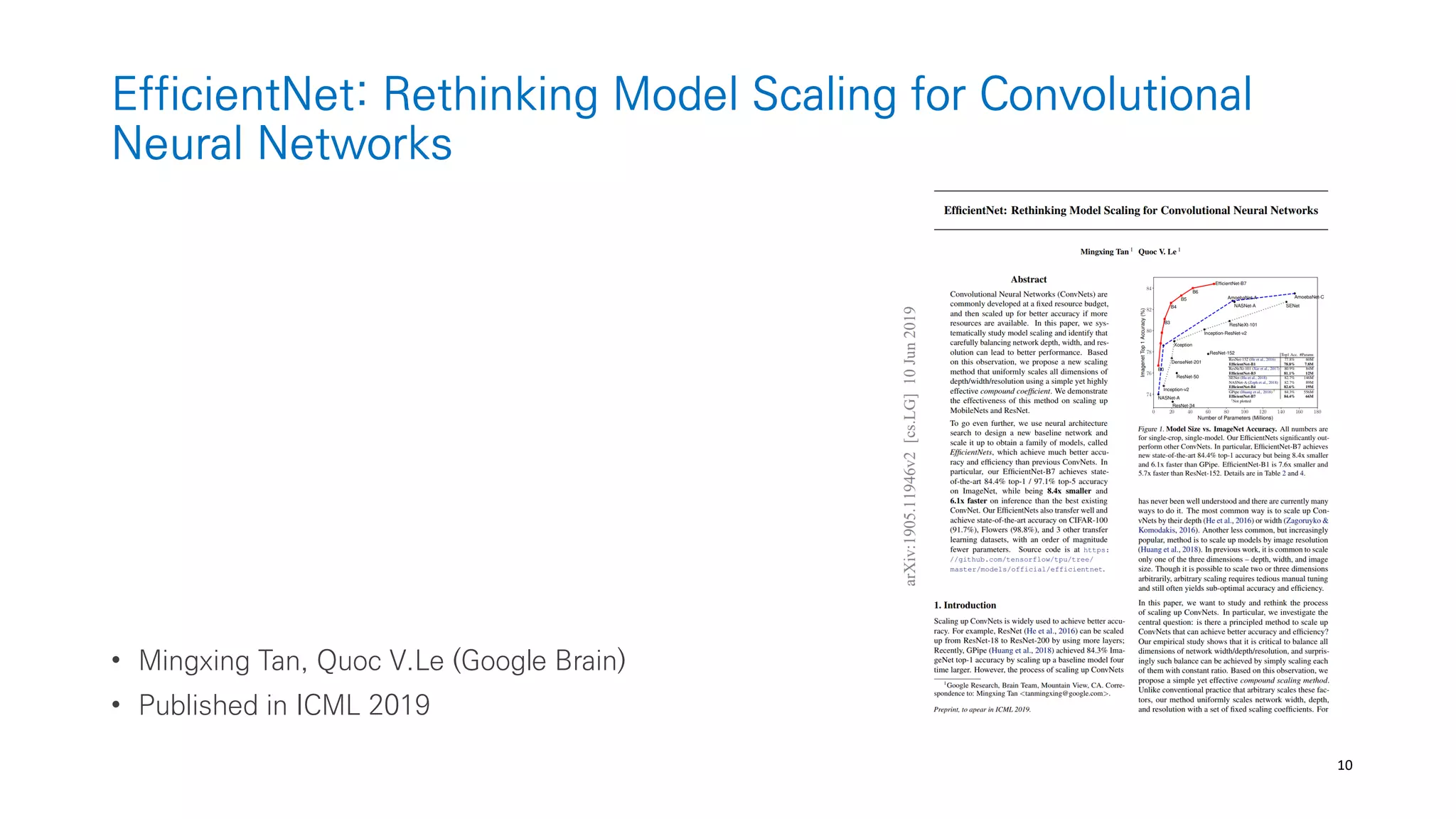

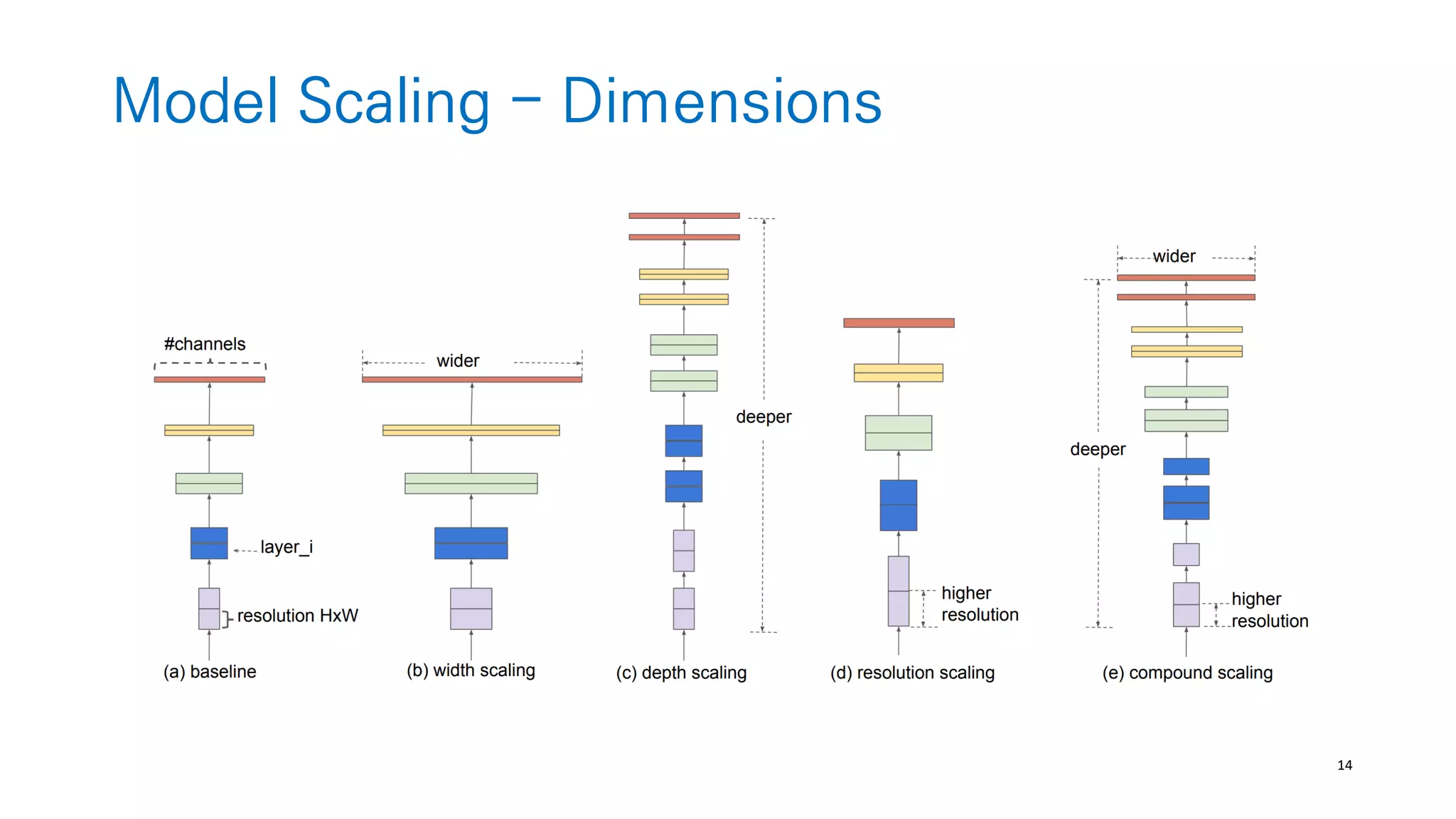

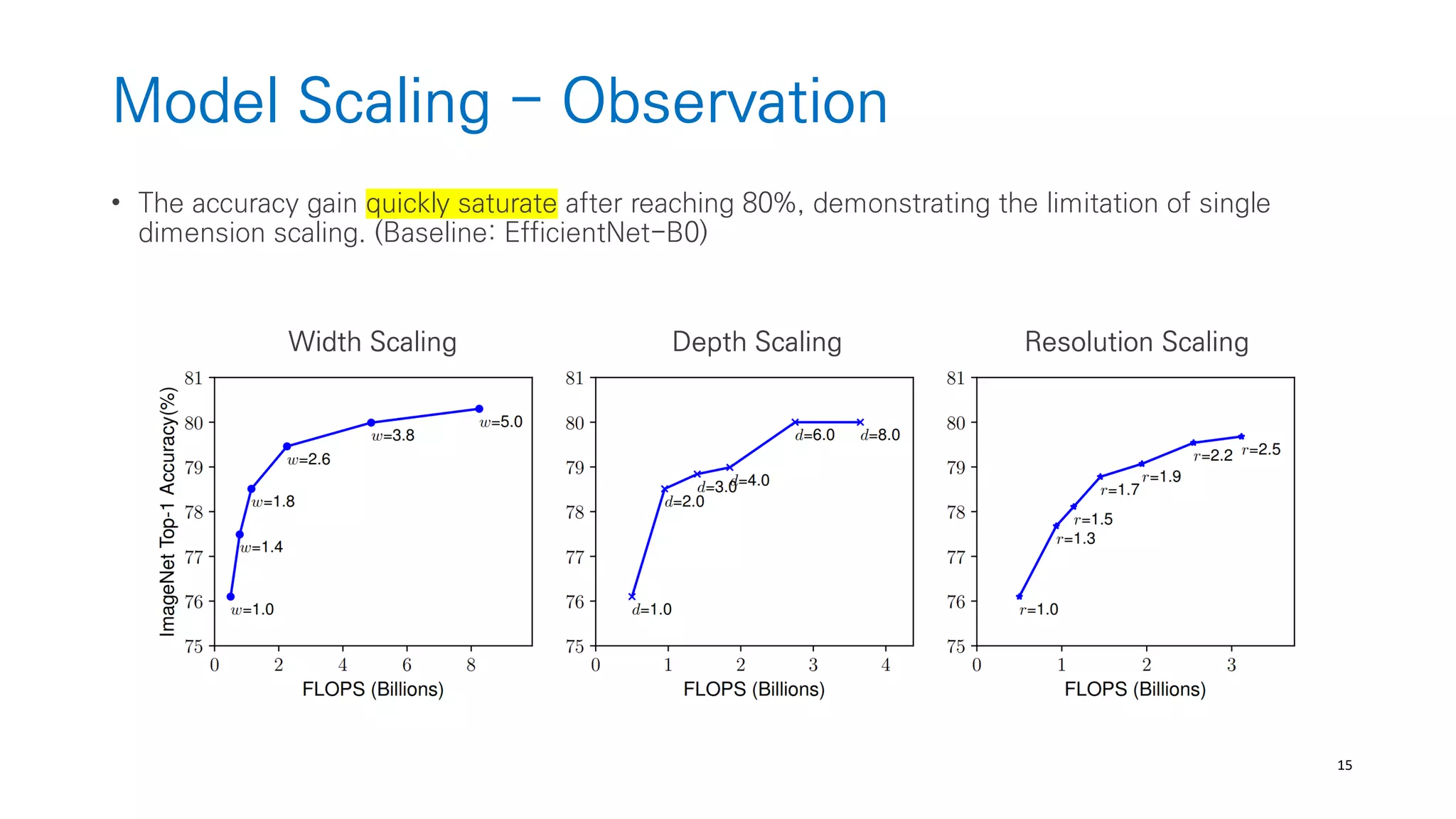

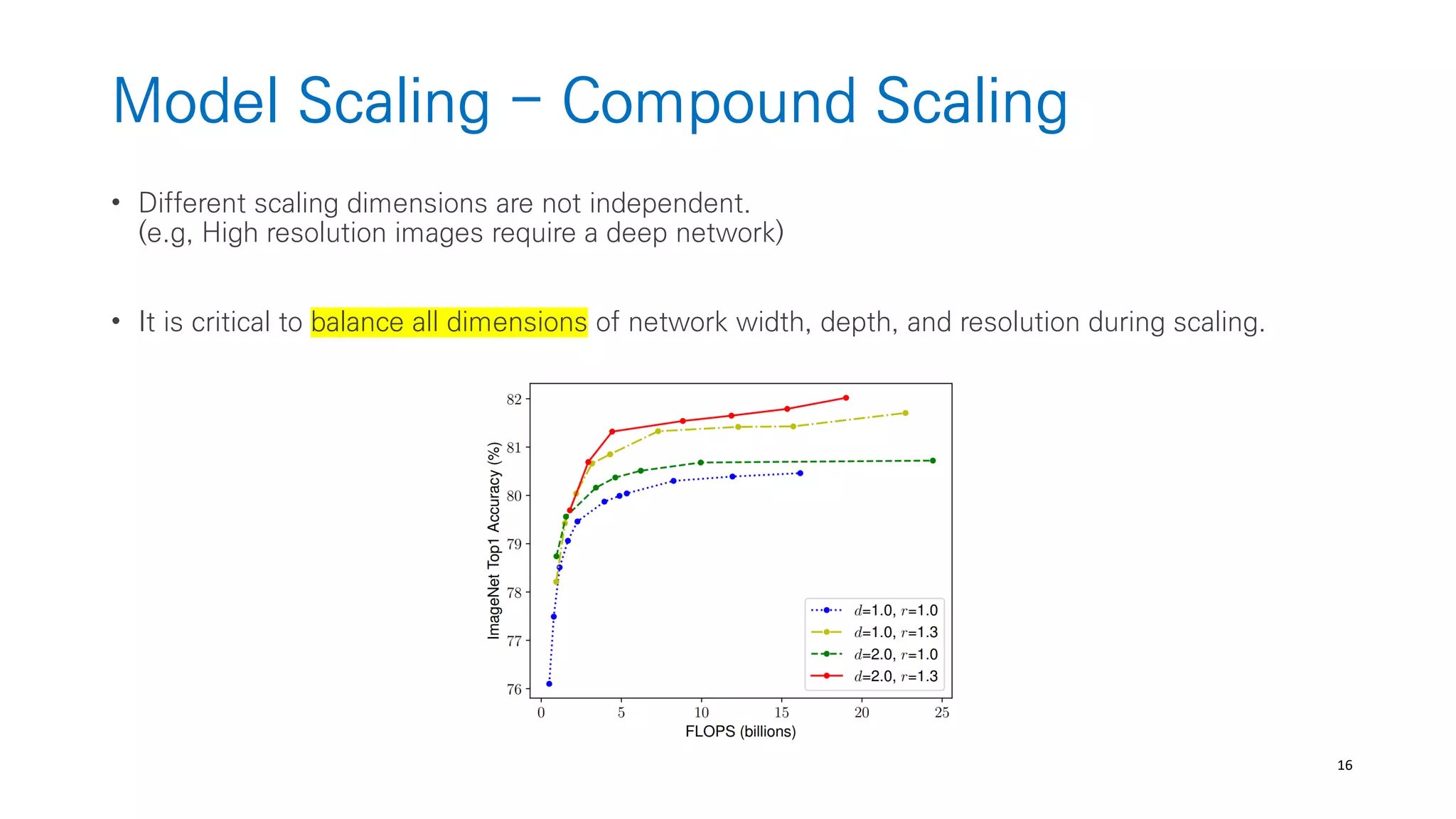

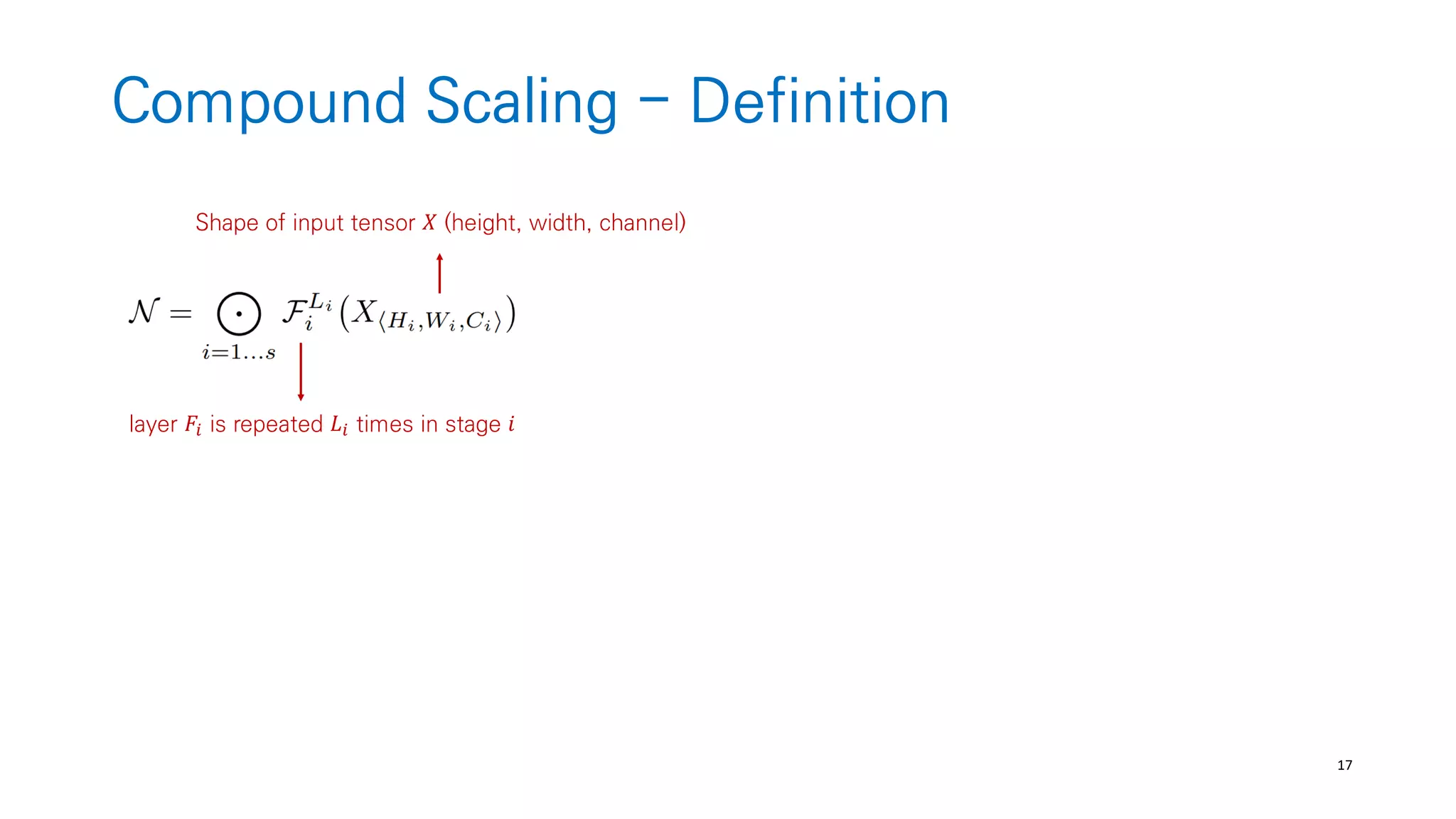

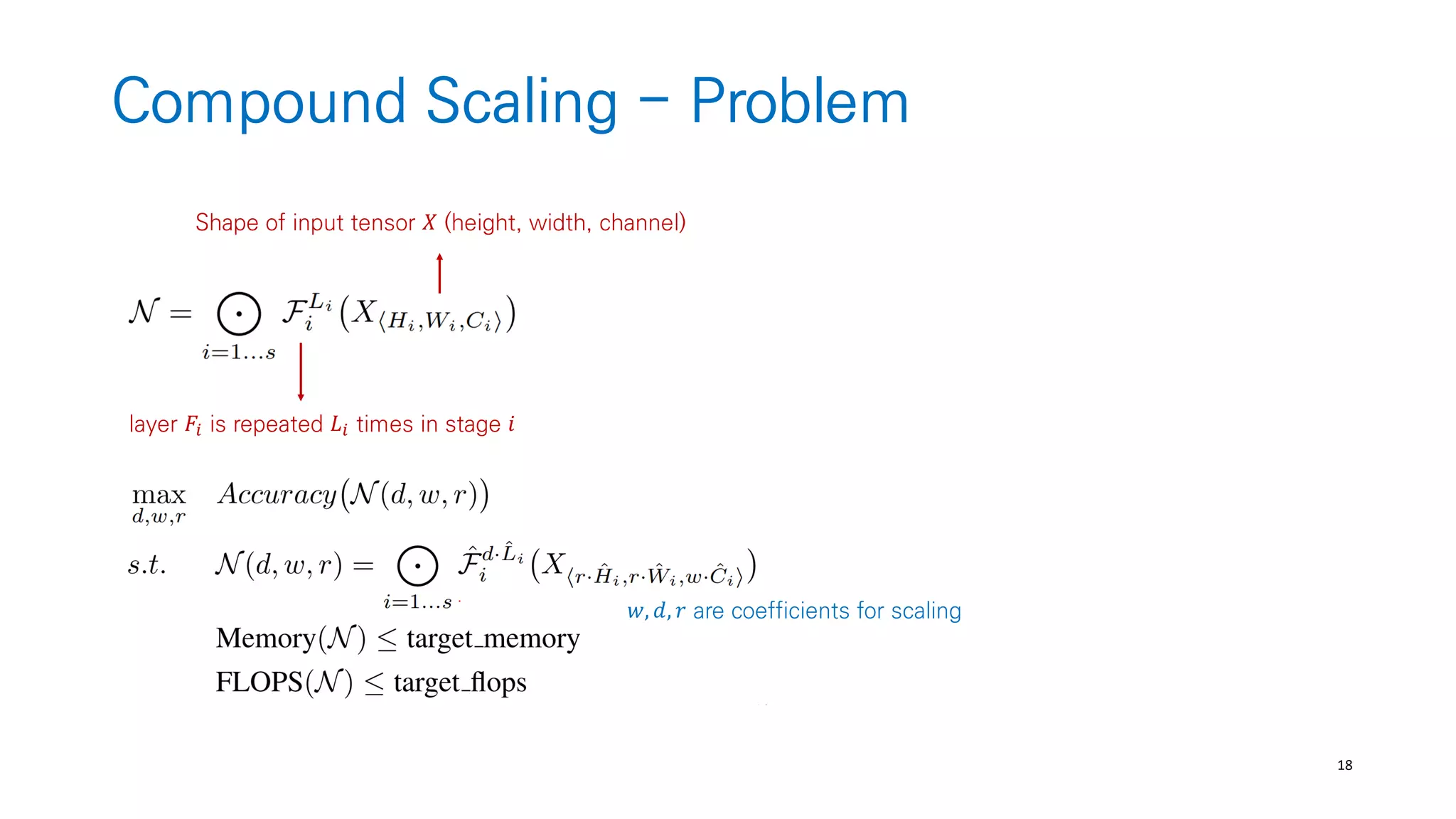

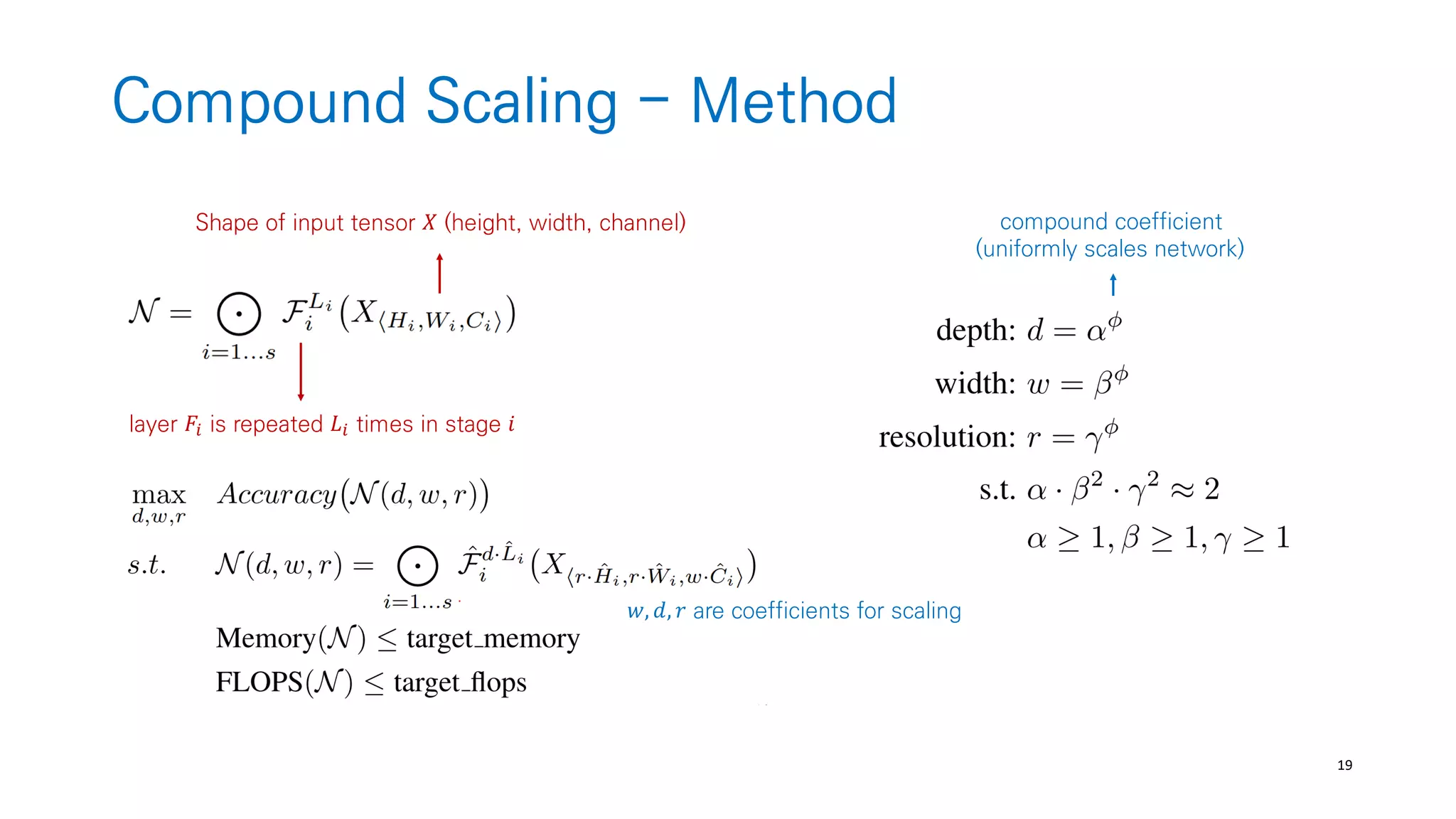

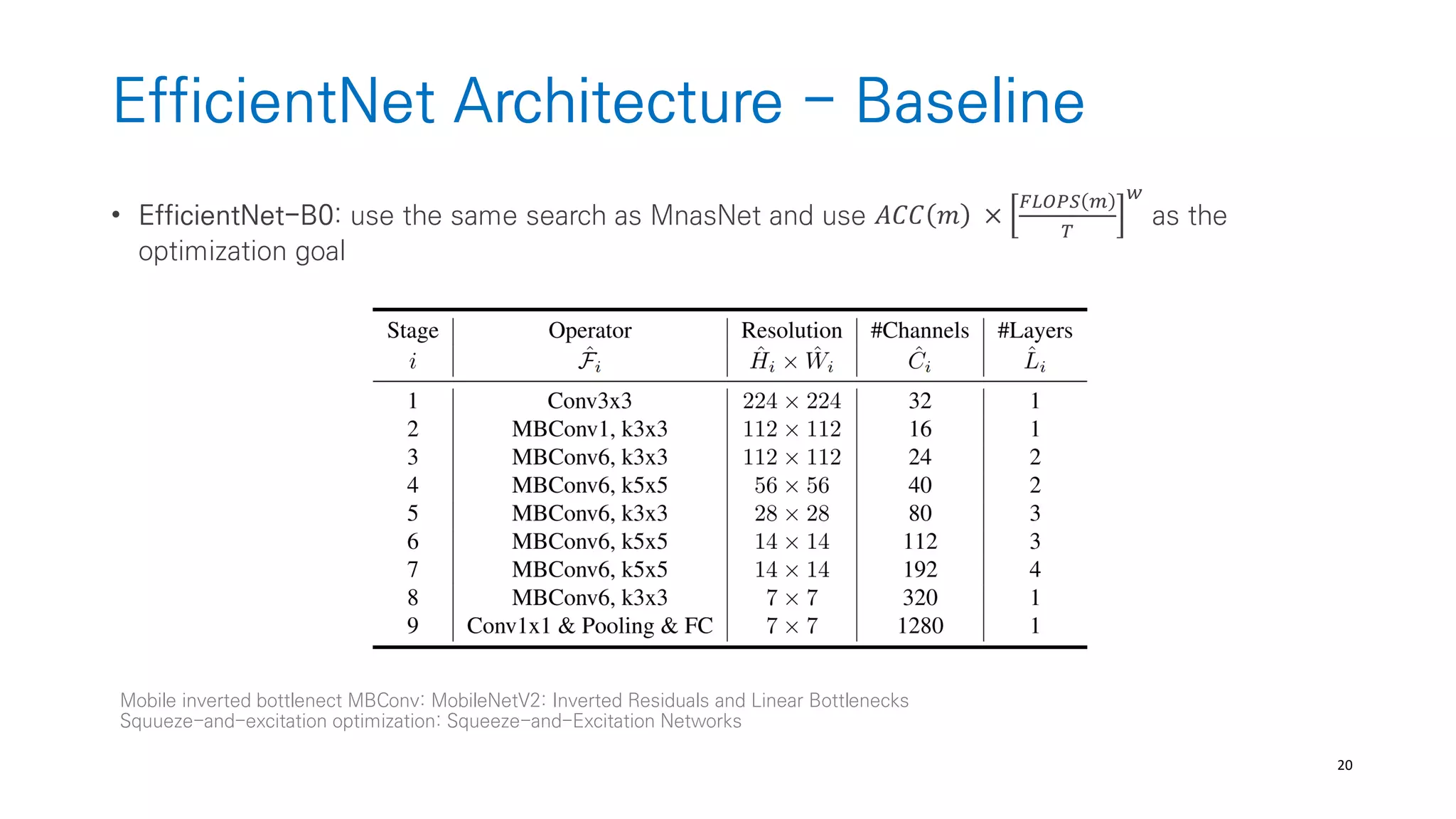

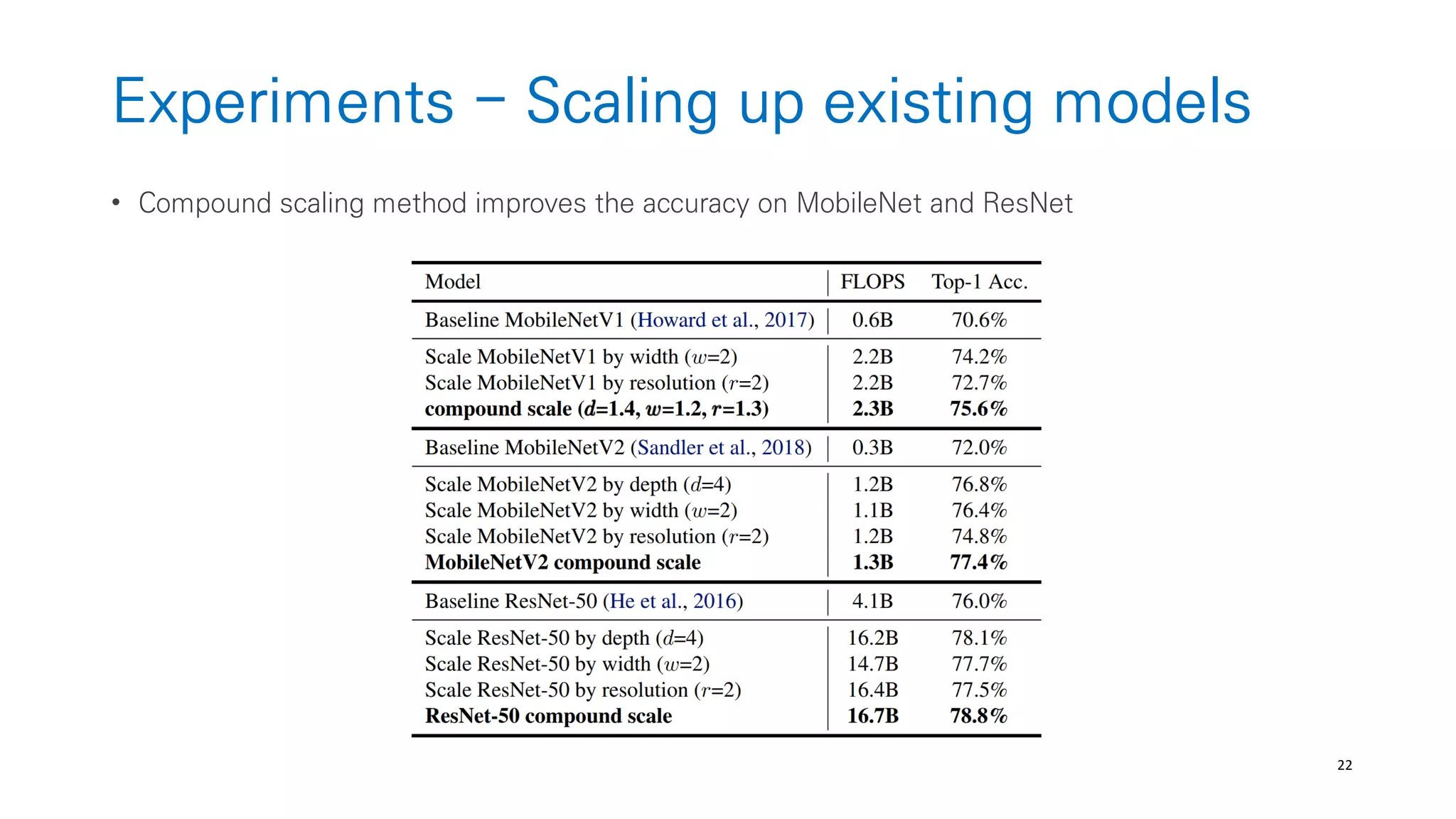

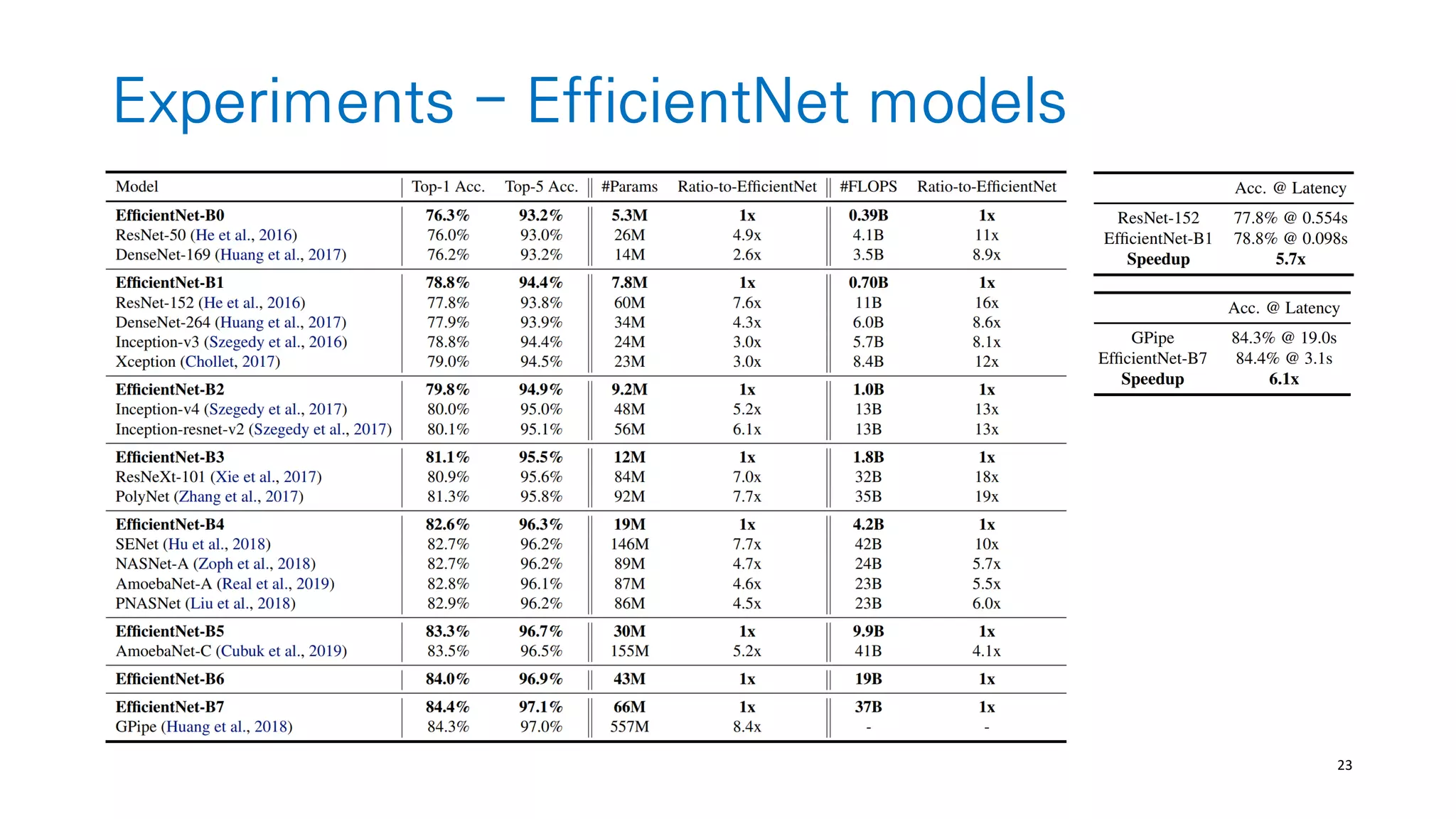

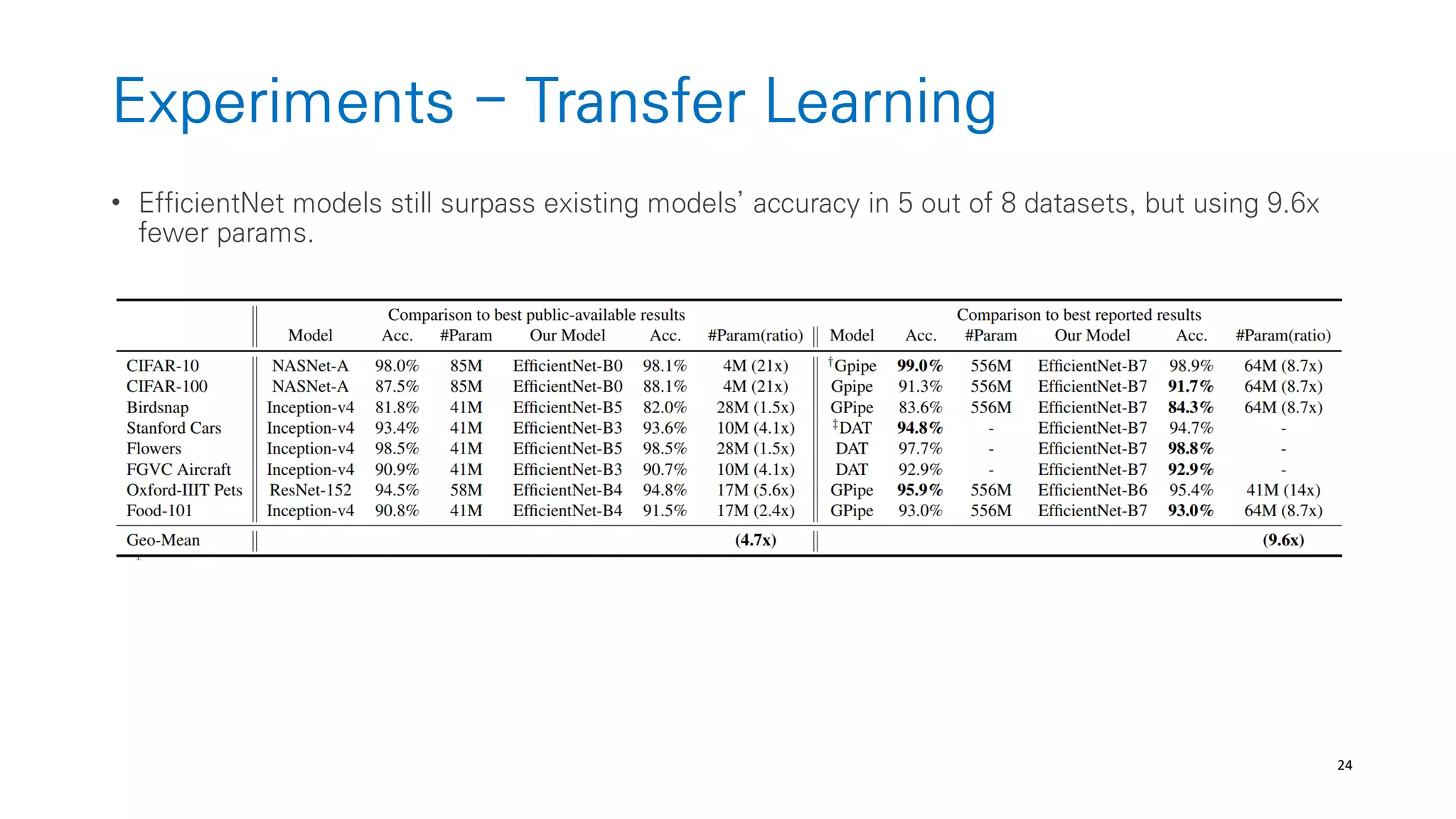

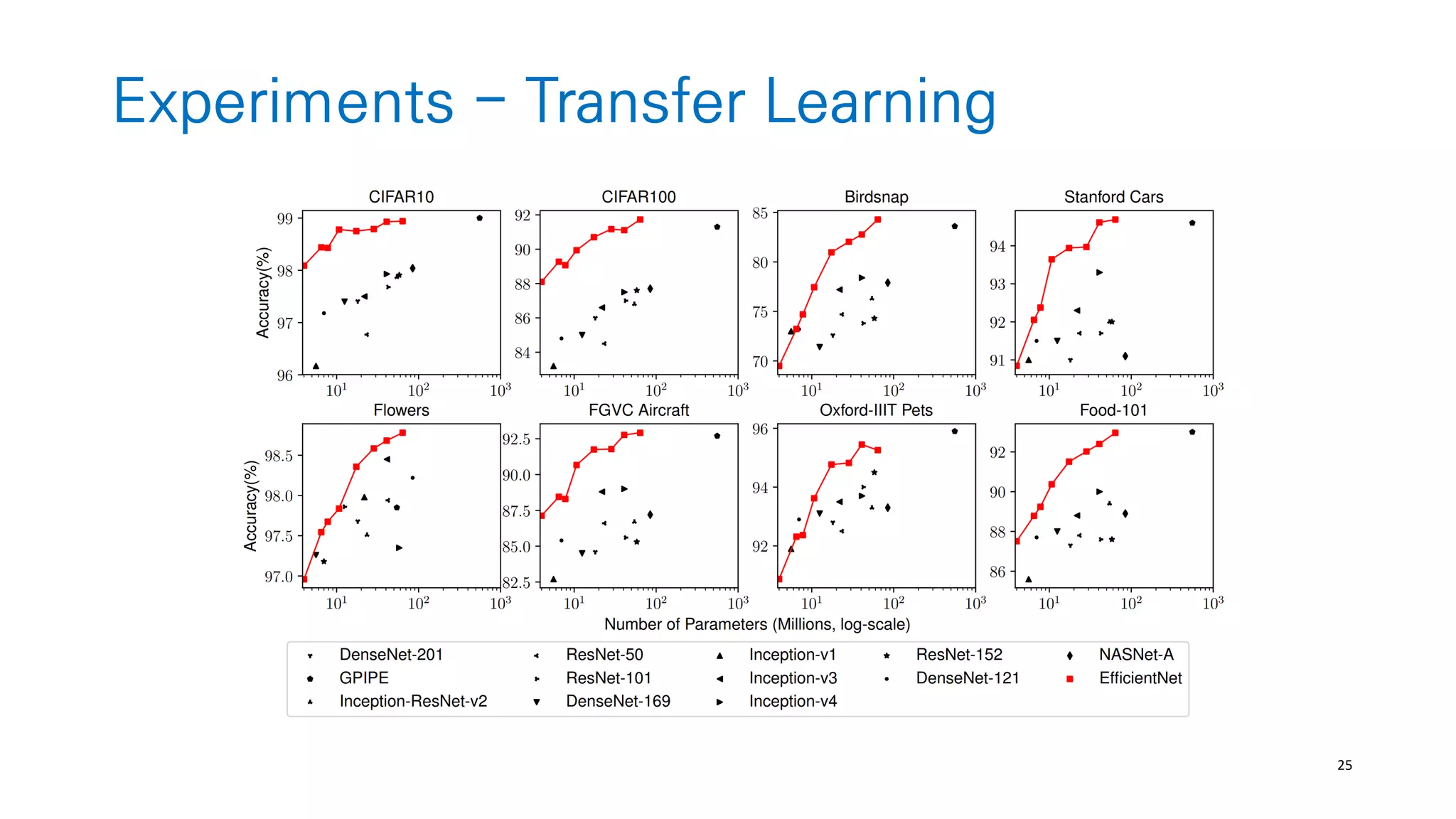

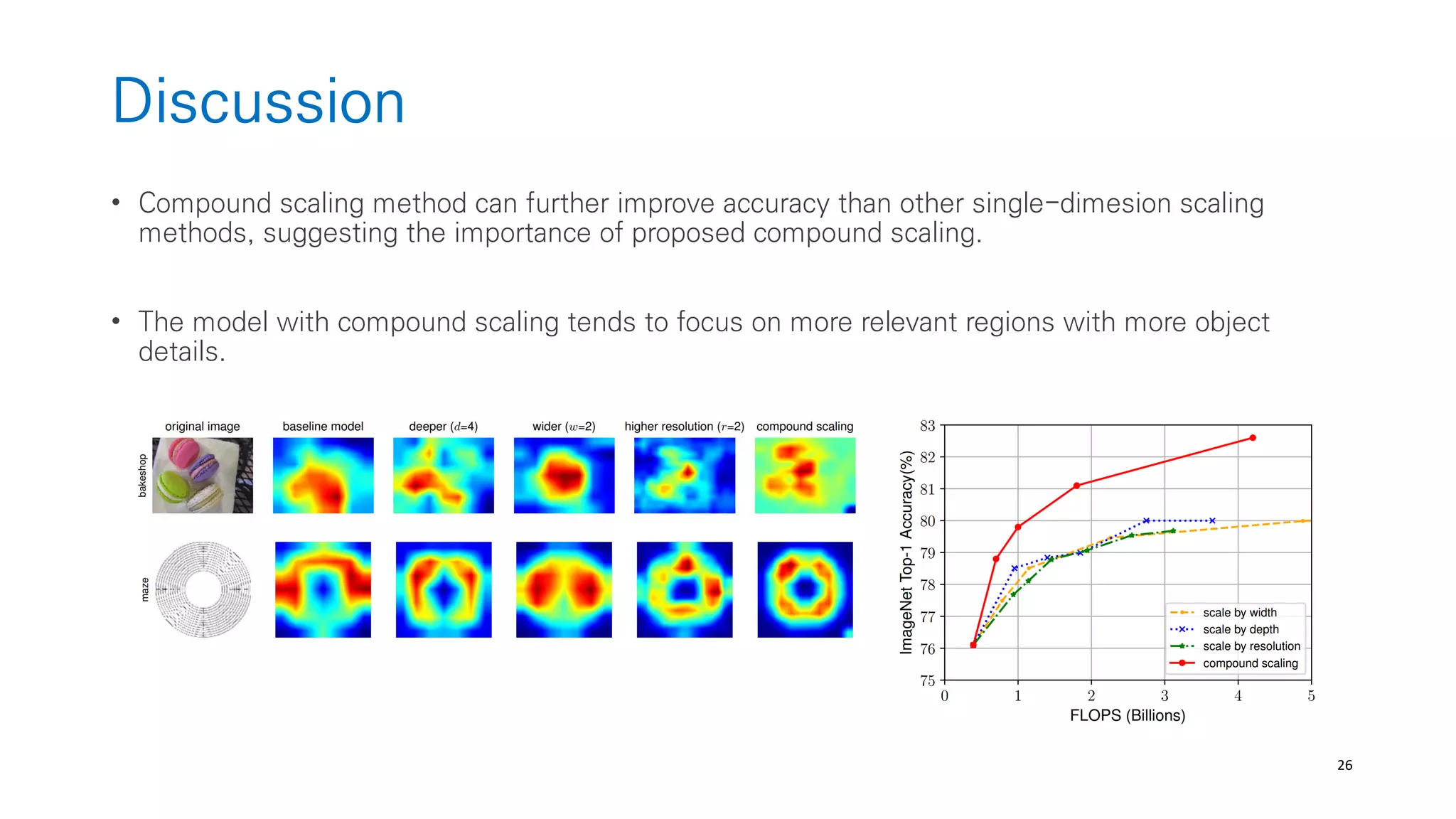

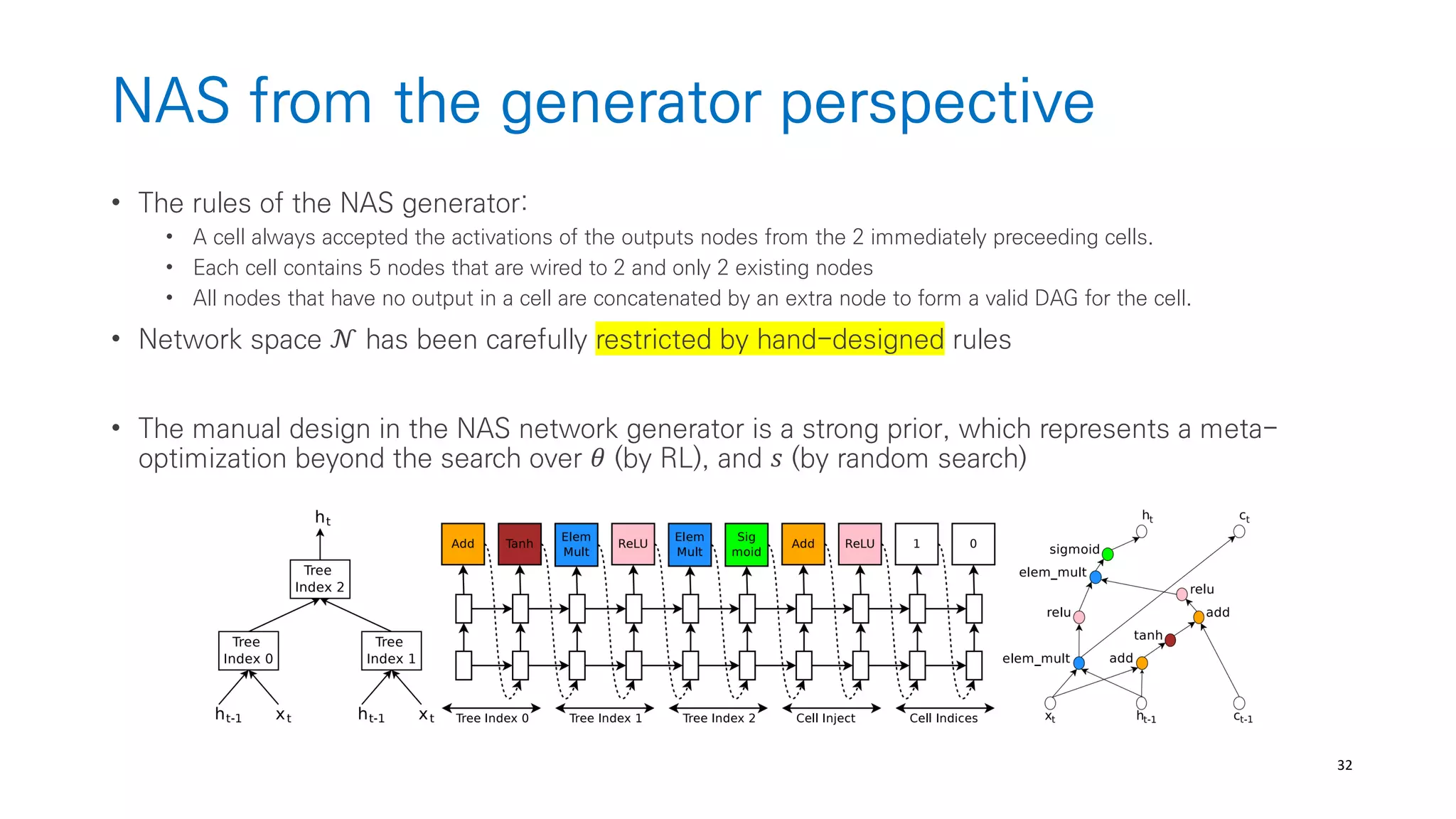

The document discusses AutoML and Neural Architecture Search (NAS) and focuses on two approaches to image recognition: EfficientNet and randomly wired neural networks. EfficientNet uses compound scaling, which grows network depth, width, and input resolution together, to reach higher accuracy with fewer parameters. The work on randomly wired networks instead generates network structures from random graph models and asks whether such designs can perform well. Overall, the text stresses that the way candidate networks are generated plays a central role in neural architecture search.
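Compound scaling can be illustrated with a few lines of arithmetic. A single coefficient φ scales depth by α^φ, width by β^φ, and input resolution by γ^φ, with α·β²·γ² ≈ 2 so that FLOPs roughly double per unit of φ. The constants α = 1.2, β = 1.1, γ = 1.15 are the values reported for EfficientNet; the function names and the base resolution of 224 are assumptions for this sketch, and the released B1 to B7 models round and adjust these numbers, so the output is illustrative only.

```python
# Constants reported for EfficientNet, chosen so that alpha * beta**2 * gamma**2 is about 2.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi, base_depth=1.0, base_width=1.0, base_resolution=224):
    """Return (depth multiplier, width multiplier, input resolution) for coefficient phi."""
    depth = base_depth * ALPHA ** phi
    width = base_width * BETA ** phi
    resolution = int(round(base_resolution * GAMMA ** phi))
    return depth, width, resolution


def flops_factor(phi):
    # FLOPs grow roughly with depth * width^2 * resolution^2, i.e. about 2**phi.
    return (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi


for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution {r}, FLOPs x{flops_factor(phi):.2f}")
```

The point of the single coefficient is that one knob trades compute for accuracy, instead of tuning depth, width, and resolution independently.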

![33

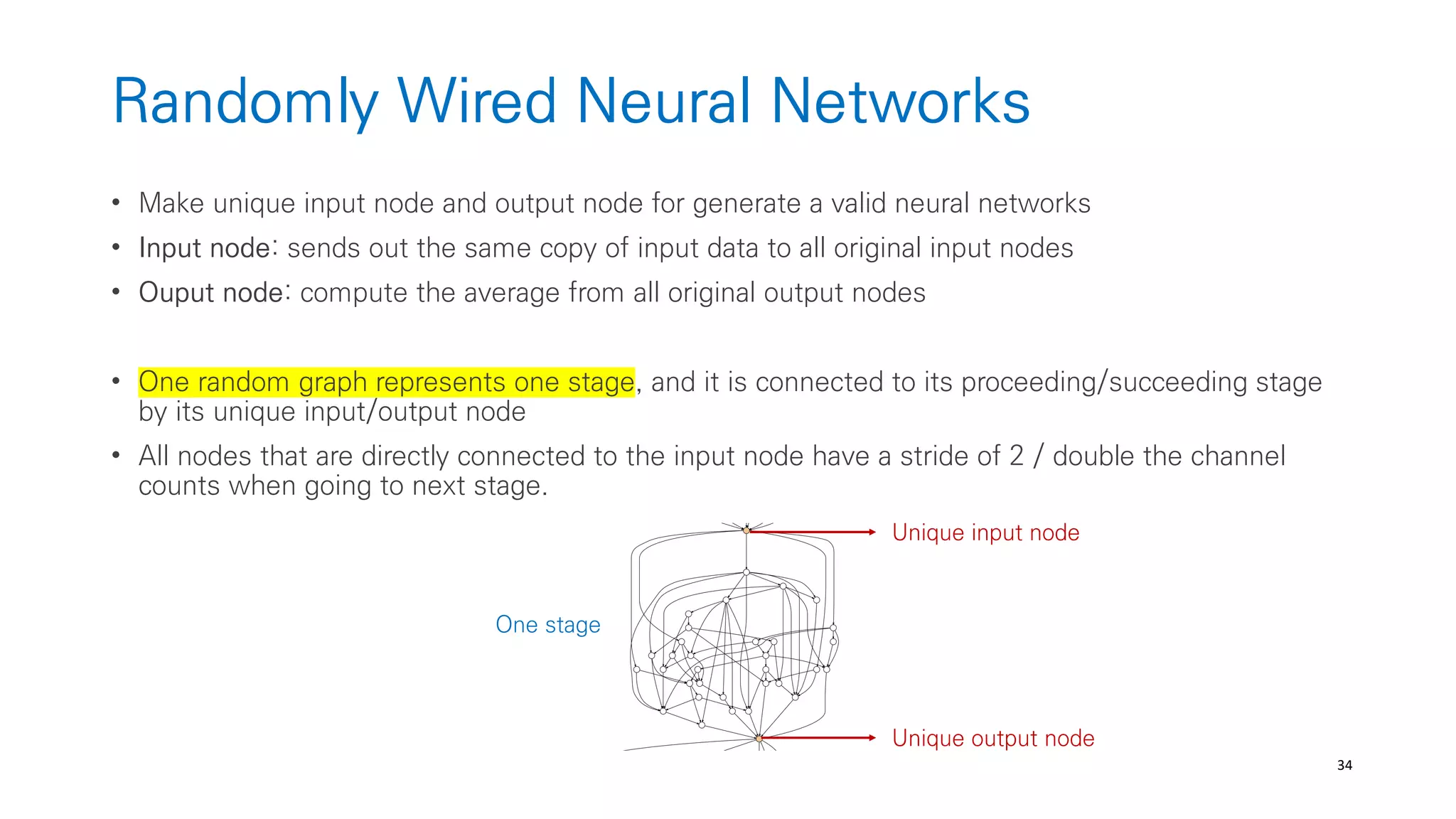

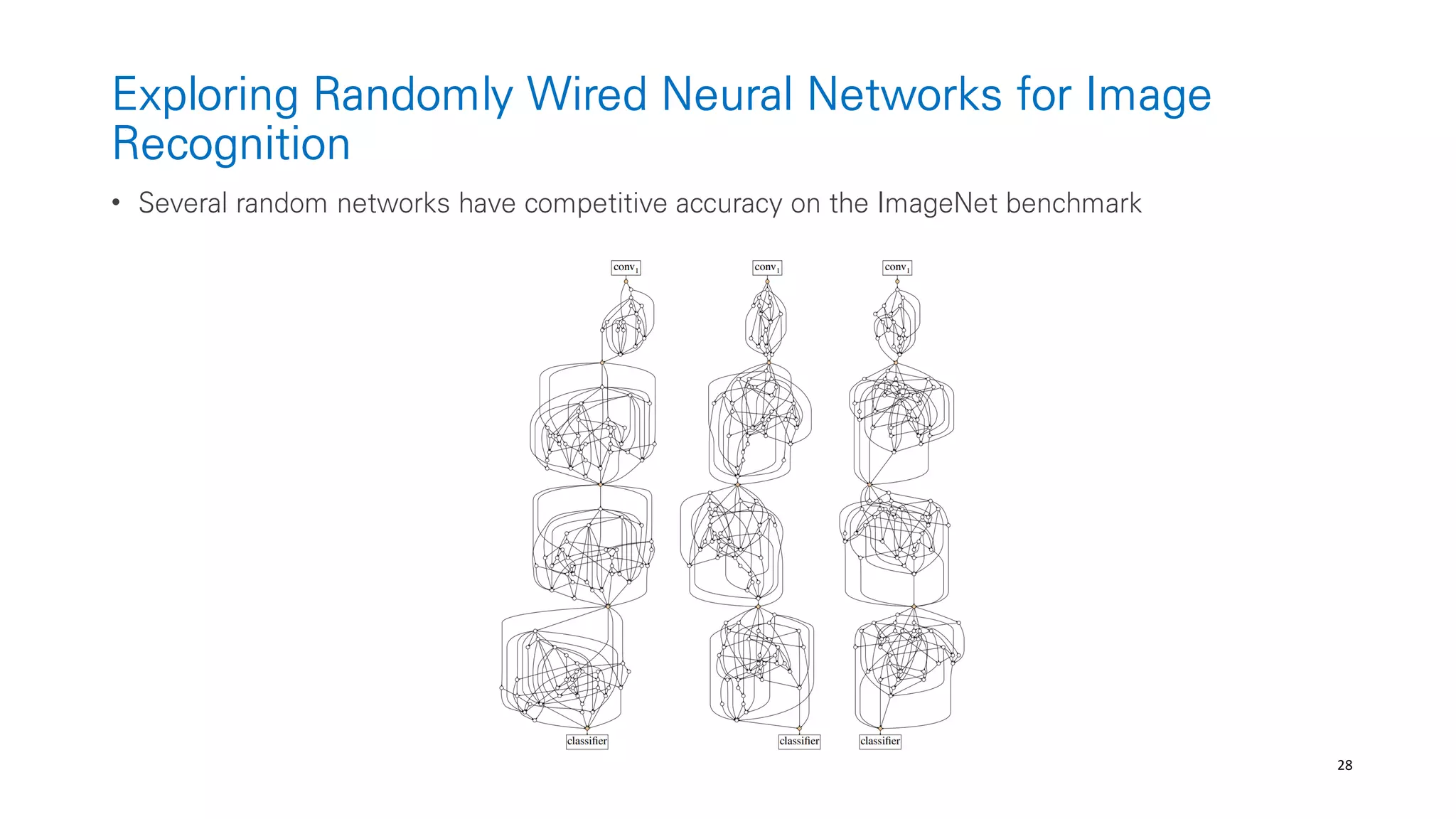

Randomly Wired Neural Networks

• Generate a general graphs w.o restricting how the graphs correspond to neural-networks

(from graph theory like ER, BA, WS)

• The edges are data flow (send data from one node to another node)

• Node operation

Aggregation: The input data are combied via a weighted sum; The weights are positive

Transformation: The aggregated data is processed by [ReLU-convolution-BN]

All nodes have same type of convolution!

Distribtuion: The same copy of the transformed data is sent out to other nodes.](https://image.slidesharecdn.com/201907automlandneuralarchitecturesearch-190705085959/75/201907-AutoML-and-Neural-Architecture-Search-33-2048.jpg)
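A rough sketch of this pipeline using only the Python standard library: generate a Watts–Strogatz graph, direct every edge from the lower to the higher node index to obtain a DAG, then push a single scalar through the three node operations. Several details are assumptions rather than taken from the slide: the index-based DAG conversion, the extra input and output nodes (the output averaging the nodes that have no successors), the sigmoid used to keep aggregation weights positive, and the scalar stand-in for the ReLU-convolution-BN triplet. The function names and the values n=32, k=4, p=0.75 are hypothetical and chosen for illustration. A real network operates on feature maps with learned convolutions.

```python
import math
import random


def watts_strogatz(n, k, p, seed=None):
    """Undirected WS(n, k, p) graph as a set of (u, v) pairs with u < v.

    Start from a ring lattice where each node links to its k/2 nearest
    neighbours on each side, then rewire each edge with probability p.
    Assumes even k and k < n.
    """
    rng = random.Random(seed)
    edges = set()
    for u in range(n):
        for j in range(1, k // 2 + 1):
            v = (u + j) % n
            edges.add((min(u, v), max(u, v)))
    for u in range(n):
        for j in range(1, k // 2 + 1):
            v = (u + j) % n
            e = (min(u, v), max(u, v))
            if e in edges and rng.random() < p:
                # New endpoint must avoid self-loops and duplicate edges.
                choices = [w for w in range(n)
                           if w != u and (min(u, w), max(u, w)) not in edges]
                if choices:
                    w = rng.choice(choices)
                    edges.remove(e)
                    edges.add((min(u, w), max(u, w)))
    return edges


def to_dag(n, edges):
    """Direct each edge from the smaller to the larger index (so no cycles)
    and list the nodes with no inputs and the nodes with no outputs."""
    preds = {v: [] for v in range(n)}
    has_succ = set()
    for u, v in sorted(edges):
        preds[v].append(u)
        has_succ.add(u)
    inputs = [v for v in range(n) if not preds[v]]
    outputs = [v for v in range(n) if v not in has_succ]
    return preds, inputs, outputs


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(n, preds, outputs, x, seed=0):
    """Run one scalar value through the DAG using the three node operations."""
    rng = random.Random(seed)
    # Hypothetical parameters: one aggregation logit per edge, one "conv" weight per node.
    logits = {(u, v): rng.gauss(0.0, 1.0) for v in range(n) for u in preds[v]}
    conv = {v: rng.uniform(0.5, 1.5) for v in range(n)}
    out = {}
    for v in range(n):  # increasing index is a valid topological order
        if preds[v]:
            # Aggregation: weighted sum; the sigmoid keeps every weight positive.
            agg = sum(sigmoid(logits[(u, v)]) * out[u] for u in preds[v])
        else:
            agg = x  # nodes without inputs read from the extra input node
        # Transformation: scalar stand-in for ReLU -> conv -> BN (BN omitted).
        out[v] = conv[v] * max(0.0, agg)
        # Distribution: out[v] is the single value that every successor reads.
    # Extra output node: unweighted average of the nodes without outputs.
    return sum(out[v] for v in outputs) / len(outputs)


edges = watts_strogatz(n=32, k=4, p=0.75, seed=0)
preds, inputs, outputs = to_dag(32, edges)
print(len(edges), inputs, outputs, forward(32, preds, outputs, x=1.0))
```

Fixing the seed fixes the wiring; a different seed samples a different network from the same generator, which is the sense in which these networks are "randomly wired". Swapping `watts_strogatz` for an ER or BA generator would leave `to_dag` and `forward` unchanged.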