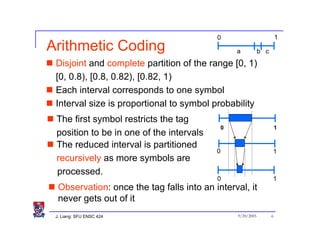

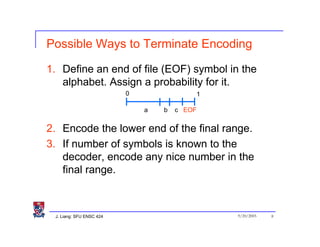

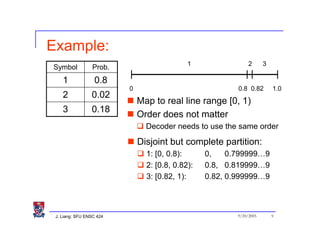

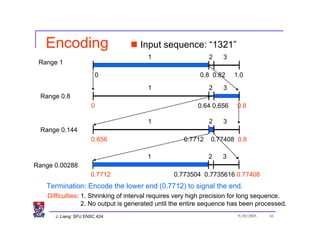

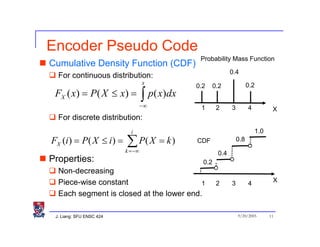

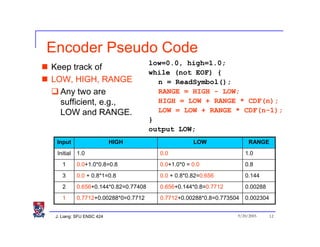

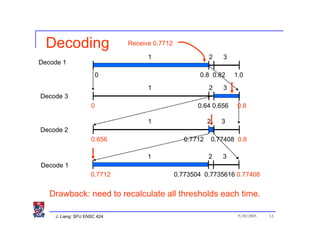

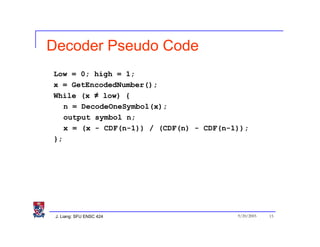

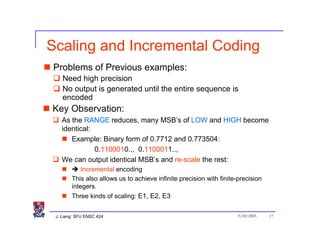

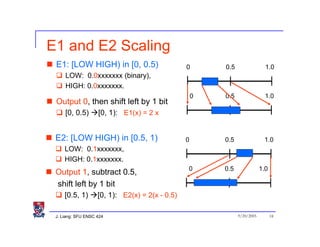

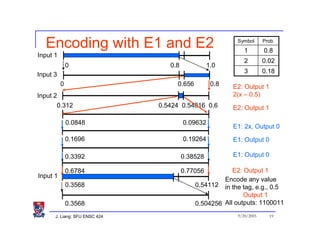

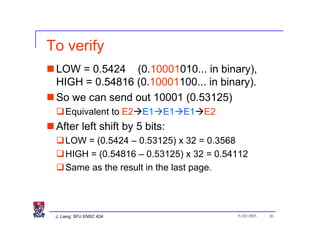

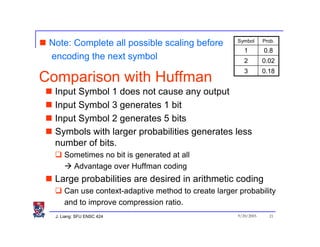

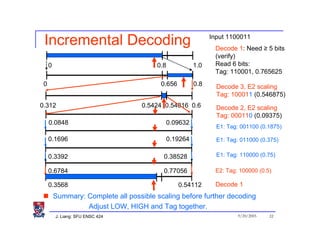

This document discusses arithmetic coding, an entropy encoding technique. It begins with an introduction comparing arithmetic coding to Huffman coding, then provides pseudocode for the basic encoding and decoding algorithms. It describes how scaling techniques such as E1 and E2 scaling allow incremental encoding and decoding, and how they emulate unlimited precision using finite-precision integers. The document also outlines applications of arithmetic coding in JBIG, H.264, and JPEG 2000.

![Introduction

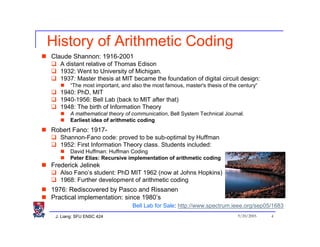

Recall table look-up decoding of Huffman code

N: alphabet size

1

L: Max codeword length

00

Divide [0, 2^L] into N intervals

One interval for one symbol

010 011

Interval size is roughly

proportional to symbol prob. 000 010 011 100

Arithmetic coding applies this idea recursively

Normalizes the range [0, 2^L] to [0, 1].

Map an input sequence to a unique tag in [0, 1).

abcd…..

dcba….. 0 1

J. Liang: SFU ENSC 424 9/20/2005 5](https://image.slidesharecdn.com/06arithmetic1-100208224421-phpapp02/85/06-Arithmetic-1-5-320.jpg)
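The two ideas on this slide can be illustrated with a minimal Python sketch. It is not taken from the slides. It assumes a hypothetical four-symbol alphabet `a`, `b`, `c`, `d` assigned to the codewords 1, 00, 010, 011 shown in the figure, and assumed probabilities 1/2, 1/4, 1/8, 1/8. The function names `build_table`, `huffman_decode`, and `tag_interval` are illustrative only. The first part fills a table of size 2^L so that each symbol owns an interval of indices. The second part applies the same subdivision recursively on [0, 1) to obtain the interval that contains the tag of a sequence.

```python
from fractions import Fraction

# Assumed symbol-to-codeword assignment (the slide shows only the codewords).
CODE = {"a": "1", "b": "00", "c": "010", "d": "011"}
# Assumed probabilities, chosen to match the codeword lengths.
PROBS = {"a": Fraction(1, 2), "b": Fraction(1, 4),
         "c": Fraction(1, 8), "d": Fraction(1, 8)}


def build_table(code):
    """Divide the index range 0 .. 2^L - 1 into one interval per symbol."""
    L = max(len(cw) for cw in code.values())
    table = [None] * (1 << L)
    for sym, cw in code.items():
        span = 1 << (L - len(cw))      # interval size, about prob * 2^L
        start = int(cw, 2) * span      # codeword padded with zeros
        for i in range(start, start + span):
            table[i] = (sym, len(cw))
    return table, L


def huffman_decode(bits, code):
    """Decode by reading L bits at a time and looking them up in the table."""
    table, L = build_table(code)
    out, pos = [], 0
    while pos < len(bits):
        window = bits[pos:pos + L].ljust(L, "0")  # pad the final window
        sym, length = table[int(window, 2)]
        out.append(sym)
        pos += length                  # consume only the codeword's bits
    return "".join(out)


def tag_interval(seq, probs):
    """Recursively subdivide [0, 1); return the final interval [low, high)."""
    low, width = Fraction(0), Fraction(1)
    for s in seq:
        cum = Fraction(0)              # total probability of earlier symbols
        for sym, p in probs.items():
            if sym == s:
                break
            cum += p
        low += width * cum             # move to the sub-interval of s
        width *= probs[s]              # shrink by the probability of s
    return low, low + width


print(huffman_decode("100010011", CODE))
print(tag_interval("abcd", PROBS))
print(tag_interval("dcba", PROBS))
```

Under these assumptions, `abcd` and `dcba` end up in disjoint sub-intervals of [0, 1), so any number inside one of them identifies its sequence uniquely. Exact fractions are used here only to keep the arithmetic transparent; a practical coder works with finite-precision integers and scaling.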