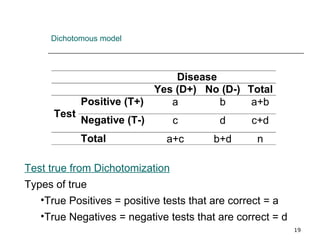

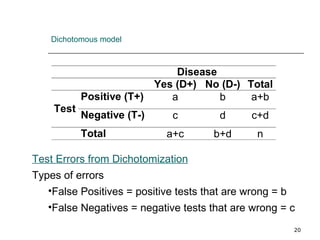

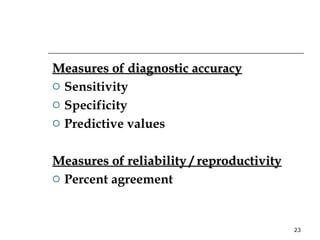

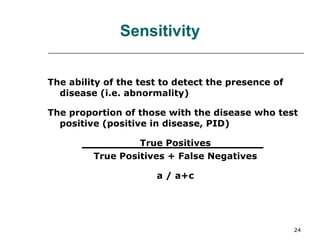

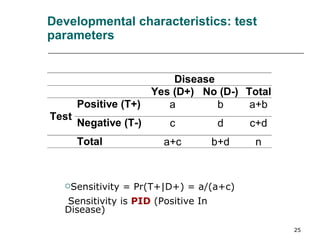

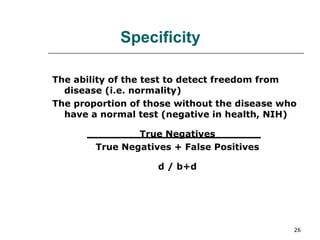

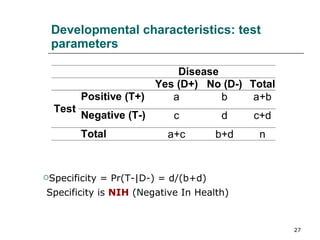

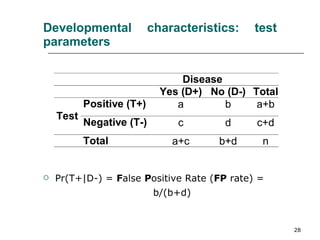

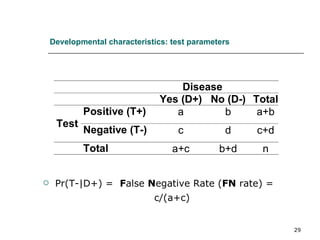

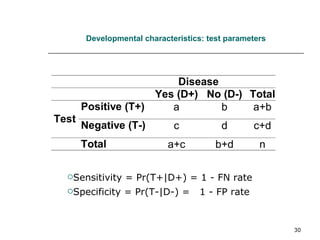

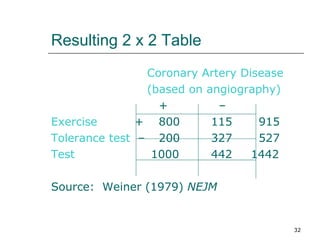

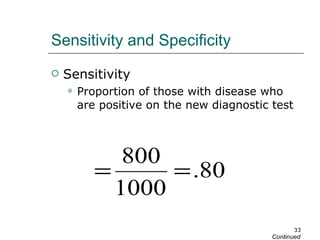

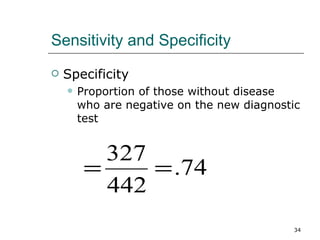

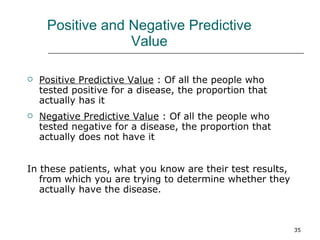

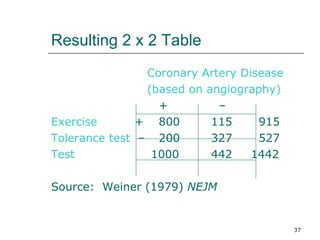

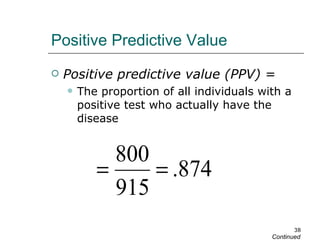

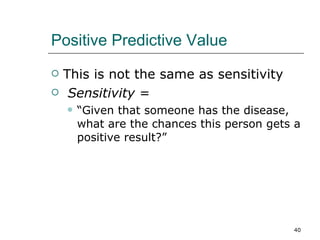

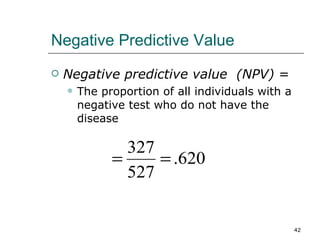

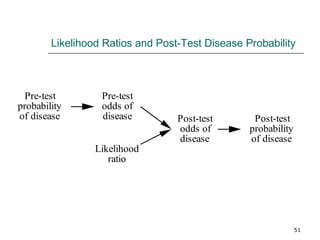

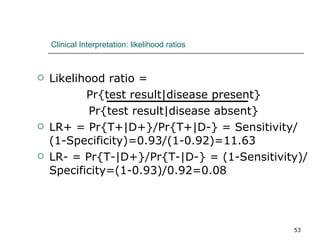

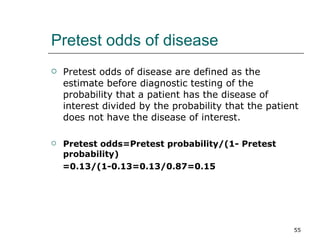

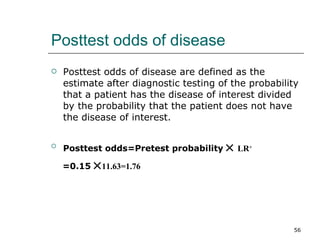

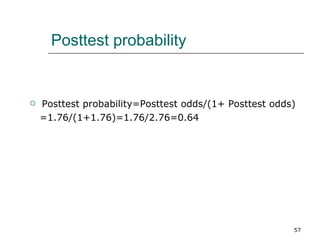

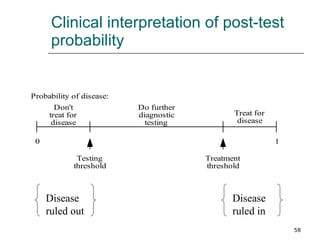

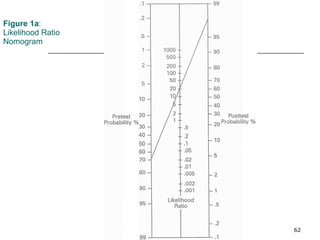

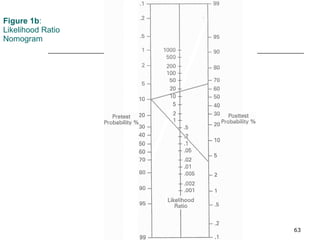

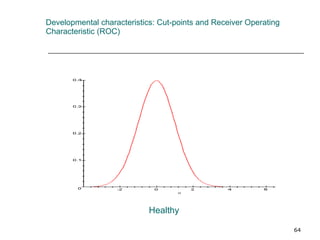

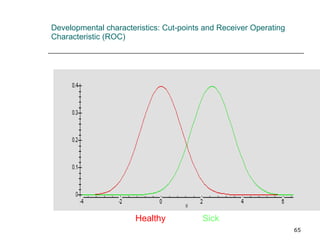

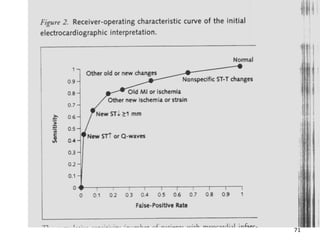

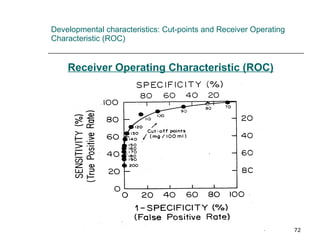

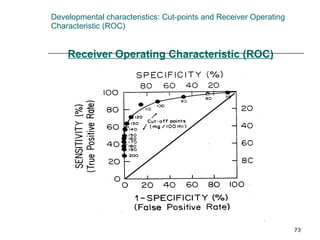

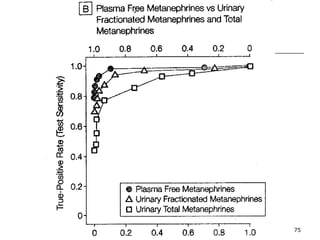

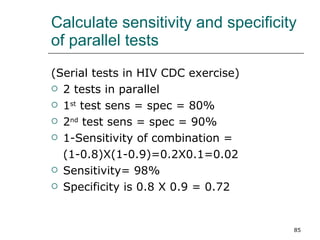

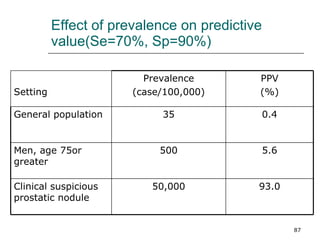

This document gives an overview of diagnostic testing and of how to assess diagnostic accuracy. It defines the key measures: sensitivity, specificity, predictive values, and likelihood ratios. Sensitivity is the proportion of people with the disease whom the test correctly identifies as positive (true positives). Specificity is the proportion of people without the disease whom the test correctly identifies as negative (true negatives). Positive and negative predictive values give the probability that disease is actually present or absent given a test result, and they depend on disease prevalence in the tested population. Likelihood ratios quantify how much a test result shifts the odds of disease from pre-test to post-test. The document works through examples of calculating and interpreting these measures.
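
The measures named in the summary can all be derived from a 2x2 table of test result against true disease status. The following is a minimal Python sketch under stated assumptions: the class `TwoByTwo`, the functions `accuracy_measures` and `post_test_probability`, and the example counts are hypothetical illustrations and are not taken from the slides.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts from a 2x2 table (hypothetical helper, not from the slides)."""
    tp: int  # disease present, test positive
    fp: int  # disease absent,  test positive
    fn: int  # disease present, test negative
    tn: int  # disease absent,  test negative


def accuracy_measures(t: TwoByTwo) -> dict:
    """Compute the standard diagnostic accuracy measures from a 2x2 table."""
    sens = t.tp / (t.tp + t.fn)   # P(test+ | disease)
    spec = t.tn / (t.tn + t.fp)   # P(test- | no disease)
    ppv = t.tp / (t.tp + t.fp)    # P(disease | test+)
    npv = t.tn / (t.tn + t.fn)    # P(no disease | test-)
    # LR+ is undefined (infinite) when there are no false positives.
    lr_pos = sens / (1 - spec) if spec < 1 else math.inf
    # LR- is undefined (infinite) when there are no true negatives.
    lr_neg = (1 - sens) / spec if spec > 0 else math.inf
    prevalence = (t.tp + t.fn) / (t.tp + t.fp + t.fn + t.tn)
    return {
        "sensitivity": sens,
        "specificity": spec,
        "ppv": ppv,
        "npv": npv,
        "lr_pos": lr_pos,
        "lr_neg": lr_neg,
        "prevalence": prevalence,
    }


def post_test_probability(pre_test_prob: float, likelihood_ratio: float) -> float:
    """Convert a pre-test probability to a post-test probability via odds."""
    pre_odds = pre_test_prob / (1 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Made-up example: 1000 people, 100 with the disease.
table = TwoByTwo(tp=90, fp=50, fn=10, tn=850)
measures = accuracy_measures(table)
for name, value in measures.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, sensitivity is 0.90 and specificity is about 0.94, yet the positive predictive value is only about 0.64 because prevalence is 10%. Passing the prevalence and the positive likelihood ratio to `post_test_probability` reproduces that predictive value, which illustrates why predictive values depend on prevalence while sensitivity and specificity do not.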

![Diagnostic Testing Prof. Wei-Qing Chen MD PhD Department of Biostatistics and Epidemiology School of Public Health 87332199 [email_address]](https://image.slidesharecdn.com/05diagnostictestscwq-100703034350-phpapp02/75/05-diagnostic-tests-cwq-1-2048.jpg)