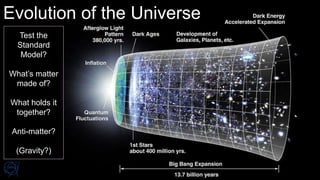

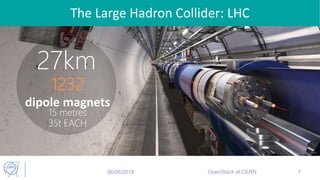

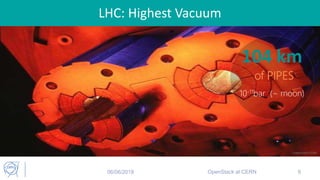

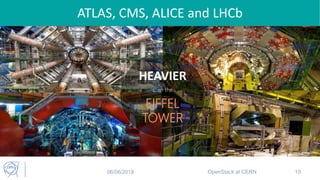

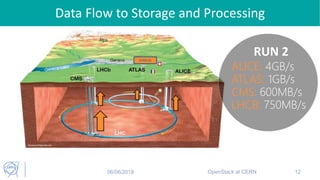

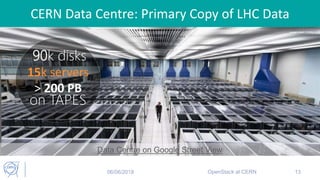

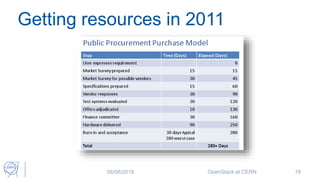

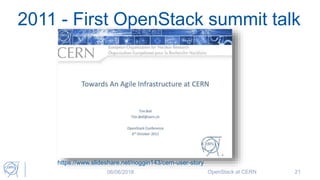

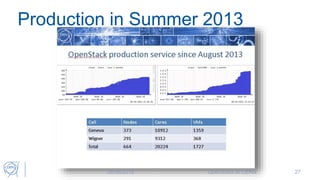

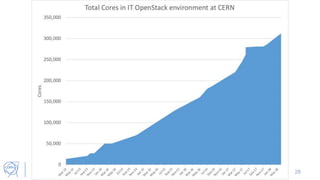

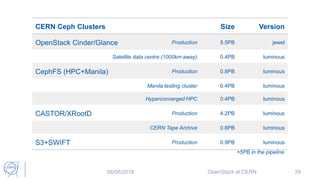

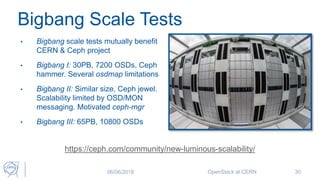

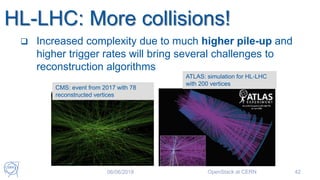

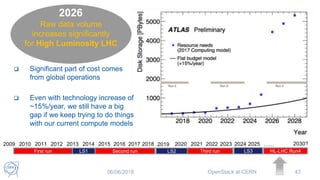

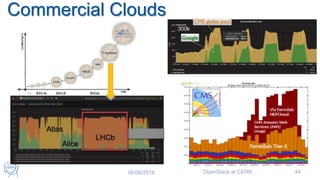

This document summarizes Tim Bell's presentation on OpenStack at CERN. It describes how CERN adopted OpenStack in 2011 to manage the growing computing infrastructure needed to process the massive data sets produced by the Large Hadron Collider. The deployment has since been scaled up to manage over 300,000 CPU cores and 500,000 physics jobs per day across CERN's private cloud. The document also briefly outlines CERN's use of other open-source technologies such as Ceph and Kubernetes.