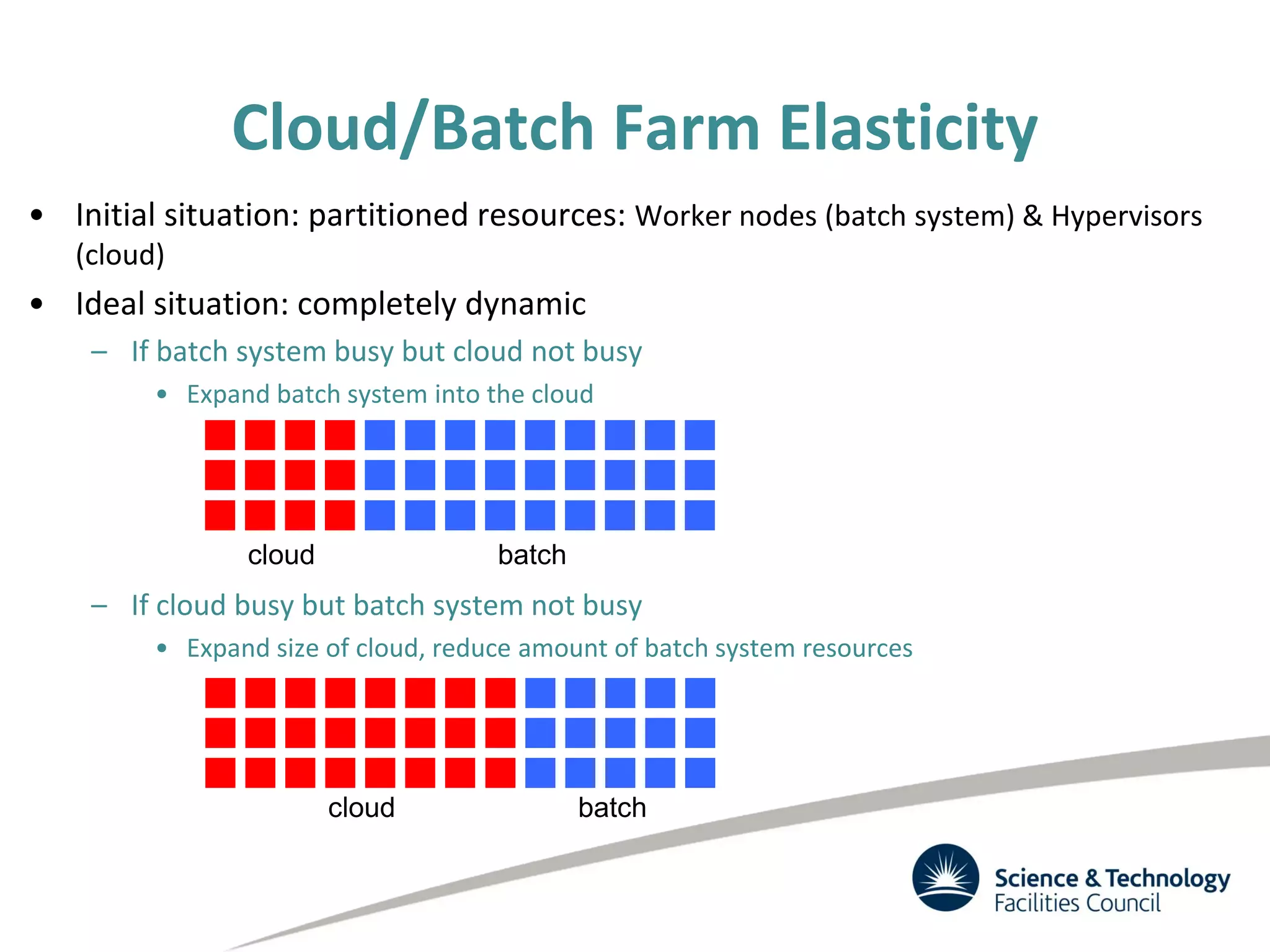

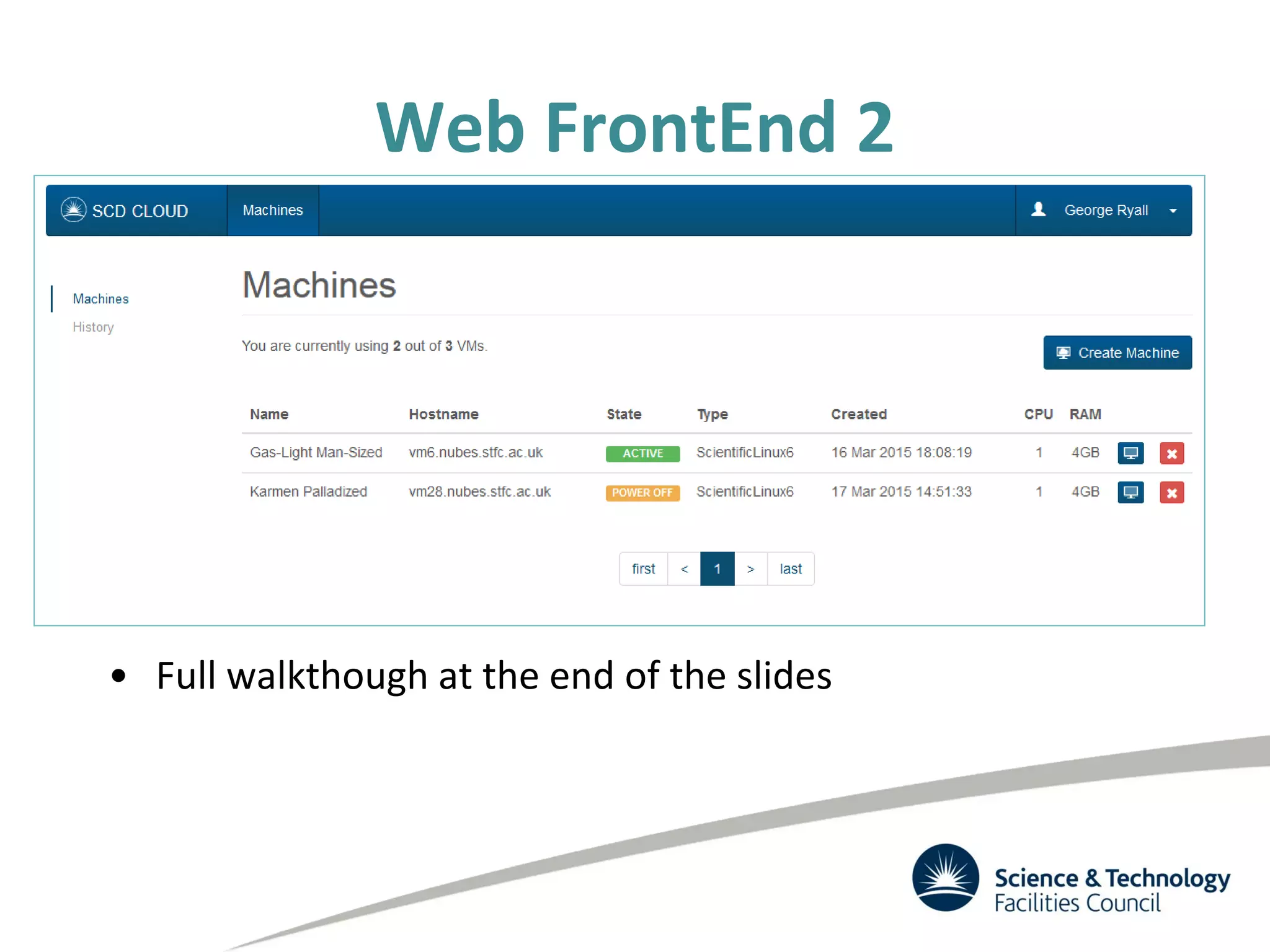

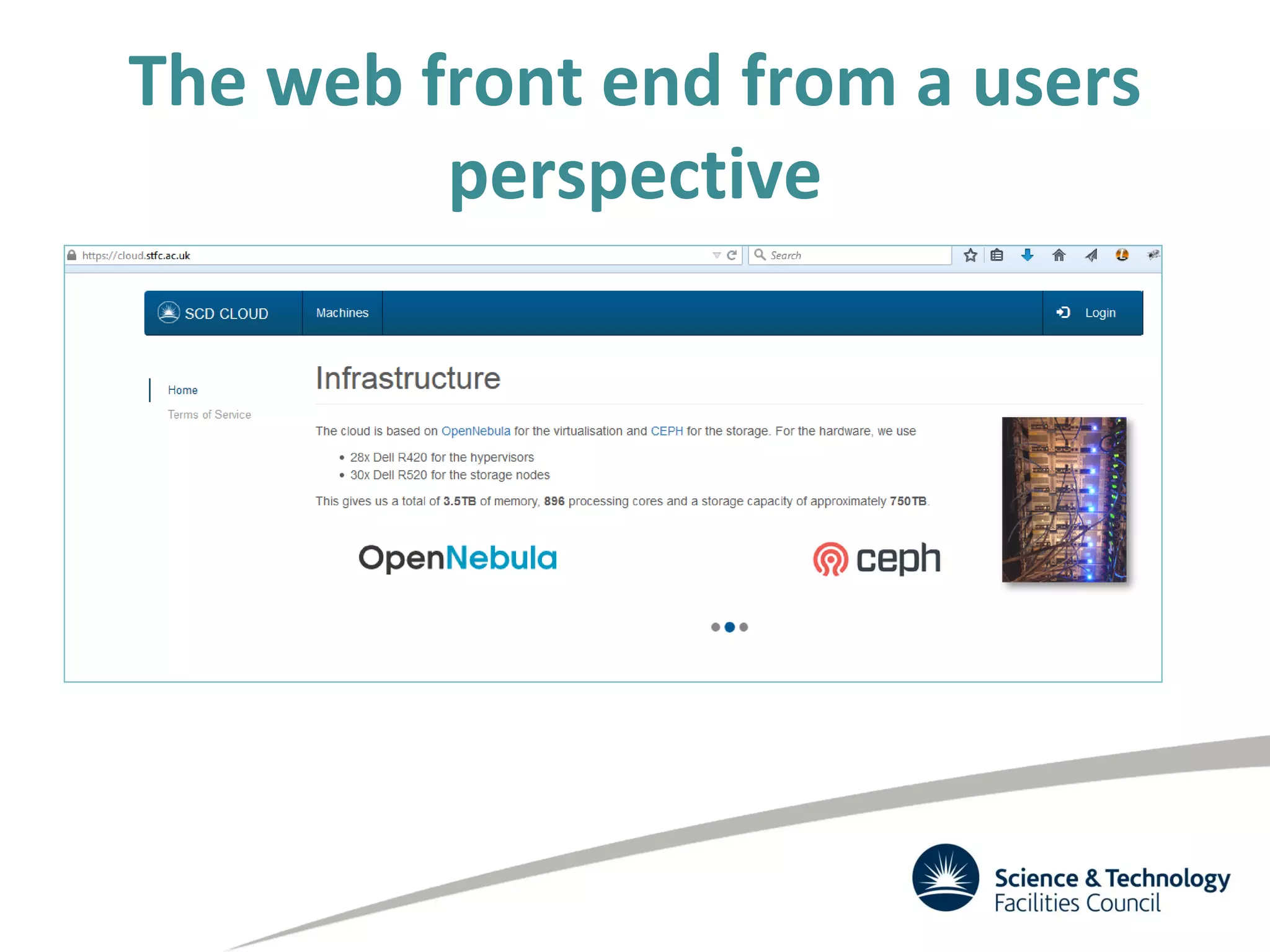

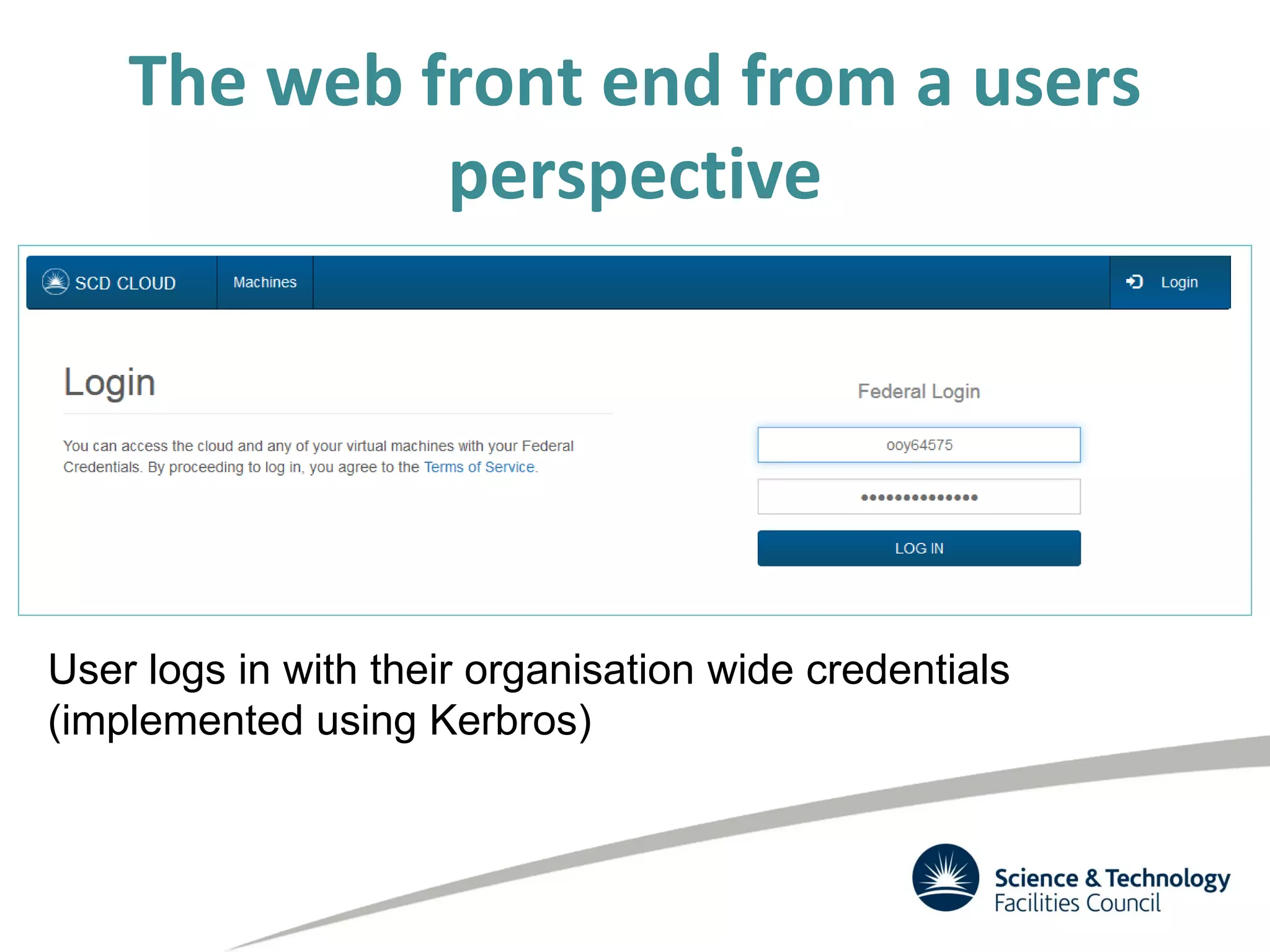

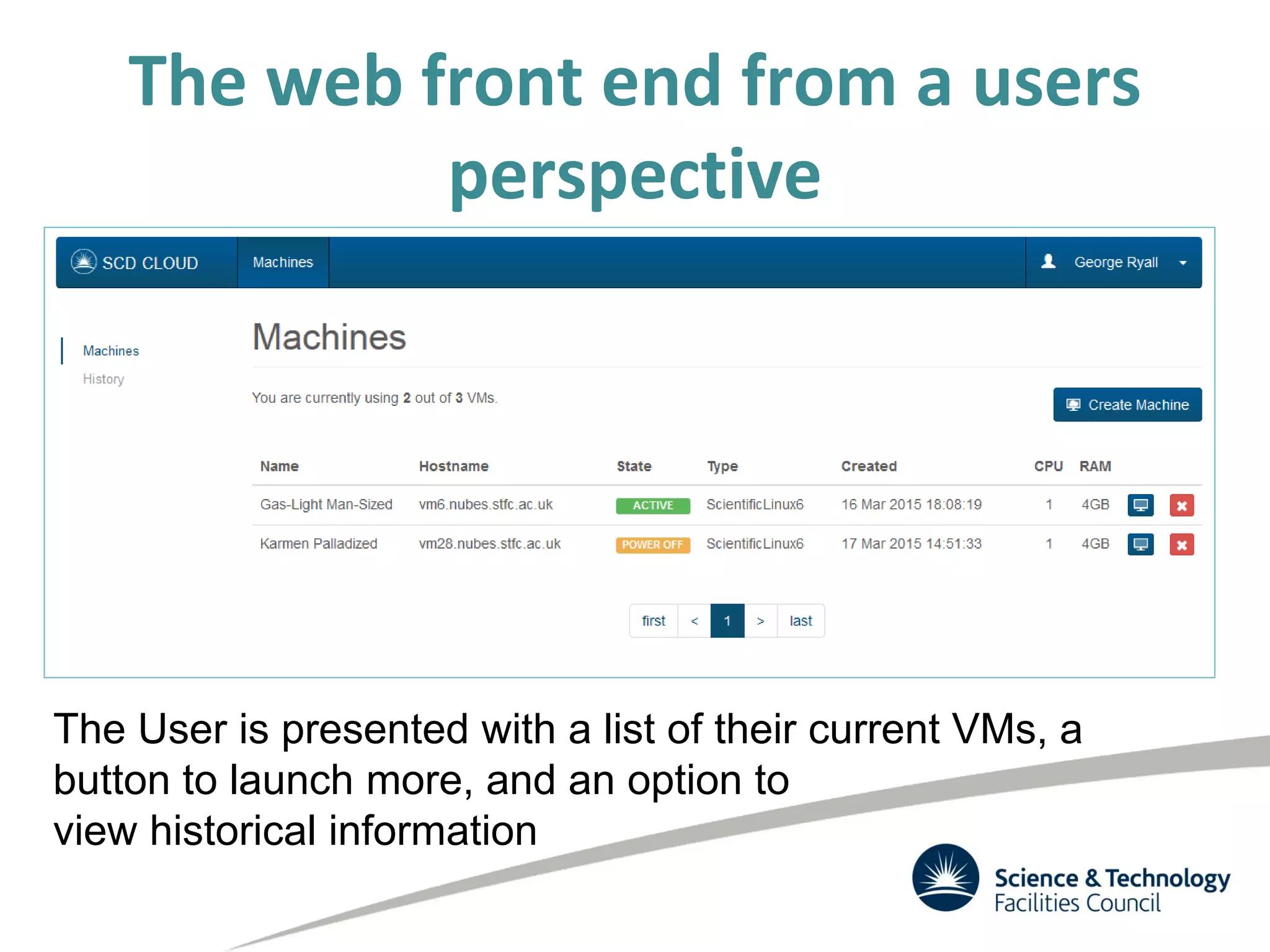

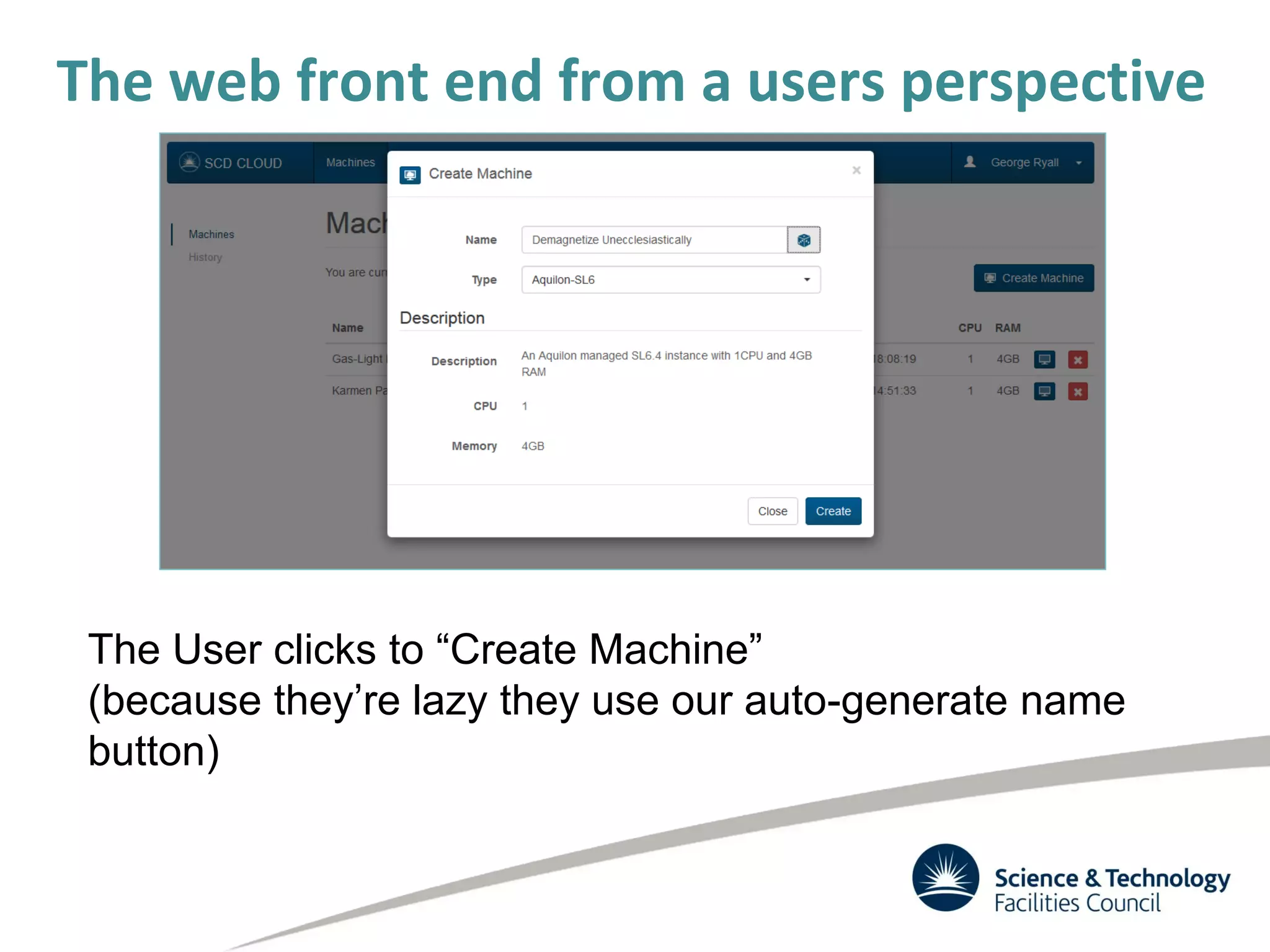

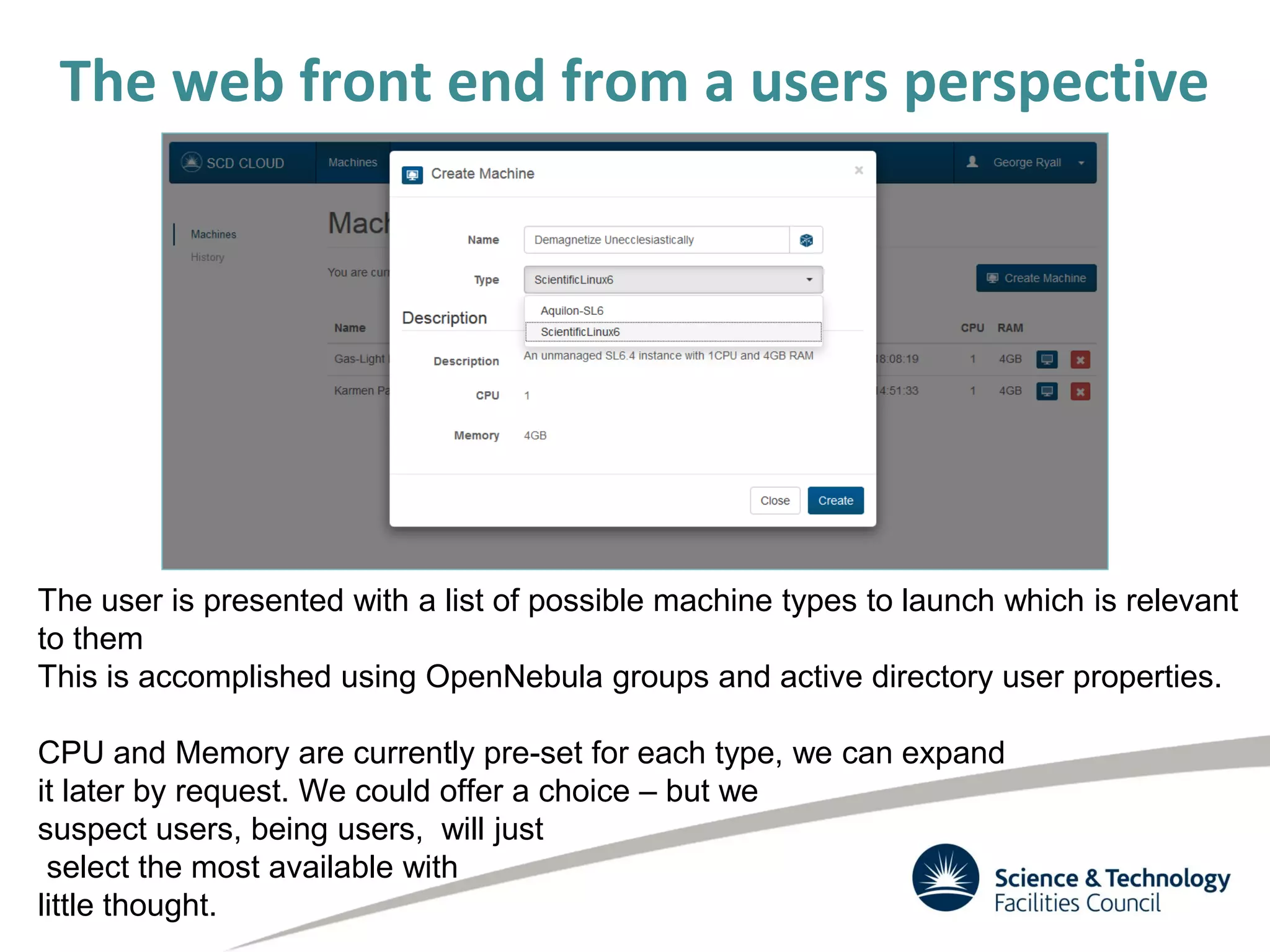

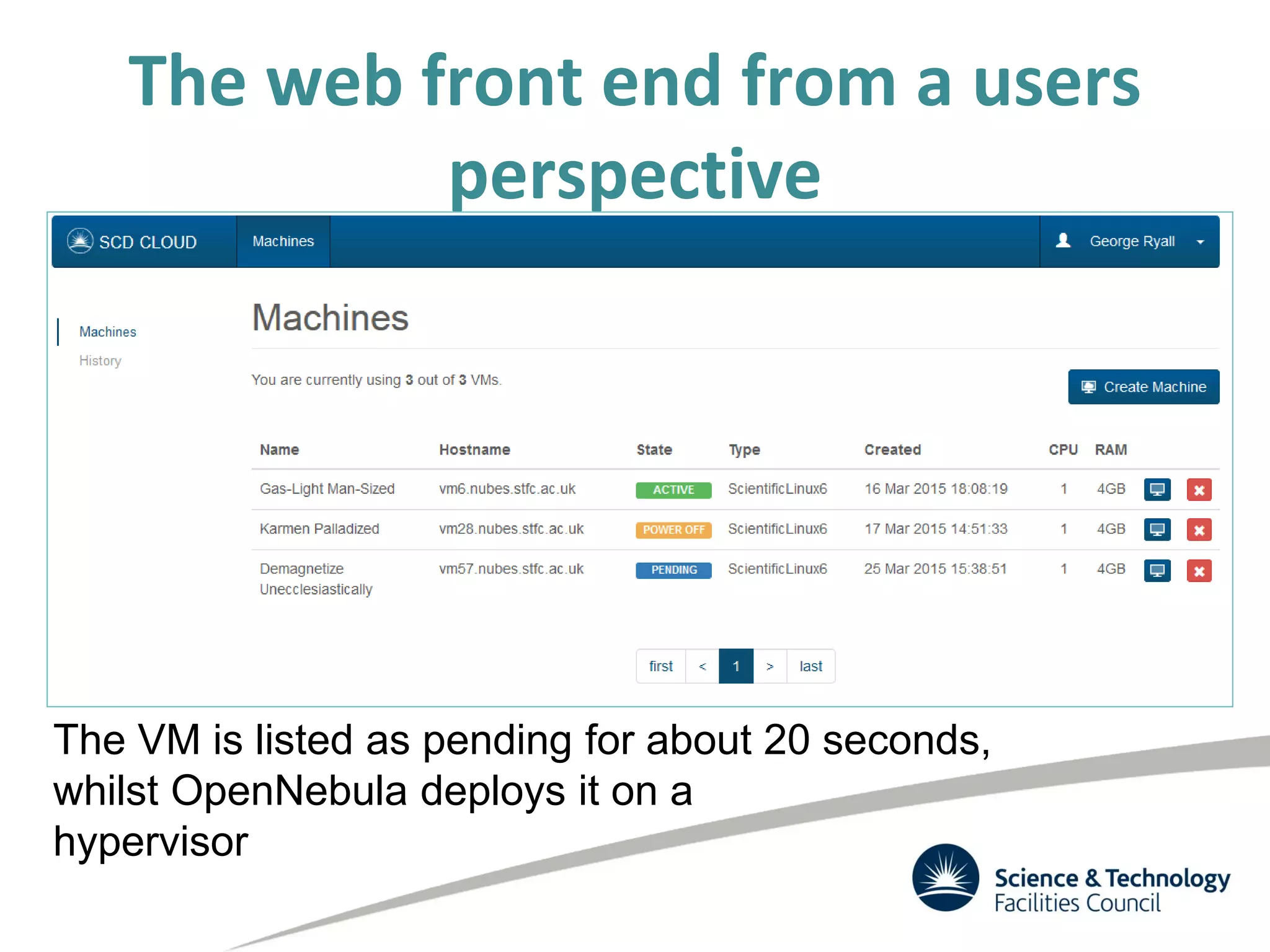

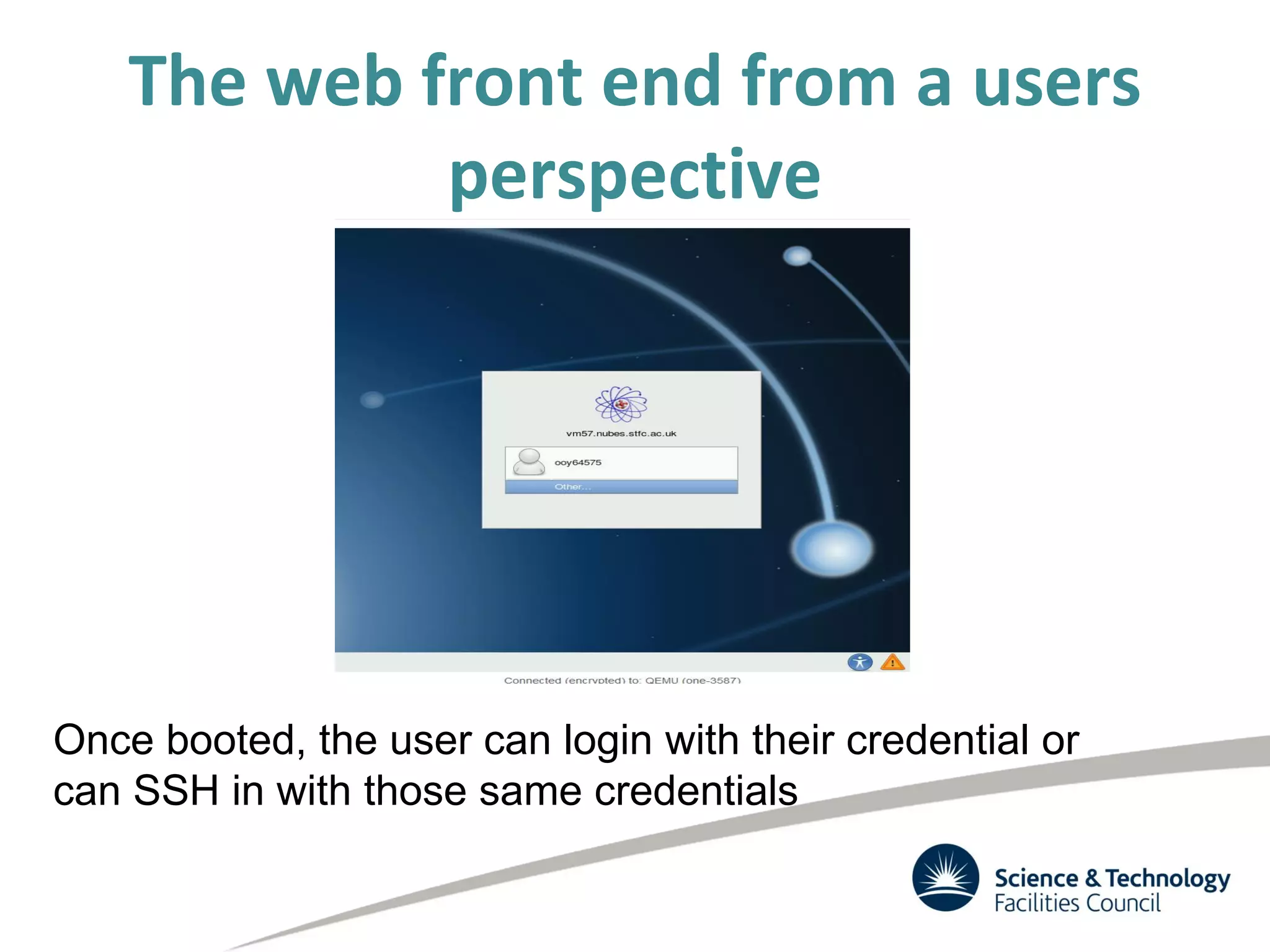

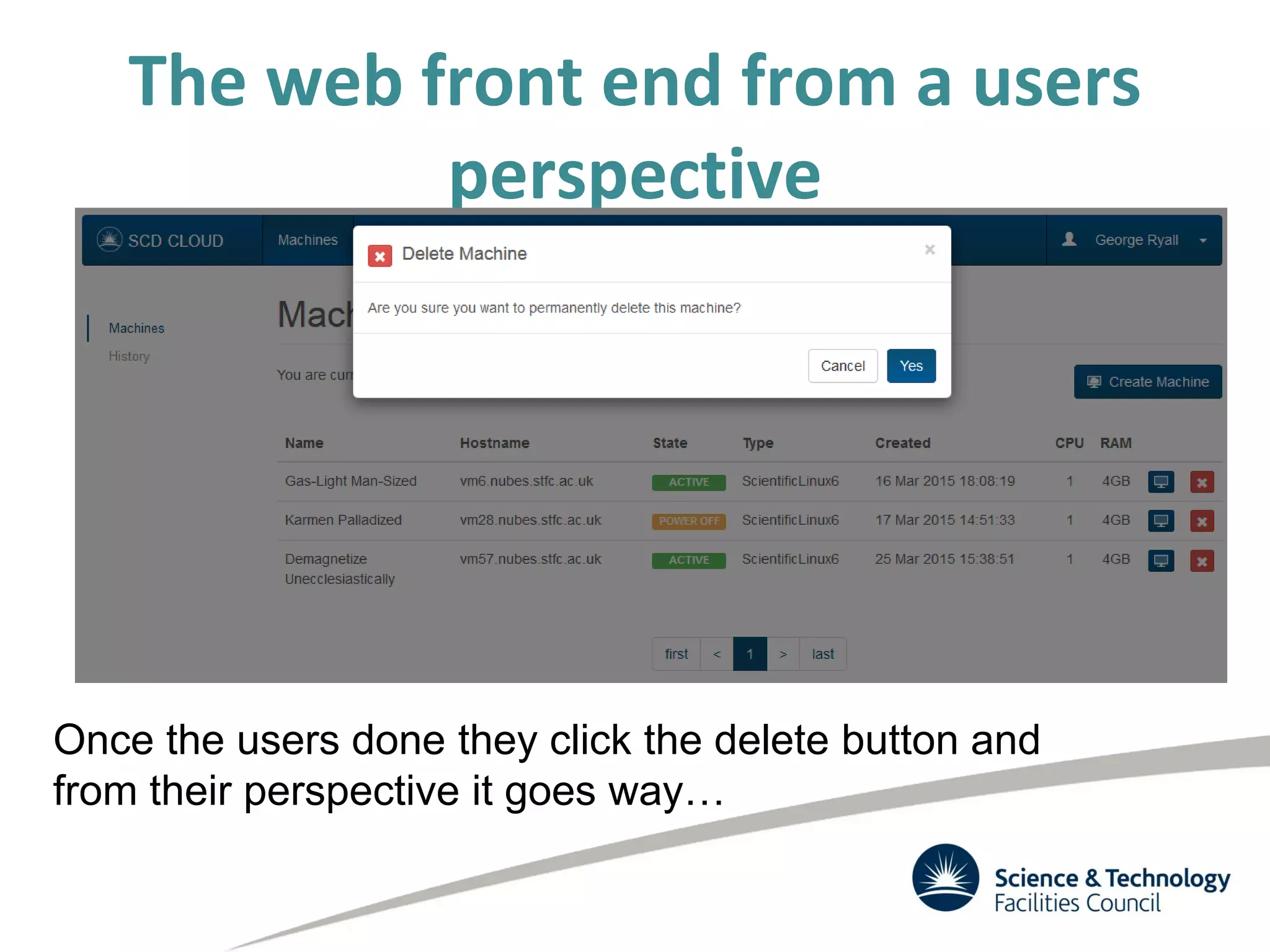

The document discusses the STFC's cloud computing capabilities for scientific research, outlining the background, use cases, and current projects. It highlights the integration of self-service virtual machines and cloud bursting with batch processing systems, along with the need for network isolation and for traceability of user activity. It also notes the development of a user-friendly web interface and future plans for system upgrades and feature enhancements.