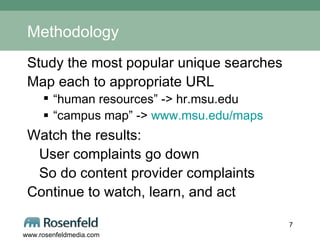

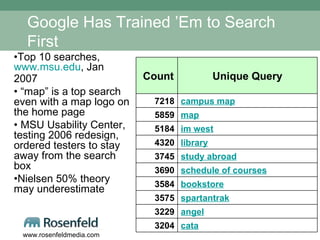

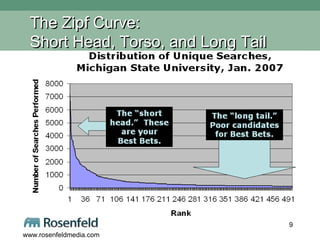

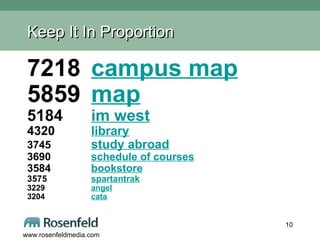

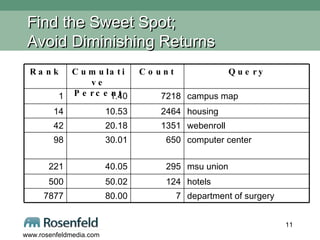

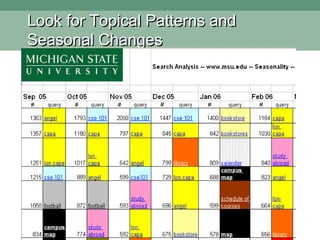

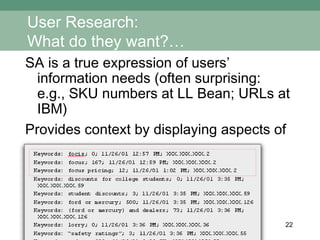

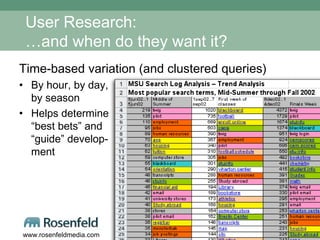

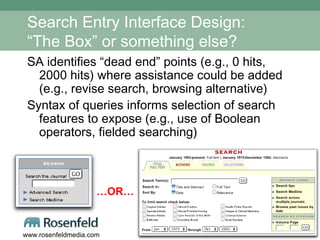

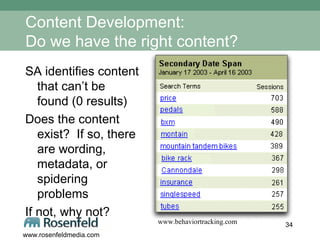

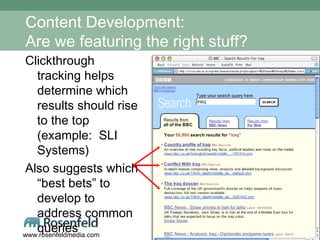

This presentation, "Using Search Analytics to Diagnose What's Ailing your Information Architecture," shows how analyzing a site's search logs can reveal what users are looking for and where the information architecture fails them. It emphasizes understanding user behavior and adapting search functionality accordingly to improve user satisfaction. The authors, Rich Wiggins and Lou Rosenfeld, advocate continuously monitoring and refining search systems so that they answer users' queries and make content easier to find.

![Anatomy of a Search Log (from Google Search Appliance). Critical elements in bold: IP address, time/date stamp, query, and # of results: XXX.XXX.X.104 - - [10/Jul/2006:10:25:46 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ud=1&site=AllSites&ie=UTF-8&client=www&oe=UTF-8&proxystylesheet=www&q=lincense+plate&ip=XXX.XXX.X.104 HTTP/1.1" 200 971 0 0.02 XXX.XXX.X.104 - - [10/Jul/2006:10:25:48 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ie=UTF-8&client=www&q=license+plate&ud=1&site=AllSites&spell=1&oe=UTF-8&proxystylesheet=www&ip=XXX.XXX.X.104 HTTP/1.1" 200 8283 146 0.16 XXX.XXX.XX.130 - - [10/Jul/2006:10:24:38 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ud=1&site=AllSites&ie=UTF-8&client=www&oe=UTF-8&proxystylesheet=www&q=regional+transportation+governance+commission&ip=XXX.XXX.X.130 HTTP/1.1" 200 9718 62 0.17. Full legend and more examples available from book site.](https://image.slidesharecdn.com/using-search-analytics-to-diagnose-whats-ailing-your-information-architecture-26813/85/Using-Search-Analytics-to-Diagnose-What-s-Ailing-your-Information-Architecture-16-320.jpg)
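The log lines above can be mined for the four critical elements with a short script. The sketch below is an illustration, not the authors' tooling: it assumes, based only on the sample lines, that the three numbers after the HTTP status code are response size in bytes, number of results, and response time in seconds (check your appliance's documentation before relying on this). The names `parse_line`, `zero_result_queries`, and `SAMPLE_LOG` are hypothetical.

```python
import re
from datetime import datetime
from urllib.parse import parse_qs, urlsplit

# ASSUMED line layout, inferred from the sample log above:
# IP - - [timestamp] "GET <url> HTTP/x.y" status bytes results seconds
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) - - \[(?P<ts>[^\]]+)\] '
    r'"GET (?P<url>\S+) HTTP/[\d.]+" '
    r'(?P<status>\d{3}) (?P<bytes>\d+) (?P<results>\d+) (?P<seconds>[\d.]+)'
)


def parse_line(line):
    """Return (ip, timestamp, query, num_results), or None if the line doesn't match."""
    m = LOG_LINE.match(line.strip())
    if m is None:
        return None
    ts = datetime.strptime(m["ts"], "%d/%b/%Y:%H:%M:%S %z")
    params = parse_qs(urlsplit(m["url"]).query)  # decodes '+' to spaces
    query = params.get("q", [""])[0]
    return m["ip"], ts, query, int(m["results"])


def zero_result_queries(lines):
    """Collect queries that returned no results, a list worth reviewing by hand."""
    zero = []
    for line in lines:
        rec = parse_line(line)
        if rec is not None and rec[3] == 0:
            zero.append(rec[2])
    return zero


# The three sample lines from the slide (IP addresses masked as in the original).
SAMPLE_LOG = [
    'XXX.XXX.X.104 - - [10/Jul/2006:10:25:46 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ud=1&site=AllSites&ie=UTF-8&client=www&oe=UTF-8&proxystylesheet=www&q=lincense+plate&ip=XXX.XXX.X.104 HTTP/1.1" 200 971 0 0.02',
    'XXX.XXX.X.104 - - [10/Jul/2006:10:25:48 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ie=UTF-8&client=www&q=license+plate&ud=1&site=AllSites&spell=1&oe=UTF-8&proxystylesheet=www&ip=XXX.XXX.X.104 HTTP/1.1" 200 8283 146 0.16',
    'XXX.XXX.XX.130 - - [10/Jul/2006:10:24:38 -0800] "GET /search?access=p&entqr=0&output=xml_no_dtd&sort=date%3AD%3AL%3Ad1&ud=1&site=AllSites&ie=UTF-8&client=www&oe=UTF-8&proxystylesheet=www&q=regional+transportation+governance+commission&ip=XXX.XXX.X.130 HTTP/1.1" 200 9718 62 0.17',
]

if __name__ == "__main__":
    print(zero_result_queries(SAMPLE_LOG))  # prints ['lincense plate']
```

Under the field assumption above, the sample already tells a small story: the misspelled "lincense plate" returns nothing, and two seconds later the same IP address retries as "license plate" (with `spell=1`) and gets 146 results.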

![Contact Information: Rich Wiggins, [email_address]; Louis Rosenfeld, [email_address]; http://rosenfeldmedia.com/books/searchanalytics](https://image.slidesharecdn.com/using-search-analytics-to-diagnose-whats-ailing-your-information-architecture-26813/85/Using-Search-Analytics-to-Diagnose-What-s-Ailing-your-Information-Architecture-41-320.jpg)