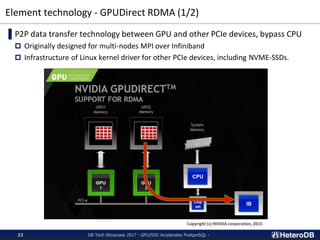

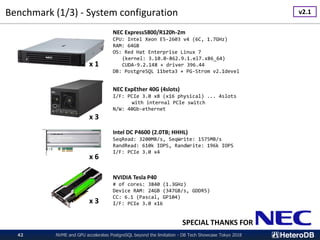

The document discusses how NVMe and GPU technology can enhance PostgreSQL's query execution performance, achieving throughput rates of up to 13.5 GB/s with a single-node setup. It details the architecture of PG-Strom, a PostgreSQL extension that utilizes GPUs for accelerating SQL workloads, as well as the infrastructure involving hardware components like NVIDIA Tesla GPUs and NVMe SSDs. The presentation was given by Kohei Kaigai, CEO of HeteroDB, and highlights the effectiveness of using GPUs for data analytics and machine learning in a database context.

![Here are mysterious benchmark results

NVME and GPU accelerates PostgreSQL beyond the limitation - DB Tech Showcase Tokyo 20182

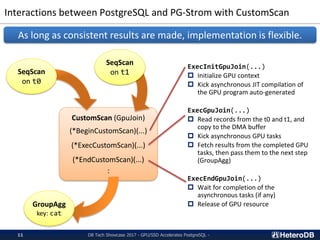

Benchmark conditions:

By the PostgreSQL v11beta3 + PG-Strom v2.1devel on a single-node server system

13 queries of Star-schema benchmark onto the 1055GB data set

0

2,000

4,000

6,000

8,000

10,000

12,000

14,000

Q1_1 Q1_2 Q1_3 Q2_1 Q2_2 Q2_3 Q3_1 Q3_2 Q3_3 Q3_4 Q4_1 Q4_2 Q4_3

QueryExecutionThroughput[MB/s]

Star Schema Benchmark for PostgreSQL 11beta3 + PG-Strom v2.1devel

PG-Strom v2.1devel

max 13.5GB/s in query execution throughput on single-node PostgreSQL](https://image.slidesharecdn.com/20180920dbtsbeyondpostgresqlen-180918041958/85/20180920_DBTS_PGStrom_EN-2-320.jpg)

![Characteristics of GPU (2/3) – Reduction algorithm

●item[0]

step.1 step.2 step.4step.3

Calculation of the total

sum of an array by GPU

Σi=0...N-1item[i]

◆

●

▲ ■ ★

● ◆

●

● ◆ ▲

●

● ◆

●

● ◆ ▲ ■

●

● ◆

●

● ◆ ▲

●

● ◆

●

item[1]

item[2]

item[3]

item[4]

item[5]

item[6]

item[7]

item[8]

item[9]

item[10]

item[11]

item[12]

item[13]

item[14]

item[15]

Total sum of items[]

with log2N steps

Inter-cores synchronization with hardware support

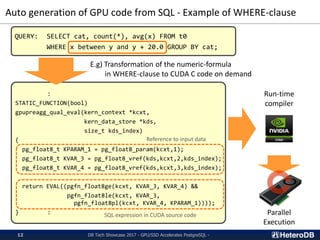

SELECT count(X),

sum(Y),

avg(Z)

FROM my_table;

Same logic is internally used to

implement aggregate function.

DB Tech Showcase 2017 - GPU/SSD Accelerates PostgreSQL -6](https://image.slidesharecdn.com/20180920dbtsbeyondpostgresqlen-180918041958/85/20180920_DBTS_PGStrom_EN-6-320.jpg)

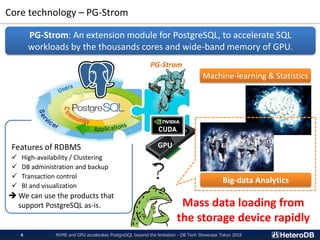

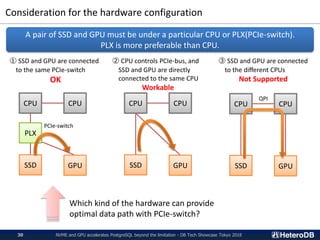

![Benchmark Results – single-node version

NVME and GPU accelerates PostgreSQL beyond the limitation - DB Tech Showcase Tokyo 201821

2172.3 2159.6 2158.9 2086.0 2127.2 2104.3

1920.3

2023.4 2101.1 2126.9

1900.0 1960.3

2072.1

6149.4 6279.3 6282.5

5985.6 6055.3 6152.5

5479.3

6051.2 6061.5 6074.2

5813.7 5871.8 5800.1

0

1000

2000

3000

4000

5000

6000

7000

Q1_1 Q1_2 Q1_3 Q2_1 Q2_2 Q2_3 Q3_1 Q3_2 Q3_3 Q3_4 Q4_1 Q4_2 Q4_3

QueryProcessingThroughput[MB/sec]

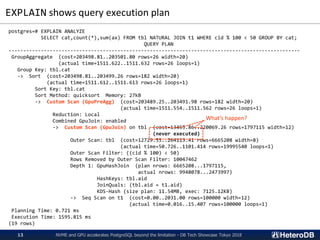

Star Schema Benchmark on NVMe-SSD + md-raid0

PgSQL9.6(SSDx3) PGStrom2.0(SSDx3) H/W Spec (3xSSD)

SSD-to-GPU Direct SQL pulls out an awesome performance close to the H/W spec

Measurement by the Star Schema Benchmark; which is a set of typical batch / reporting workloads.

CPU: Intel Xeon E5-2650v4, RAM: 128GB, GPU: NVIDIA Tesla P40, SSD: Intel 750 (400GB; SeqRead 2.2GB/s)x3

Size of dataset is 353GB (sf: 401), to ensure I/O bounds workload](https://image.slidesharecdn.com/20180920dbtsbeyondpostgresqlen-180918041958/85/20180920_DBTS_PGStrom_EN-21-320.jpg)

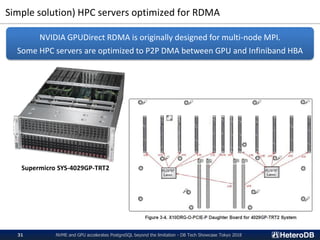

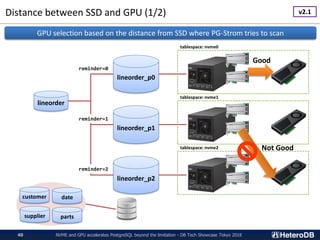

![Distance between SSD and GPU (2/2)

NVME and GPU accelerates PostgreSQL beyond the limitation - DB Tech Showcase Tokyo 201841

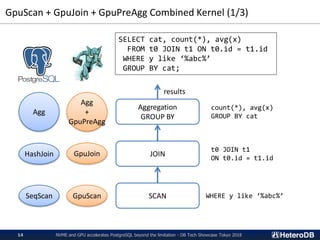

$ pg_ctl restart

:

LOG: - PCIe[0000:80]

LOG: - PCIe(0000:80:02.0)

LOG: - PCIe(0000:83:00.0)

LOG: - PCIe(0000:84:00.0)

LOG: - PCIe(0000:85:00.0) nvme0 (INTEL SSDPEDKE020T7)

LOG: - PCIe(0000:84:01.0)

LOG: - PCIe(0000:86:00.0) GPU0 (Tesla P40)

LOG: - PCIe(0000:84:02.0)

LOG: - PCIe(0000:87:00.0) nvme1 (INTEL SSDPEDKE020T7)

LOG: - PCIe(0000:80:03.0)

LOG: - PCIe(0000:c0:00.0)

LOG: - PCIe(0000:c1:00.0)

LOG: - PCIe(0000:c2:00.0) nvme2 (INTEL SSDPEDKE020T7)

LOG: - PCIe(0000:c1:01.0)

LOG: - PCIe(0000:c3:00.0) GPU1 (Tesla P40)

LOG: - PCIe(0000:c1:02.0)

LOG: - PCIe(0000:c4:00.0) nvme3 (INTEL SSDPEDKE020T7)

LOG: - PCIe(0000:80:03.2)

LOG: - PCIe(0000:e0:00.0)

LOG: - PCIe(0000:e1:00.0)

LOG: - PCIe(0000:e2:00.0) nvme4 (INTEL SSDPEDKE020T7)

LOG: - PCIe(0000:e1:01.0)

LOG: - PCIe(0000:e3:00.0) GPU2 (Tesla P40)

LOG: - PCIe(0000:e1:02.0)

LOG: - PCIe(0000:e4:00.0) nvme5 (INTEL SSDPEDKE020T7)

LOG: GPU<->SSD Distance Matrix

LOG: GPU0 GPU1 GPU2

LOG: nvme0 ( 3) 7 7

LOG: nvme5 7 7 ( 3)

LOG: nvme4 7 7 ( 3)

LOG: nvme2 7 ( 3) 7

LOG: nvme1 ( 3) 7 7

LOG: nvme3 7 ( 3) 7

Auto selection of the optimal GPU according to

the distance between PCIe devices

v2.1](https://image.slidesharecdn.com/20180920dbtsbeyondpostgresqlen-180918041958/85/20180920_DBTS_PGStrom_EN-41-320.jpg)

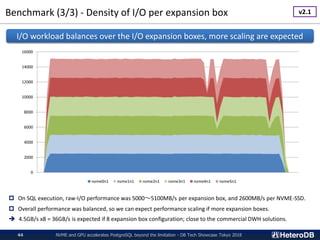

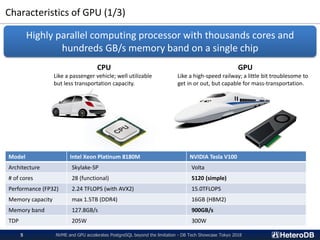

![Benchmark (2/3) - Result of query execution performance

NVME and GPU accelerates PostgreSQL beyond the limitation - DB Tech Showcase Tokyo 201843

13 SSBM queries to 1055GB database in total (a.k.a 351GB per I/O expansion box)

Raw I/O data transfer without SQL execution was up to 9GB/s.

In other words, SQL execution was faster than simple storage read with raw-I/O.

13,401 13,534 13,536 13,330

12,696

12,965

12,533

11,498

12,312 12,419 12,414 12,622 12,594

2,388 2,477 2,493 2,502 2,739 2,831

1,865

2,268 2,442 2,418

1,789 1,848

2,202

0

2,000

4,000

6,000

8,000

10,000

12,000

14,000

Q1_1 Q1_2 Q1_3 Q2_1 Q2_2 Q2_3 Q3_1 Q3_2 Q3_3 Q3_4 Q4_1 Q4_2 Q4_3

QueryExecutionThroughput[MB/s]

Star Schema Benchmark for PgSQL v11beta3 / PG-Strom v2.1devel on NEC ExpEther x3

PostgreSQL v11beta3 PG-Strom v2.1devel Raw I/O Limitation

max 13.5GB/s for query execution performance with 3x I/O expansion boxes!!

v2.1](https://image.slidesharecdn.com/20180920dbtsbeyondpostgresqlen-180918041958/85/20180920_DBTS_PGStrom_EN-43-320.jpg)