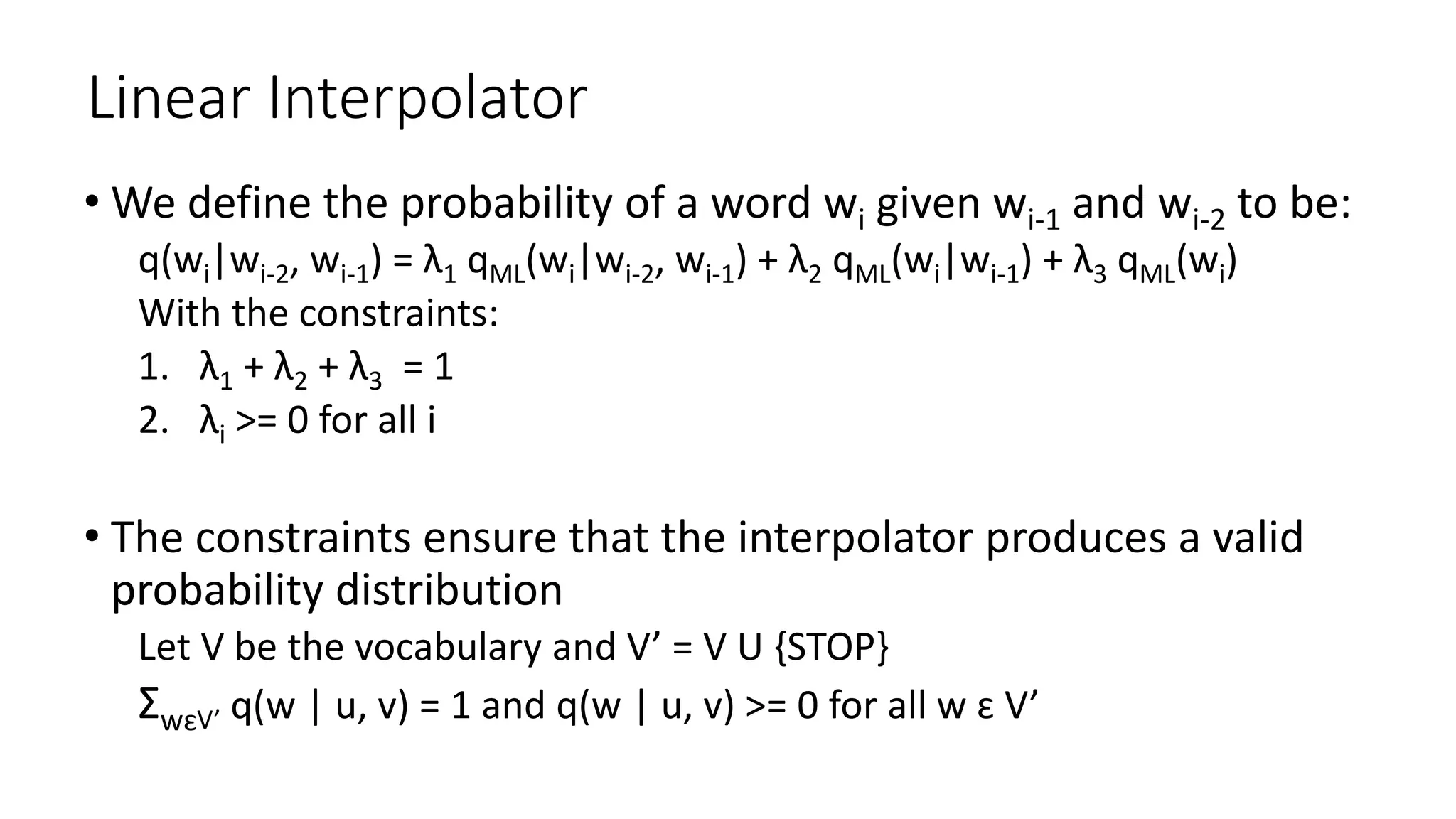

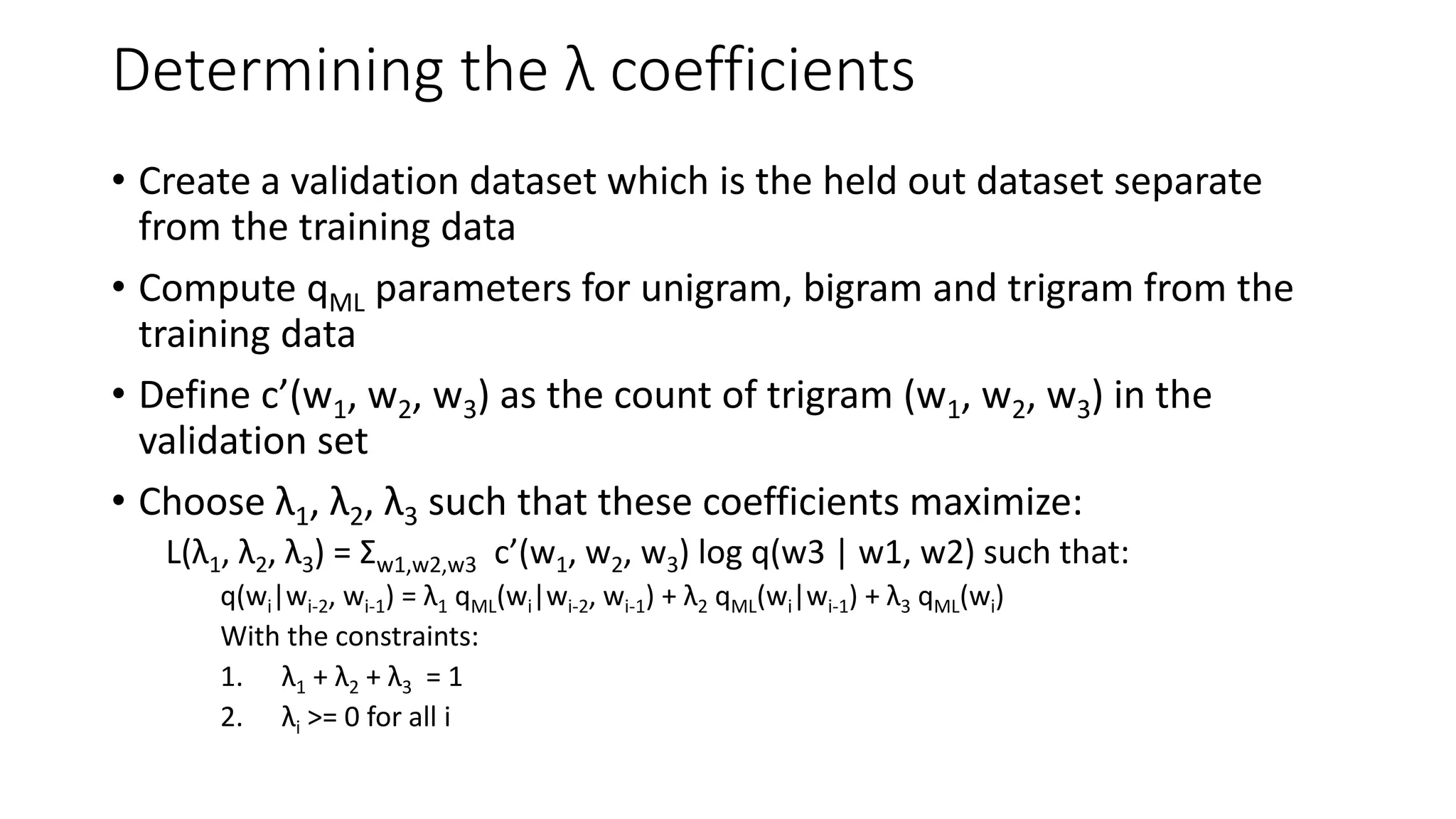

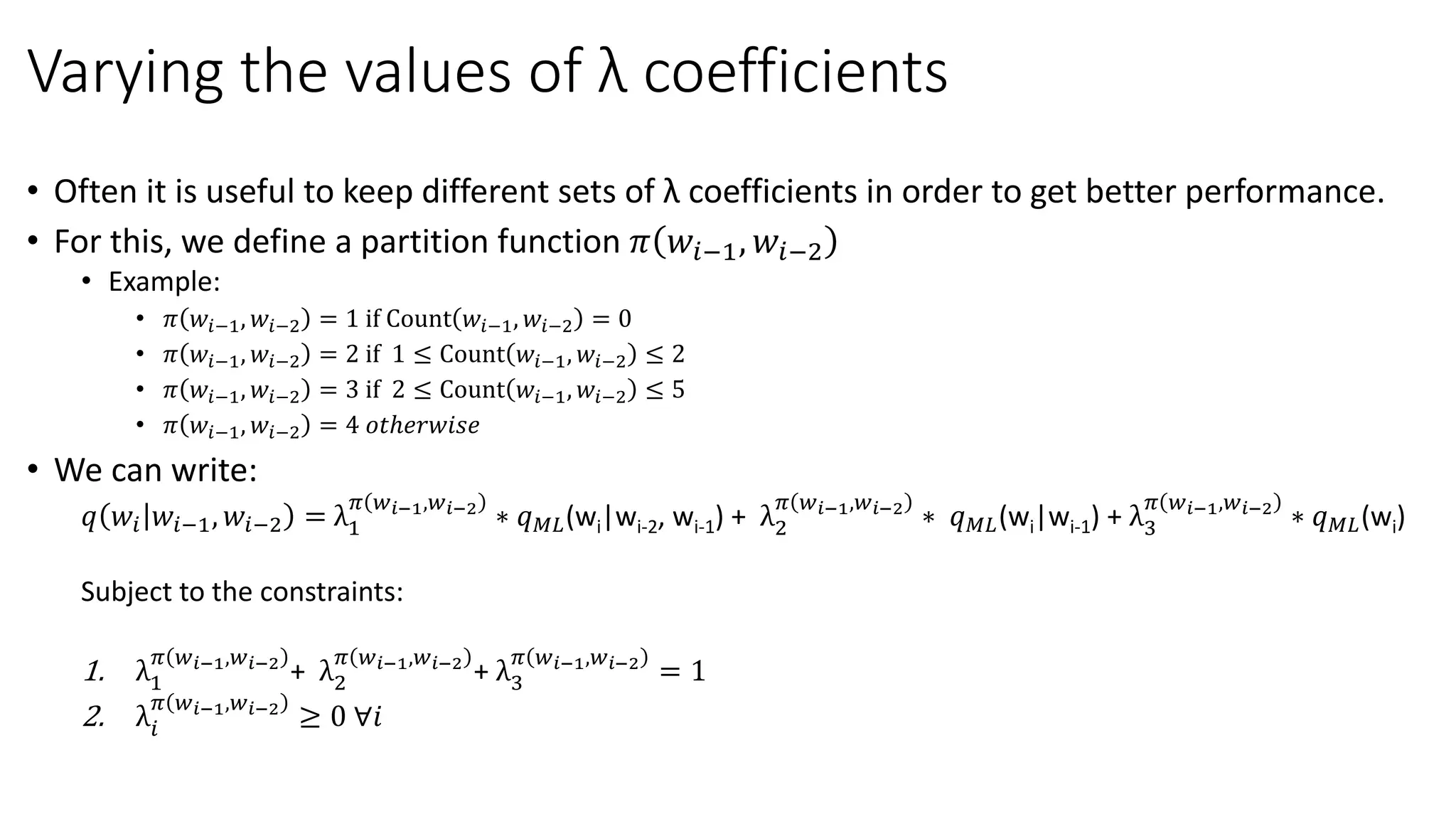

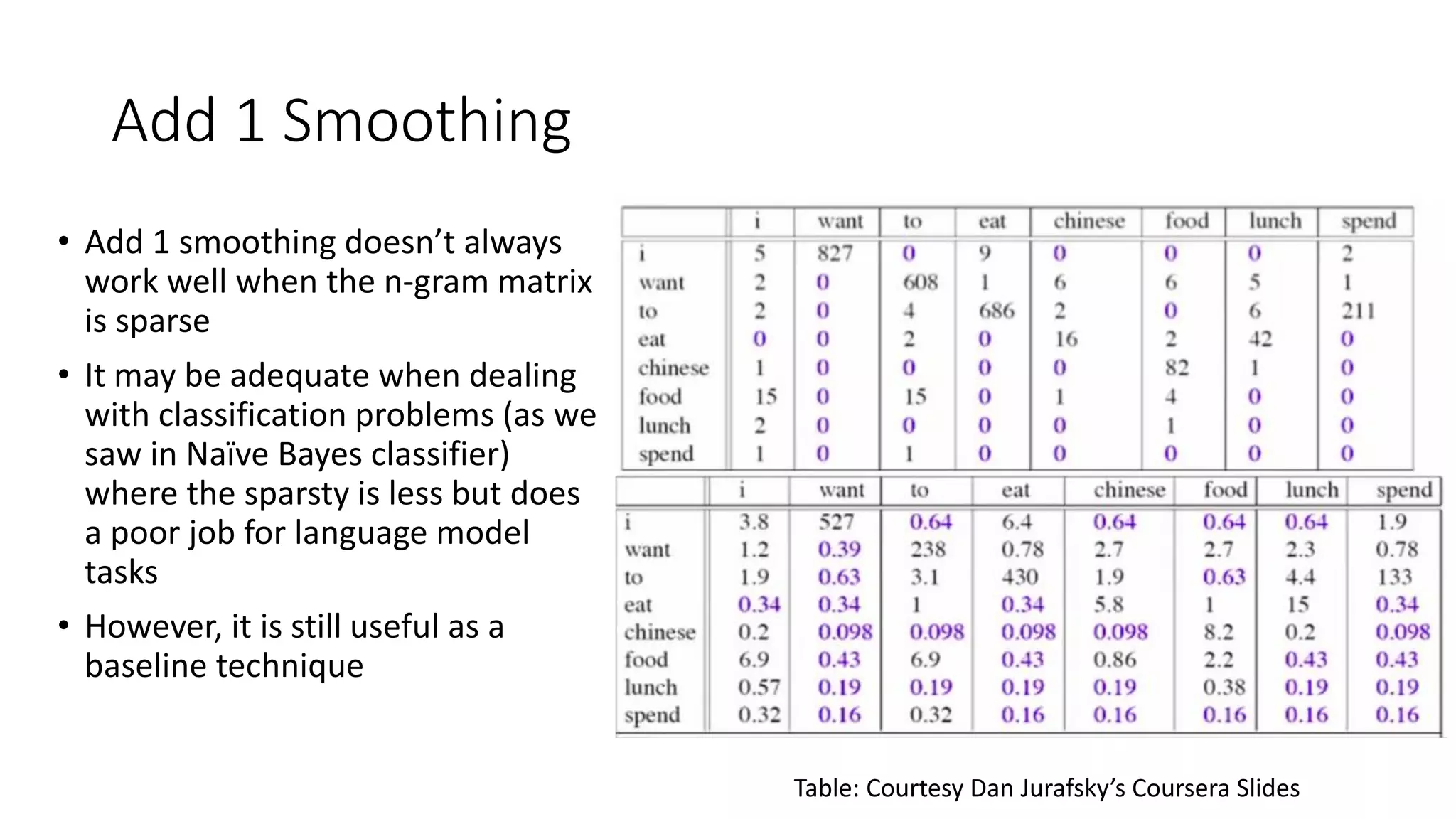

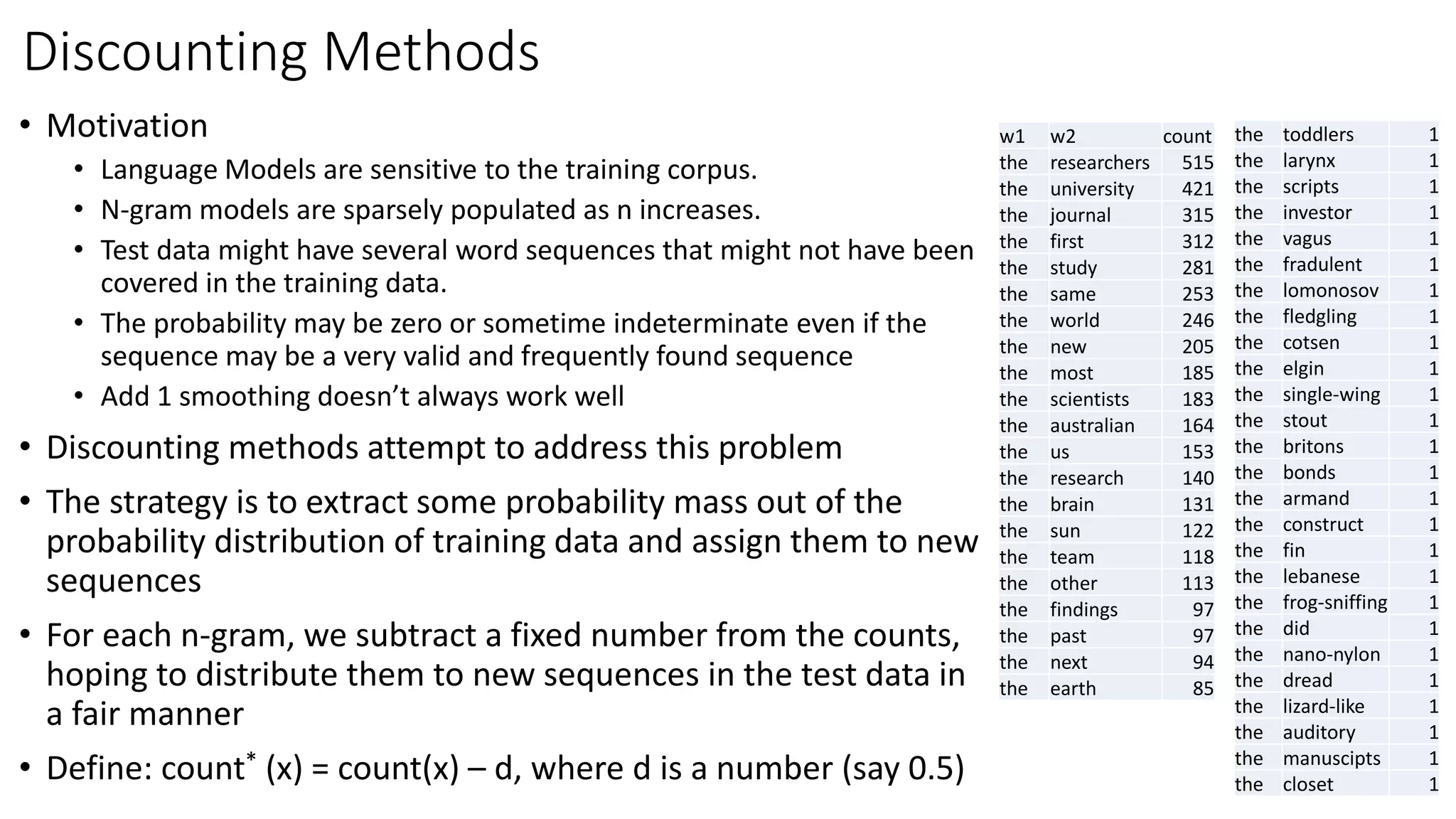

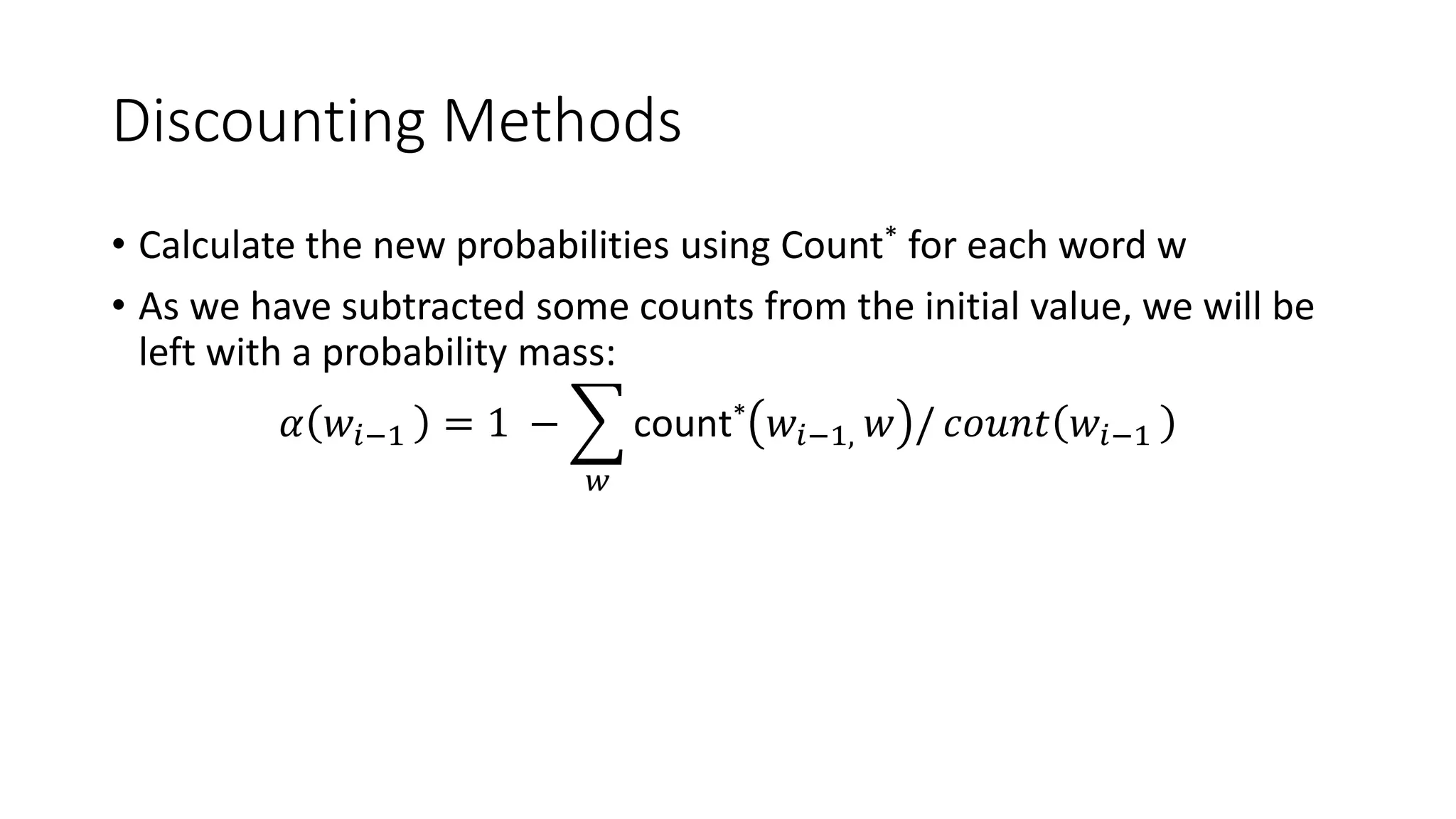

The document discusses language models in natural language processing, focusing on n-gram models such as unigram, bigram, and trigram, along with their parameter estimation techniques, smoothing methods, and performance metrics like perplexity. It highlights the importance of choosing the right model complexity and addresses challenges such as sparse data, along with solutions like back off and interpolation techniques. Additionally, it presents mathematical formulations for estimating probabilities and adjusting coefficients to improve model performance.