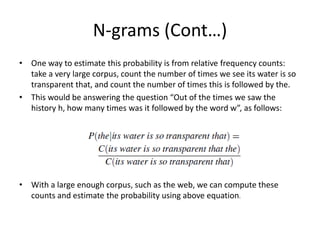

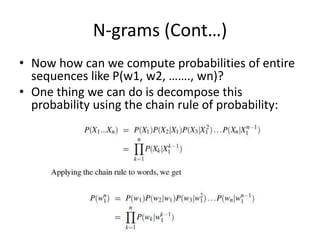

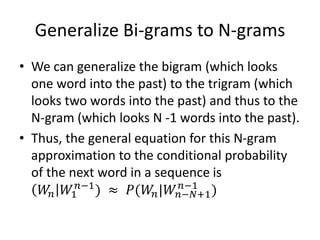

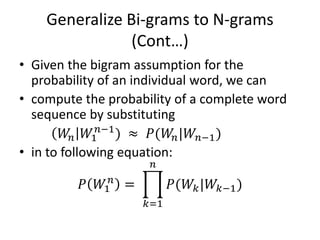

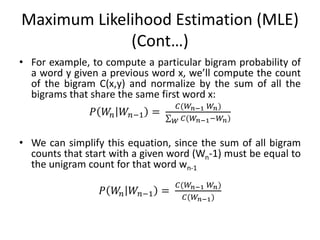

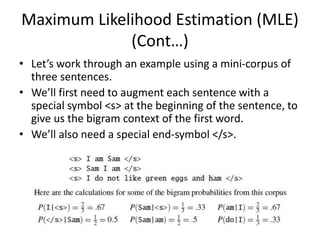

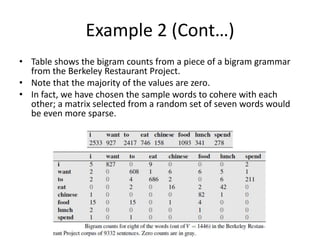

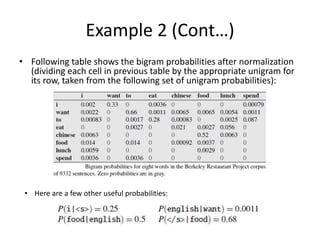

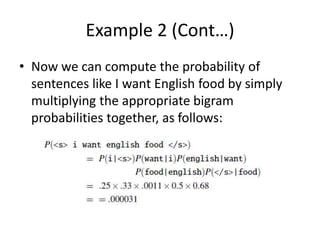

The document discusses N-gram language models, which assign probabilities to sequences of words. An N-gram is a sequence of N words, such as a bigram (two words) or a trigram (three words). An N-gram model approximates the probability of a word given its entire history by the probability of that word given only the previous N-1 words. This is called the Markov assumption. N-gram probabilities are estimated by maximum likelihood estimation: the count of each N-gram in a corpus is divided by the count of its (N-1)-word prefix.
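
As a rough illustration of the estimation step described above, here is a minimal sketch of a bigram model (N = 2) trained by maximum likelihood. The toy corpus, the `<s>` and `</s>` boundary markers, and the names `train_bigram_mle` and `sentence_prob` are assumptions made for this example and do not come from the slides. It applies no smoothing, so any unseen bigram gets probability zero.

```python
from collections import Counter


def train_bigram_mle(sentences):
    """Return prob(word, prev), the MLE estimate of P(word | prev)."""
    history_counts = Counter()  # how often each token occurs as a history
    bigram_counts = Counter()   # how often each (prev, word) pair occurs
    for sentence in sentences:
        # Hypothetical boundary markers so the first and last words have context.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        history_counts.update(tokens[:-1])  # the final </s> is never a history
        bigram_counts.update(zip(tokens, tokens[1:]))

    def prob(word, prev):
        if history_counts[prev] == 0:
            return 0.0  # unseen history: no estimate without smoothing
        return bigram_counts[(prev, word)] / history_counts[prev]

    return prob


def sentence_prob(prob, sentence):
    """Multiply bigram probabilities along the sentence (Markov assumption)."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    result = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        result *= prob(word, prev)
    return result


# Illustrative toy corpus (an assumption, not data from the slides).
corpus = ["I am Sam", "Sam I am", "I do not like green eggs and ham"]
bigram_prob = train_bigram_mle(corpus)

p_am_given_i = bigram_prob("am", "i")                    # count(i am) / count(i)
p_sentence = sentence_prob(bigram_prob, "I am Sam")
```

In this toy corpus "i" occurs three times as a history and is followed by "am" twice, so `p_am_given_i` works out to 2/3. Real systems typically add smoothing and work with log probabilities to avoid underflow on long sentences.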