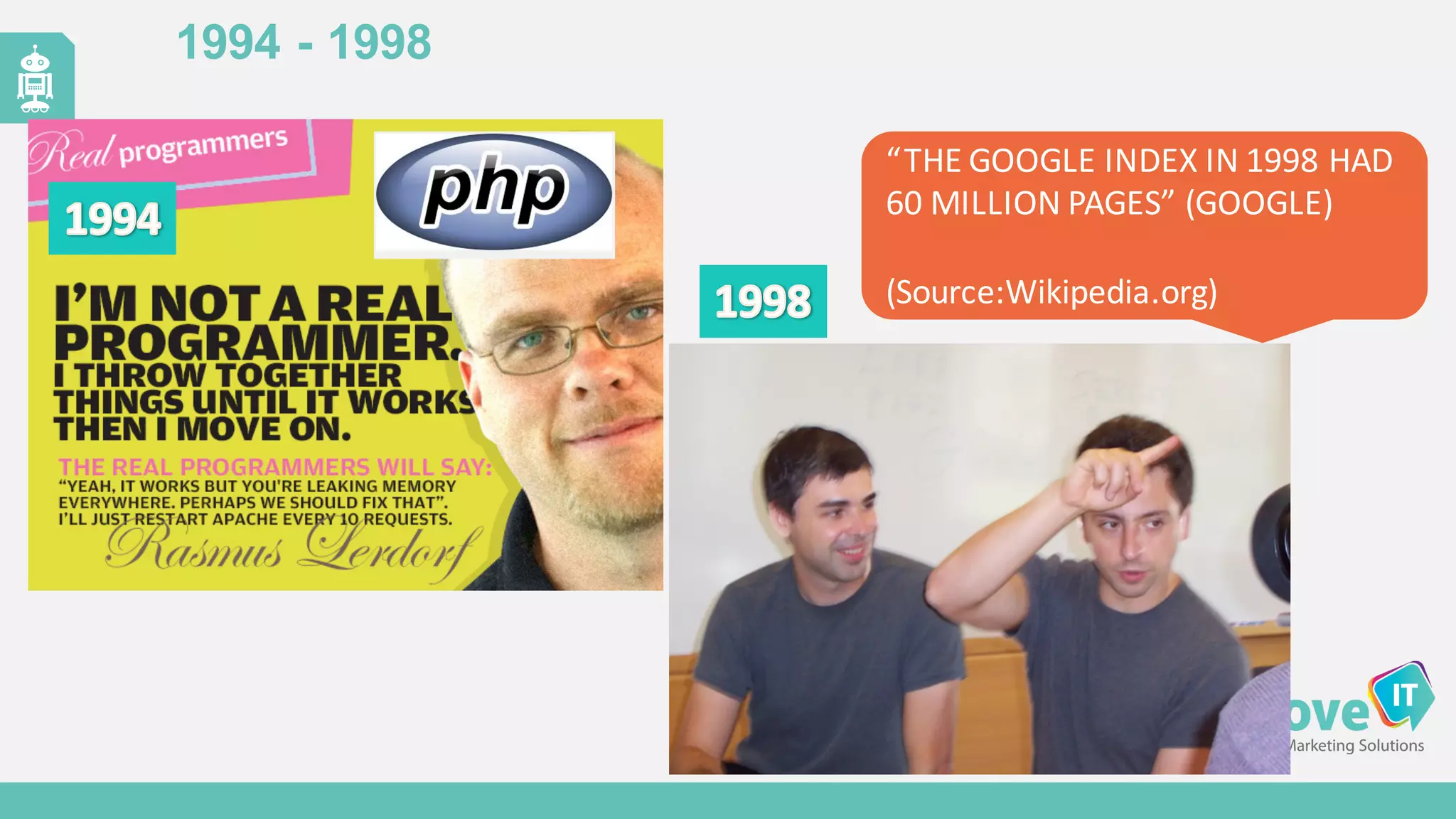

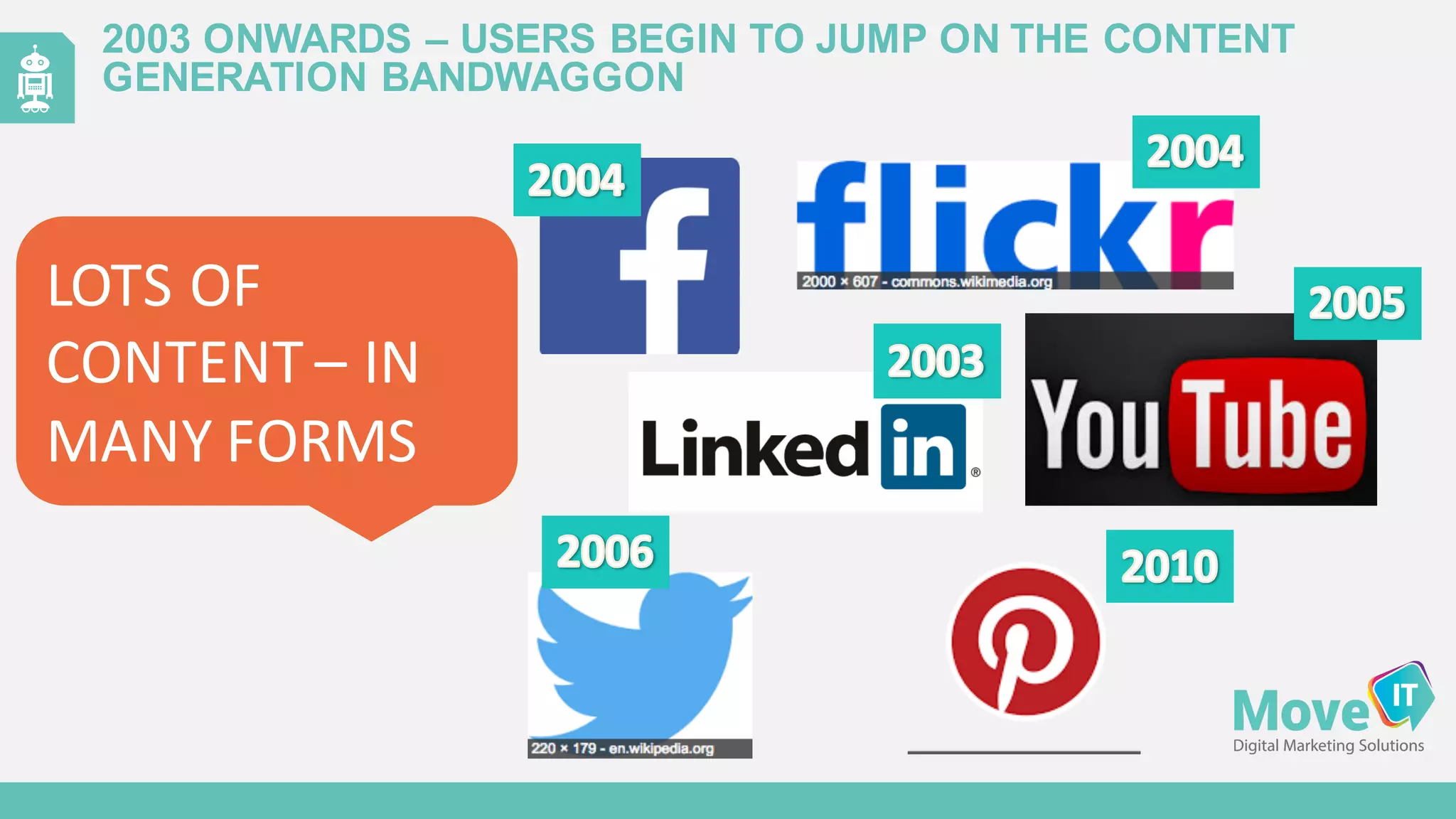

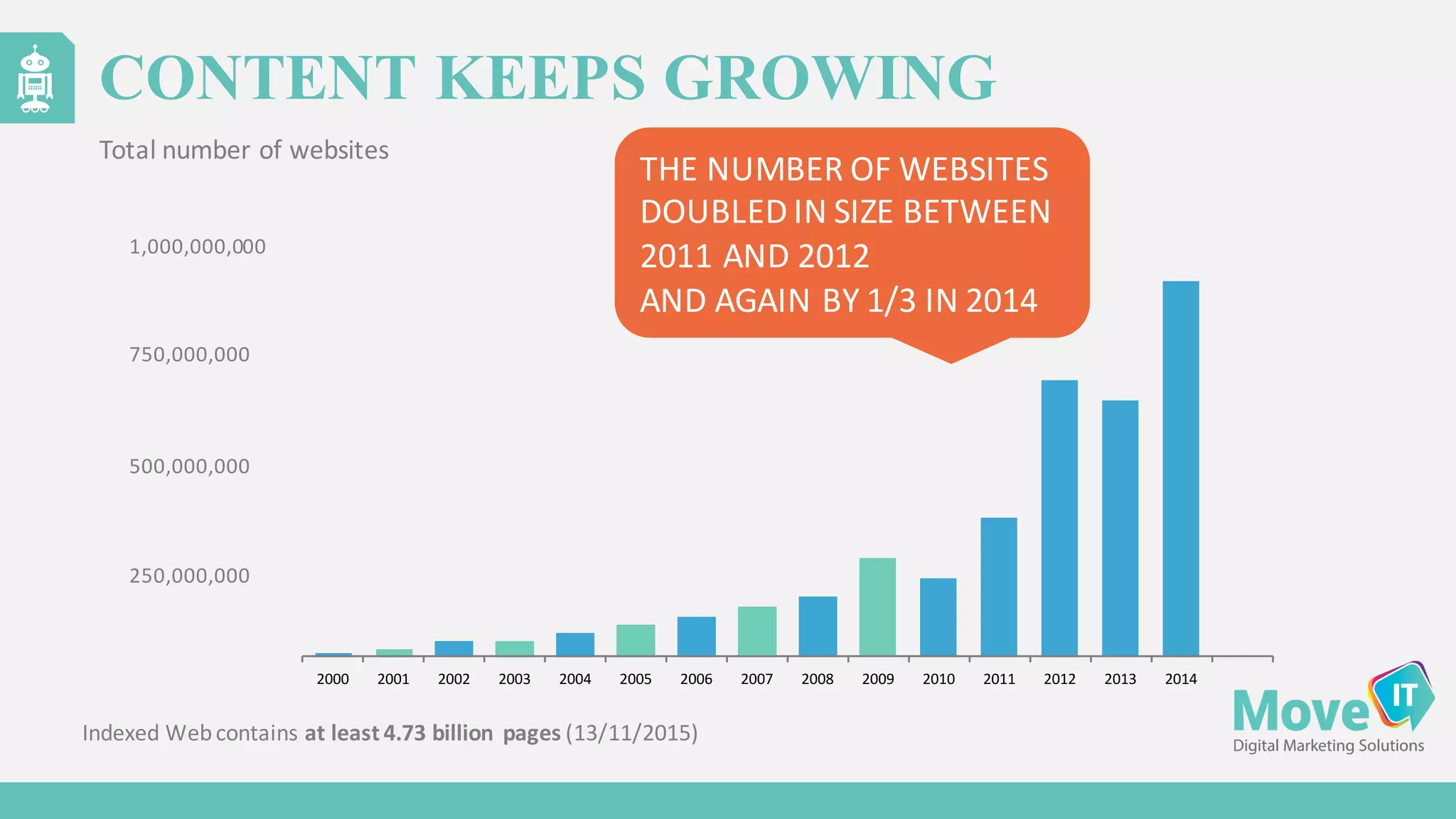

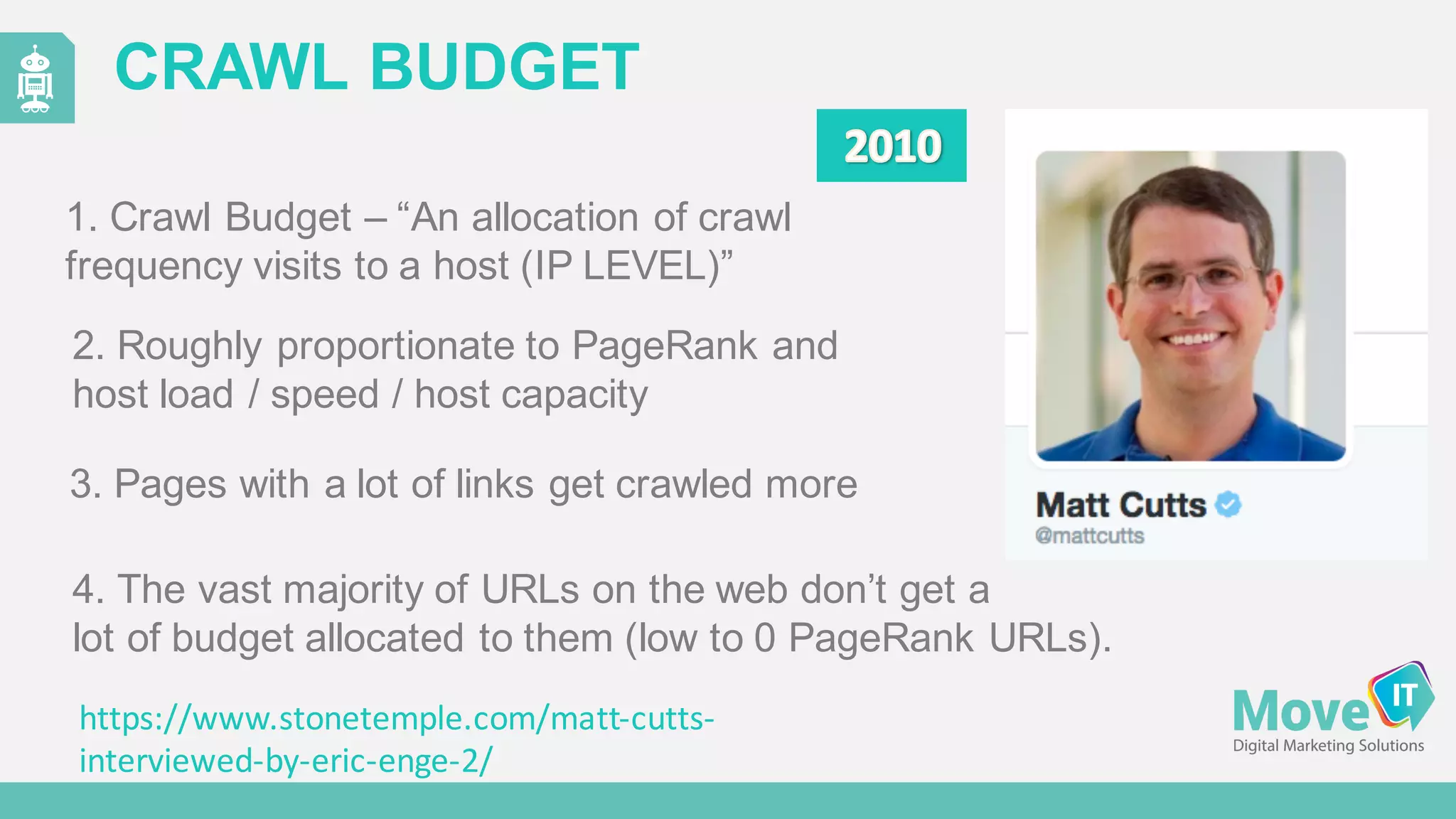

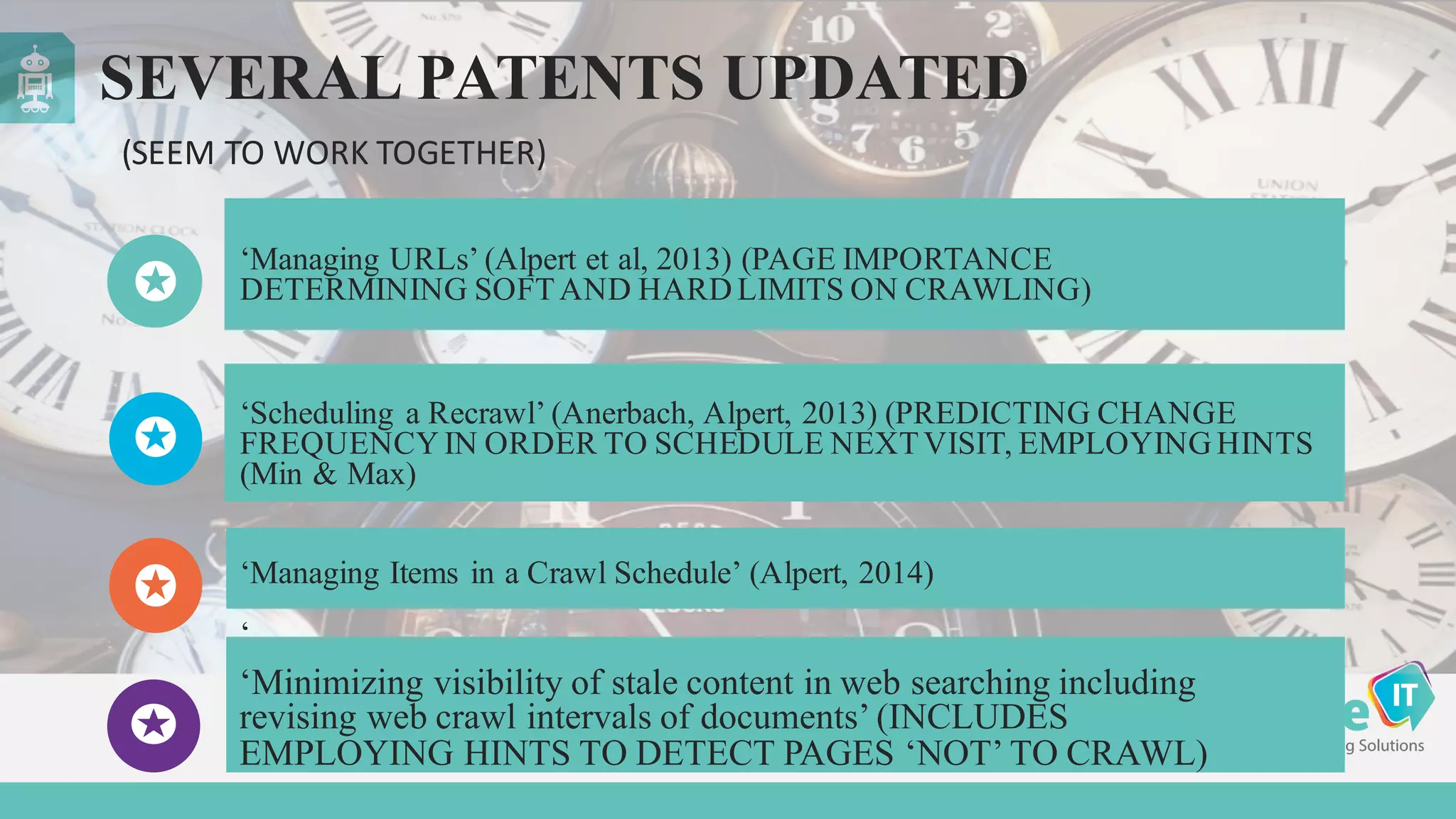

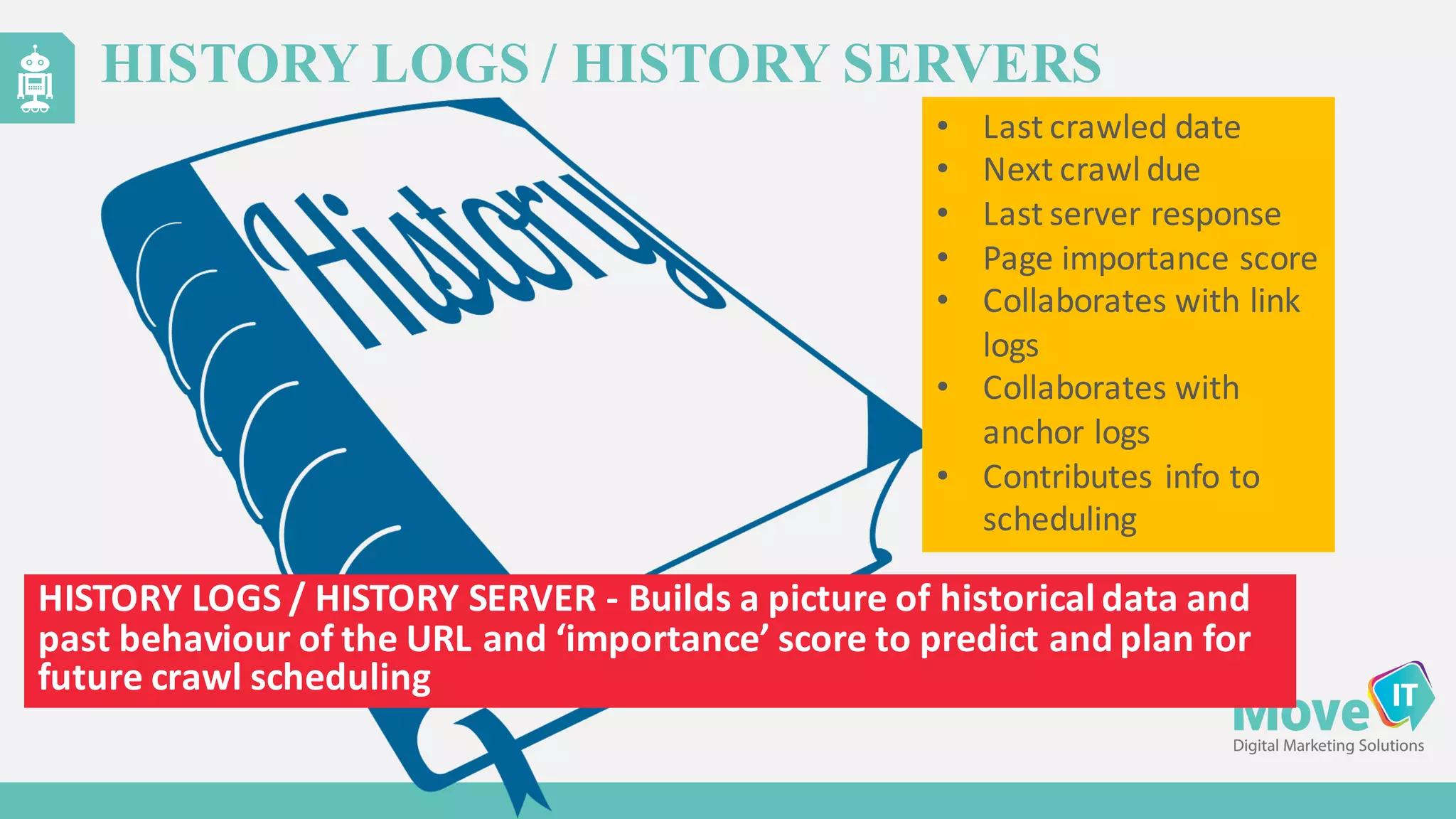

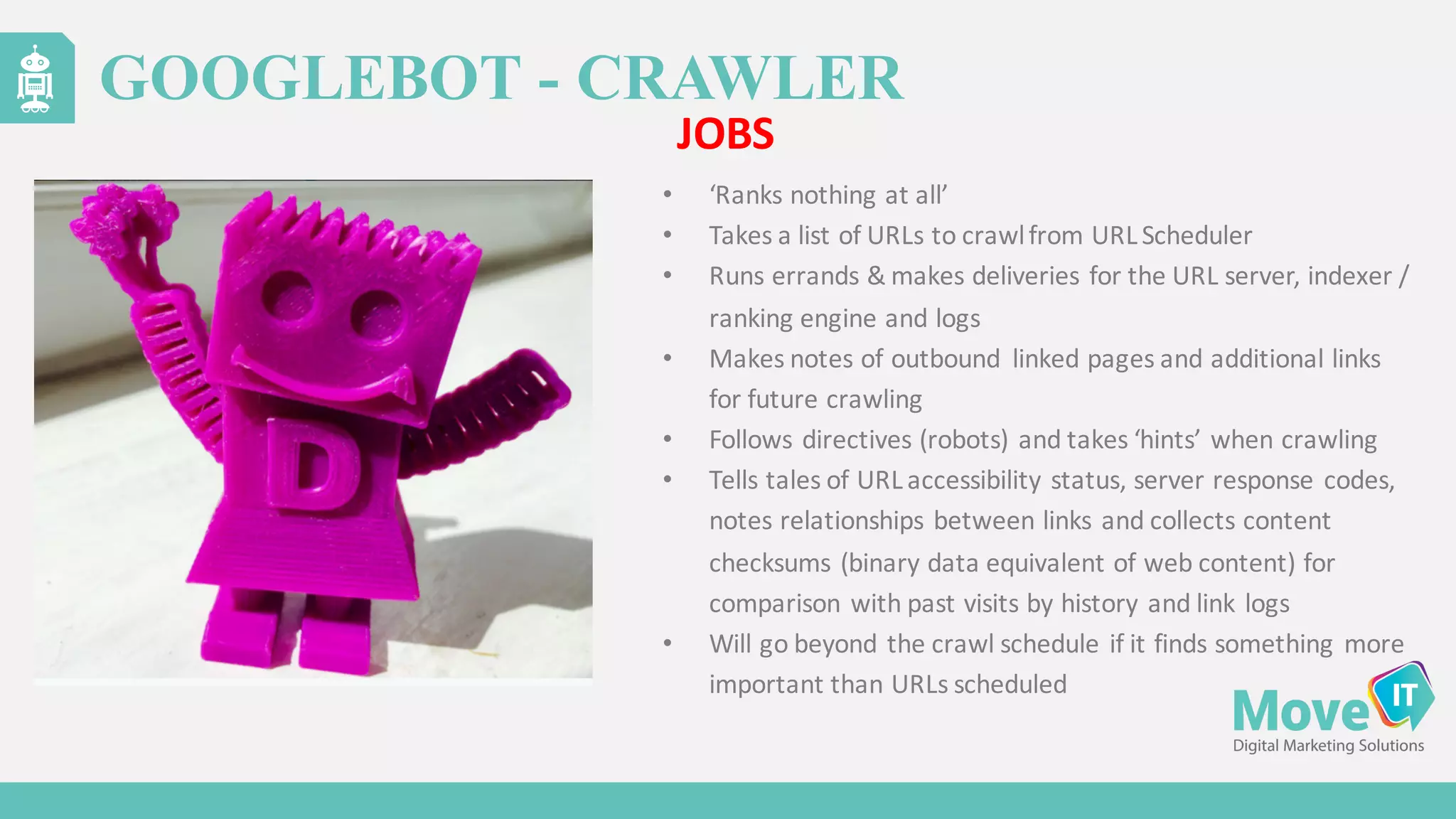

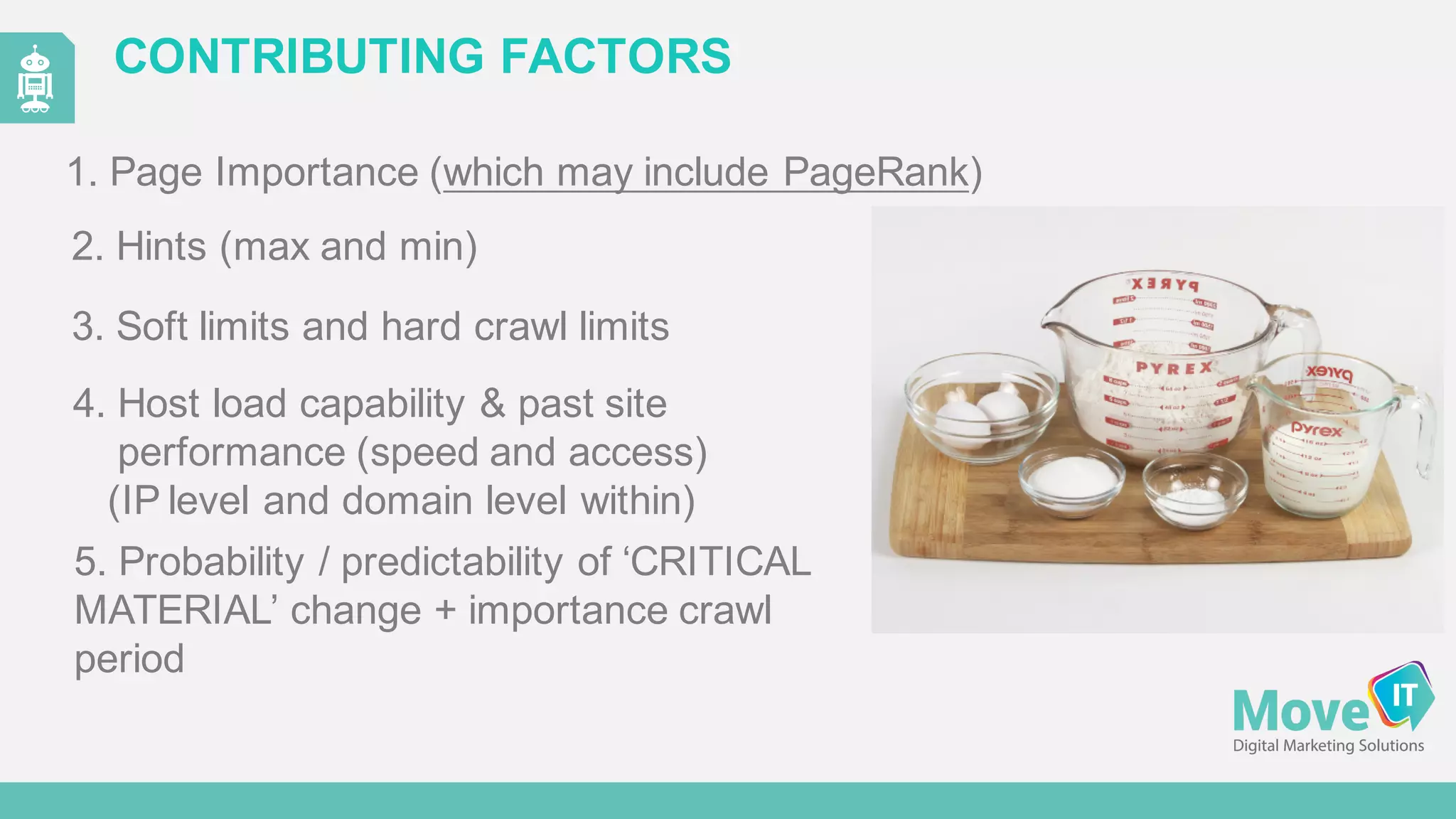

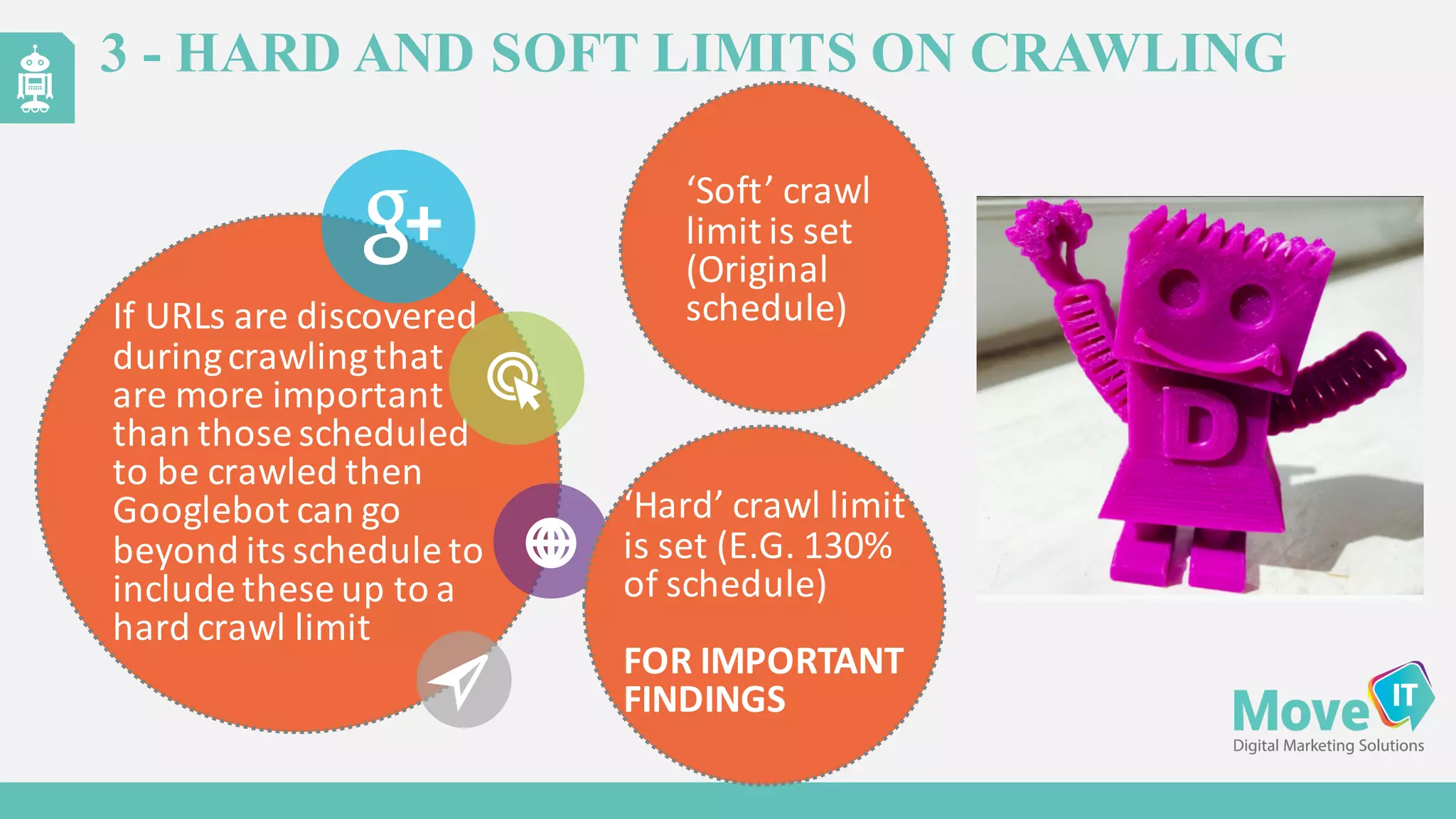

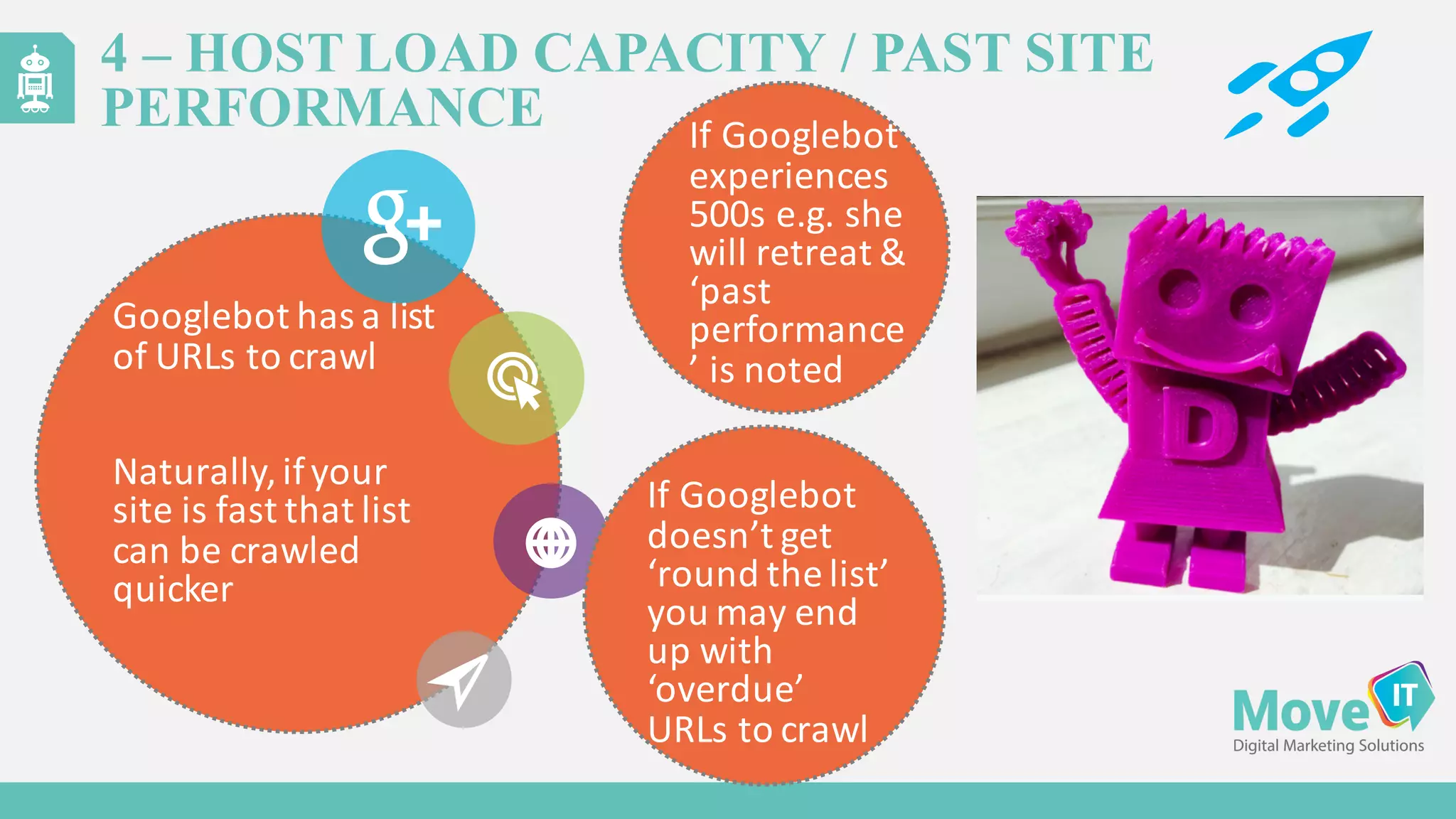

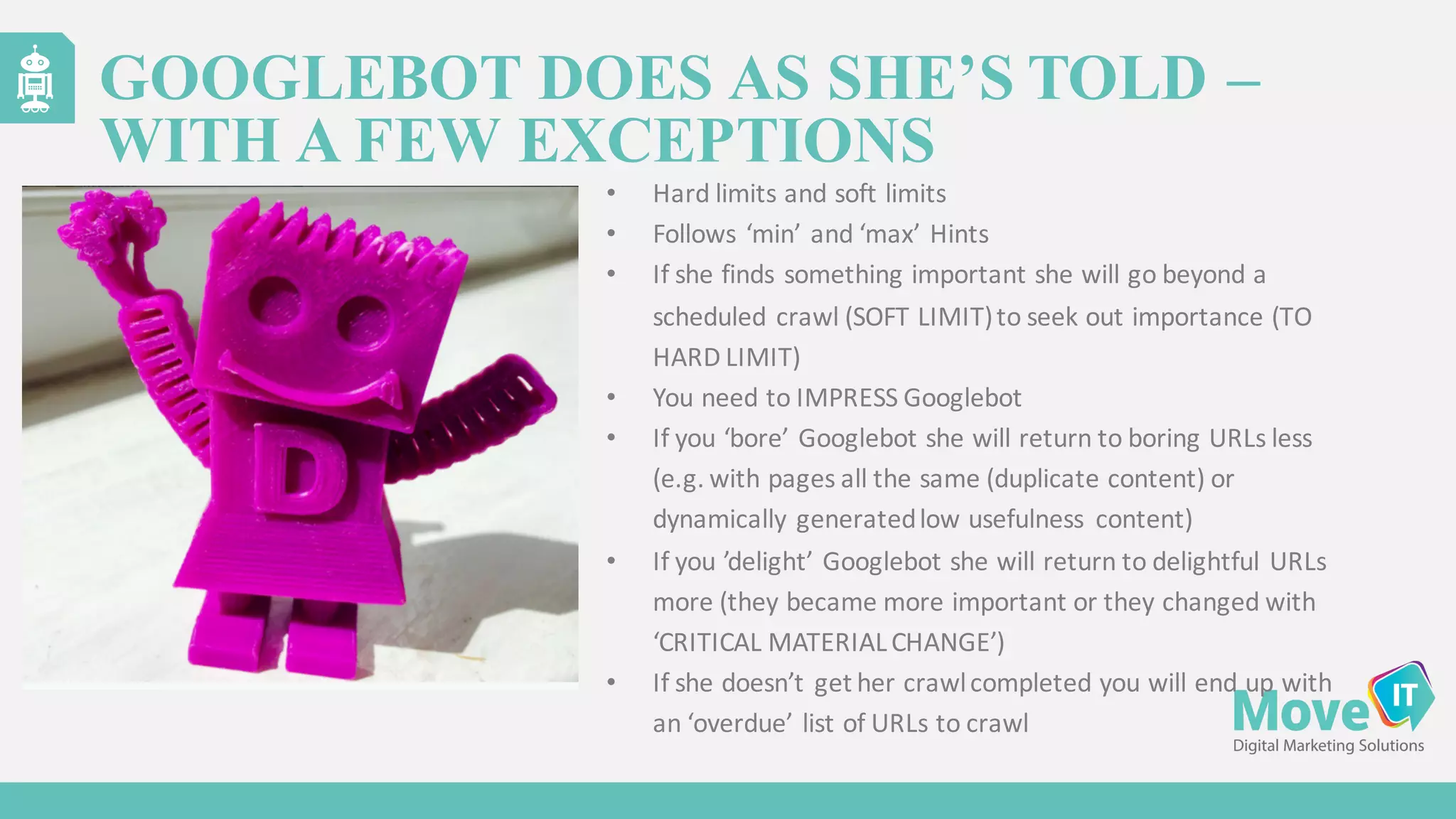

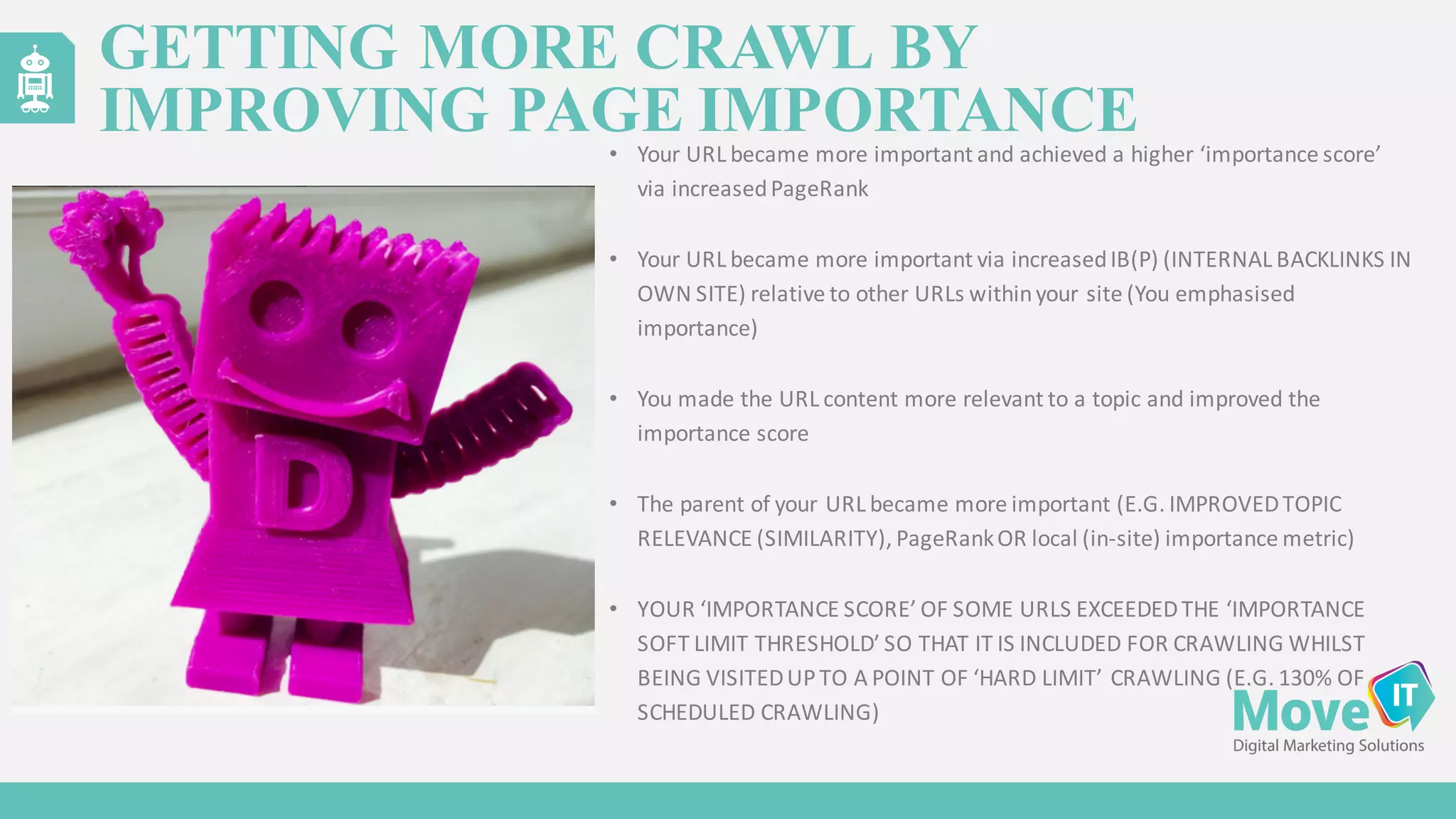

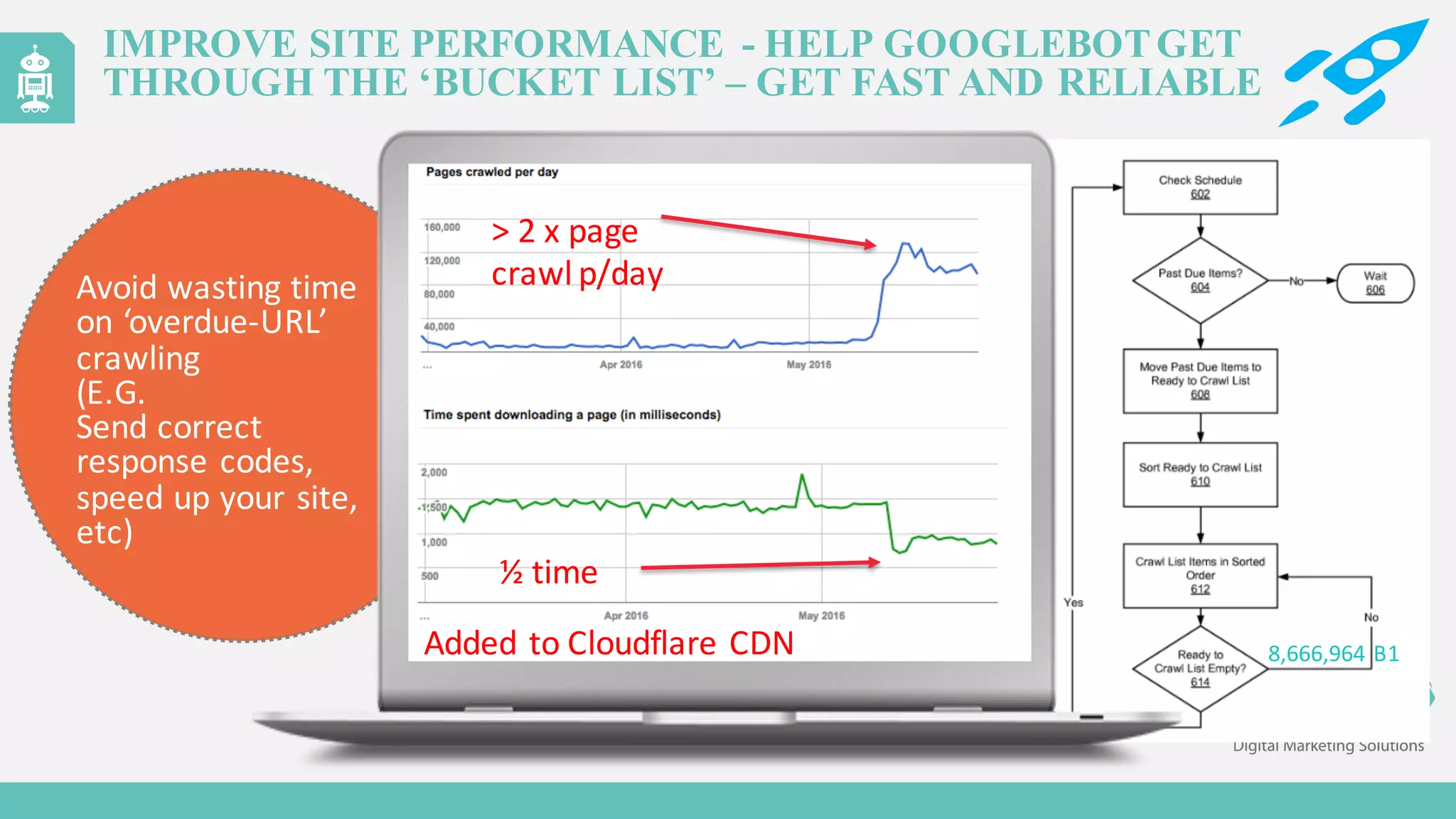

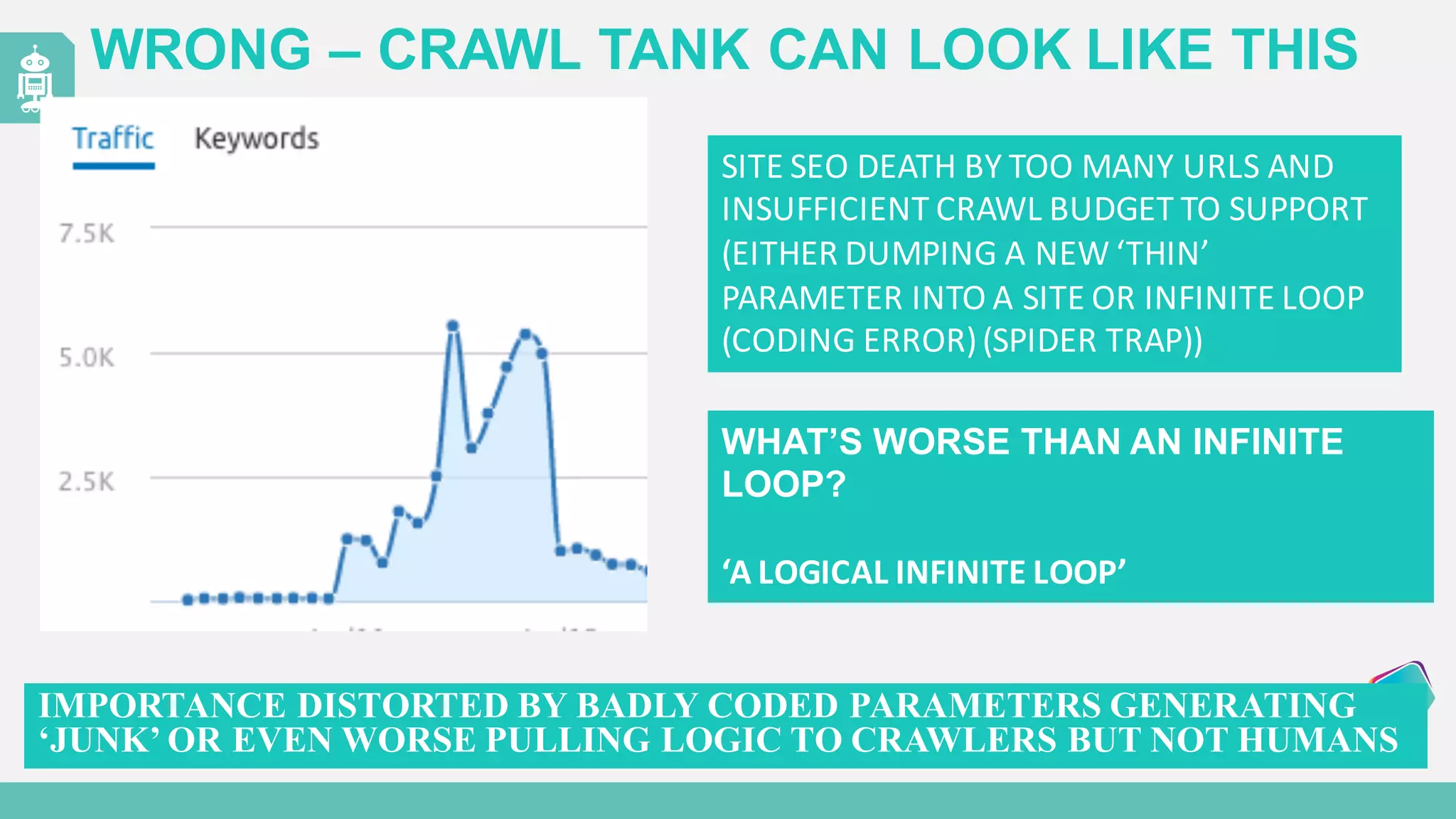

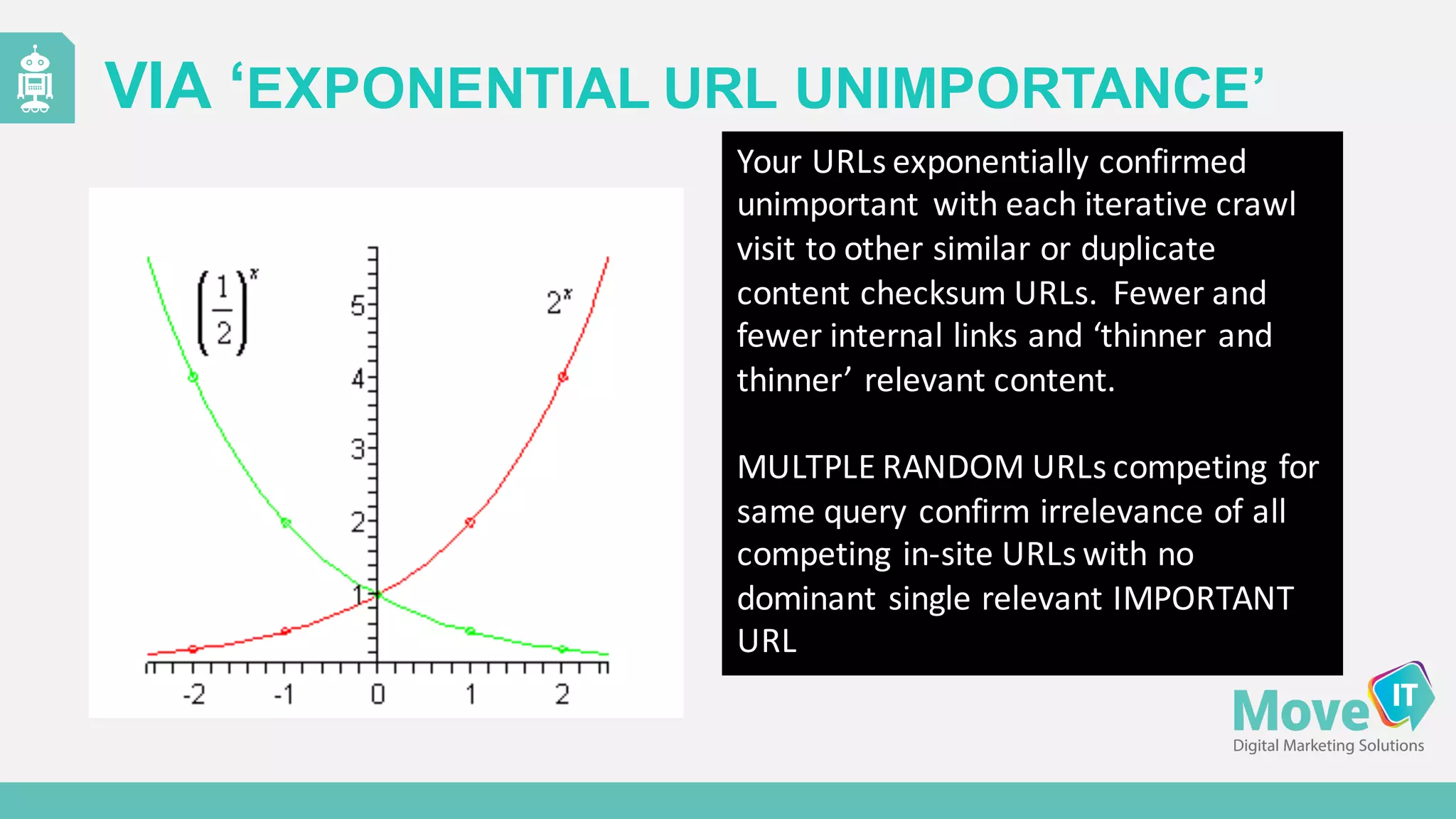

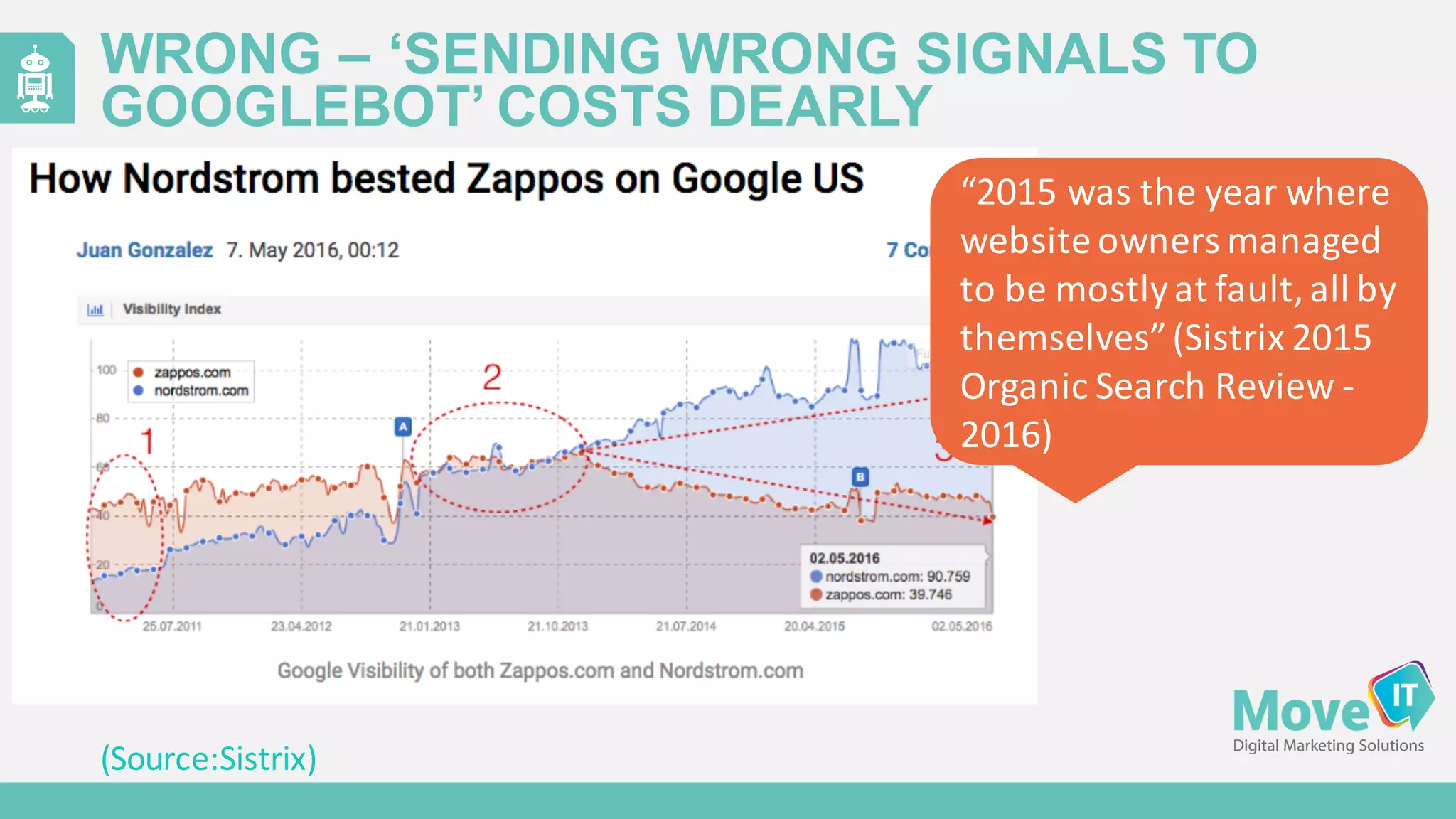

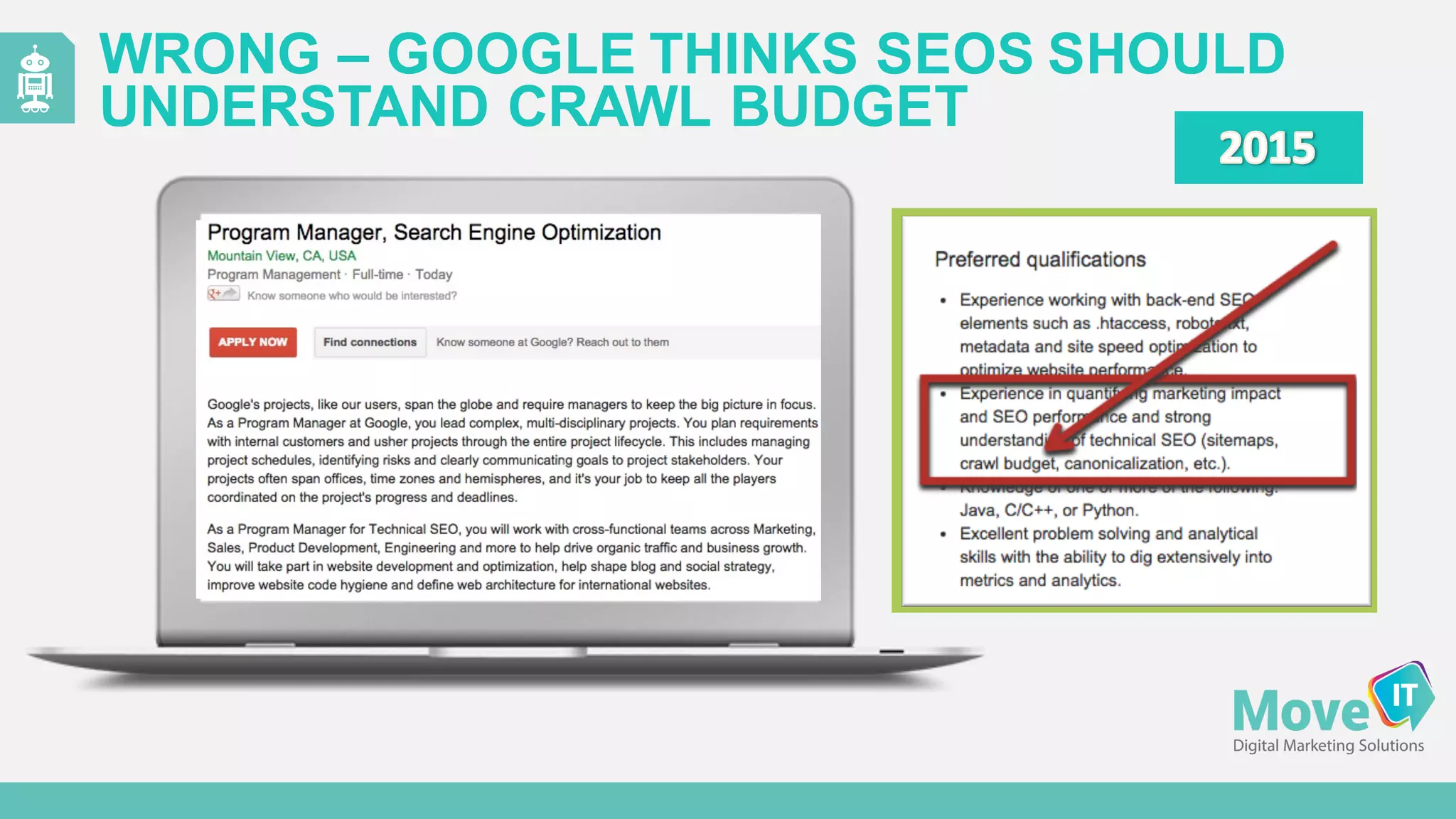

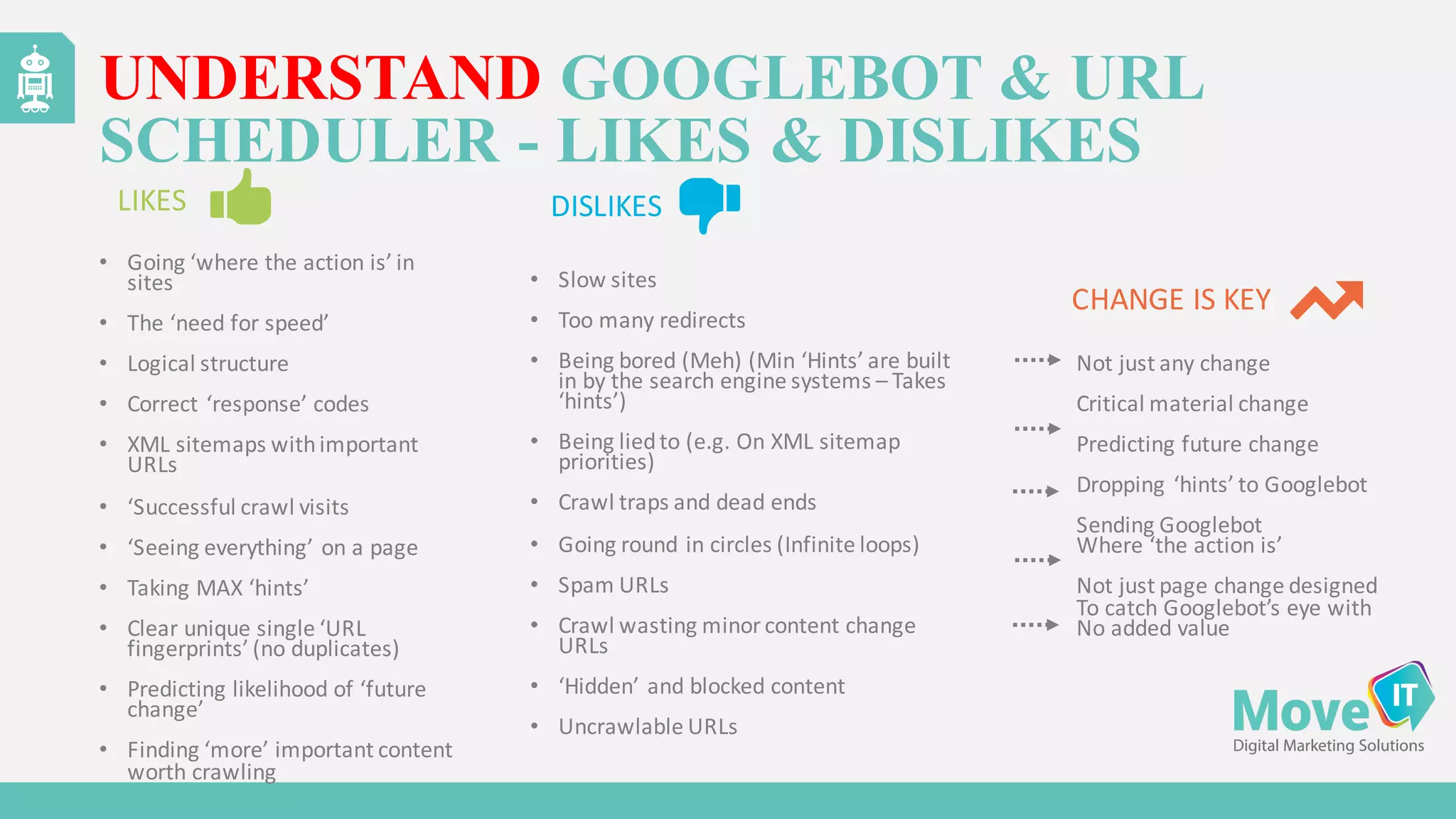

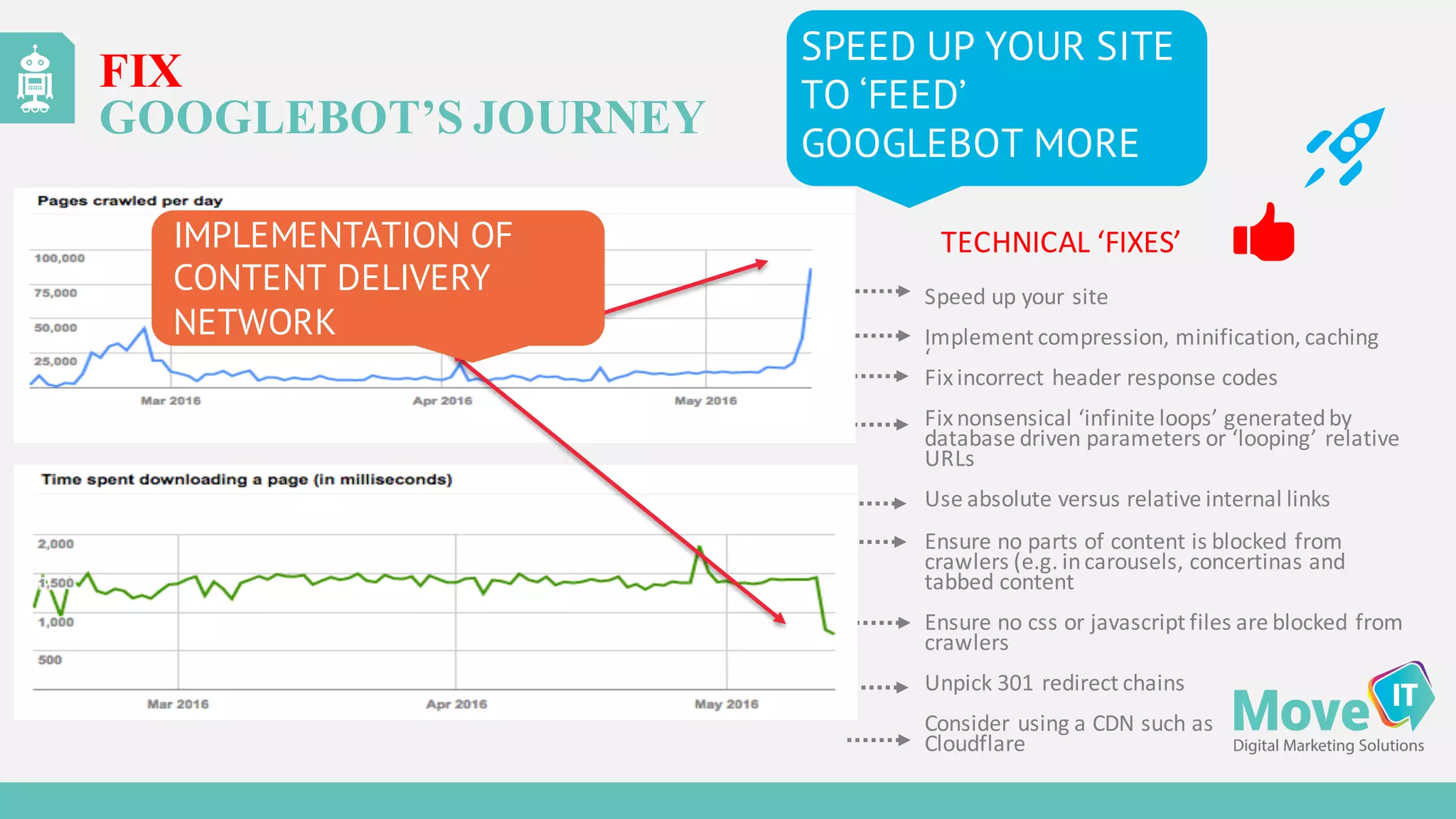

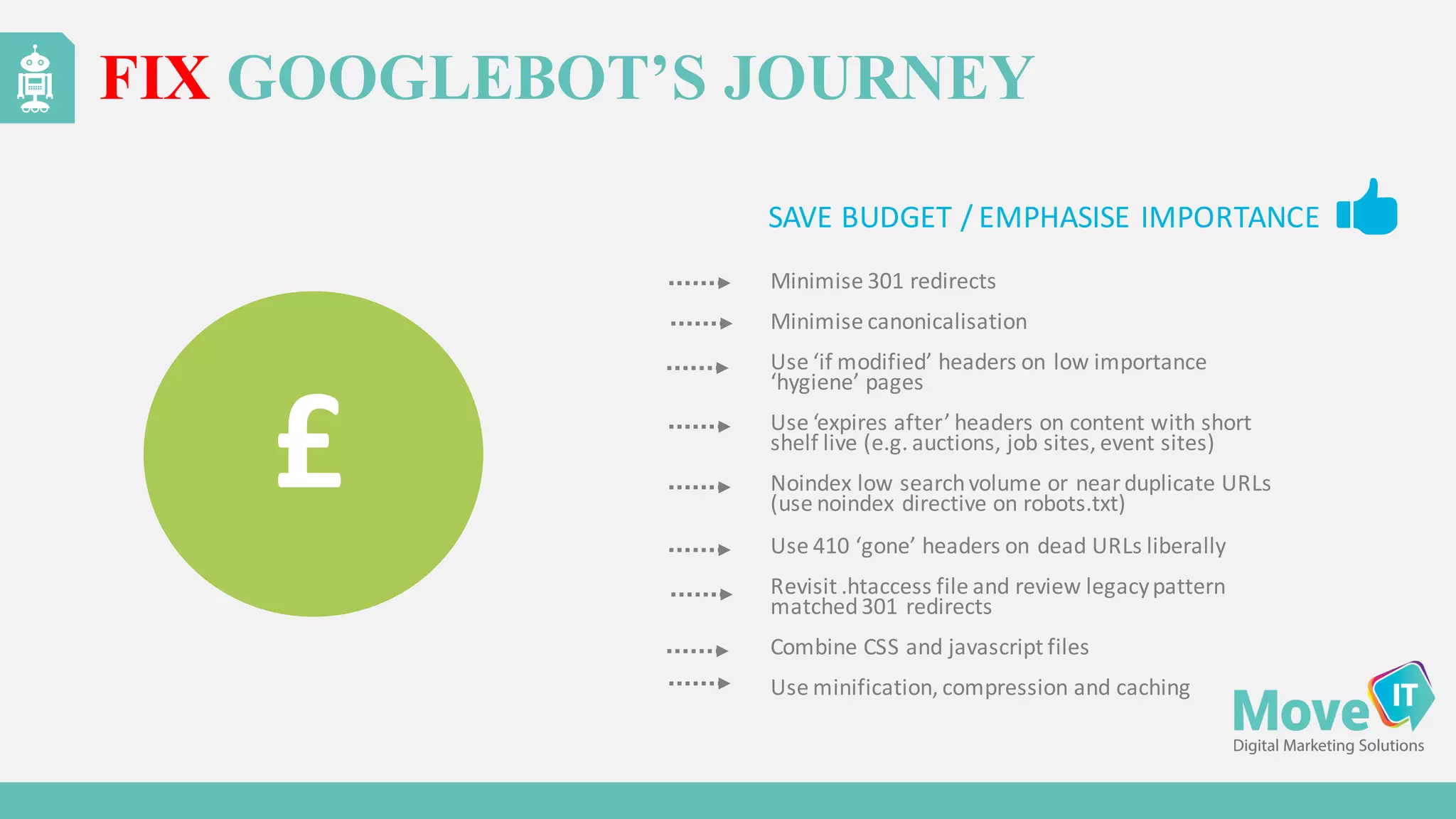

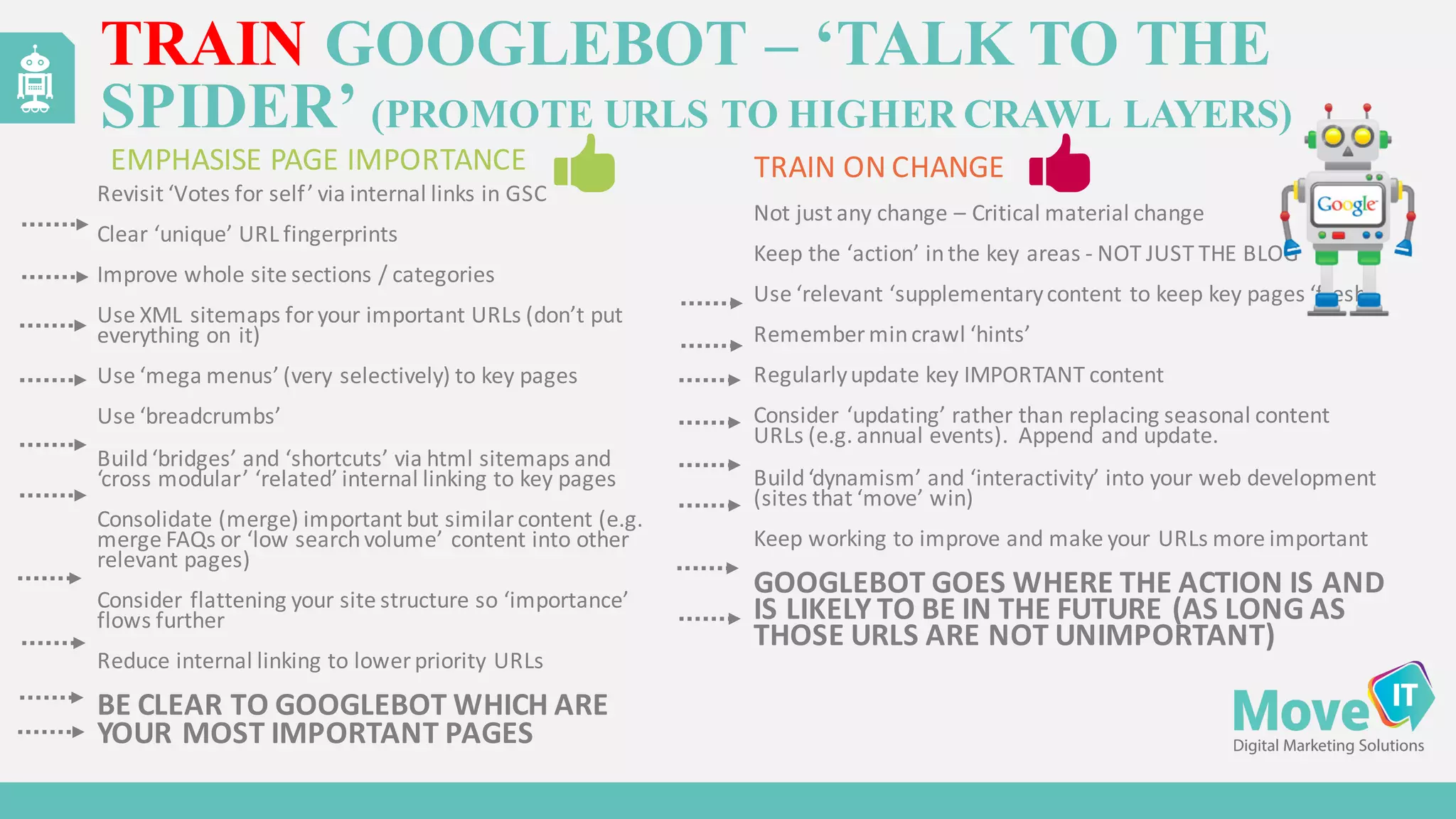

The document discusses the evolution of web content generation and Google's crawling mechanisms, highlighting the growth of user-generated content and the challenge of managing crawl budgets. It details how Googlebot prioritizes which pages to crawl based on factors such as page importance, server performance, and historical data. It also outlines strategies webmasters can use to improve their site's crawlability and, in turn, its search engine visibility.
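
To make the prioritization idea concrete, here is a minimal sketch of how a crawler with a limited budget might rank pages using the three factors named above. It is a toy model, not Googlebot's actual method: the `Page` fields, the `crawl_score` weights, and the `pick_pages` helper are all hypothetical names and values chosen for illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    importance: float       # assumed 0..1 score for page importance
    avg_response_ms: float  # assumed average server response time
    change_rate: float      # assumed share of past crawls that found new content


def crawl_score(page: Page) -> float:
    """Combine the three factors into one score (weights are illustrative only)."""
    # Faster servers get a value closer to 1, slower servers closer to 0.
    speed = 1.0 / (1.0 + page.avg_response_ms / 1000.0)
    return 0.5 * page.importance + 0.2 * speed + 0.3 * page.change_rate


def pick_pages(pages: list[Page], budget: int) -> list[str]:
    """Return the URLs of the `budget` highest-scoring pages, best first."""
    return [p.url for p in heapq.nlargest(budget, pages, key=crawl_score)]


if __name__ == "__main__":
    site = [
        Page("/home", importance=0.9, avg_response_ms=200, change_rate=0.8),
        Page("/old-archive", importance=0.1, avg_response_ms=1500, change_rate=0.0),
        Page("/blog", importance=0.6, avg_response_ms=300, change_rate=0.5),
    ]
    print(pick_pages(site, budget=2))
```

Under these assumed weights, a slow, rarely changing, low-importance page such as `/old-archive` falls outside a budget of two, which mirrors the point that faster responses and fresher, more important content make a page more likely to be crawled.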