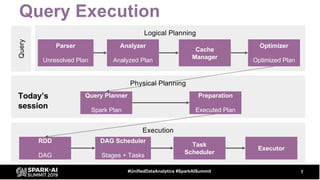

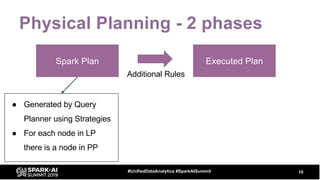

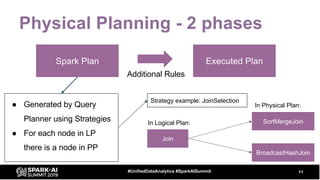

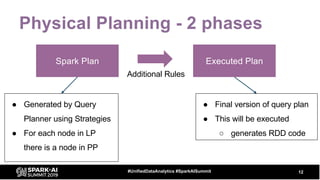

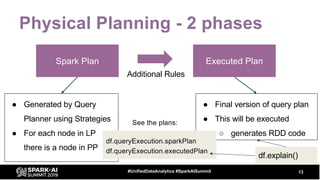

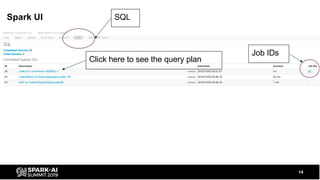

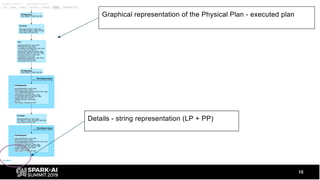

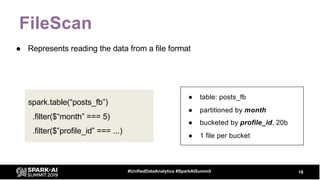

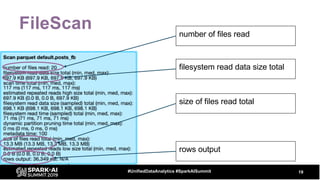

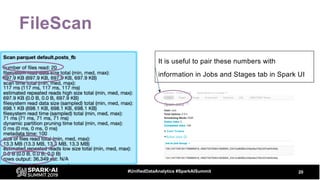

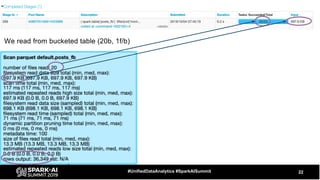

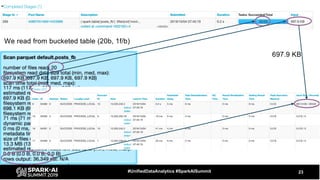

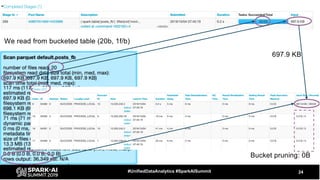

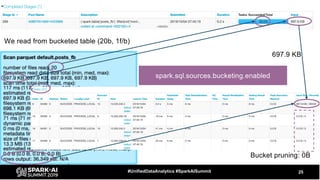

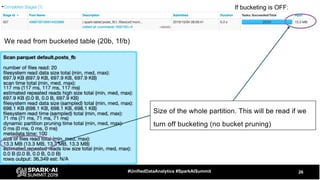

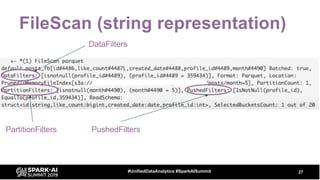

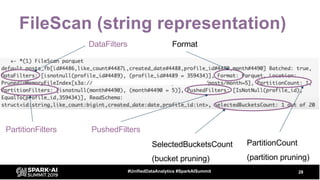

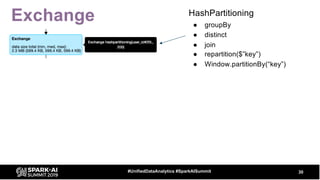

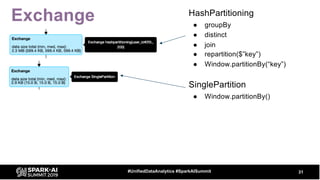

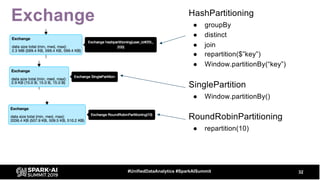

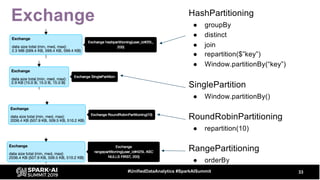

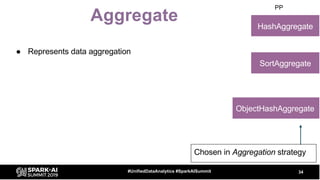

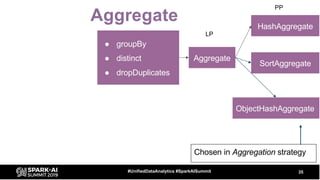

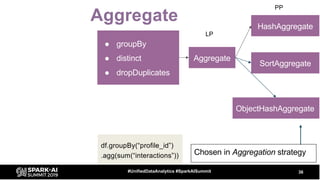

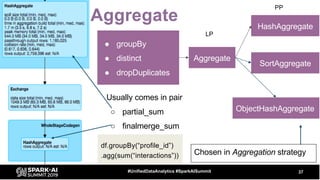

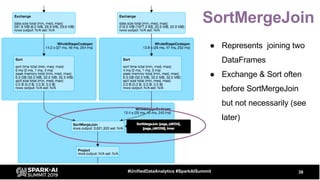

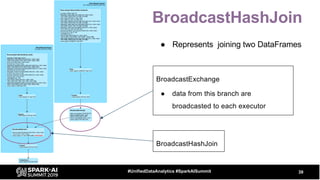

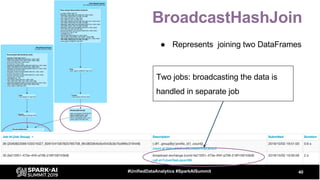

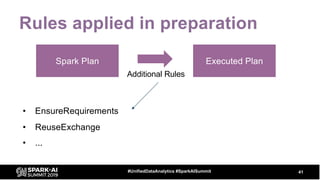

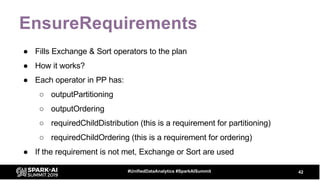

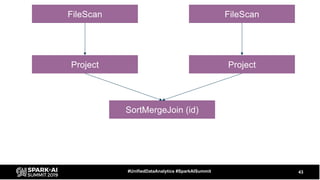

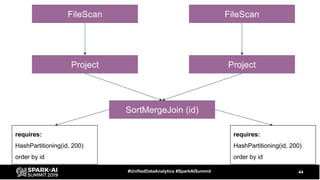

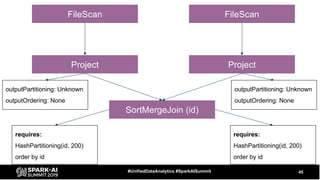

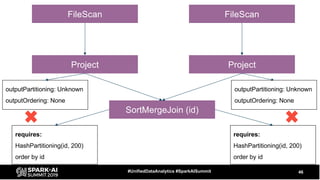

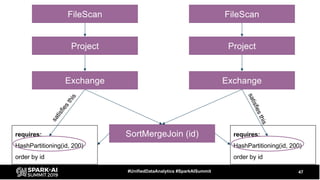

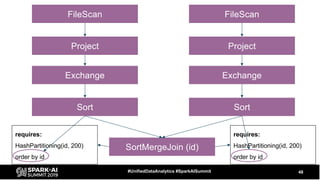

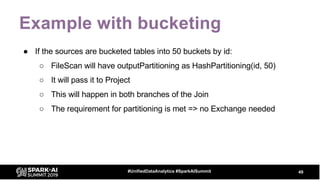

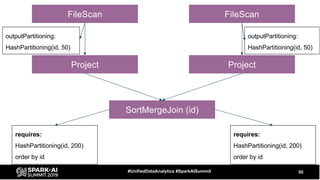

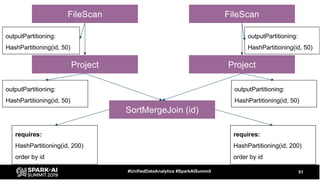

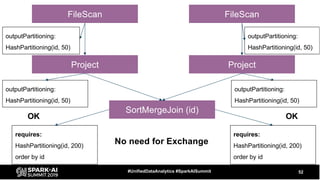

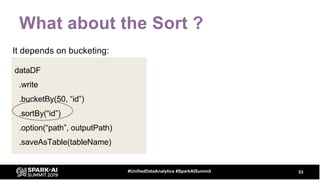

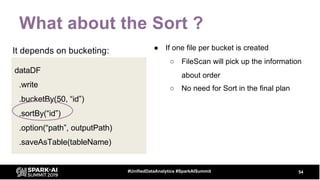

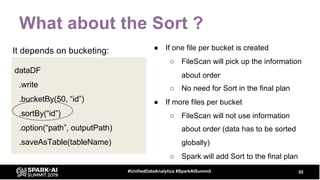

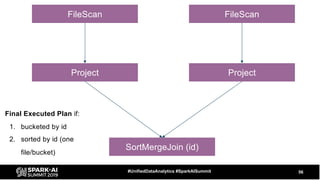

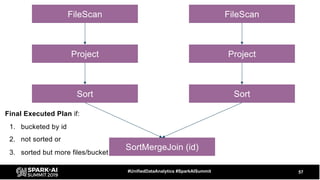

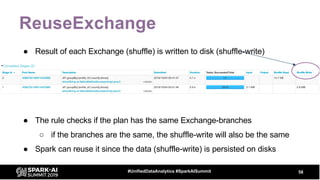

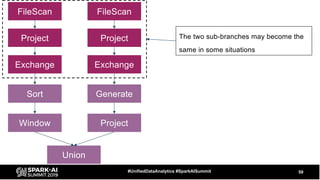

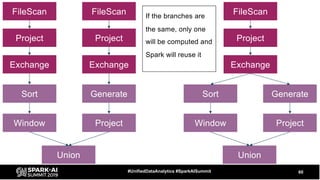

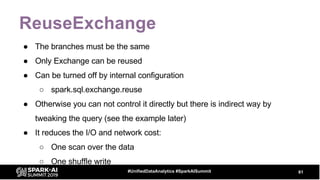

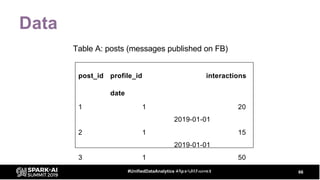

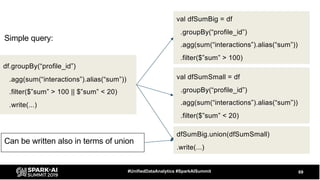

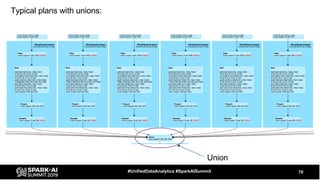

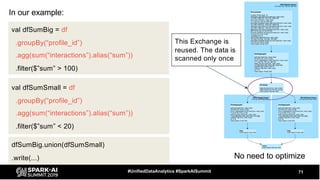

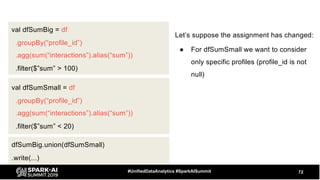

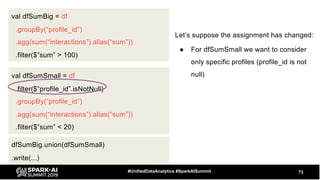

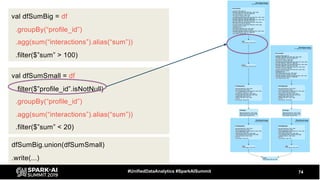

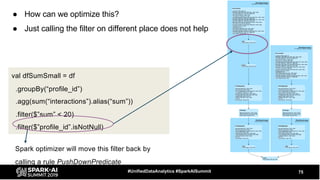

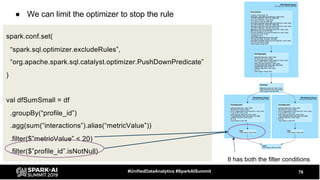

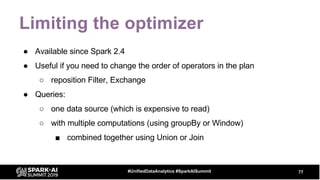

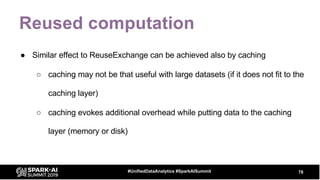

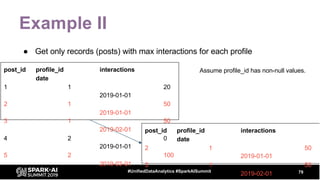

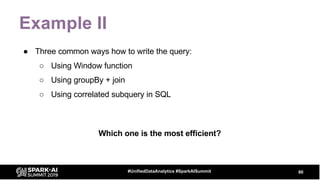

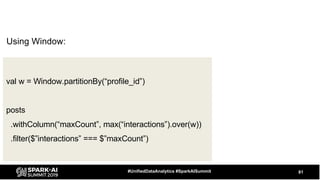

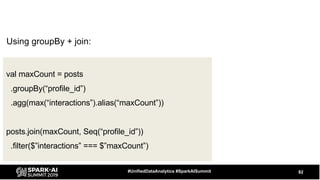

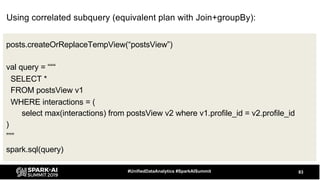

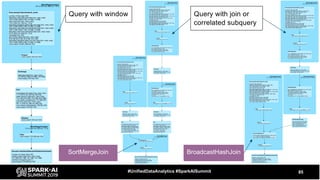

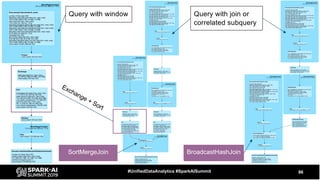

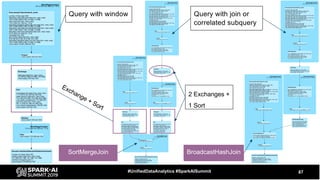

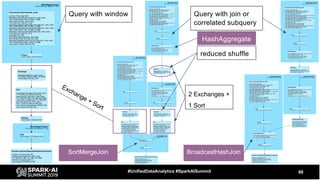

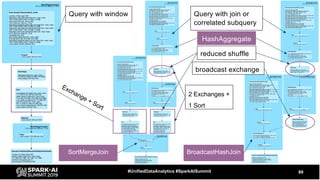

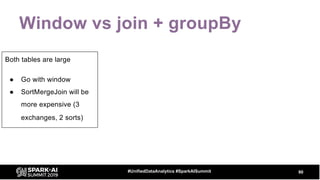

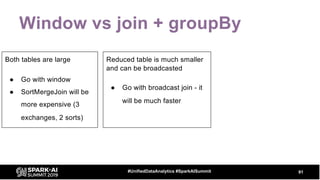

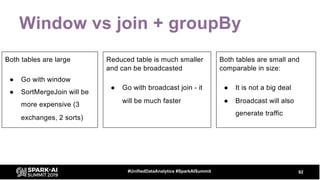

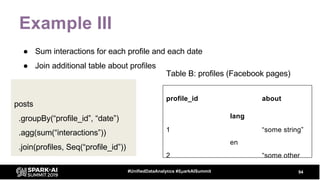

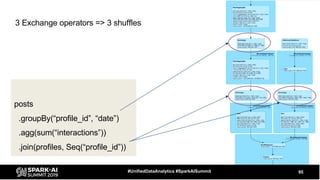

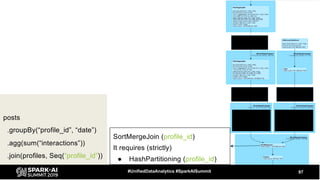

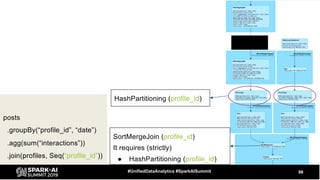

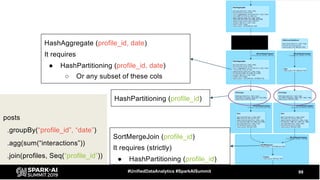

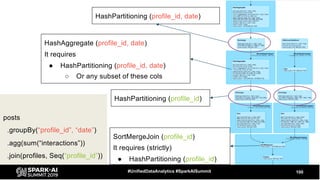

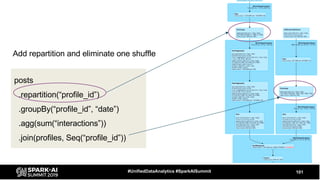

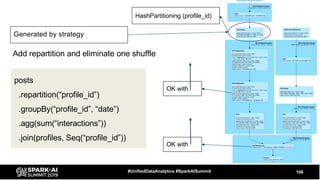

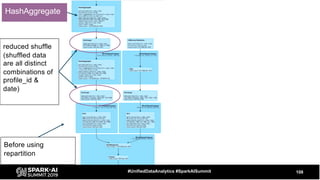

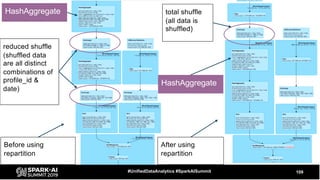

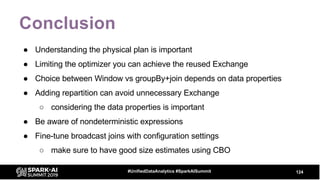

This document covers developing and optimizing ETL pipelines with Spark, focusing on query execution, logical planning, and physical plan operators. It is based on a two-part presentation by David Vrba that explains the theory behind Spark's query execution and gives practical examples of query optimization. Key topics include reading Spark's execution plans, the phases of physical planning, and strategies for improving the performance of data processing tasks.