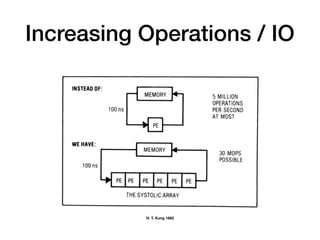

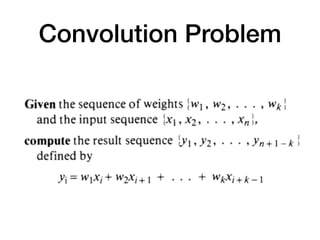

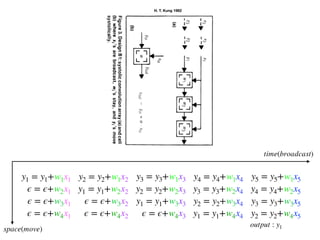

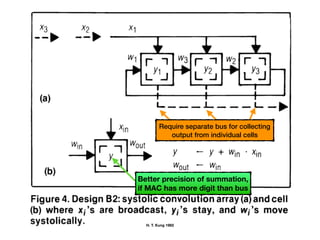

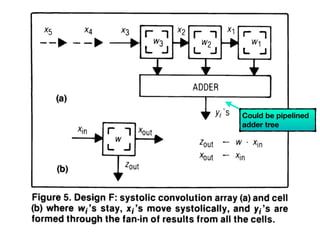

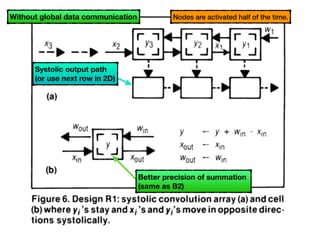

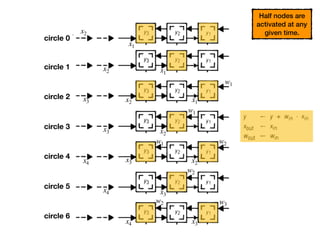

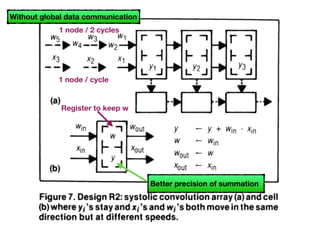

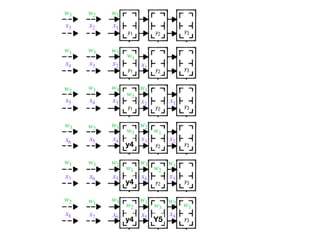

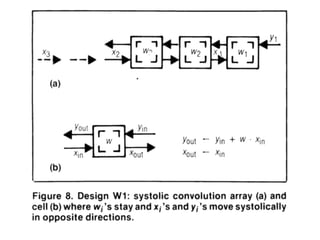

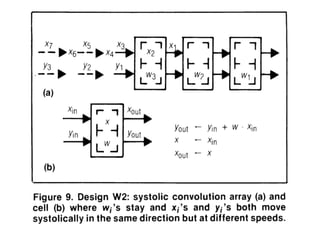

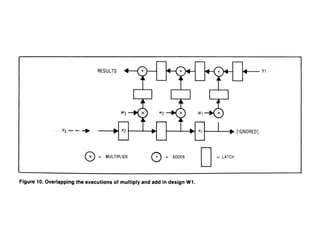

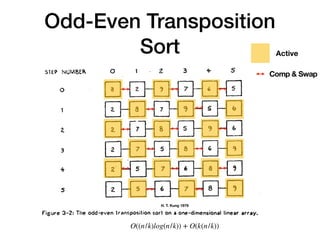

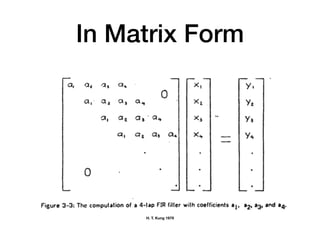

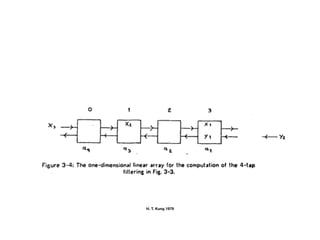

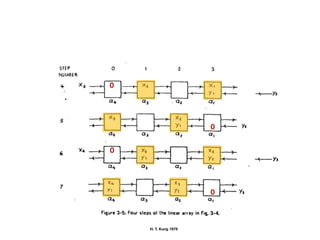

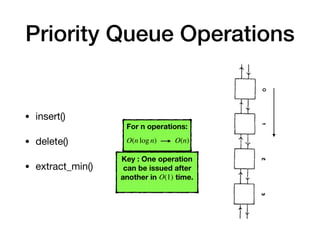

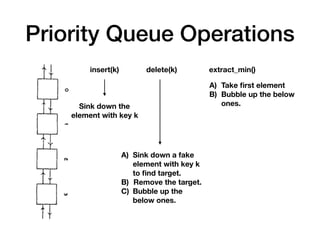

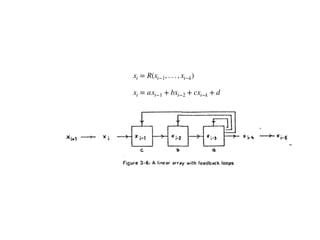

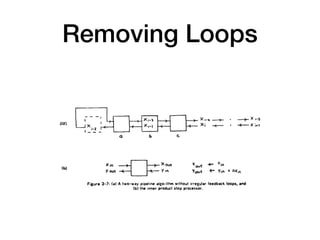

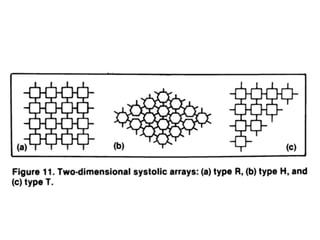

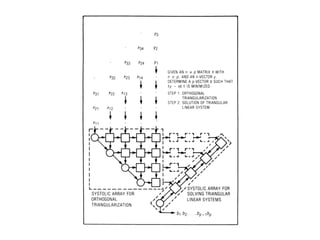

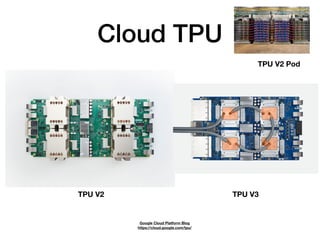

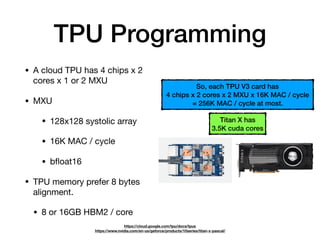

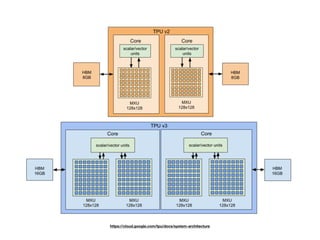

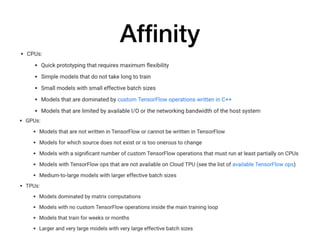

The document discusses systolic arrays and their use in hardware acceleration. It describes how systolic arrays can efficiently implement algorithms such as convolution, sorting, filtering, and priority queues in a massively parallel manner. A systolic array arranges simple processing elements (PEs) in a regular grid and pumps data through it in lockstep. Each PE operates on values received from its neighbours and passes data on to the next PE. This regular structure and purely local data movement make systolic arrays well suited to hardware. The document also discusses Google's Tensor Processing Unit (TPU), which uses a systolic array design to accelerate deep learning workloads.
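To make the data movement concrete, here is a minimal Python sketch that simulates a 2D systolic array computing a matrix product, the core operation in the deep learning workloads the TPU targets. It assumes an output-stationary dataflow, chosen only for simplicity. Each PE keeps one output accumulator while rows of `A` stream in from the left and columns of `B` stream in from the top, each skewed by one cycle per row or column. This is an illustrative cycle-level model, not the design shown in the slides or the TPU's actual dataflow, and the function name `systolic_matmul` is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def systolic_matmul(A: Matrix, B: Matrix) -> Matrix:
    """Cycle-level simulation of an n x m output-stationary systolic array.

    Assumption (illustrative only): PE (i, j) accumulates C[i][j]. Every cycle
    it multiplies the value arriving from its left neighbour (an element of A)
    by the value arriving from above (an element of B), adds the product to
    its accumulator, and forwards both values to its right/lower neighbours.
    """
    n, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions must match")
    m = len(B[0])

    acc = [[0.0] * m for _ in range(n)]    # stationary outputs, one per PE
    a_reg = [[0.0] * m for _ in range(n)]  # A value each PE latched last cycle
    b_reg = [[0.0] * m for _ in range(n)]  # B value each PE latched last cycle

    # Row i of A enters column 0 delayed by i cycles; column j of B enters
    # row 0 delayed by j cycles. This skew makes matching operands meet.
    for t in range(k + n + m - 2):
        new_a = [[0.0] * m for _ in range(n)]
        new_b = [[0.0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Left input: boundary feed for column 0, else left neighbour.
                if j == 0:
                    s = t - i
                    new_a[i][j] = A[i][s] if 0 <= s < k else 0.0
                else:
                    new_a[i][j] = a_reg[i][j - 1]
                # Top input: boundary feed for row 0, else upper neighbour.
                if i == 0:
                    s = t - j
                    new_b[i][j] = B[s][j] if 0 <= s < k else 0.0
                else:
                    new_b[i][j] = b_reg[i - 1][j]
        # All PEs update on the same clock edge (synchronous operation).
        for i in range(n):
            for j in range(m):
                acc[i][j] += new_a[i][j] * new_b[i][j]
        a_reg, b_reg = new_a, new_b
    return acc


if __name__ == "__main__":
    print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

The skew is what keeps all communication local. `A[i][s]` and `B[s][j]` both reach PE (i, j) at cycle i + j + s, so no PE ever needs data from anywhere but its immediate neighbours. An n × m array with inner dimension k finishes after k + n + m − 2 cycles. Zero-padding at the boundaries simply contributes zero products.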