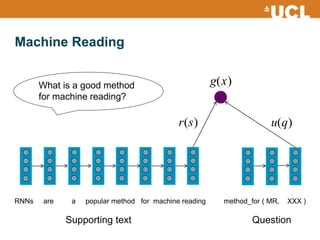

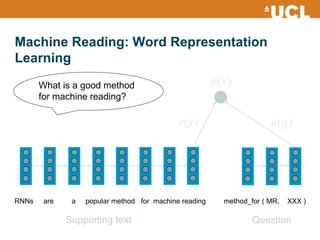

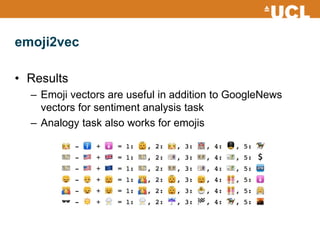

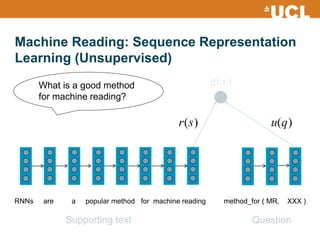

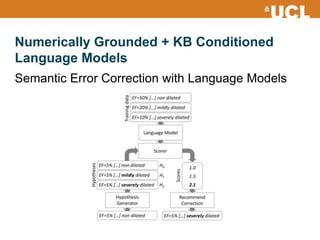

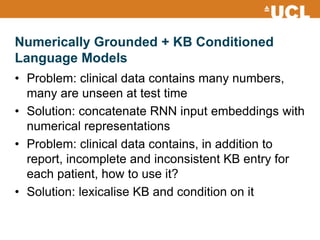

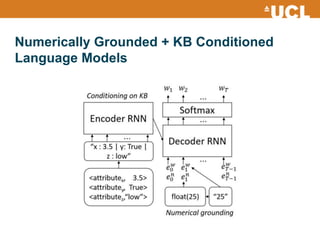

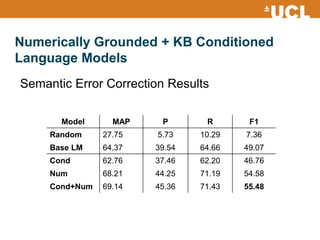

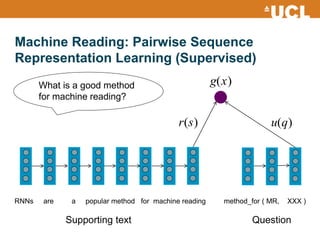

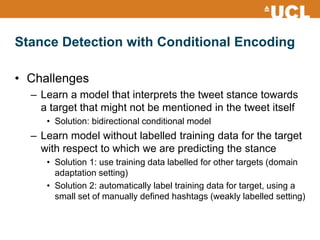

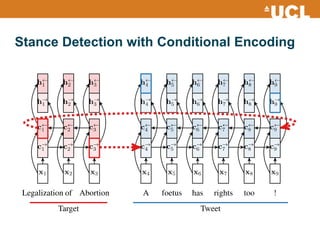

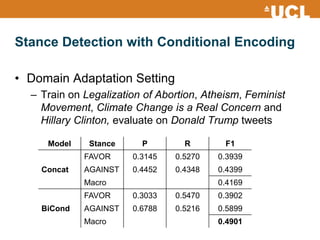

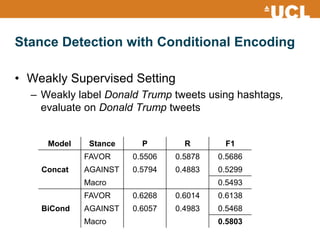

The document discusses weakly supervised machine reading, covering word representation learning, sequence representation learning, and stance detection. It highlights recurrent neural networks (RNNs) as the common tool for these tasks and presents two specific approaches: emoji2vec, which learns vector representations of emojis, and numerically grounded language models for semantic error correction. For stance detection, which classifies a text's attitude towards a given target, it emphasizes conditional encoding, which makes classification possible even when no labeled training data is available for that target.
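Conditional encoding can be illustrated with a minimal sketch: one encoder reads the target, and its final hidden state becomes the initial state of a second encoder that reads the text, so the text representation is computed "in light of" the target. This is an illustration under stated assumptions, not the method from the slides. A plain tanh RNN stands in for the LSTM cells such models typically use. The embeddings and weights are random toy values, the label set (FAVOR, AGAINST, NONE) is assumed, and all names (`SimpleRNN`, `conditional_encode`, `classify`) are hypothetical. Because nothing is trained, the predicted label is meaningless; only the data flow is the point.

```python
import math
import random
from typing import List, Optional, Tuple

Vector = List[float]
Matrix = List[List[float]]

# Assumed label set for illustration only.
STANCES = ["FAVOR", "AGAINST", "NONE"]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class SimpleRNN:
    """Hypothetical tanh RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b)."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: random.Random) -> None:
        self.hidden_dim = hidden_dim
        self.W_x = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim)] for _ in range(hidden_dim)]
        self.W_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)] for _ in range(hidden_dim)]
        self.b = [0.0] * hidden_dim

    def encode(self, xs: List[Vector], h0: Optional[Vector] = None) -> Vector:
        """Run over the sequence xs, starting from h0 (zeros if not given)."""
        h = list(h0) if h0 is not None else [0.0] * self.hidden_dim
        for x in xs:
            wx = matvec(self.W_x, x)
            wh = matvec(self.W_h, h)
            h = [math.tanh(a + c + d) for a, c, d in zip(wx, wh, self.b)]
        return h


def conditional_encode(target_rnn: SimpleRNN, text_rnn: SimpleRNN,
                       target: List[Vector], text: List[Vector]) -> Vector:
    """Encode the target, then start the text encoder from the target's final state."""
    h_target = target_rnn.encode(target)
    return text_rnn.encode(text, h0=h_target)


def classify(h: Vector, W_out: Matrix) -> Tuple[str, List[float]]:
    """Linear layer plus a numerically stable softmax over the assumed stance labels."""
    logits = matvec(W_out, h)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    return STANCES[probs.index(max(probs))], probs


if __name__ == "__main__":
    rng = random.Random(0)
    emb_dim, hidden_dim = 4, 8
    target_rnn = SimpleRNN(emb_dim, hidden_dim, rng)
    text_rnn = SimpleRNN(emb_dim, hidden_dim, rng)
    W_out = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)] for _ in STANCES]
    # Toy "word embeddings": a 2-token target and a 5-token text.
    target = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(2)]
    text = [[rng.uniform(-1, 1) for _ in range(emb_dim)] for _ in range(5)]
    h = conditional_encode(target_rnn, text_rnn, target, text)
    label, probs = classify(h, W_out)
    print(label, [round(p, 3) for p in probs])
```

The design choice that matters is the hand-off of `h_target` as `h0`: the same text yields a different representation for a different target, which is what lets a classifier trained on some targets be applied to a target it has no labeled examples for. With an empty target the sketch falls back to ordinary, unconditional encoding.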