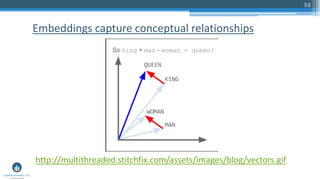

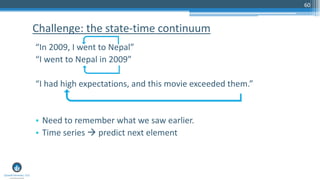

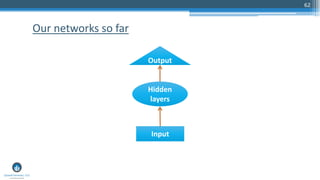

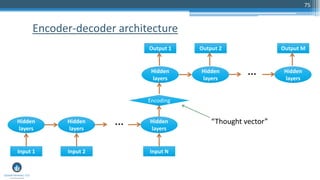

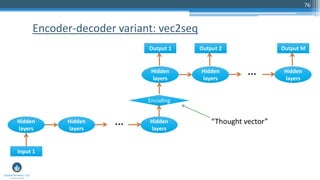

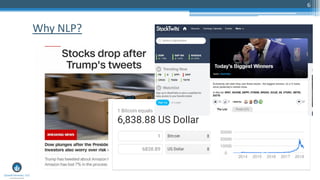

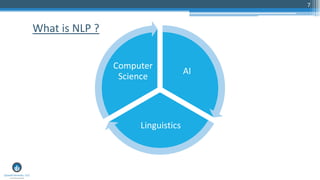

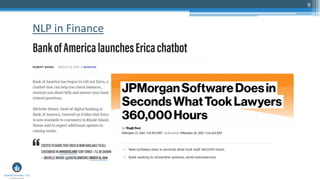

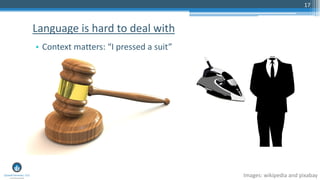

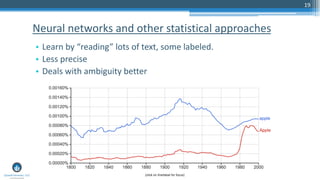

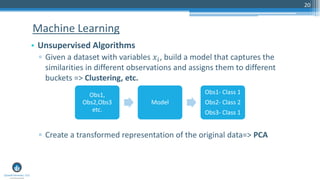

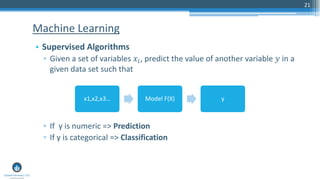

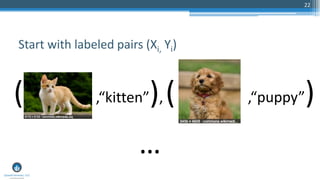

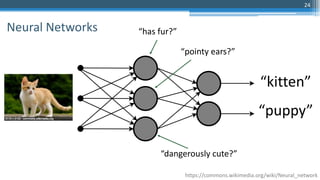

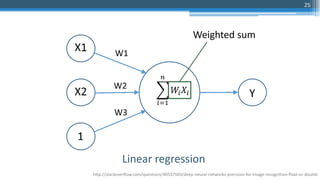

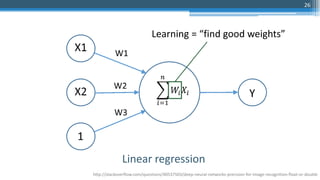

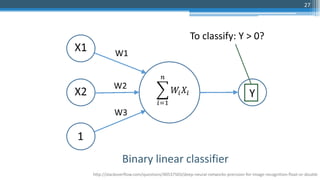

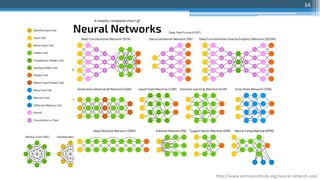

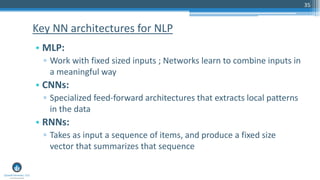

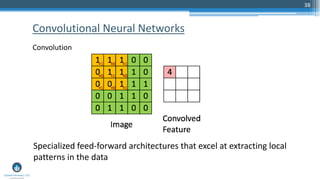

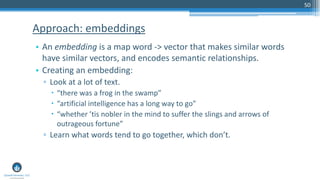

The document is a comprehensive primer on neural network models for natural language processing (NLP). It covers foundational concepts, applications, and architectures such as recurrent and convolutional neural networks. It discusses why understanding language matters and the challenges posed by ambiguity and context, and it outlines applications in areas such as finance and sentiment analysis. It also covers training methods and the use of embeddings to improve word representations in NLP tasks.

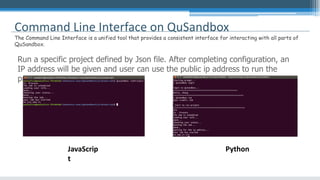

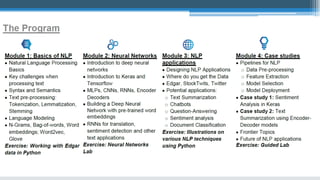

![52

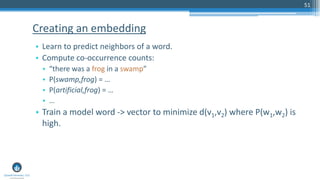

Frog:

Swamp:

Computer:

…

Compute error in predicting P(w1,w2) given d(v1,v2).

Update weights:

Frog:

Swamp:

Computer:

Creating an embedding

[0.2, 0.7, 0.11, …, 0.52]

[0.9, 0.55, 0.4, …, 0.8]

[0.3, 0.6, 0.01, …, 0.7]

[0.3, 0.65, 0.3, …, 0.6]

[0.7, 0.6, 0.4, …, 0.7]

[0.5, 0.3, 0.02, …, 0.4]

1)

2)

3)](https://image.slidesharecdn.com/nlp-workshop-odsc-180507165339/85/Nlp-and-Neural-Networks-workshop-52-320.jpg)
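The slide's loop can be sketched in code: predict how likely two words are to co-occur from the distance between their vectors, measure the error, and update the vectors. This is a minimal, hypothetical sketch and not the workshop's own implementation. It makes the following assumptions:

- d(v1, v2) is the squared Euclidean distance.
- The predicted P(w1, w2) is exp(-d(v1, v2)).
- The error is half the squared difference from a target probability, minimized by plain gradient descent.

The target co-occurrence probabilities are invented for illustration. Each vector keeps only the first three components visible on the slide, because the rest are elided.

```python
import math

# Hypothetical 3-d starting vectors: only the first three components shown
# on the slide (the elided "…" components are dropped).
vectors = {
    "frog": [0.2, 0.7, 0.11],
    "swamp": [0.9, 0.55, 0.4],
    "computer": [0.3, 0.6, 0.01],
}

# Hypothetical target co-occurrence probabilities P(w1, w2).
targets = {
    ("frog", "swamp"): 0.9,
    ("frog", "computer"): 0.05,
    ("swamp", "computer"): 0.05,
}

def distance(v1, v2):
    """Assumed d(v1, v2): squared Euclidean distance."""
    return sum((a - b) ** 2 for a, b in zip(v1, v2))

def predict(v1, v2):
    """Assumed model: predicted P(w1, w2) = exp(-d(v1, v2))."""
    return math.exp(-distance(v1, v2))

def loss(vecs):
    """Half the summed squared error between predicted and target P(w1, w2)."""
    return 0.5 * sum((predict(vecs[a], vecs[b]) - p) ** 2
                     for (a, b), p in targets.items())

def train_step(vecs, lr=0.1):
    """One full-batch gradient-descent step over all word pairs."""
    grads = {w: [0.0] * len(v) for w, v in vecs.items()}
    for (a, b), p in targets.items():
        va, vb = vecs[a], vecs[b]
        p_hat = predict(va, vb)
        # Chain rule: dL/dd = (p_hat - p) * dp_hat/dd, and dp_hat/dd = -p_hat.
        dl_dd = (p_hat - p) * (-p_hat)
        for i in range(len(va)):
            # dd/dva_i = 2 * (va_i - vb_i); dd/dvb_i is its negative.
            g = dl_dd * 2 * (va[i] - vb[i])
            grads[a][i] += g
            grads[b][i] -= g
    # "Update weights": move every vector against its gradient.
    for w, v in vecs.items():
        for i in range(len(v)):
            v[i] -= lr * grads[w][i]

initial_loss = loss(vectors)
for _ in range(2000):
    train_step(vectors)
final_loss = loss(vectors)

print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
print("d(frog, swamp)    =", round(distance(vectors["frog"], vectors["swamp"]), 3))
print("d(frog, computer) =", round(distance(vectors["frog"], vectors["computer"]), 3))
```

Because exp(-d) is largest when two vectors coincide, a pair with a high target probability (frog, swamp) is pulled together, while a pair with a low target (frog, computer) is pushed apart until the prediction matches the target.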