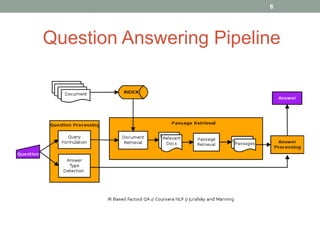

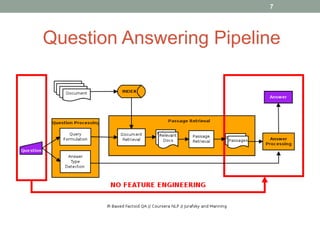

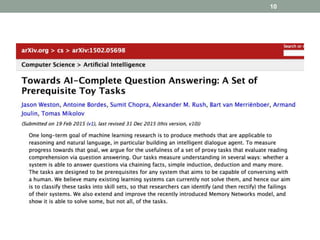

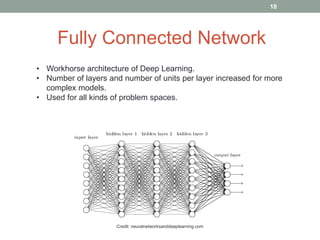

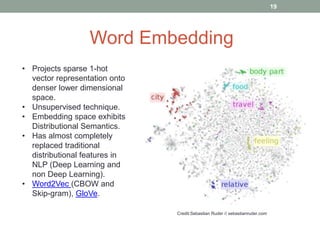

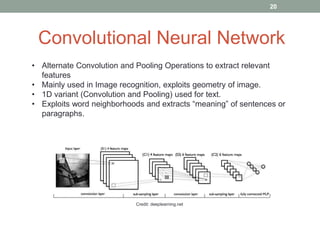

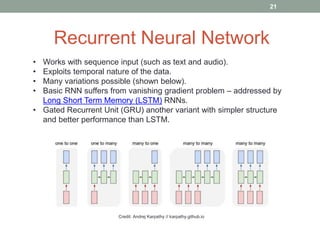

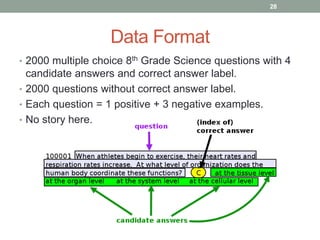

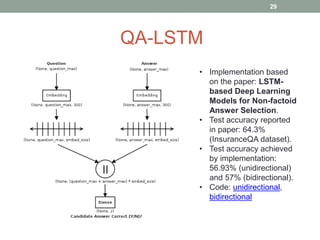

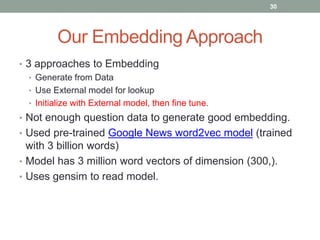

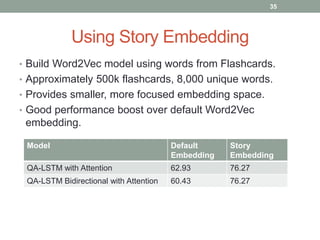

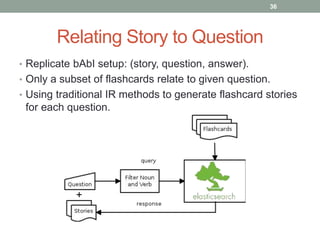

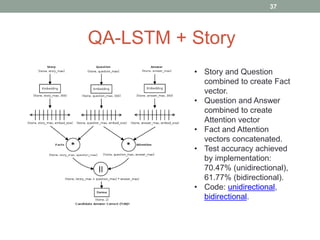

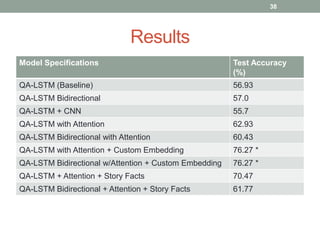

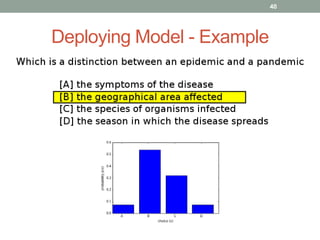

This document discusses deep learning models for question answering. It gives an overview of common deep learning building blocks: fully connected networks, word embeddings, convolutional neural networks, and recurrent neural networks. It then summarizes the authors' experiments applying these techniques to benchmark question answering datasets such as bAbI and a Kaggle science question dataset. Their best model reached an accuracy of 76.27% by incorporating custom word embeddings trained on external knowledge sources. The authors close with future work, including trying additional models and deploying the trained systems.