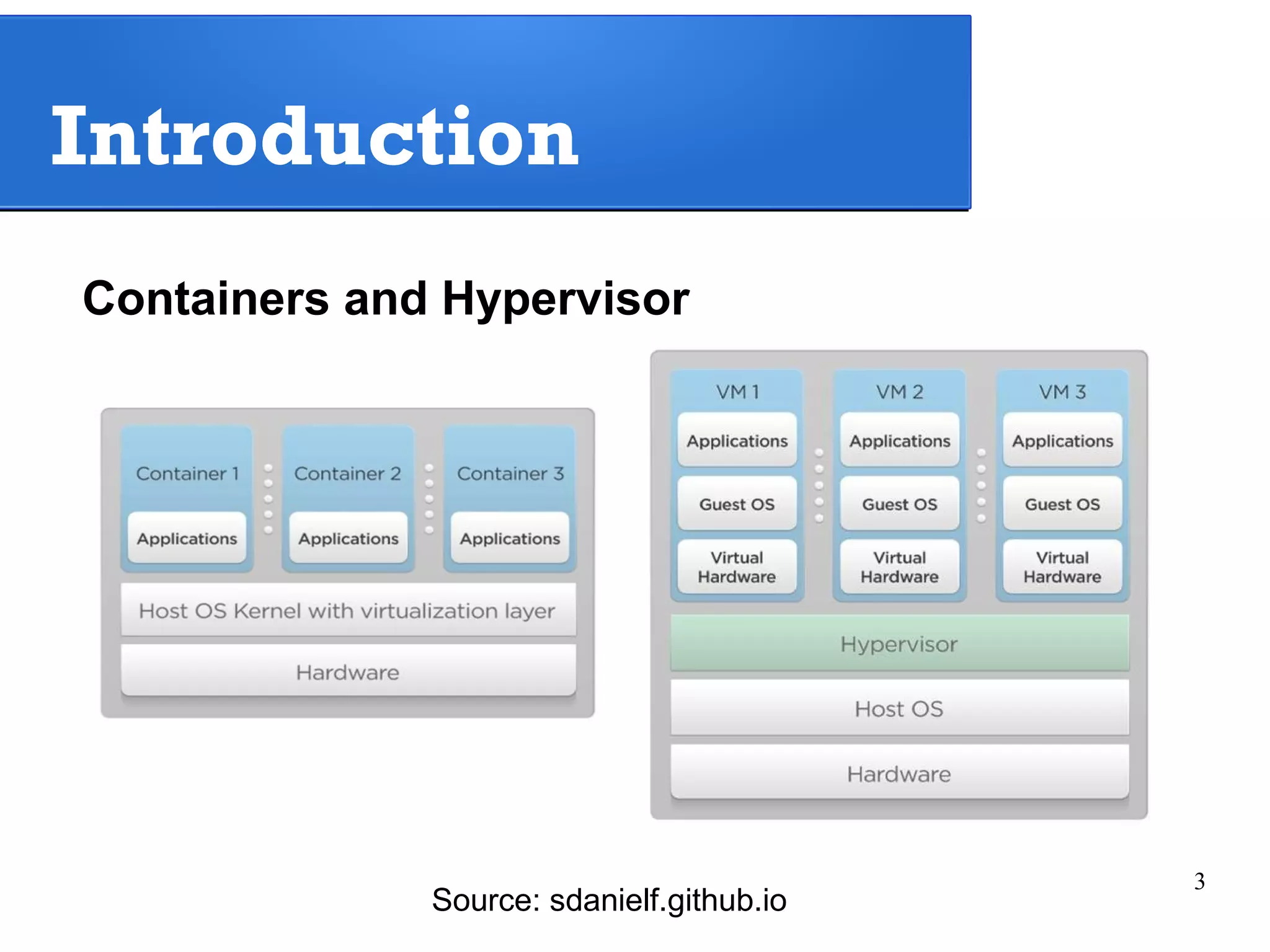

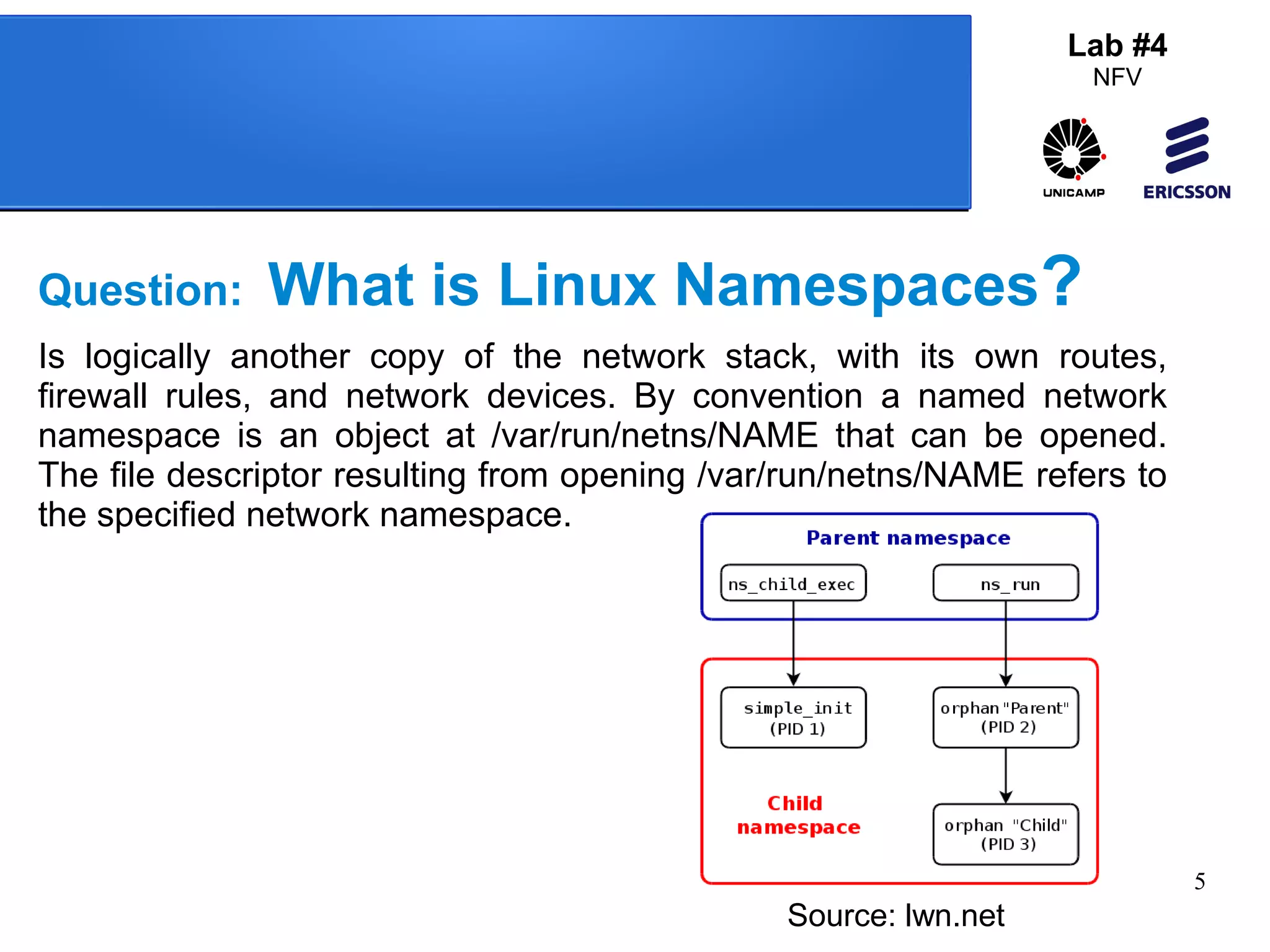

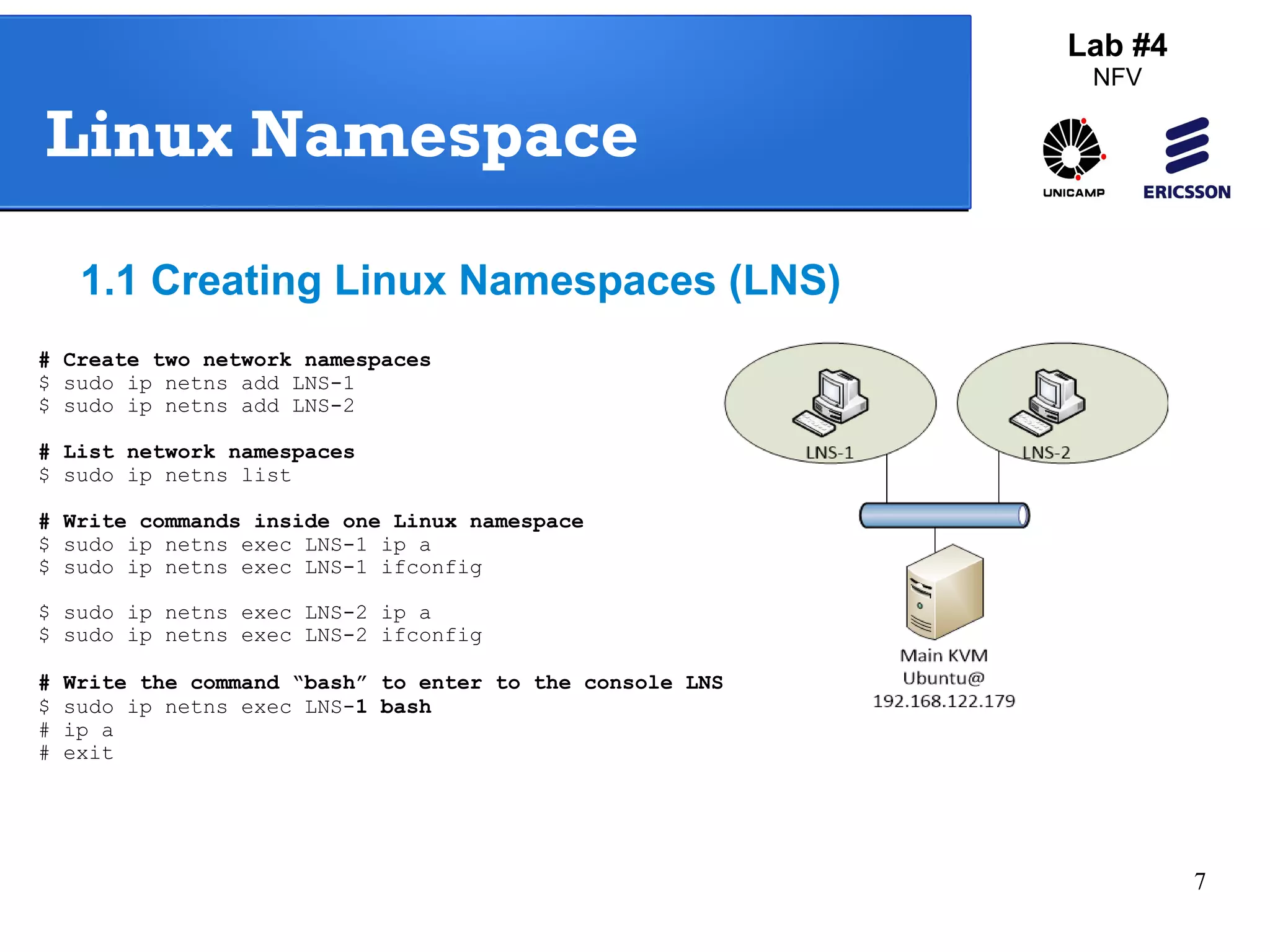

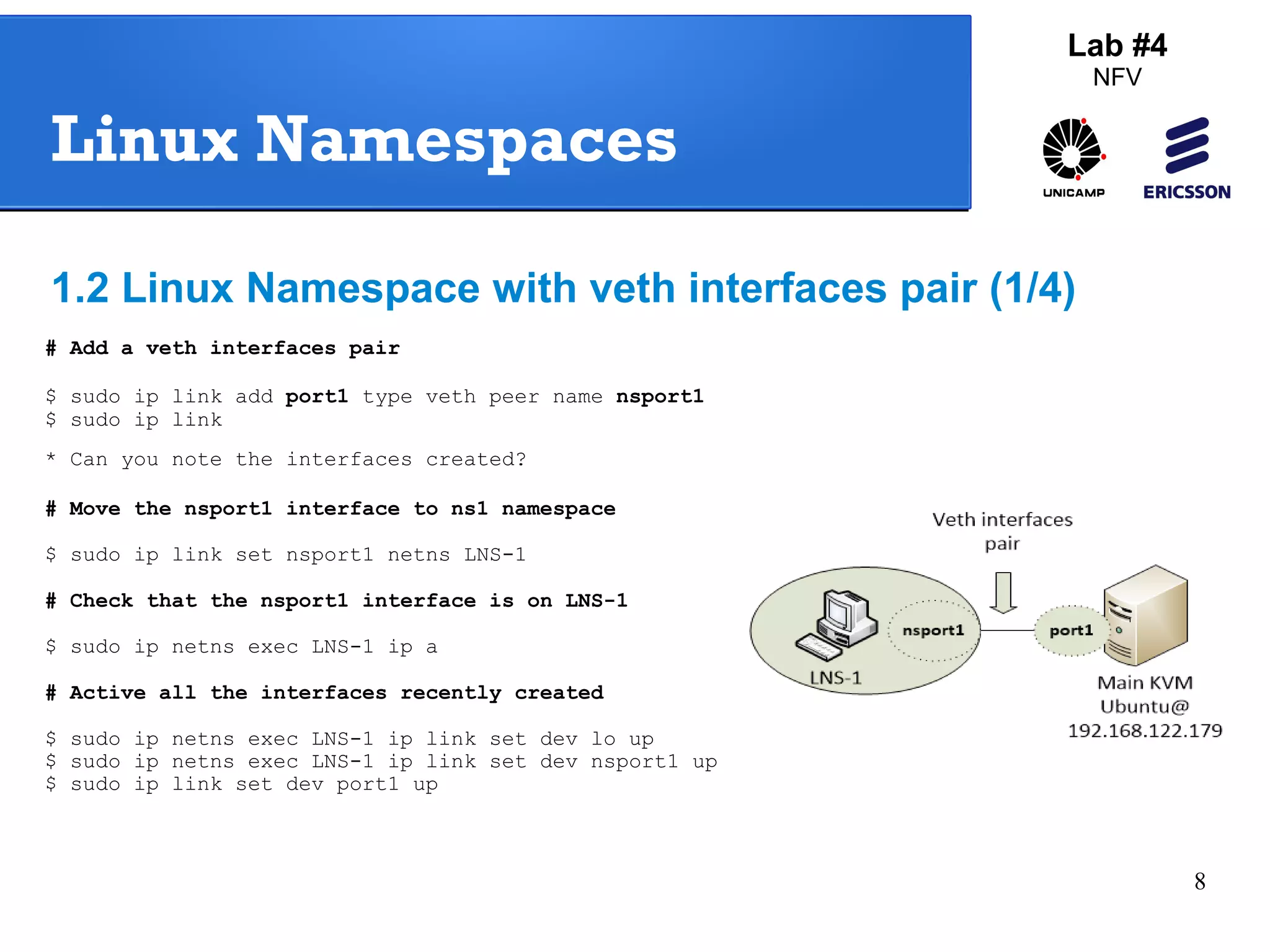

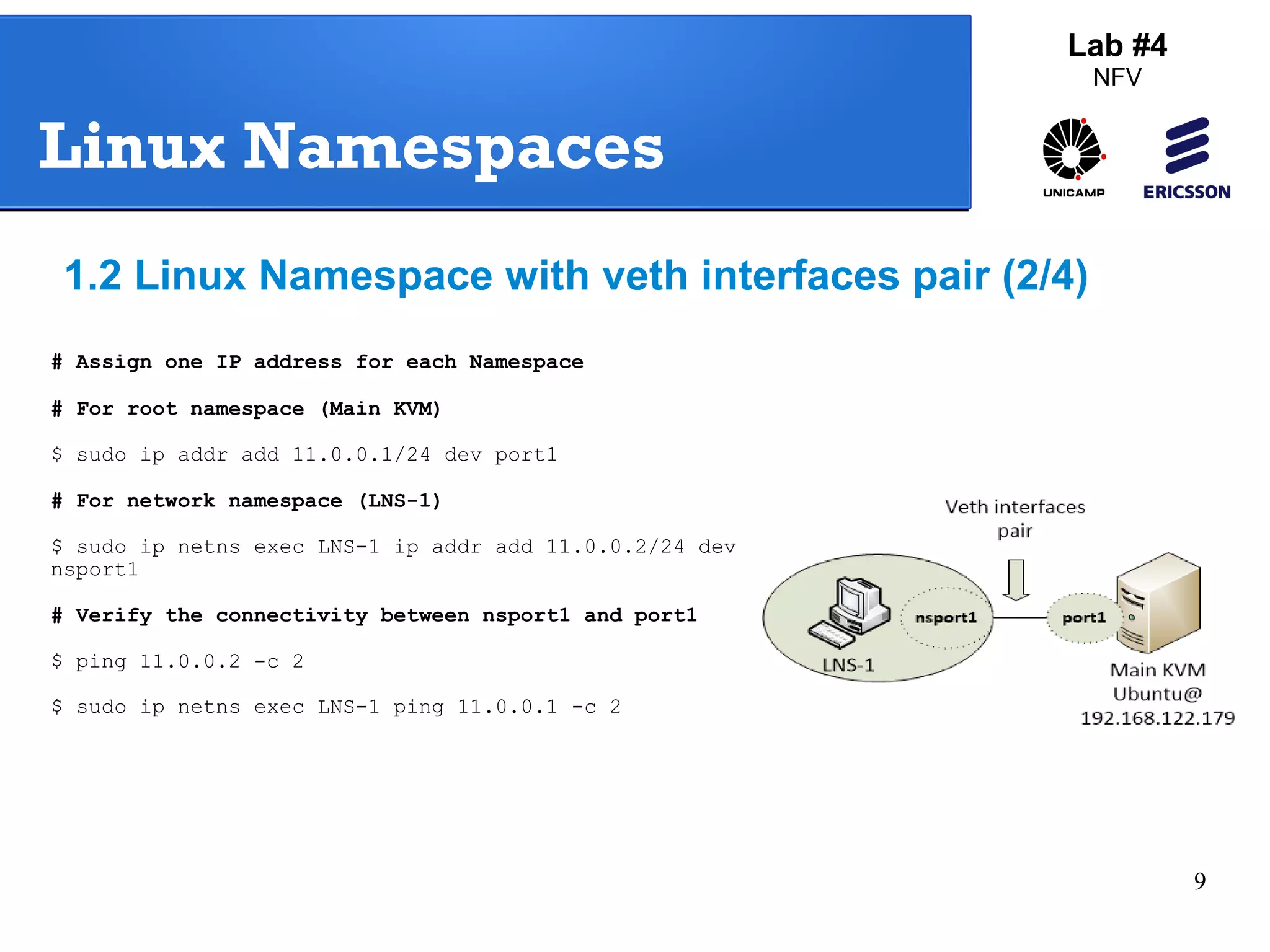

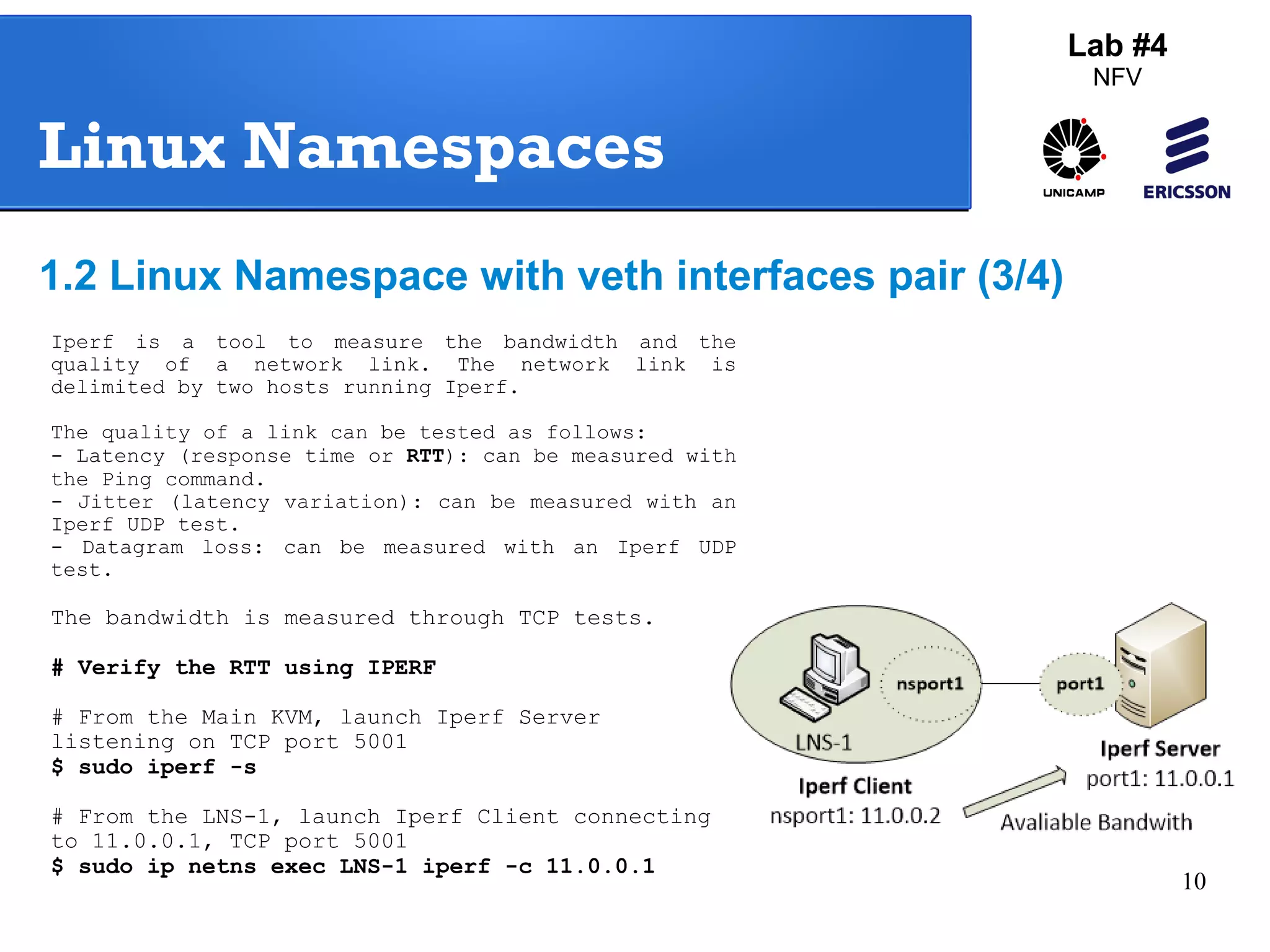

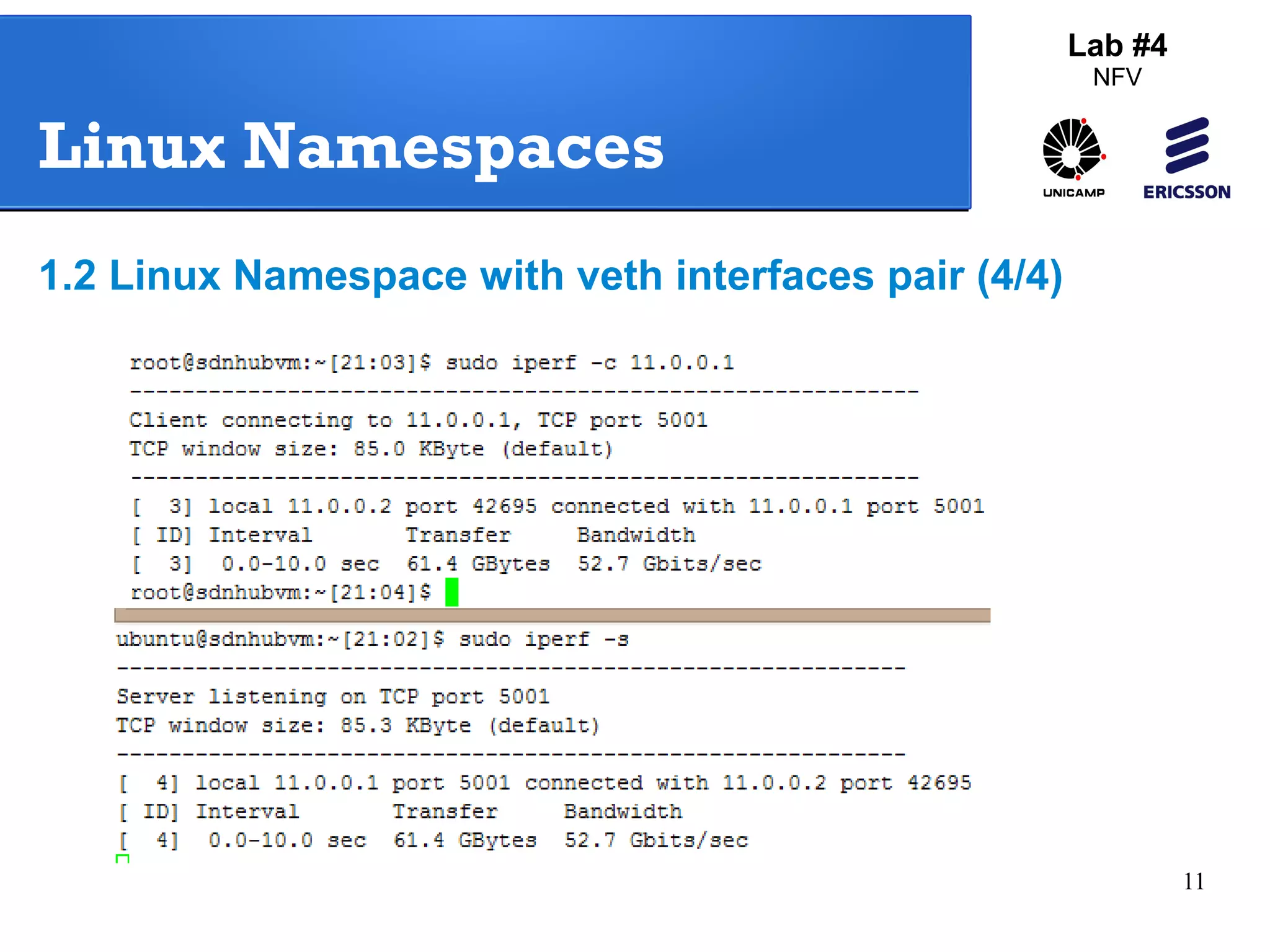

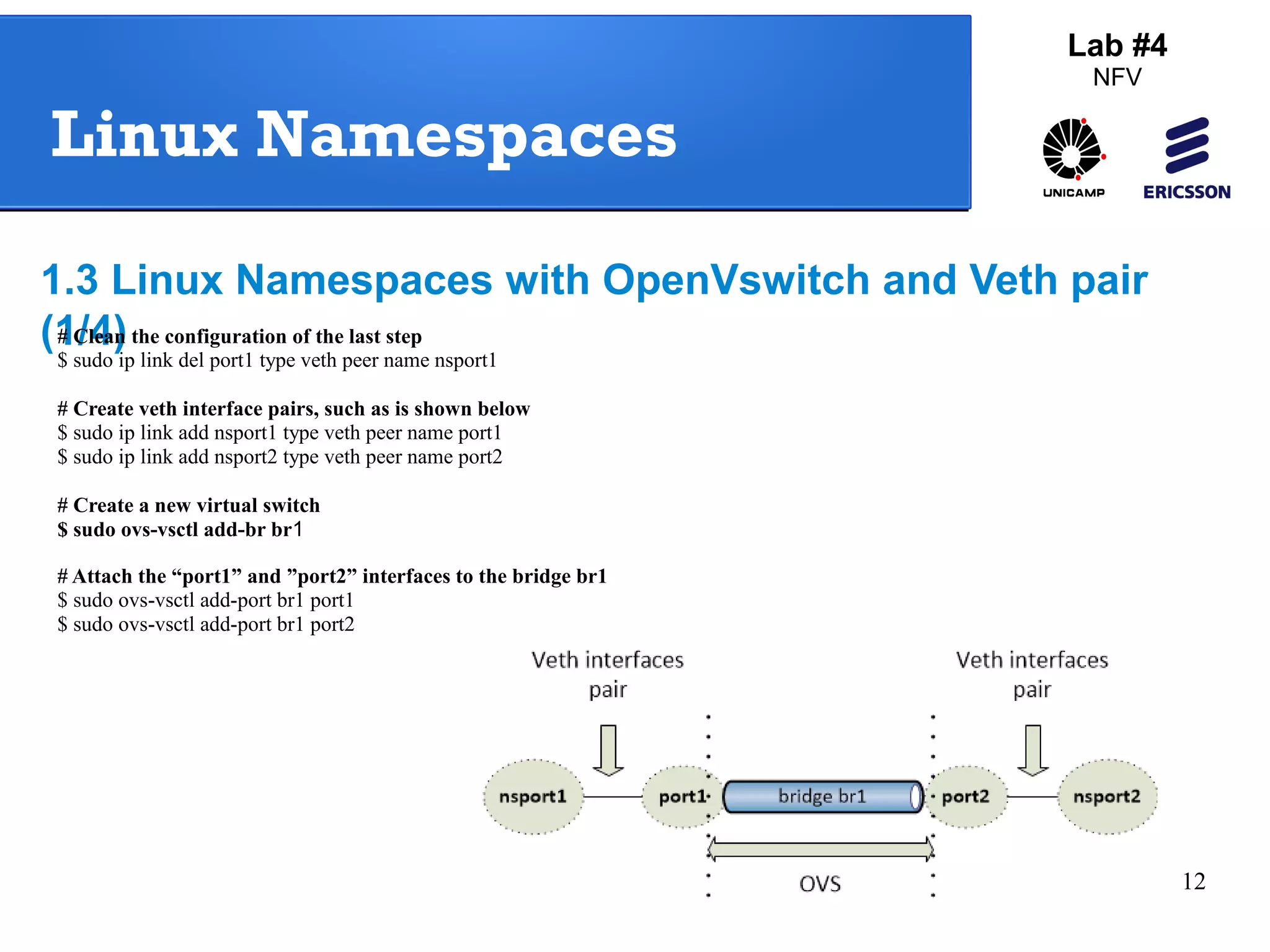

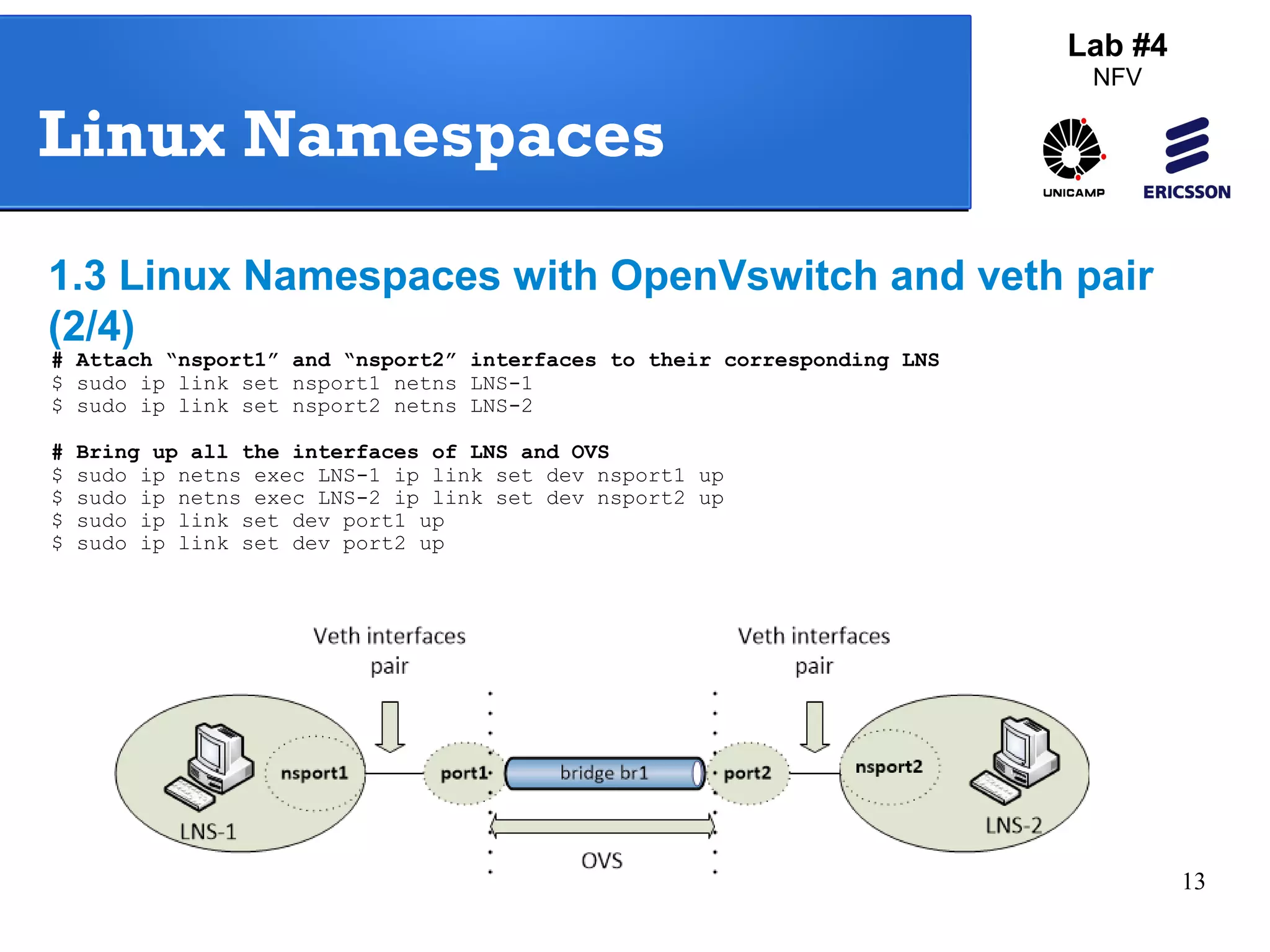

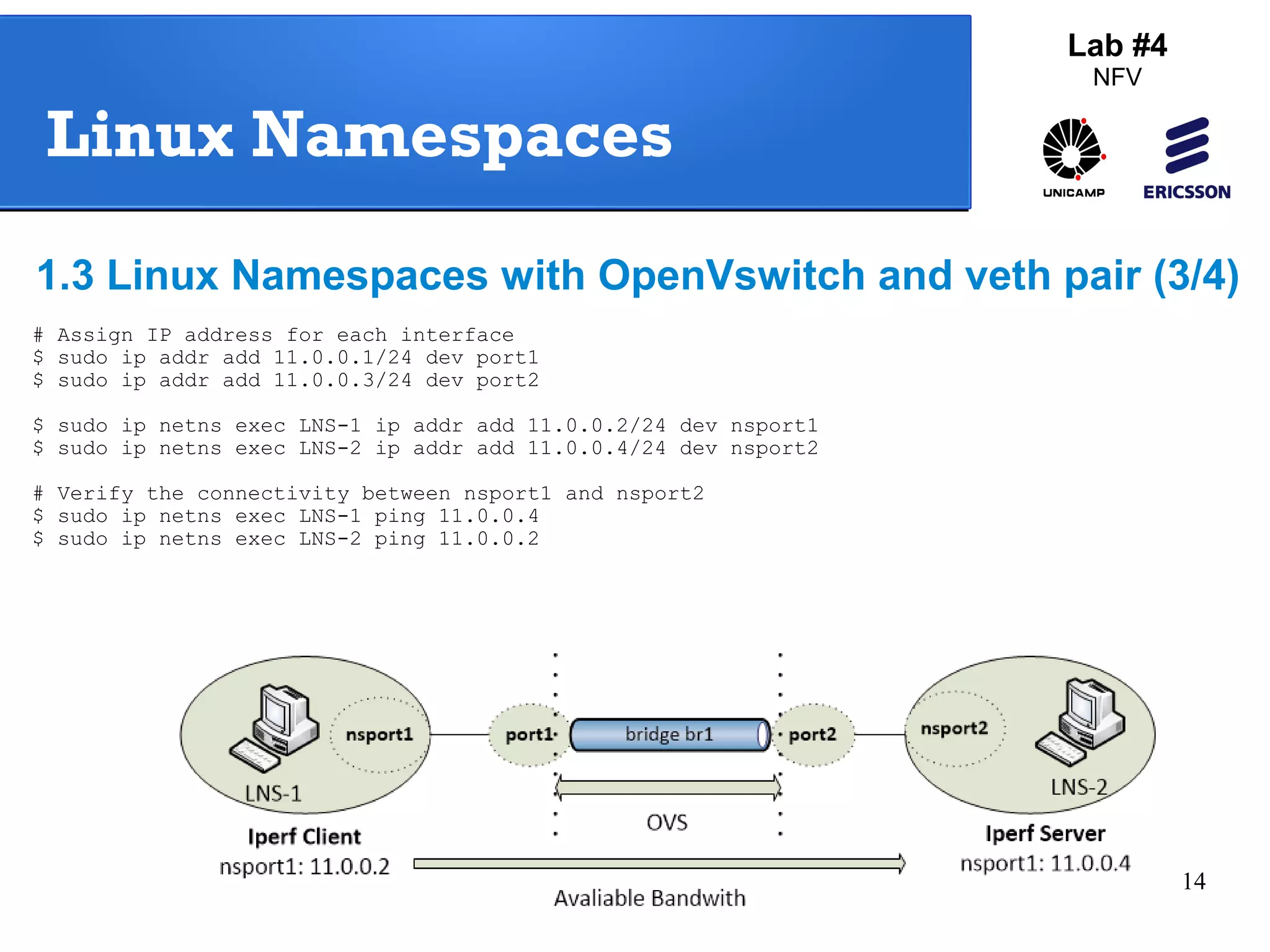

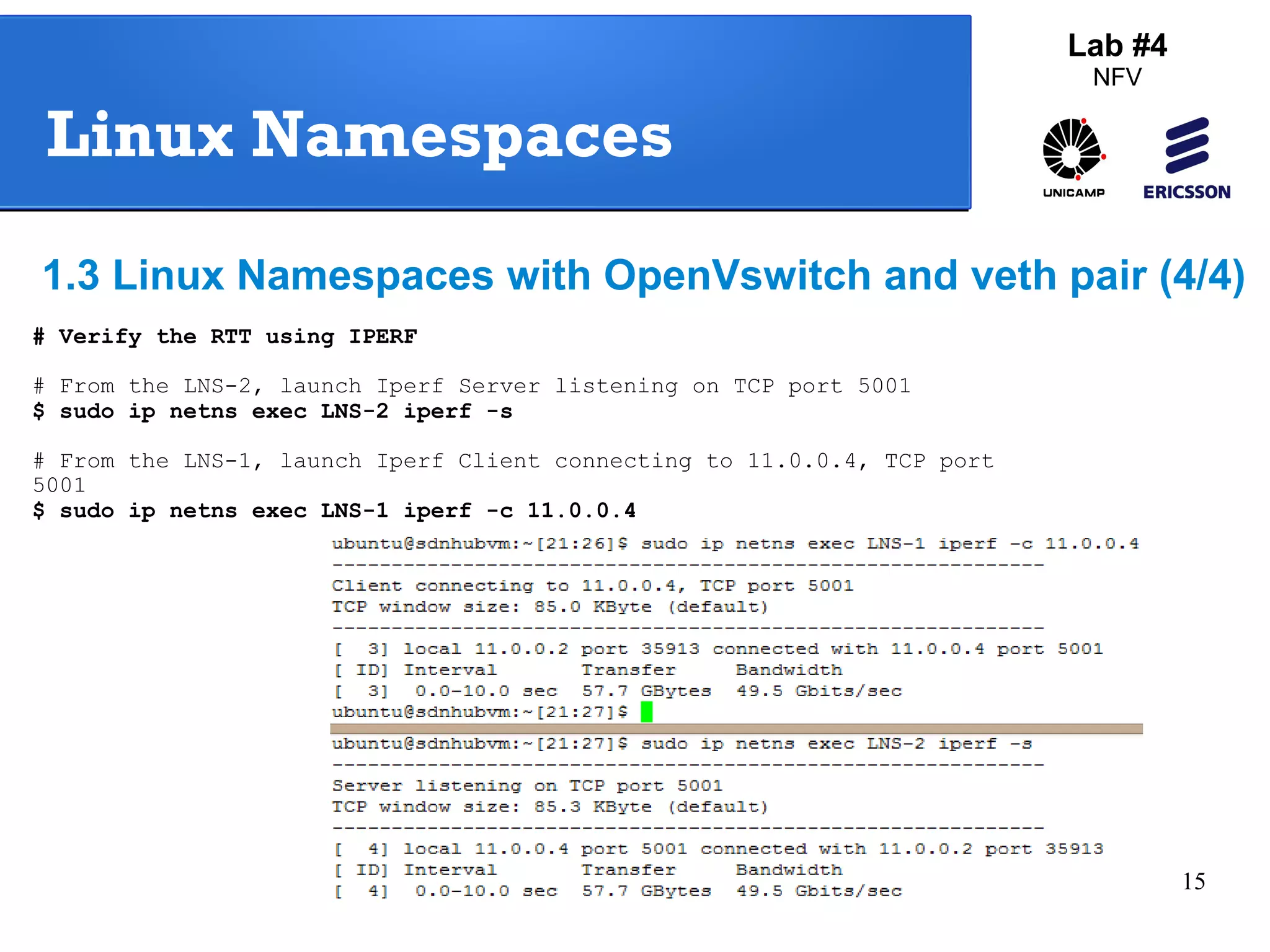

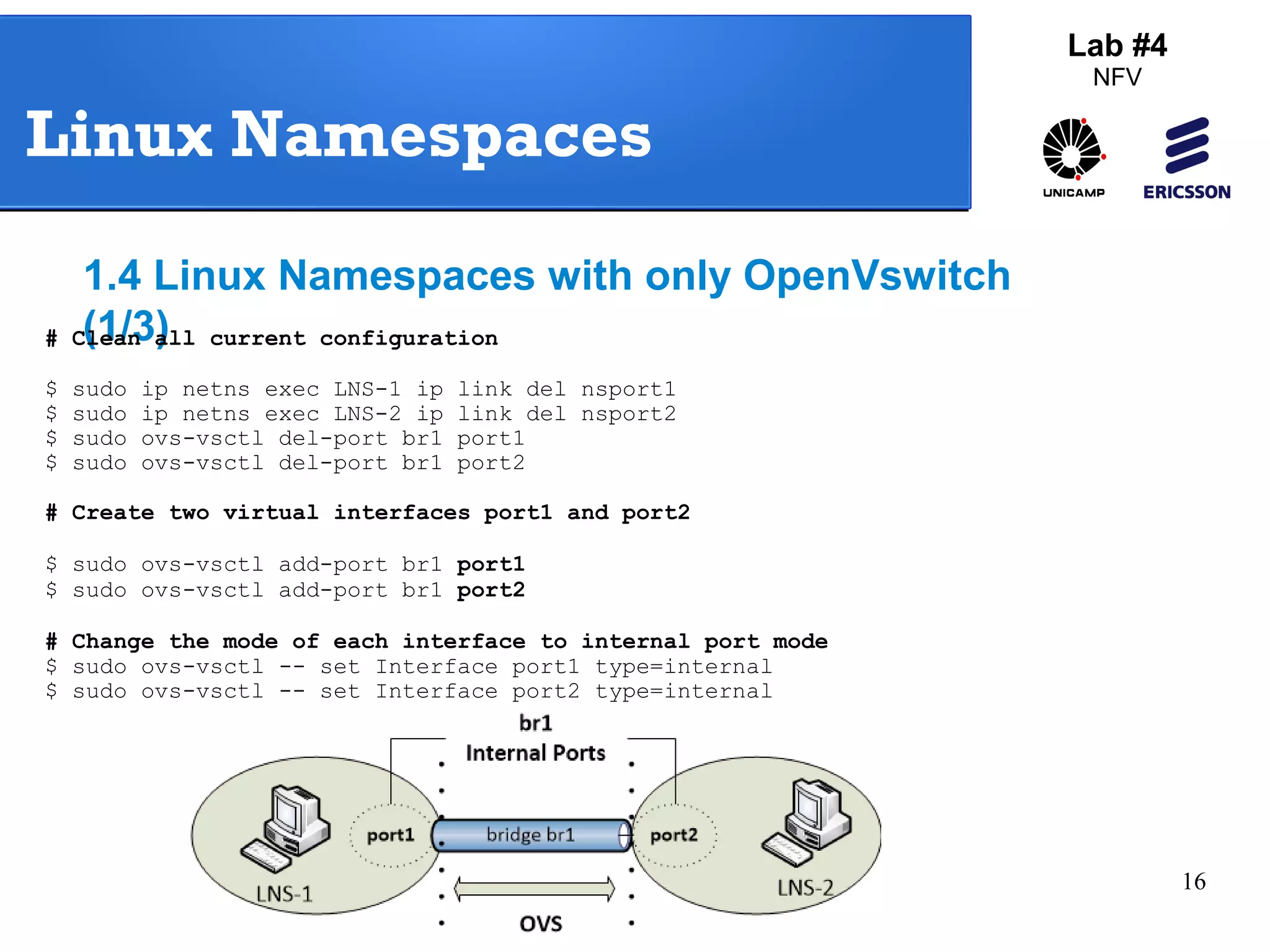

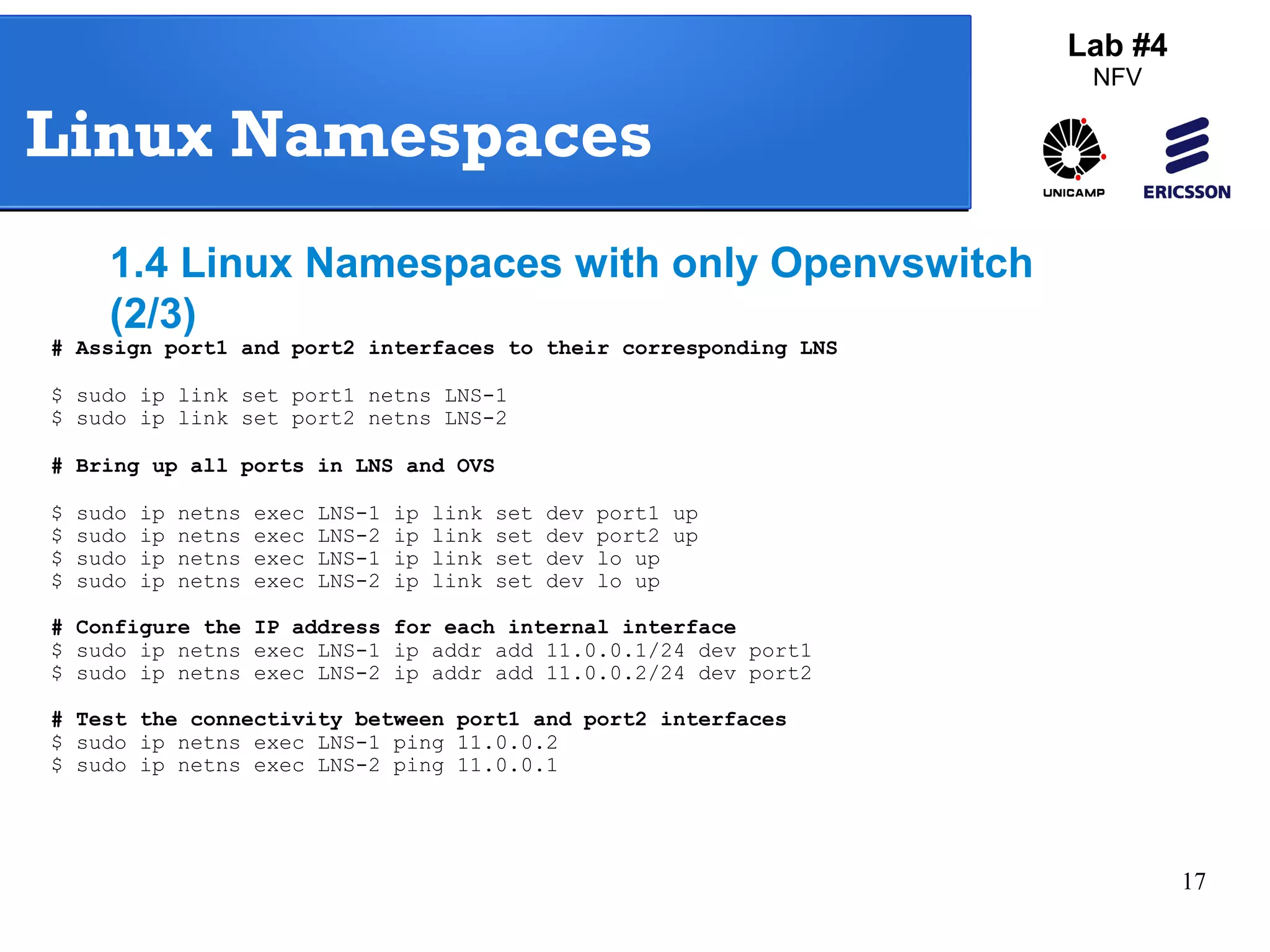

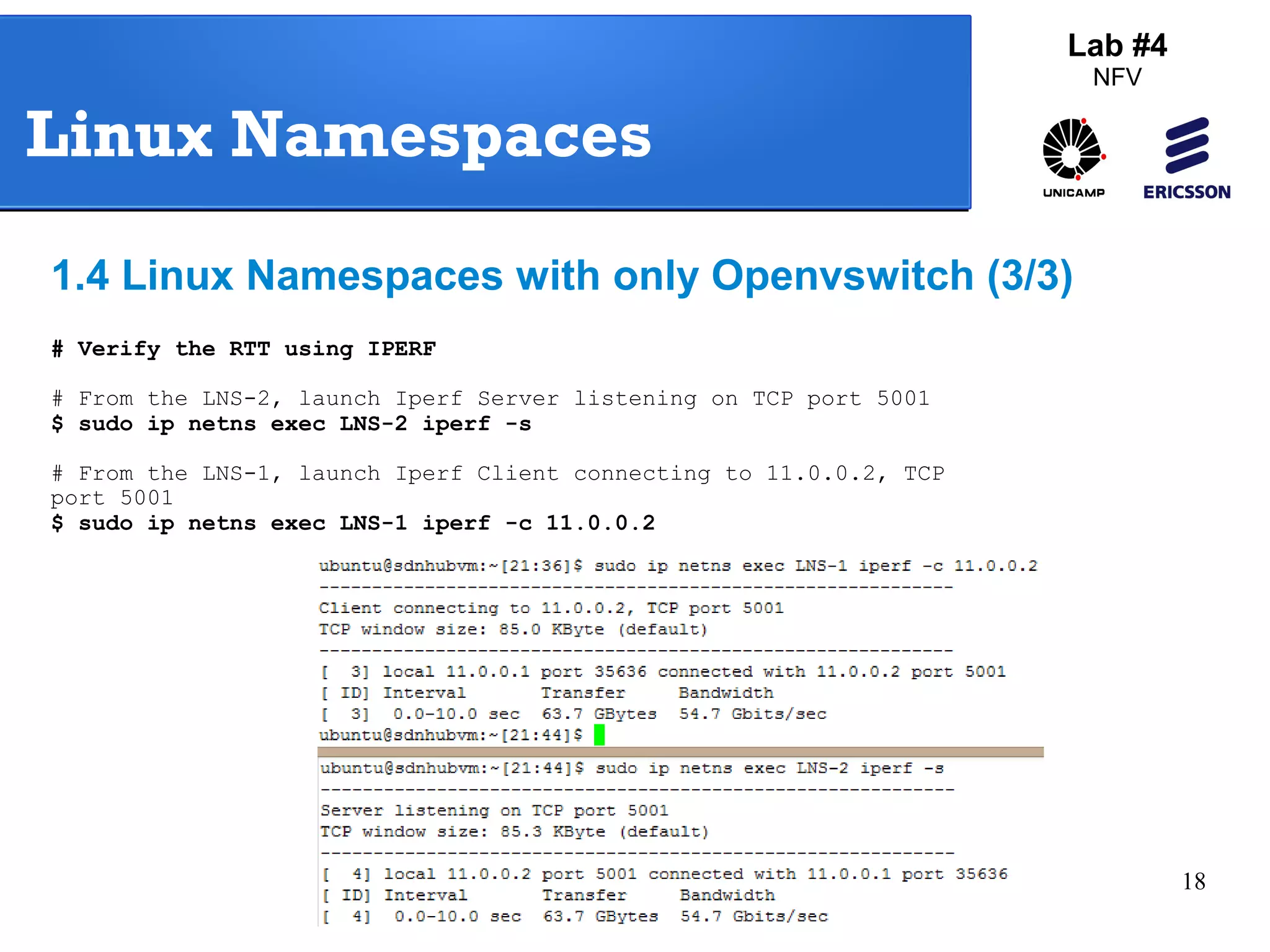

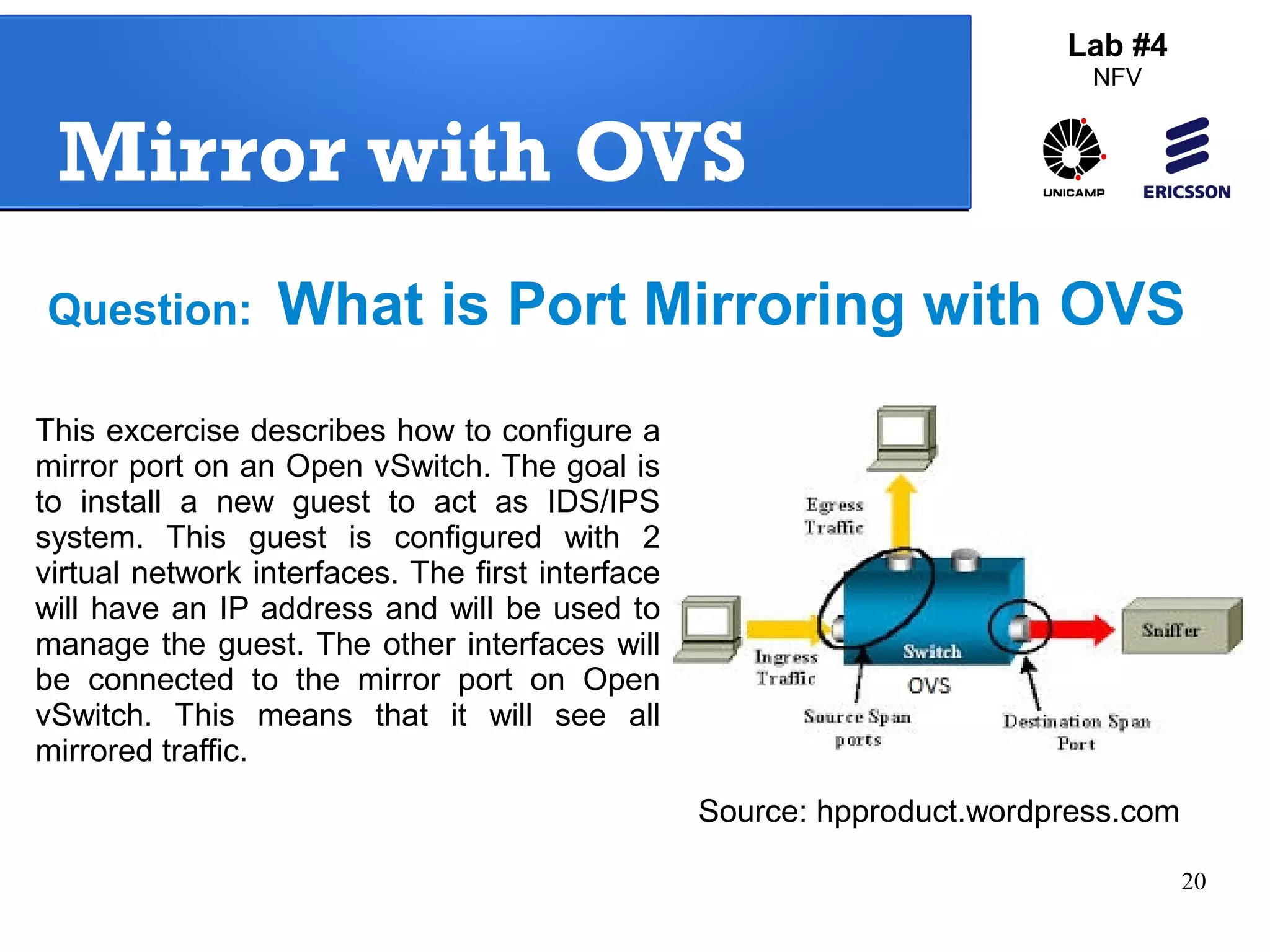

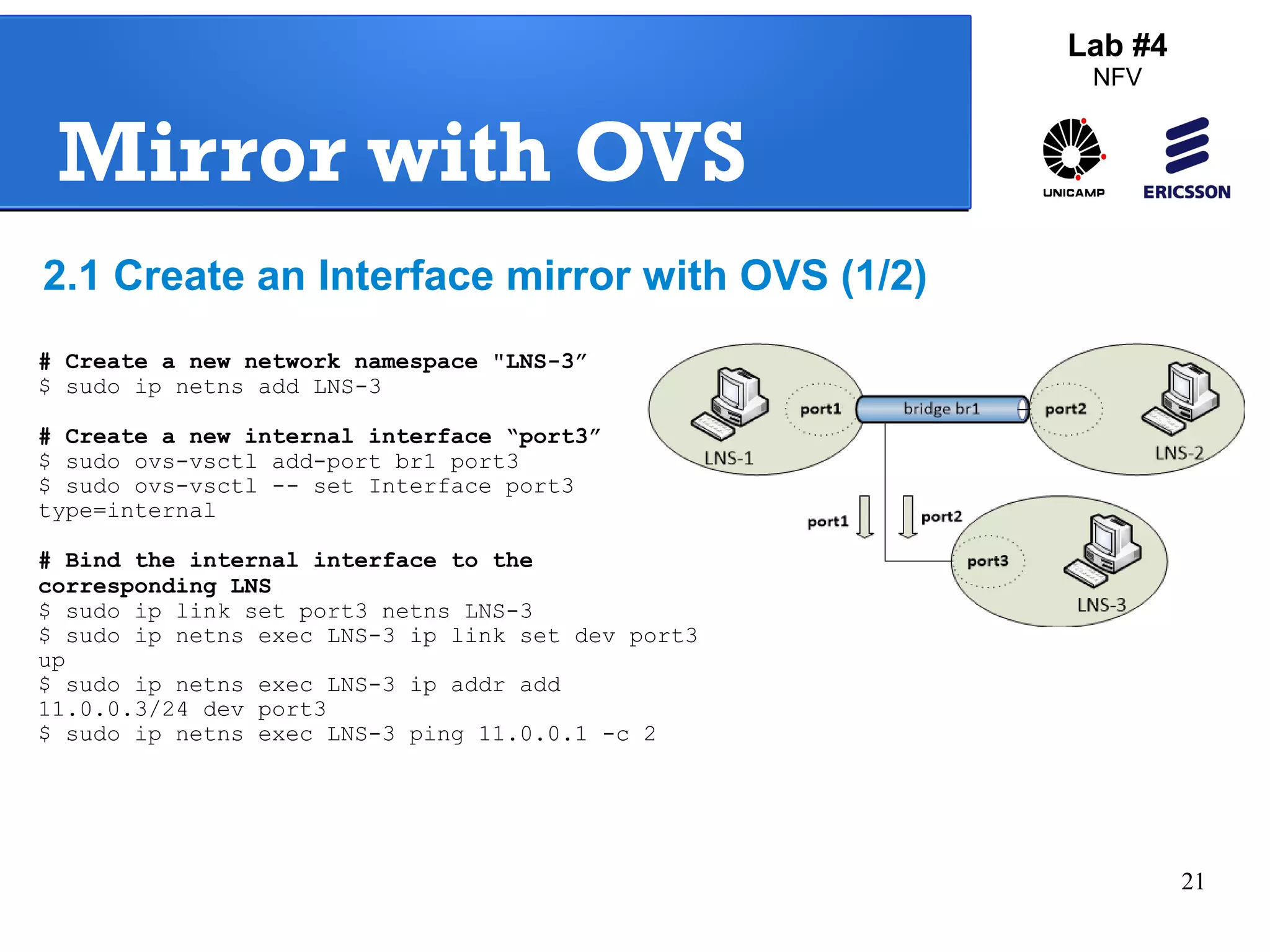

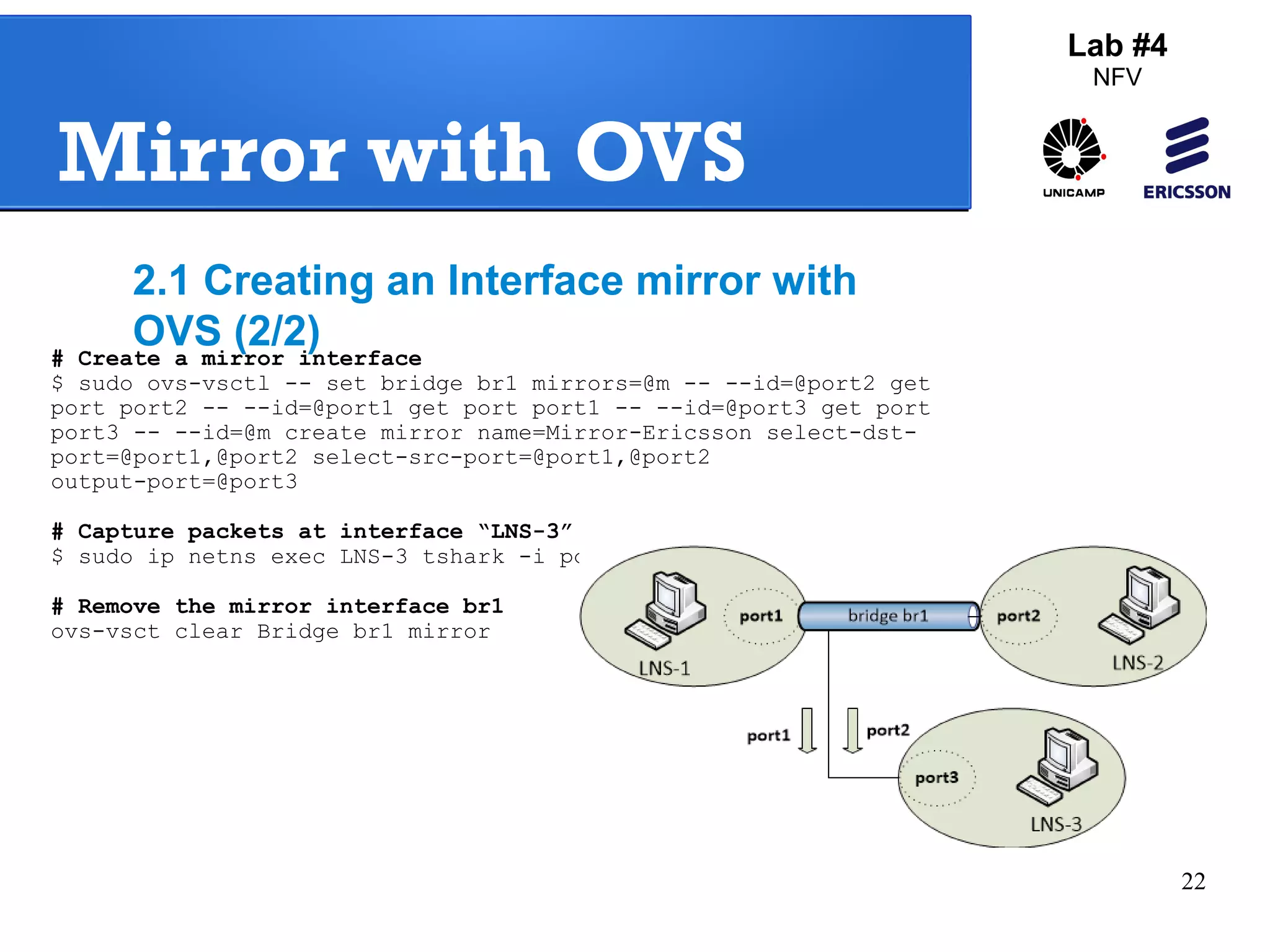

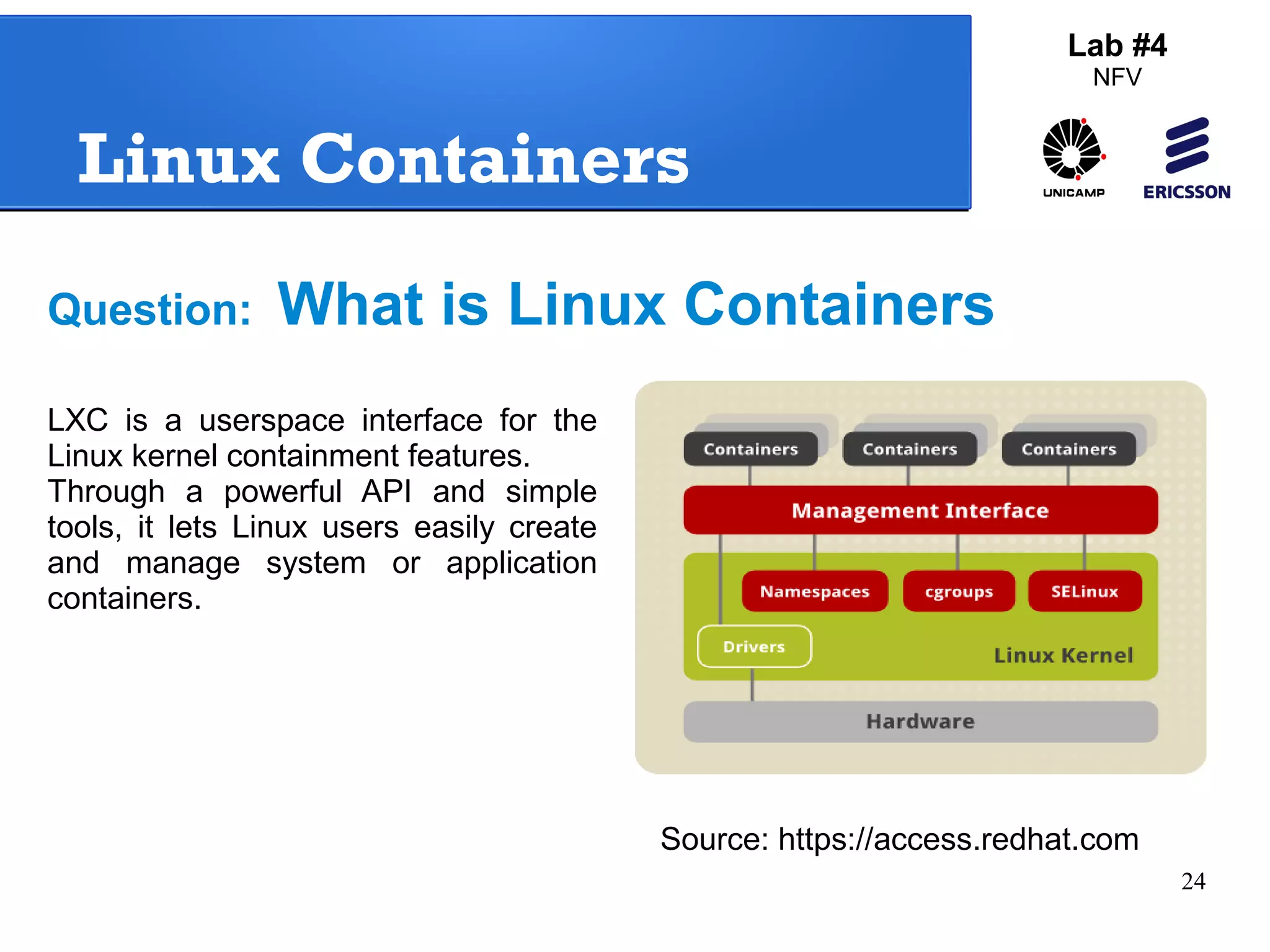

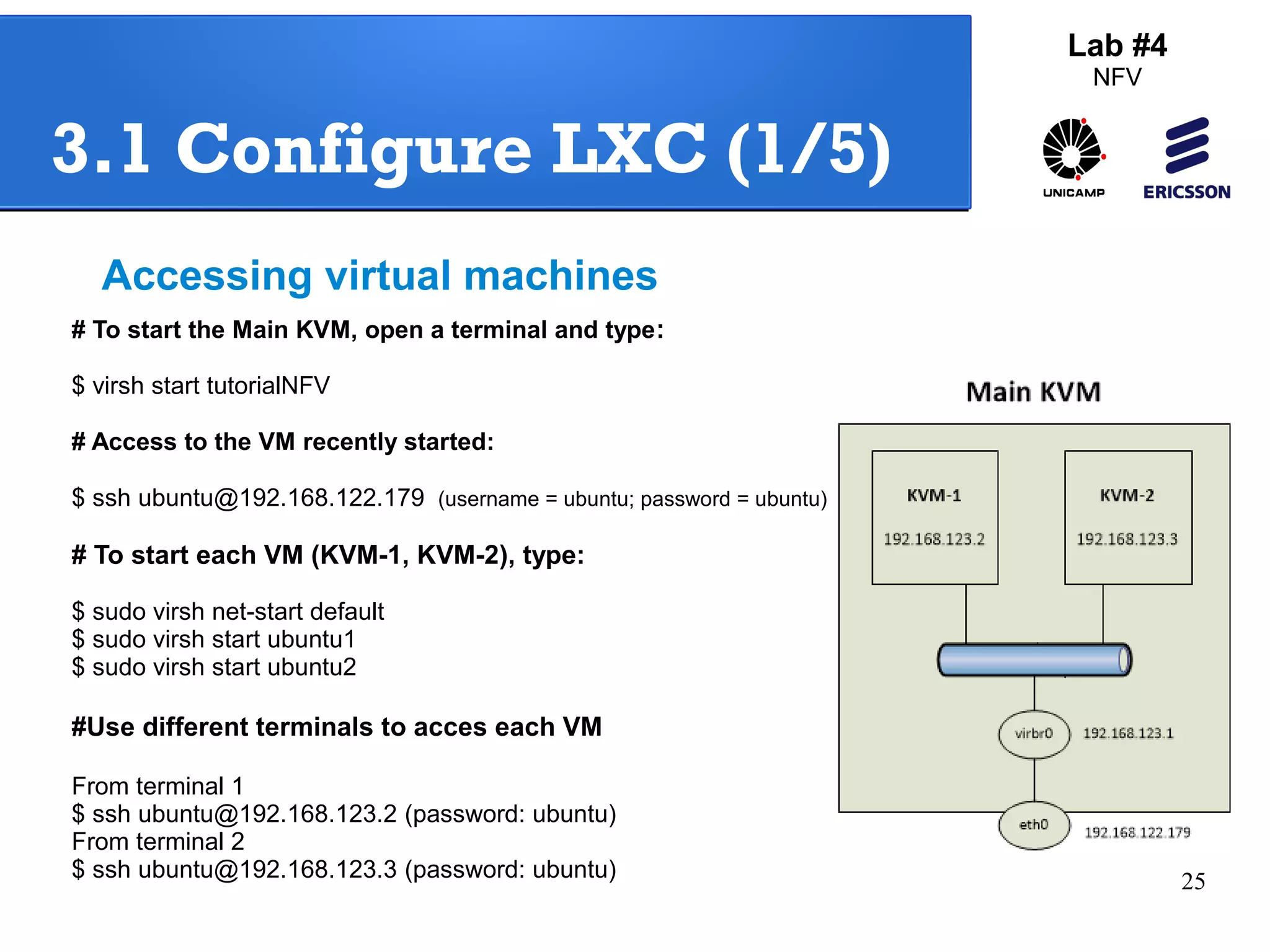

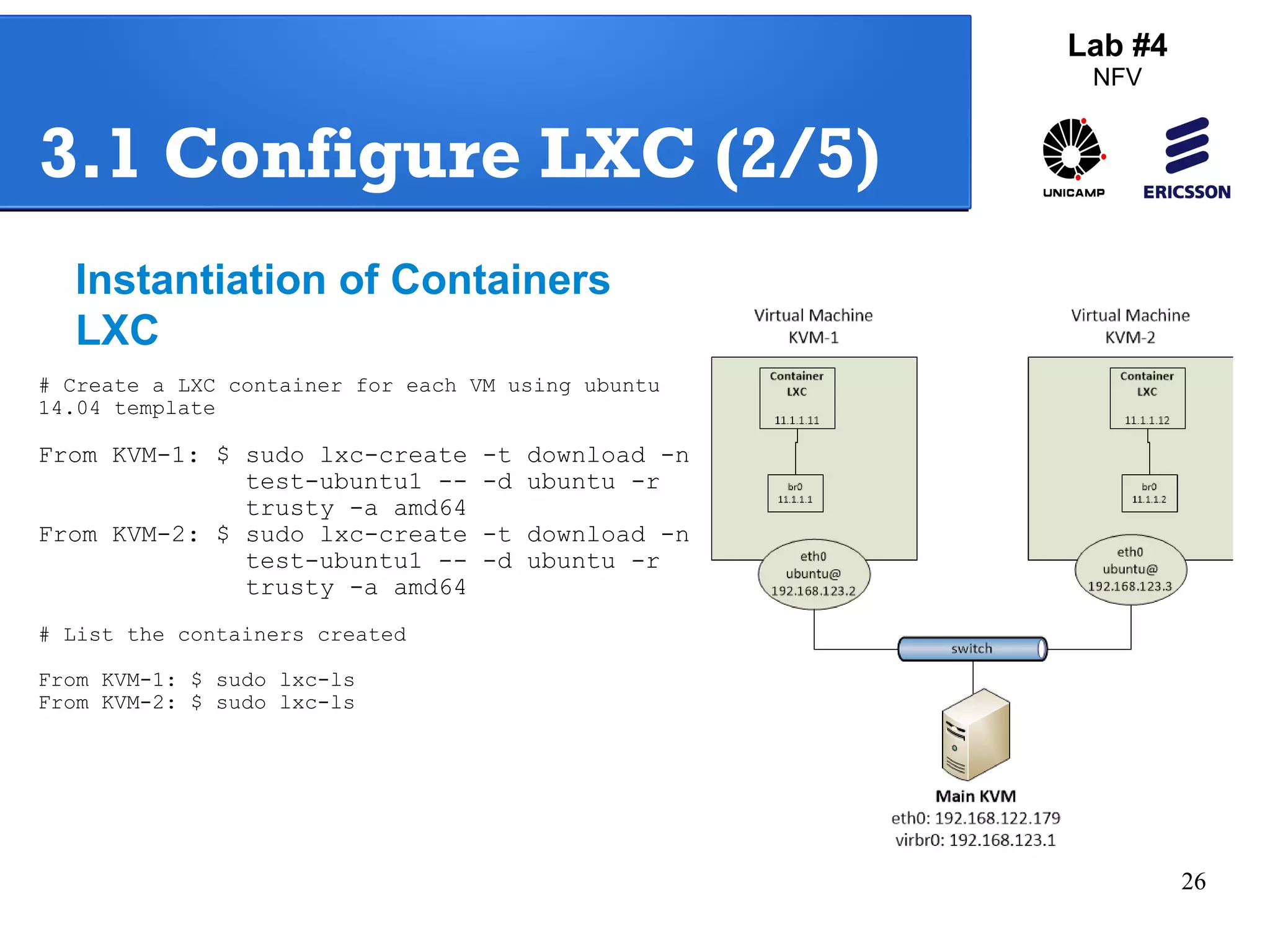

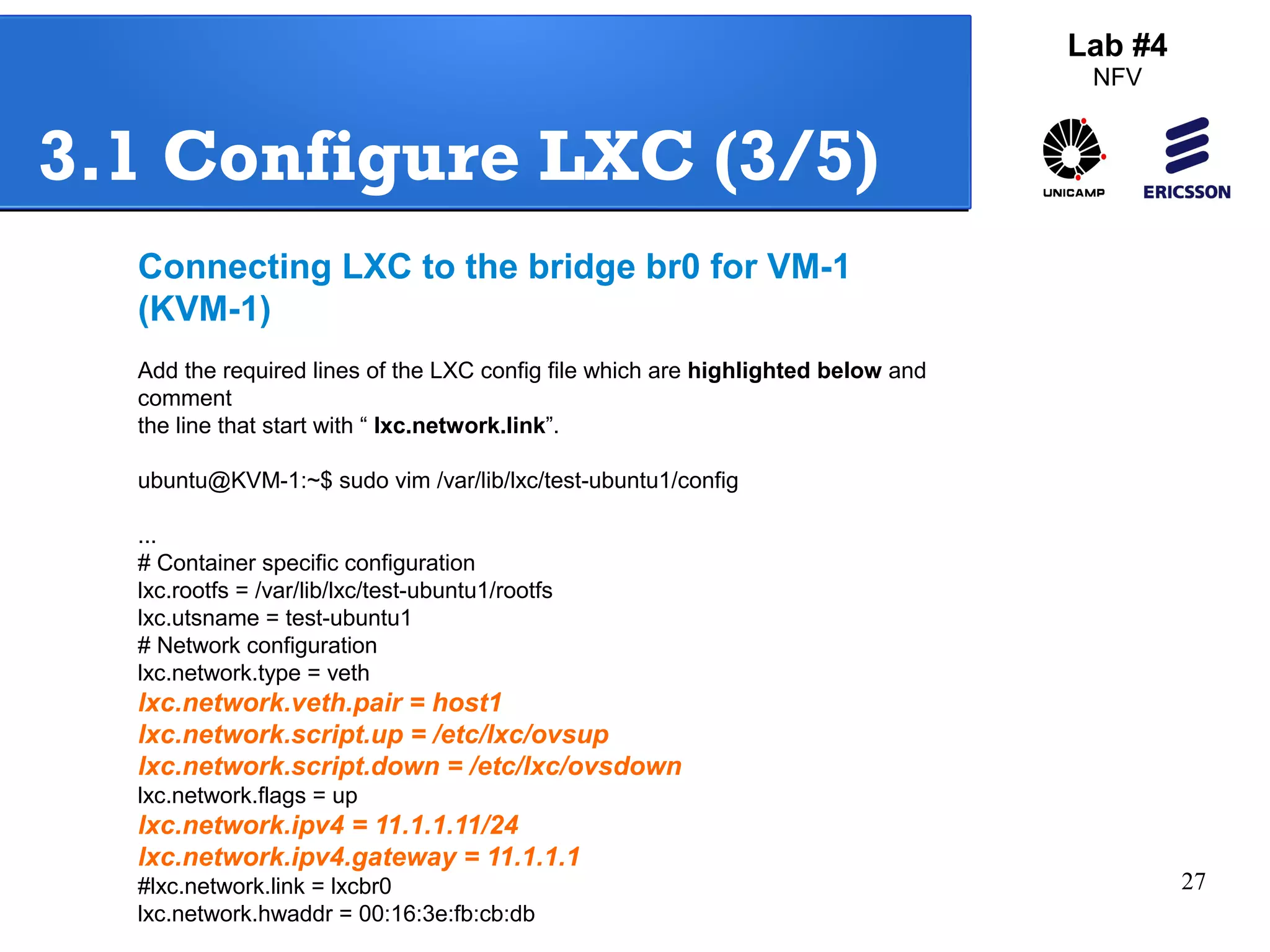

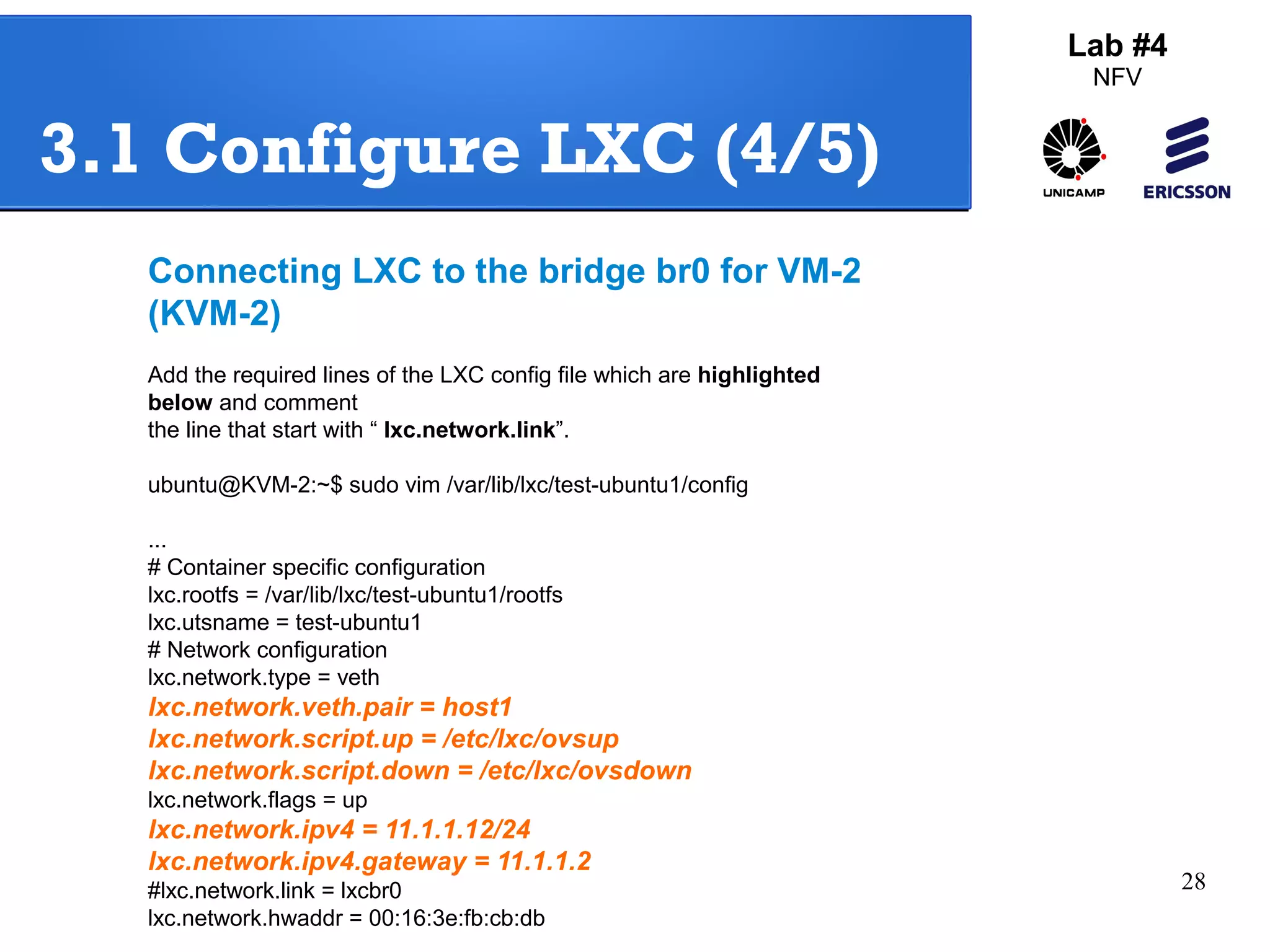

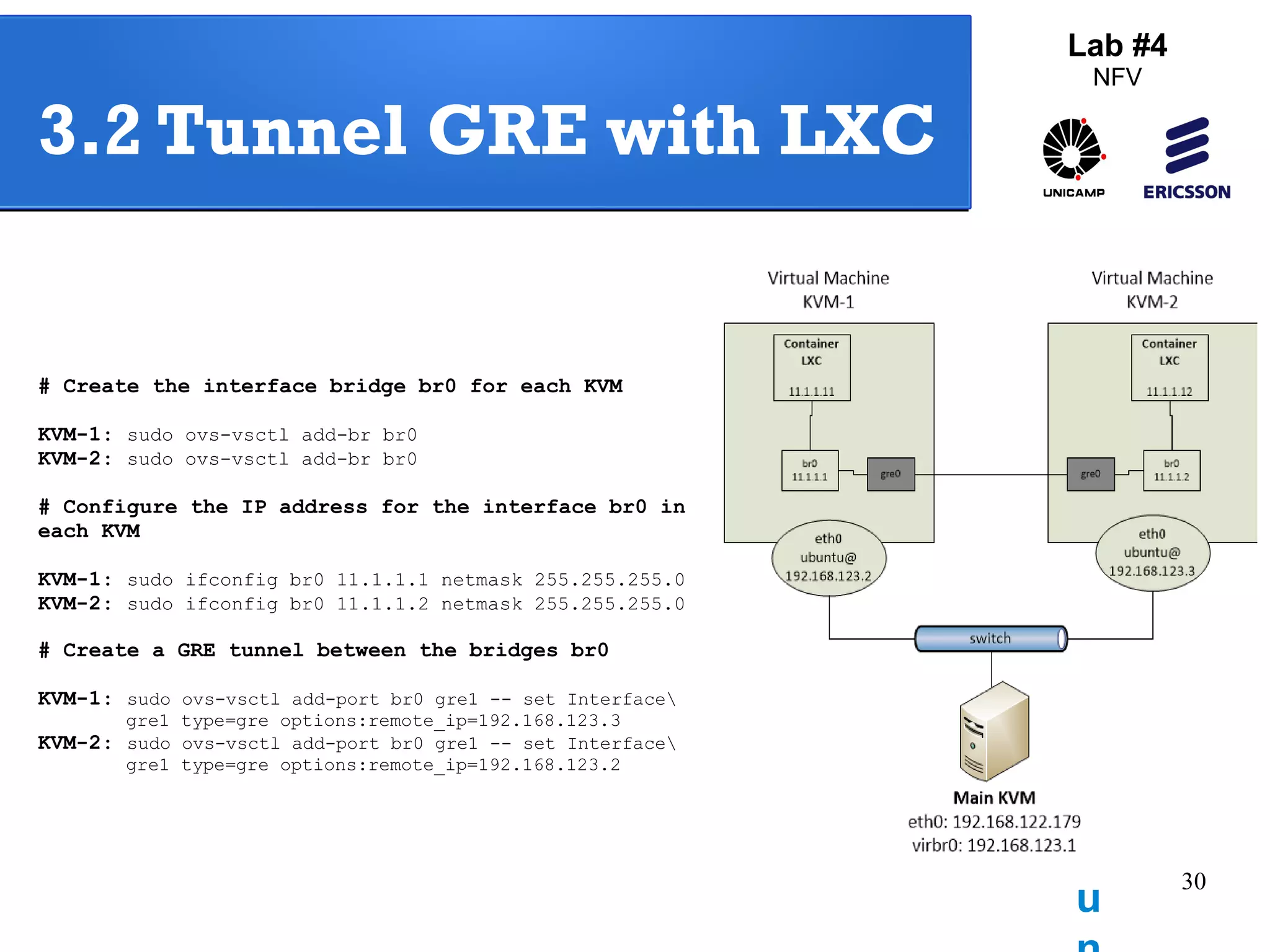

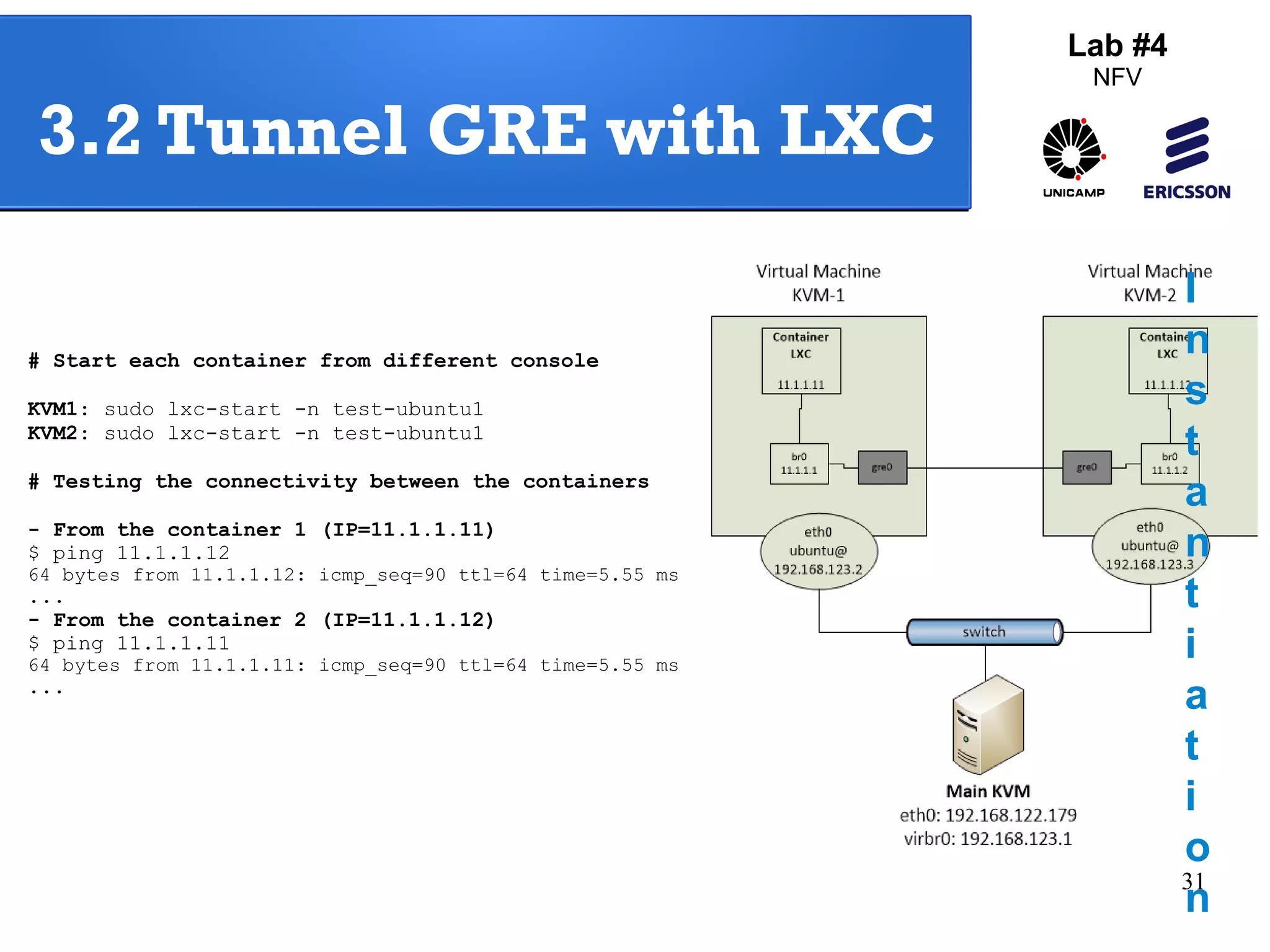

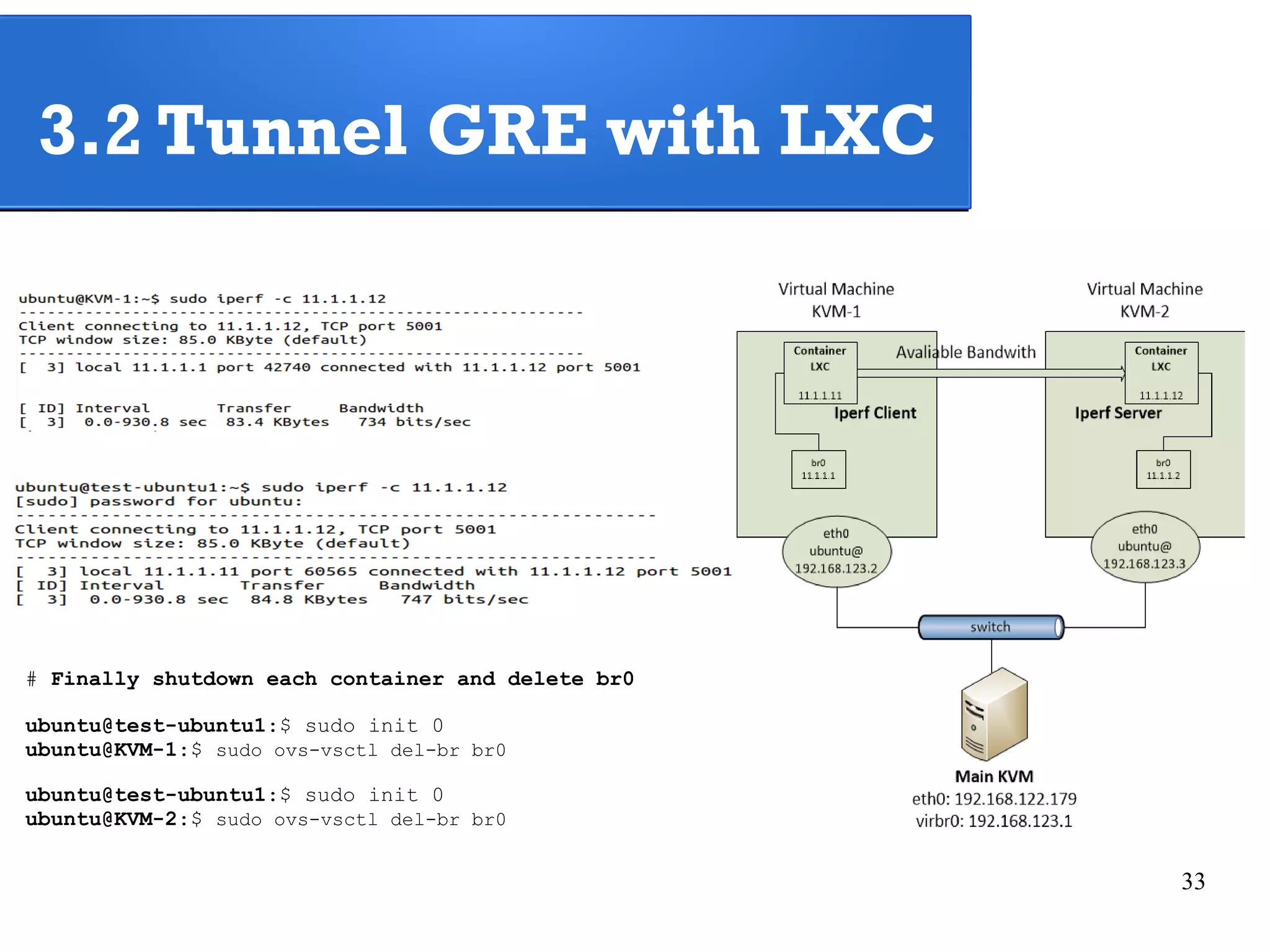

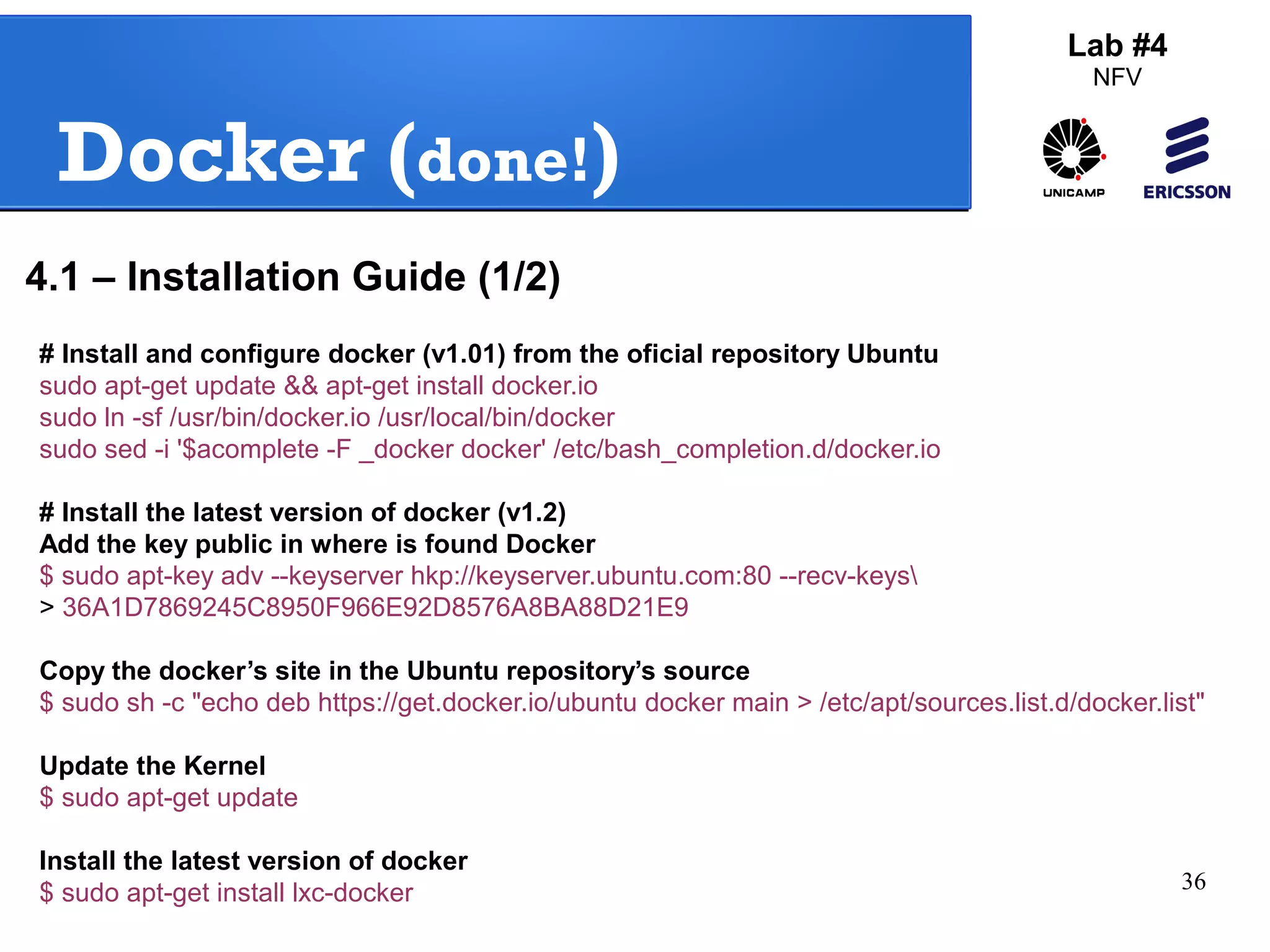

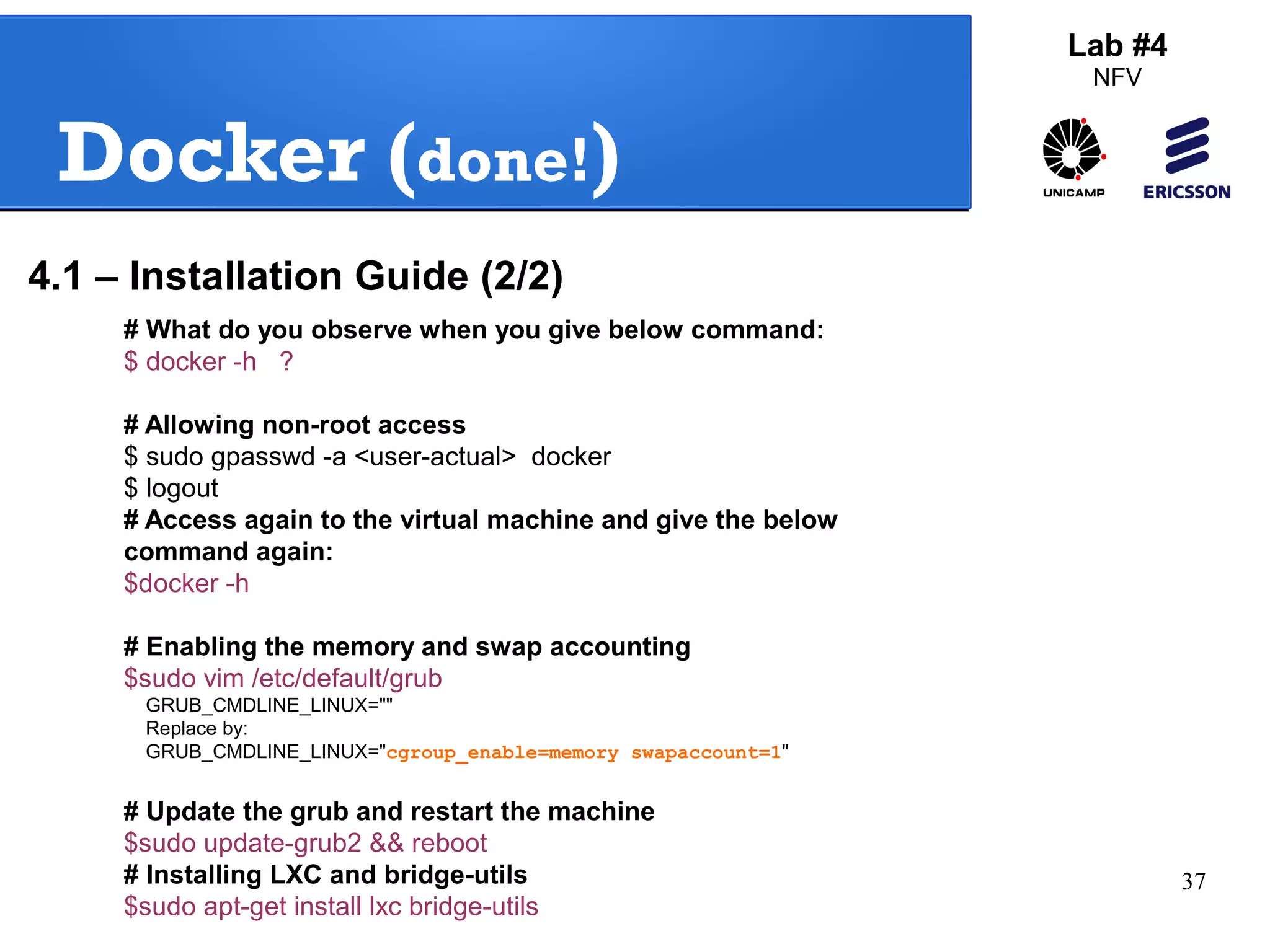

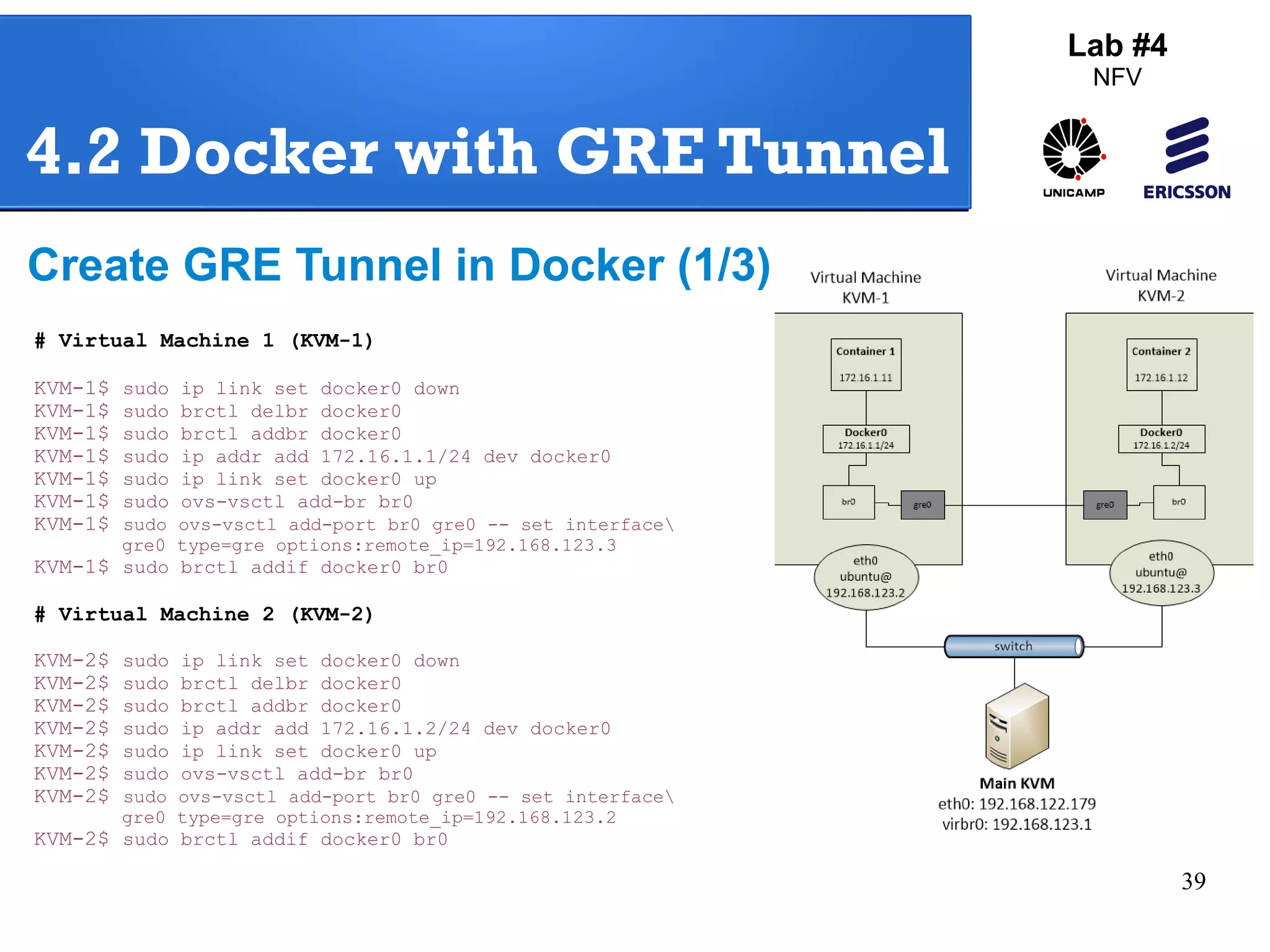

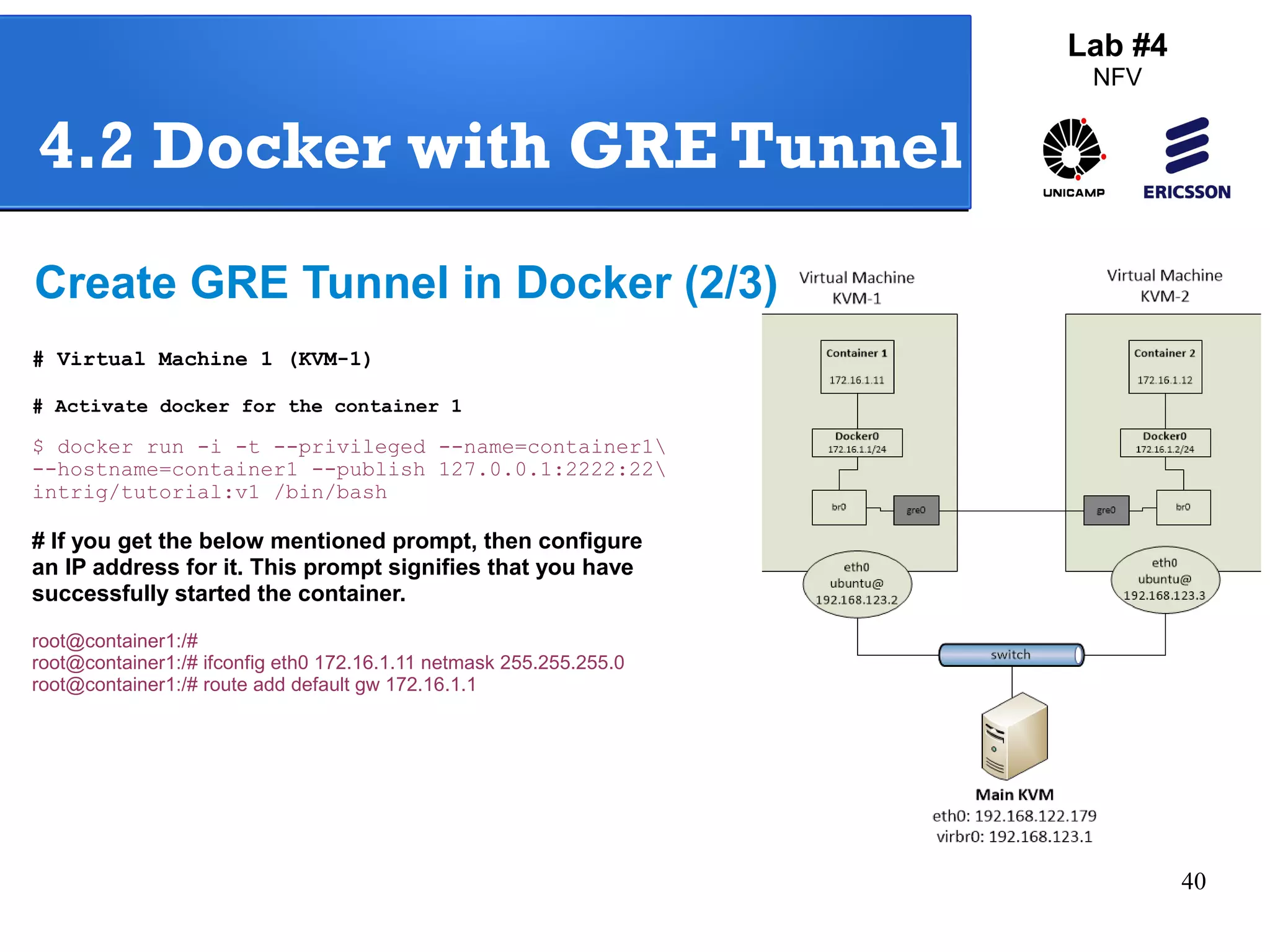

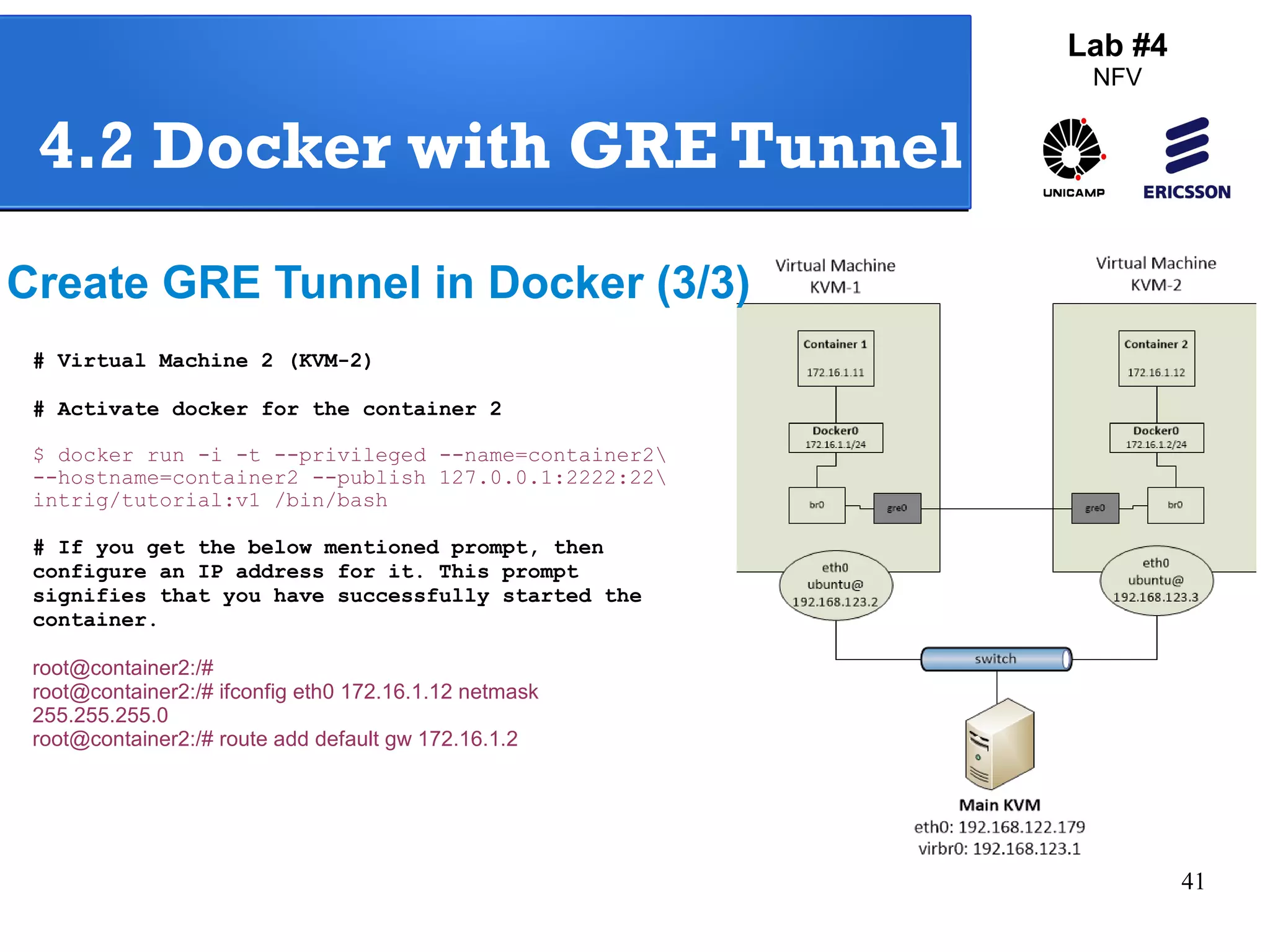

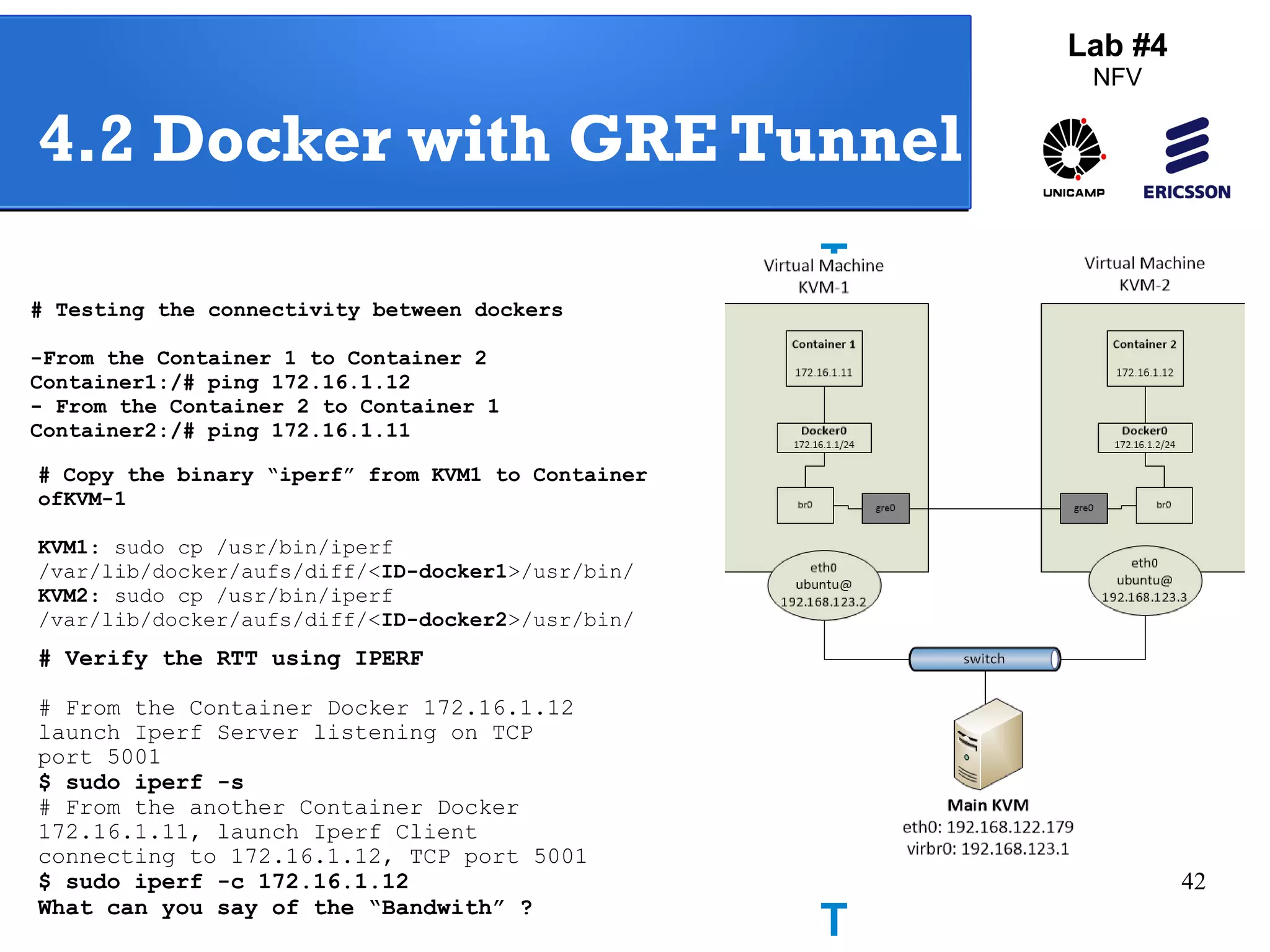

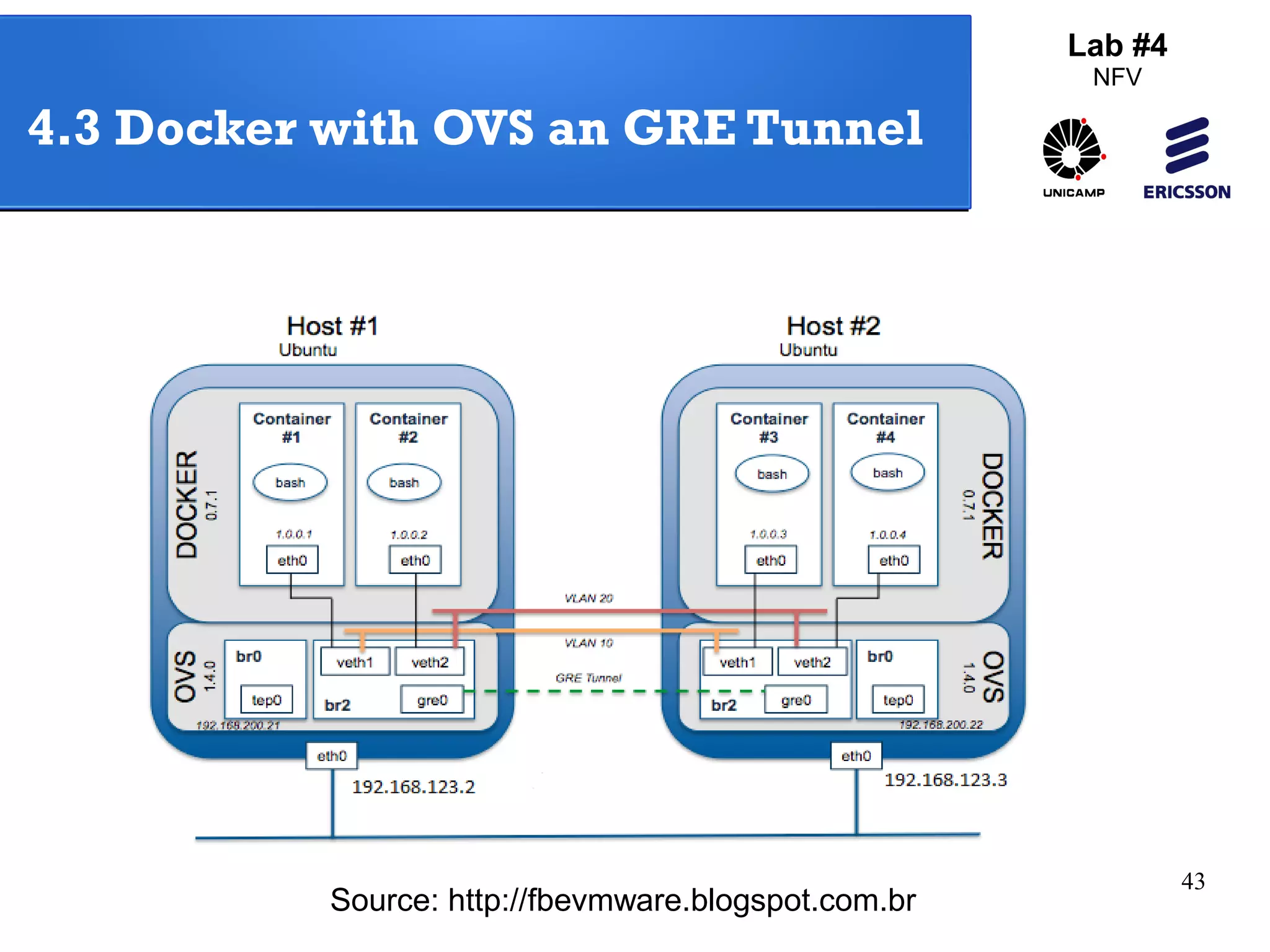

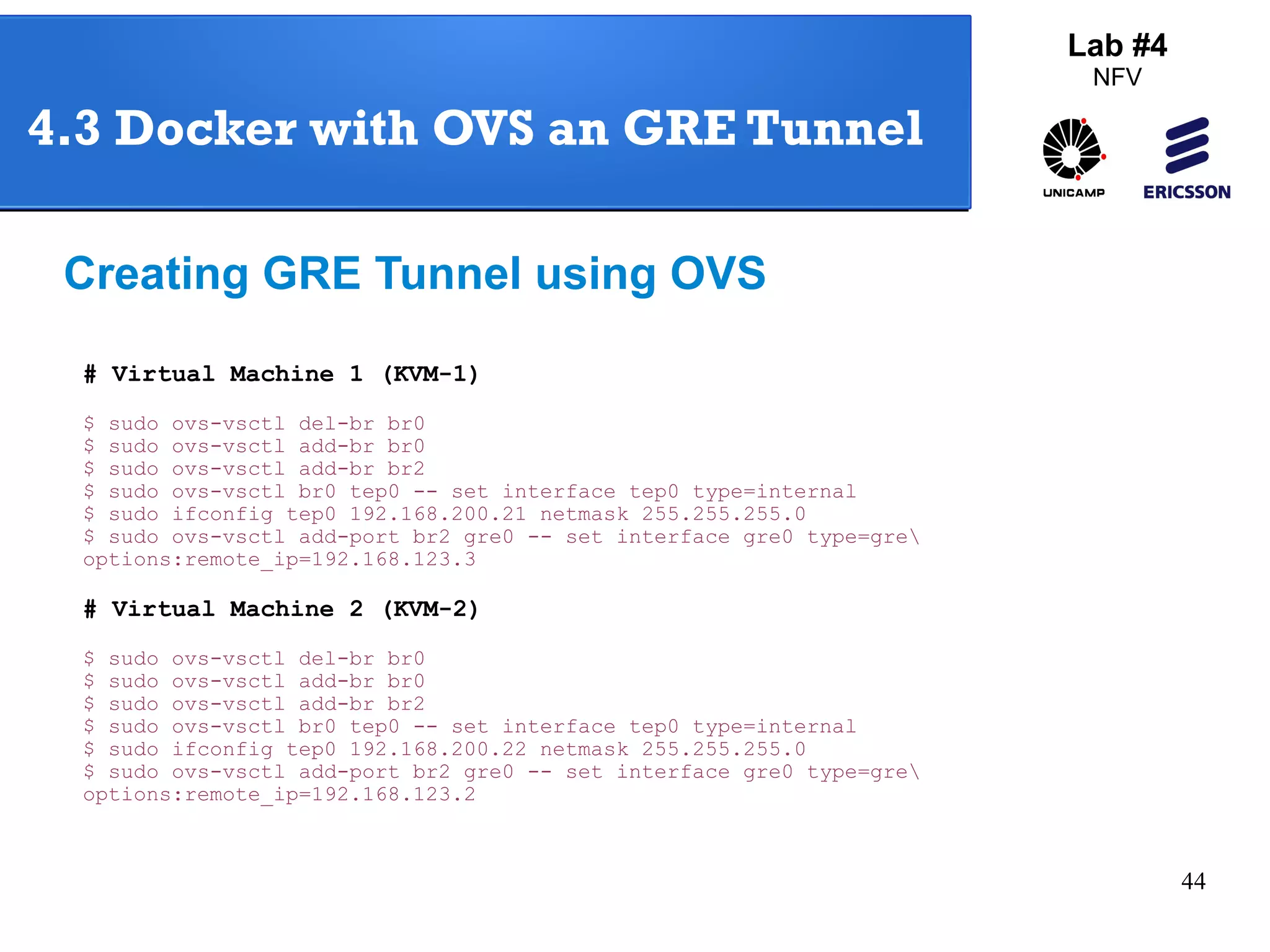

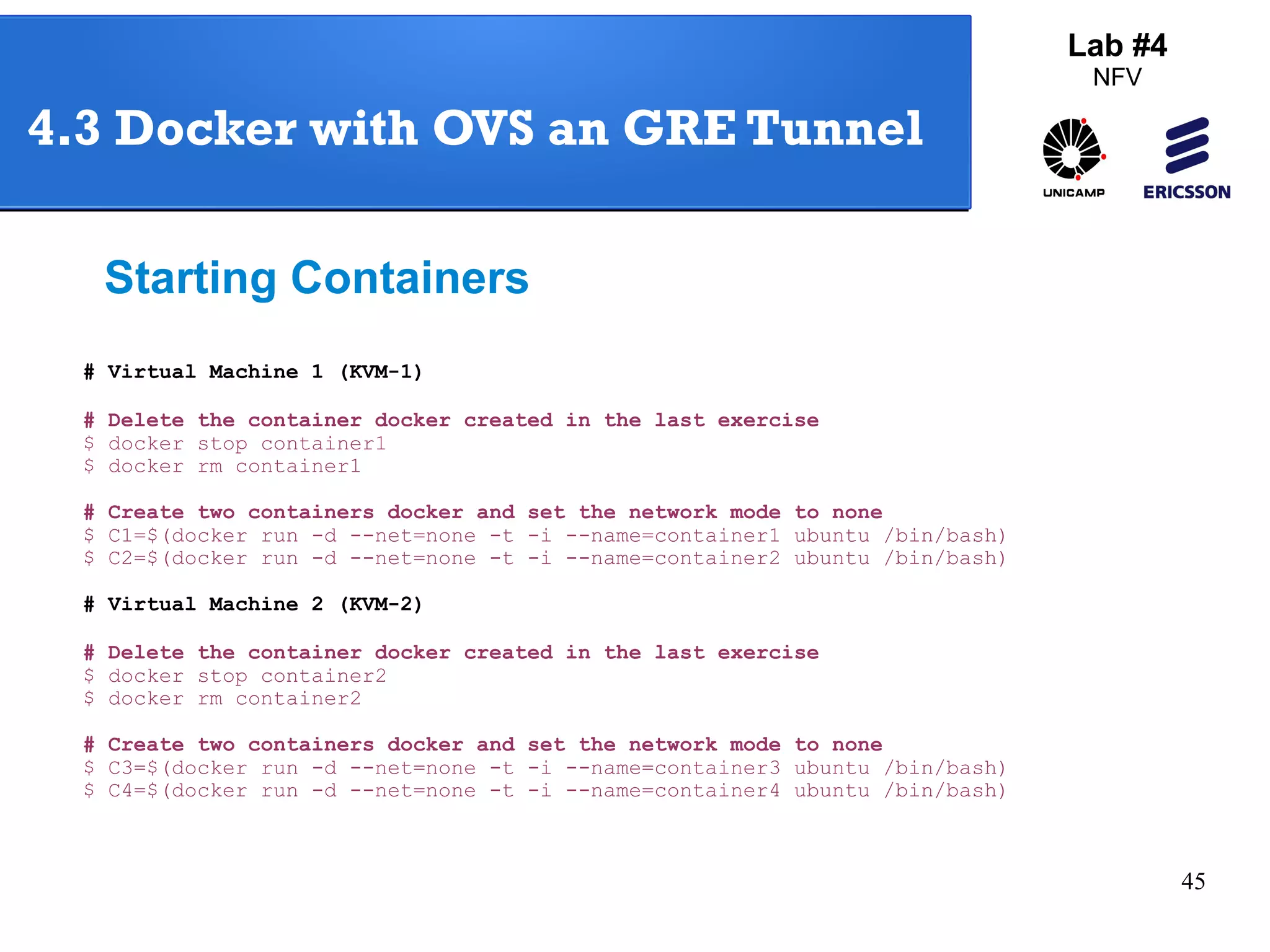

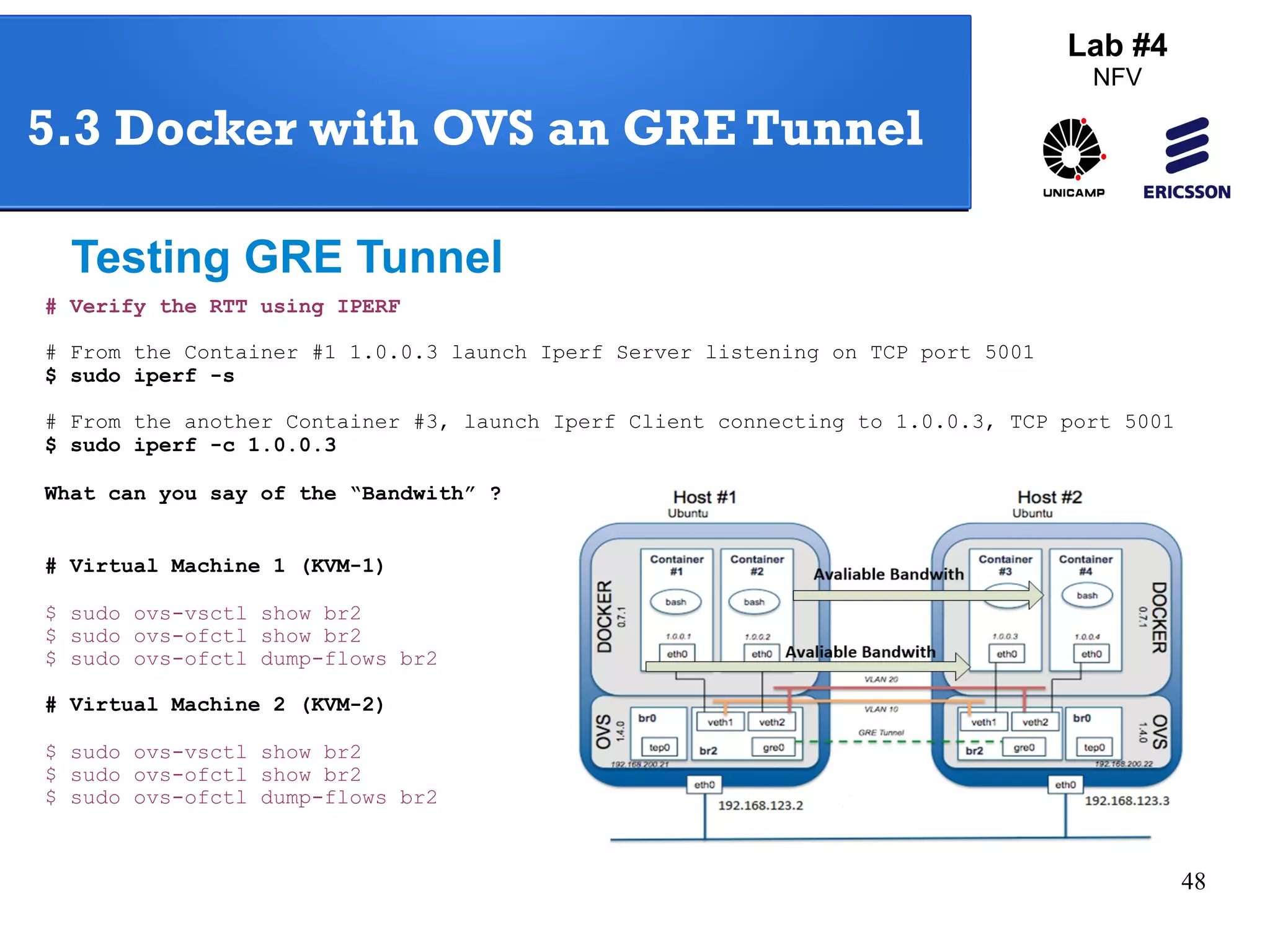

The document describes a Network Functions Virtualization (NFV) lab made up of exercises on Linux namespaces, port mirroring with Open vSwitch (OVS), and Linux containers (LXC). It gives the steps to create and manage Linux namespaces, attach interfaces to them, and verify connectivity, and then to configure OVS for port mirroring. It also explains how to set up Linux containers with LXC and how to create GRE tunnels between virtual machines.
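
As a rough illustration of the namespace, port-mirroring, and GRE steps summarized above, the sketch below builds the kind of `ip` and `ovs-vsctl` command lines such a lab typically uses. It only prints the commands and does not run them. Every name and address in it (`ns1`, `veth0`, `veth1`, `br0`, `p1`, `p2`, `m0`, `gre0`, and the IP addresses) is a hypothetical placeholder, not taken from the lab itself, and the lab's own commands may differ.

```python
import shlex


def namespace_commands(ns="ns1", host_if="veth0", ns_if="veth1",
                       host_ip="10.0.0.1/24", ns_ip="10.0.0.2/24"):
    """Build iproute2 commands that create a namespace, attach one end of a
    veth pair to it, and ping the host end from inside the namespace.
    All names and addresses are hypothetical defaults."""
    in_ns = ["ip", "netns", "exec", ns]
    return [
        ["ip", "netns", "add", ns],
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ns_if],
        ["ip", "link", "set", ns_if, "netns", ns],
        ["ip", "addr", "add", host_ip, "dev", host_if],
        ["ip", "link", "set", host_if, "up"],
        in_ns + ["ip", "addr", "add", ns_ip, "dev", ns_if],
        in_ns + ["ip", "link", "set", ns_if, "up"],
        # Connectivity check: ping the host-side address from the namespace.
        in_ns + ["ping", "-c", "3", host_ip.split("/")[0]],
    ]


def mirror_command(bridge="br0", src_port="p1", out_port="p2", name="m0"):
    """Build one ovs-vsctl call that mirrors traffic to and from src_port
    onto out_port (bridge and port names are hypothetical)."""
    return ["ovs-vsctl",
            "--", "set", "Bridge", bridge, "mirrors=@m",
            "--", "--id=@src", "get", "Port", src_port,
            "--", "--id=@out", "get", "Port", out_port,
            "--", "--id=@m", "create", "Mirror", f"name={name}",
            "select-src-port=@src", "select-dst-port=@src",
            "output-port=@out"]


def gre_command(bridge="br0", port="gre0", remote_ip="192.168.56.102"):
    """Build the ovs-vsctl call that adds a GRE tunnel port pointing at the
    other VM (the remote address is a placeholder)."""
    return ["ovs-vsctl", "add-port", bridge, port,
            "--", "set", "interface", port, "type=gre",
            f"options:remote_ip={remote_ip}"]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in namespace_commands() + [mirror_command(), gre_command()]:
        print(shlex.join(cmd))
```

Running the commands for real would require root privileges and an existing OVS bridge with the named ports. Printing them first makes it easy to check each step against the lab instructions before executing anything.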