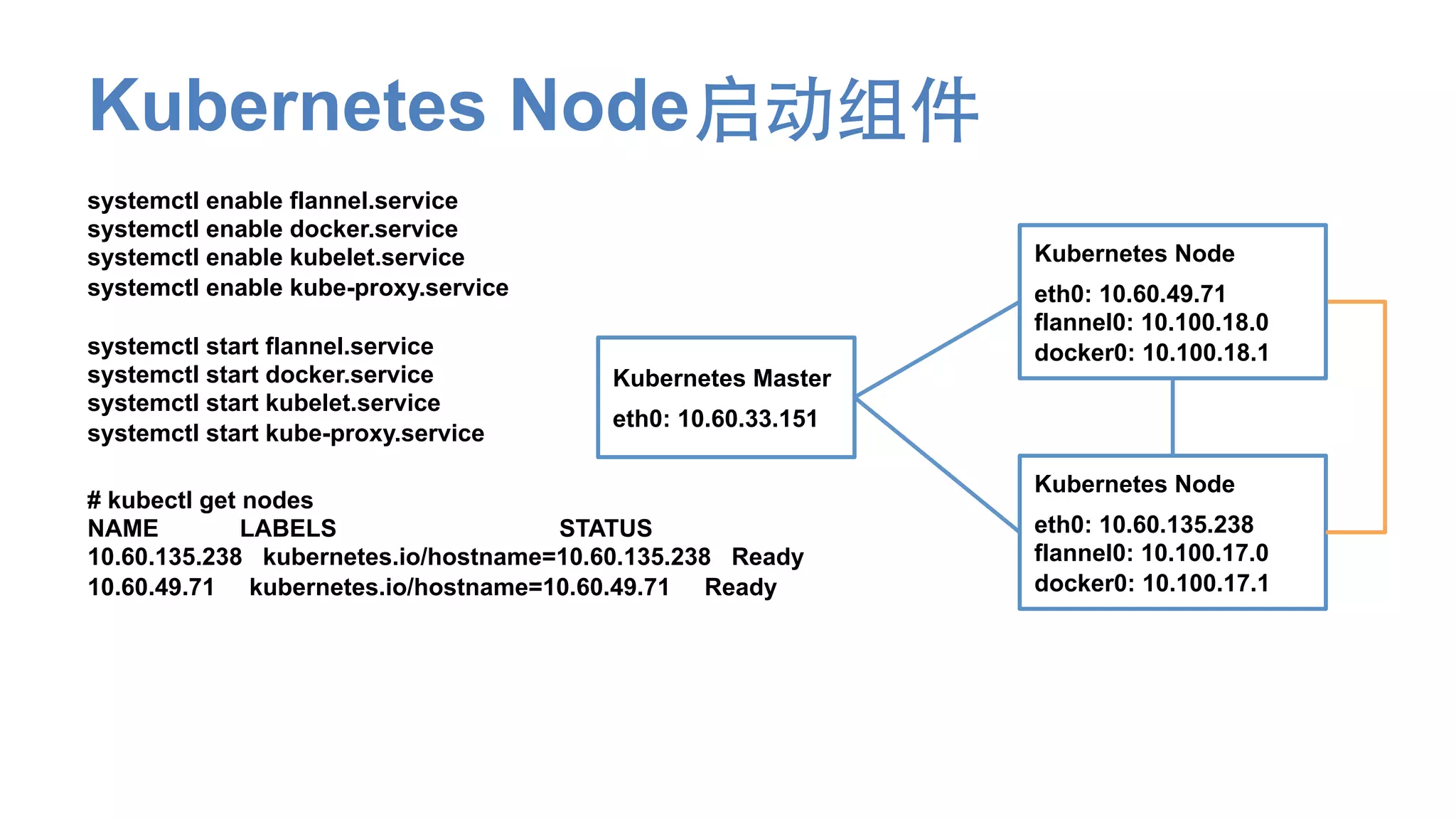

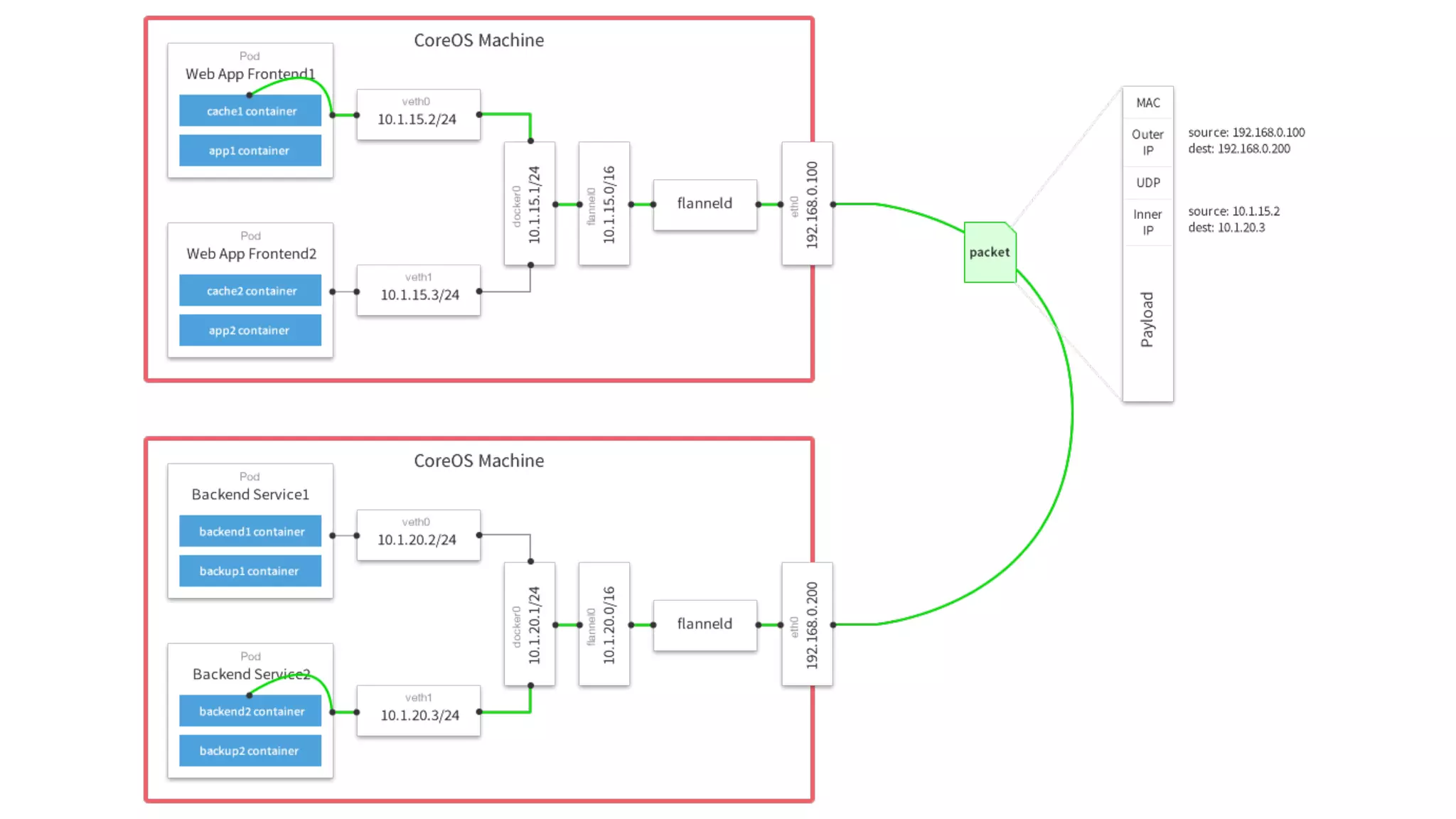

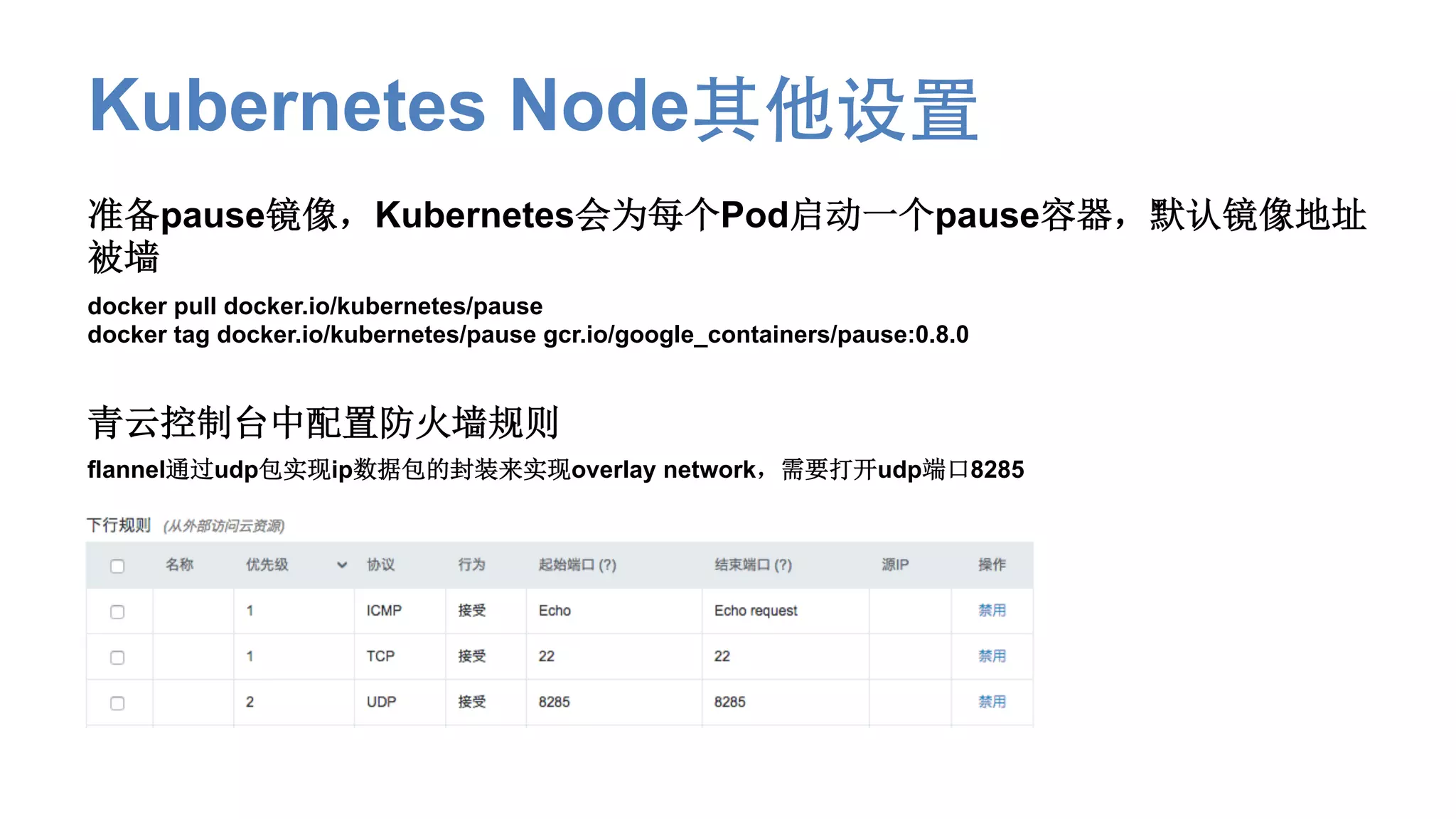

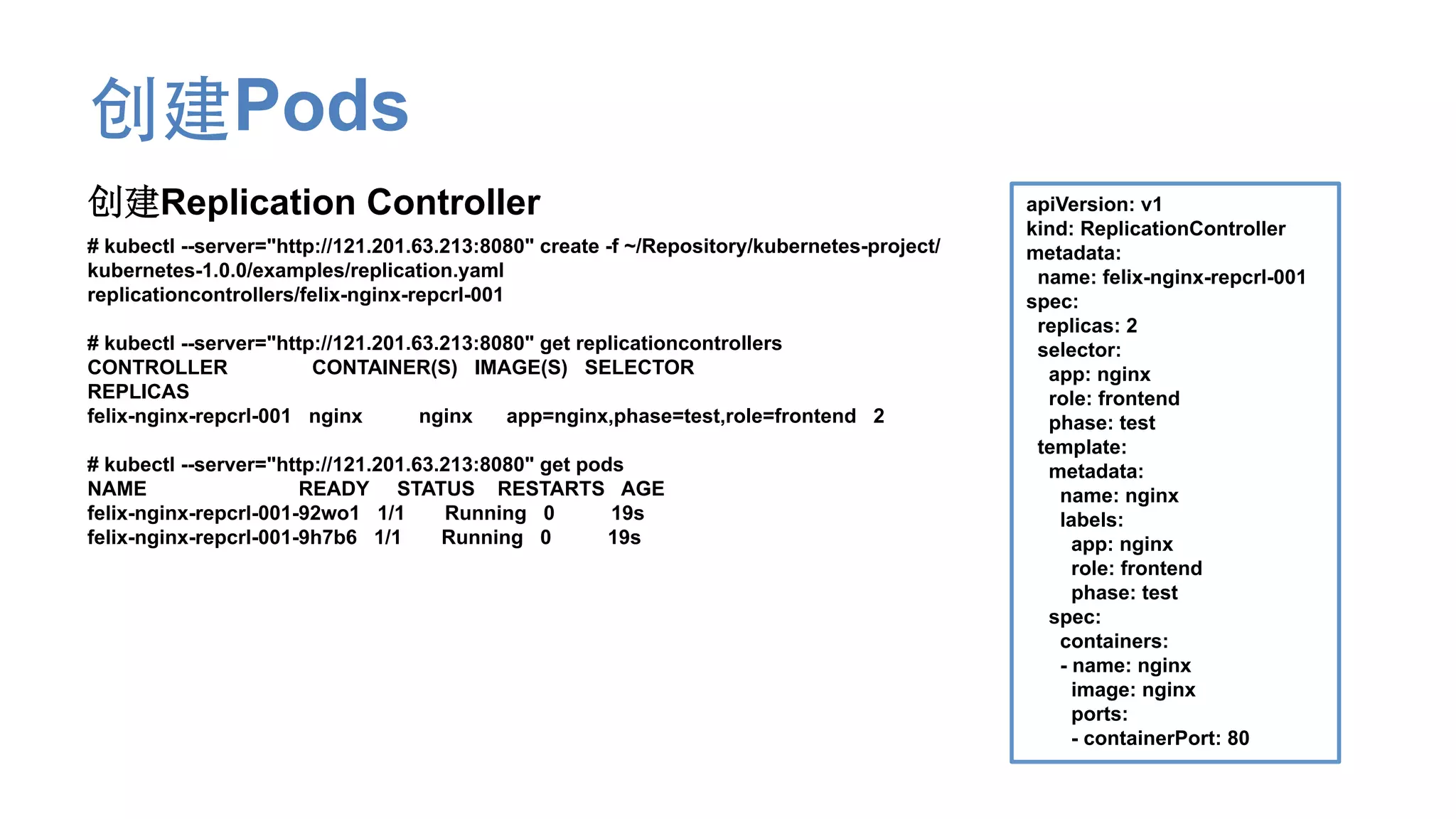

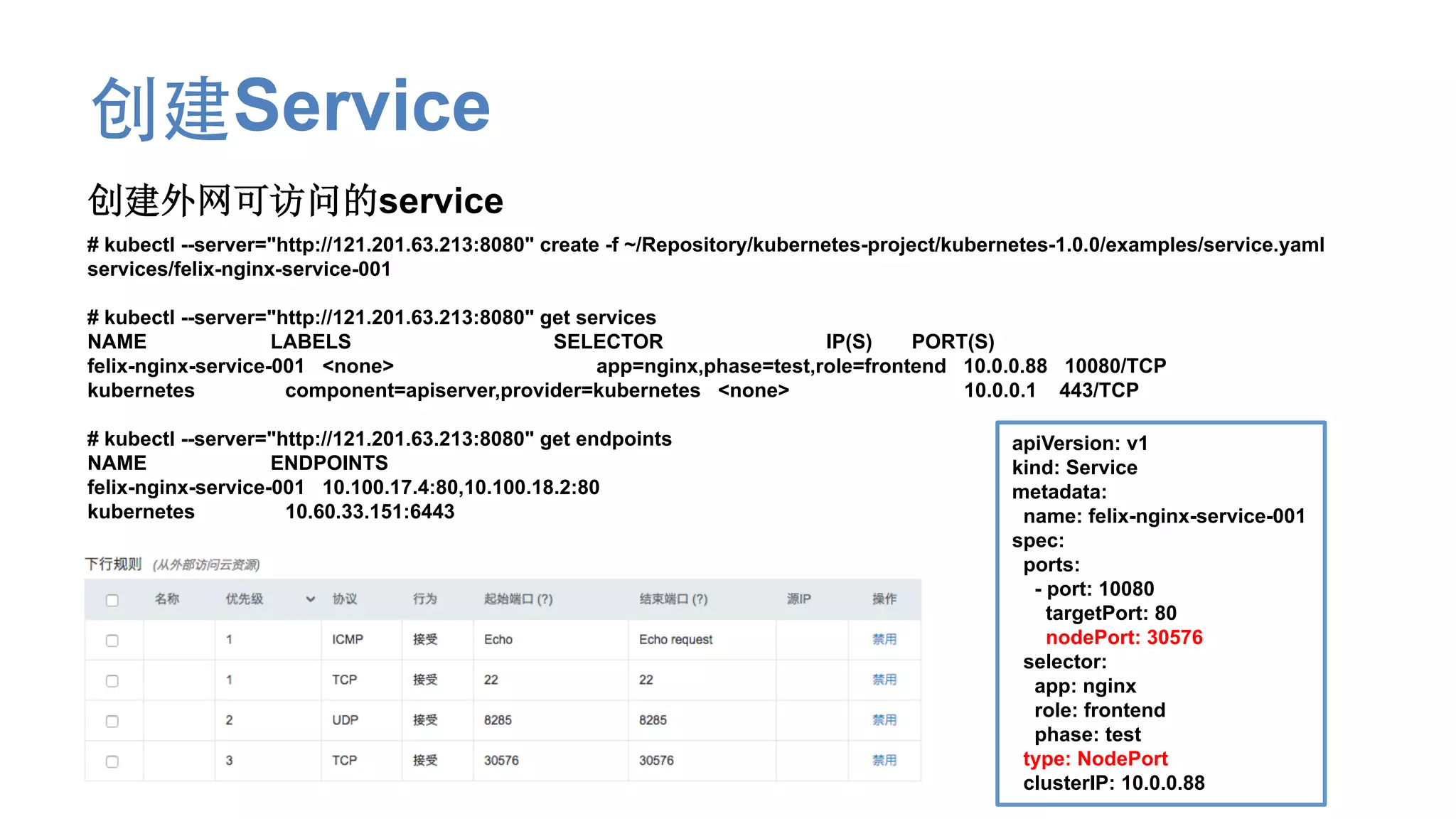

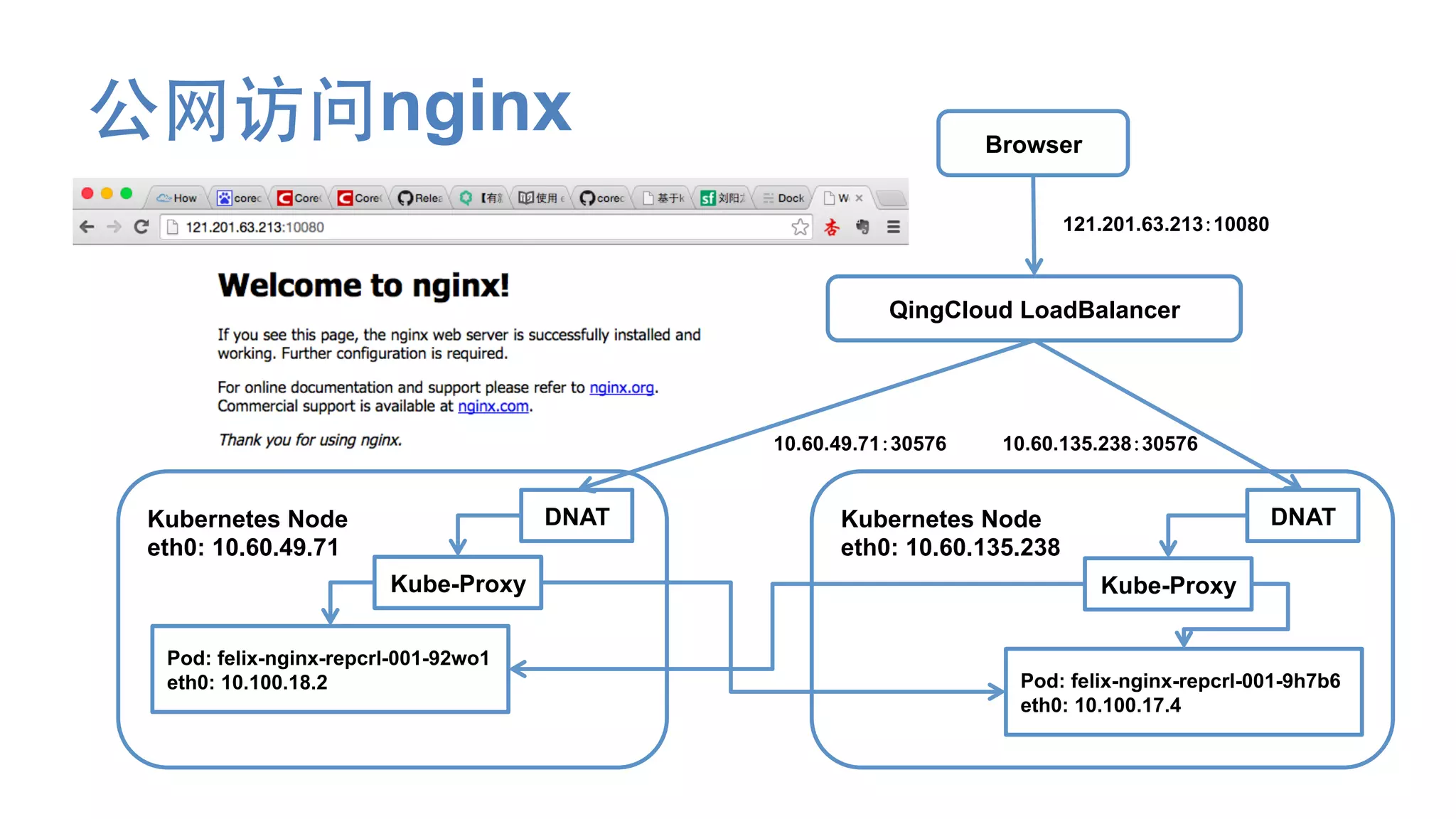

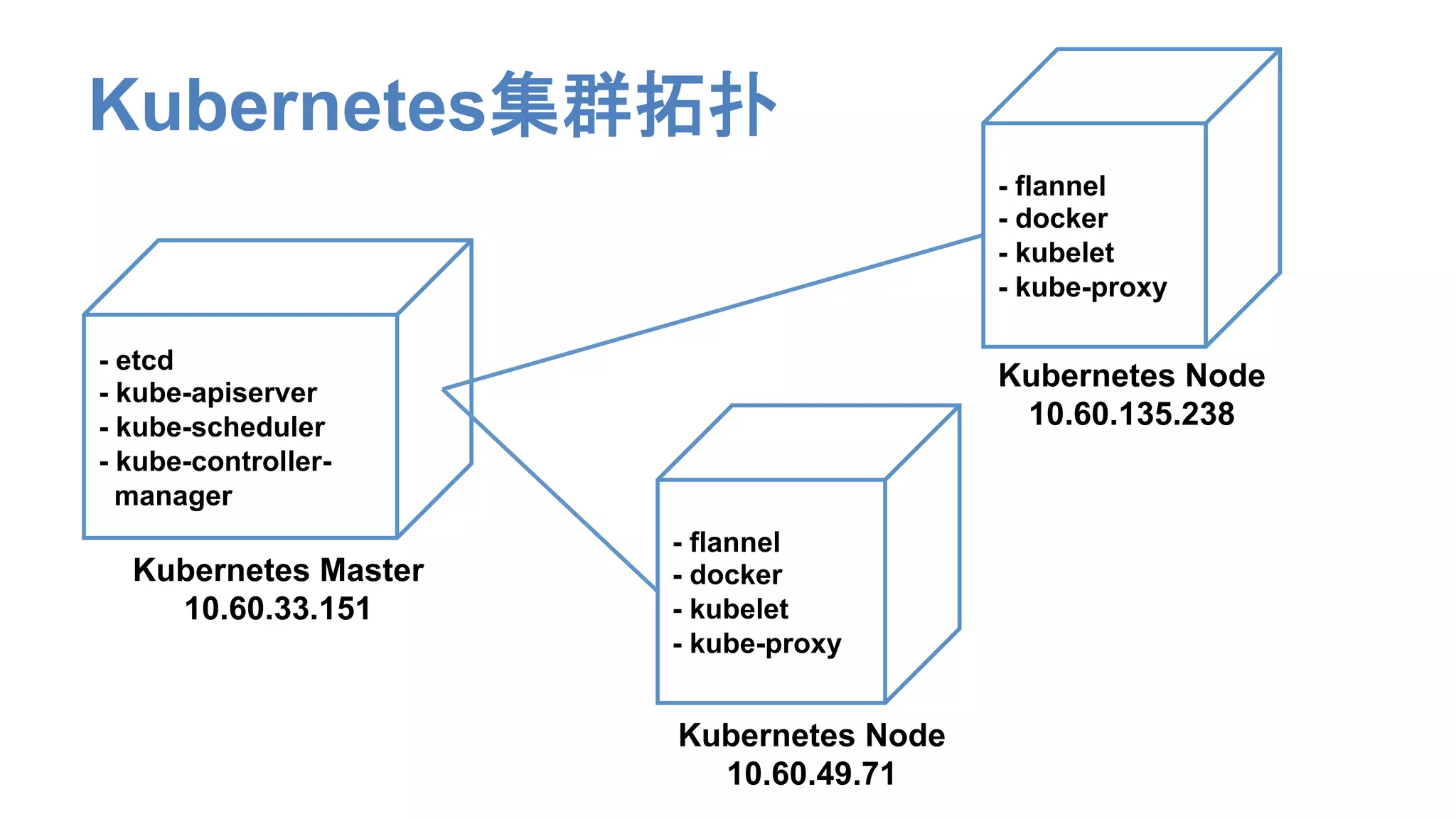

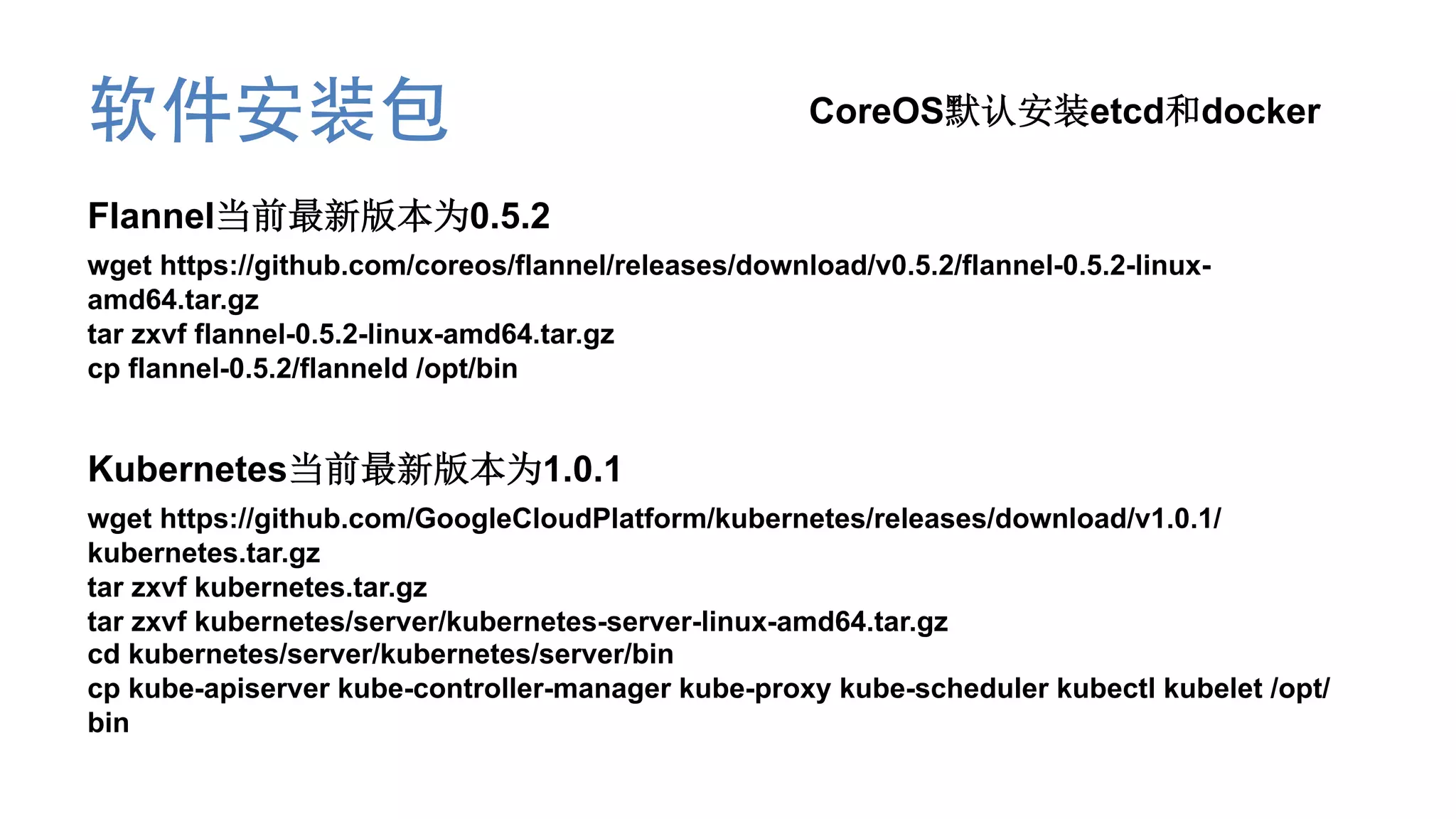

This document describes how to deploy a Kubernetes cluster on CoreOS virtual machines, including how to set up the Kubernetes master and nodes. It covers installing the software packages, configuring components such as etcd and flannel, and creating replication controllers and services to deploy applications. The cluster consists of one master and two nodes, with nginx pods load-balanced across the nodes by a QingCloud load balancer.

![Kubernetes Master configuration
etcd startup configuration file: /etc/systemd/system/k8setcd.service
[Unit]
Description=Etcd Key-Value Store for Kubernetes Cluster
[Service]
ExecStart=/usr/bin/etcd2 \
  --name 'default' \
  --data-dir '/root/Data/etcd/data' \
  --advertise-client-urls 'http://0.0.0.0:4001' \
  --listen-client-urls 'http://0.0.0.0:4001'
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-4-2048.jpg)

![Kubernetes Master configuration
kube-apiserver startup configuration file: /etc/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
After=k8setcd.service
Wants=k8setcd.service
[Service]
ExecStart=/opt/bin/kube-apiserver \
  --v=3 \
  --admission_control=NamespaceLifecycle,NamespaceAutoProvision,LimitRanger,ResourceQuota \
  --address=0.0.0.0 \
  --port=8080 \
  --etcd_servers=http://127.0.0.1:4001 \
  --service-cluster-ip-range=10.0.0.0/24
ExecStartPost=-/bin/bash -c "until /usr/bin/curl http://127.0.0.1:8080; do echo 'waiting for API server to come online...'; sleep 3; done"
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-5-2048.jpg)
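The `ExecStartPost` line in the kube-apiserver unit is a poll-until-ready loop: it retries `curl` every 3 seconds until the API server answers. A minimal Python sketch of the same pattern follows. The function names `wait_until` and `api_server_up` and the `max_attempts` cap are assumptions made for illustration; the shell loop in the unit file retries without a limit.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def wait_until(probe: Callable[[], bool], interval: float = 3.0,
               max_attempts: int = 20,
               sleep: Callable[[float], None] = time.sleep) -> int:
    """Call probe() until it returns True and return the attempts used.

    Raises TimeoutError after max_attempts failed probes. The cap is an
    addition for this sketch; the unit file's loop has no upper bound.
    """
    for attempt in range(1, max_attempts + 1):
        if probe():
            return attempt
        print("waiting for API server to come online...")
        sleep(interval)
    raise TimeoutError(f"not ready after {max_attempts} attempts")


def api_server_up(url: str = "http://127.0.0.1:8080") -> bool:
    """Rough equivalent of `curl URL`: True if the server answers at all."""
    try:
        urllib.request.urlopen(url, timeout=2)
        return True
    except urllib.error.HTTPError:
        # An HTTP error status still means something is listening;
        # curl without -f also exits 0 in that case.
        return True
    except OSError:
        # Connection refused, timeout, DNS failure, and similar.
        return False
```

Calling `wait_until(api_server_up)` on the master would block until port 8080 responds. Passing the probe and the sleep function as parameters keeps the loop testable without a running cluster.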

![Kubernetes Master configuration
kube-scheduler startup configuration file: /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
After=k8setcd.service
After=kube-apiserver.service
Wants=k8setcd.service
Wants=kube-apiserver.service
[Service]
ExecStart=/opt/bin/kube-scheduler \
  --v=3 \
  --master=http://127.0.0.1:8080
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-6-2048.jpg)

![Kubernetes Master configuration
kube-controller-manager startup configuration file: /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
After=k8setcd.service
After=kube-apiserver.service
Wants=k8setcd.service
Wants=kube-apiserver.service
[Service]
ExecStart=/opt/bin/kube-controller-manager \
  --v=3 \
  --master=http://127.0.0.1:8080
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-7-2048.jpg)
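The `After=` lines in the four master units define a start order: etcd first, then the API server, then the scheduler and controller manager. The sketch below derives one valid order from those declarations with Python's standard `graphlib` module. The `AFTER` table is transcribed from the unit files above; the helper name `start_order` is an assumption for illustration, and this is not how systemd itself computes ordering.

```python
from graphlib import TopologicalSorter

# After= relationships copied from the four master unit files.
# Each key must start after every unit in its list.
AFTER = {
    "k8setcd.service": [],
    "kube-apiserver.service": ["k8setcd.service"],
    "kube-scheduler.service": ["k8setcd.service", "kube-apiserver.service"],
    "kube-controller-manager.service": ["k8setcd.service",
                                        "kube-apiserver.service"],
}


def start_order(after):
    """Return one valid start order, dependencies first."""
    return list(TopologicalSorter(after).static_order())


ORDER = start_order(AFTER)
print(ORDER)
```

Note that `After=` only orders units that are being started anyway; it is the matching `Wants=` lines that pull the dependency in. The scheduler and controller manager have no ordering between each other, so either may come third.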

![Kubernetes Node configuration
flannel startup configuration file: /etc/systemd/system/flannel.service
[Unit]
Description=Flannel for Overlay Network
[Service]
ExecStart=/opt/bin/flanneld \
  -v=3 \
  -etcd-endpoints=http://10.60.33.151:4001
ExecStartPost=-/bin/bash -c "until [ -e /var/run/flannel/subnet.env ]; do echo 'waiting for write.'; sleep 3; done"
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-10-2048.jpg)

![Kubernetes Node configuration
docker startup configuration file: /etc/systemd/system/docker.service
[Unit]
Description=Docker container engine configured to run with flannel
Requires=flannel.service
After=flannel.service
[Service]
EnvironmentFile=/var/run/flannel/subnet.env
ExecStartPre=-/usr/bin/ip link set dev docker0 down
ExecStartPre=-/usr/sbin/brctl delbr docker0
ExecStart=/usr/bin/docker -d -s=btrfs -H fd:// --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-11-2048.jpg)
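The docker unit depends on flannel because it reads `FLANNEL_SUBNET` and `FLANNEL_MTU` from `/var/run/flannel/subnet.env`, which flanneld writes once it has leased a subnet. The sketch below shows how those two variables turn into the `--bip` and `--mtu` arguments. The sample file contents are hypothetical values chosen for illustration, and the helper names are assumptions; on a real node systemd performs this substitution itself through `EnvironmentFile=`.

```python
def parse_env_file(text):
    """Parse KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def docker_network_args(env):
    """Build the flannel-derived arguments used in the docker unit."""
    return [f"--bip={env['FLANNEL_SUBNET']}", f"--mtu={env['FLANNEL_MTU']}"]


# Hypothetical subnet.env contents; real values depend on the flannel
# network configuration stored in etcd.
SAMPLE = """\
FLANNEL_SUBNET=10.1.15.1/24
FLANNEL_MTU=1472
"""

ARGS = docker_network_args(parse_env_file(SAMPLE))
print(ARGS)
```

If the file does not exist yet, the variables expand to nothing and docker starts with broken arguments, which is why the flannel unit waits for the file to appear before it reports as started.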

![Kubernetes Node configuration
kubelet startup configuration file: /etc/systemd/system/kubelet.service
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Wants=docker.service
[Service]
ExecStart=/opt/bin/kubelet \
  --v=3 \
  --chaos_chance=0.0 \
  --container_runtime=docker \
  --hostname_override=10.60.135.238 \
  --address=10.60.135.238 \
  --api_servers=10.60.33.151:8080 \
  --port=10250
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-12-2048.jpg)
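The kubelet unit is the only node unit that embeds the node's own IP address, in both `--hostname_override` and `--address`, so each node needs its own copy. The following sketch renders the `ExecStart` line for a given node, using the flags and the master address from the slide. The function name `kubelet_exec_start` is hypothetical, and the IP validation step is an addition for this sketch.

```python
import ipaddress


def kubelet_exec_start(node_ip, master_ip="10.60.33.151",
                       binary="/opt/bin/kubelet"):
    """Render a multi-line systemd ExecStart= entry for one node."""
    # Raises ValueError early if either address is malformed.
    ipaddress.ip_address(node_ip)
    ipaddress.ip_address(master_ip)
    flags = [
        "--v=3",
        "--chaos_chance=0.0",
        "--container_runtime=docker",
        f"--hostname_override={node_ip}",
        f"--address={node_ip}",
        f"--api_servers={master_ip}:8080",
        "--port=10250",
    ]
    # systemd joins lines that end with a backslash, so every line
    # except the last one carries a trailing " \".
    return "ExecStart=" + " \\\n  ".join([binary] + flags)


print(kubelet_exec_start("10.60.135.238"))
```

Generating the line from a single `node_ip` parameter avoids the easy mistake of updating one of the two flags and forgetting the other when the unit is copied to the second node.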

![Kubernetes Node configuration
kube-proxy startup configuration file: /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes proxy server
After=docker.service
Wants=docker.service
[Service]
ExecStart=/opt/bin/kube-proxy --v=3 --master=http://10.60.33.151:8080
Restart=on-failure
RestartSec=5
[Install]
WantedBy=multi-user.target](https://image.slidesharecdn.com/coreoskubernetes-150731032752-lva1-app6892/75/CoreOS-kubernetes-13-2048.jpg)