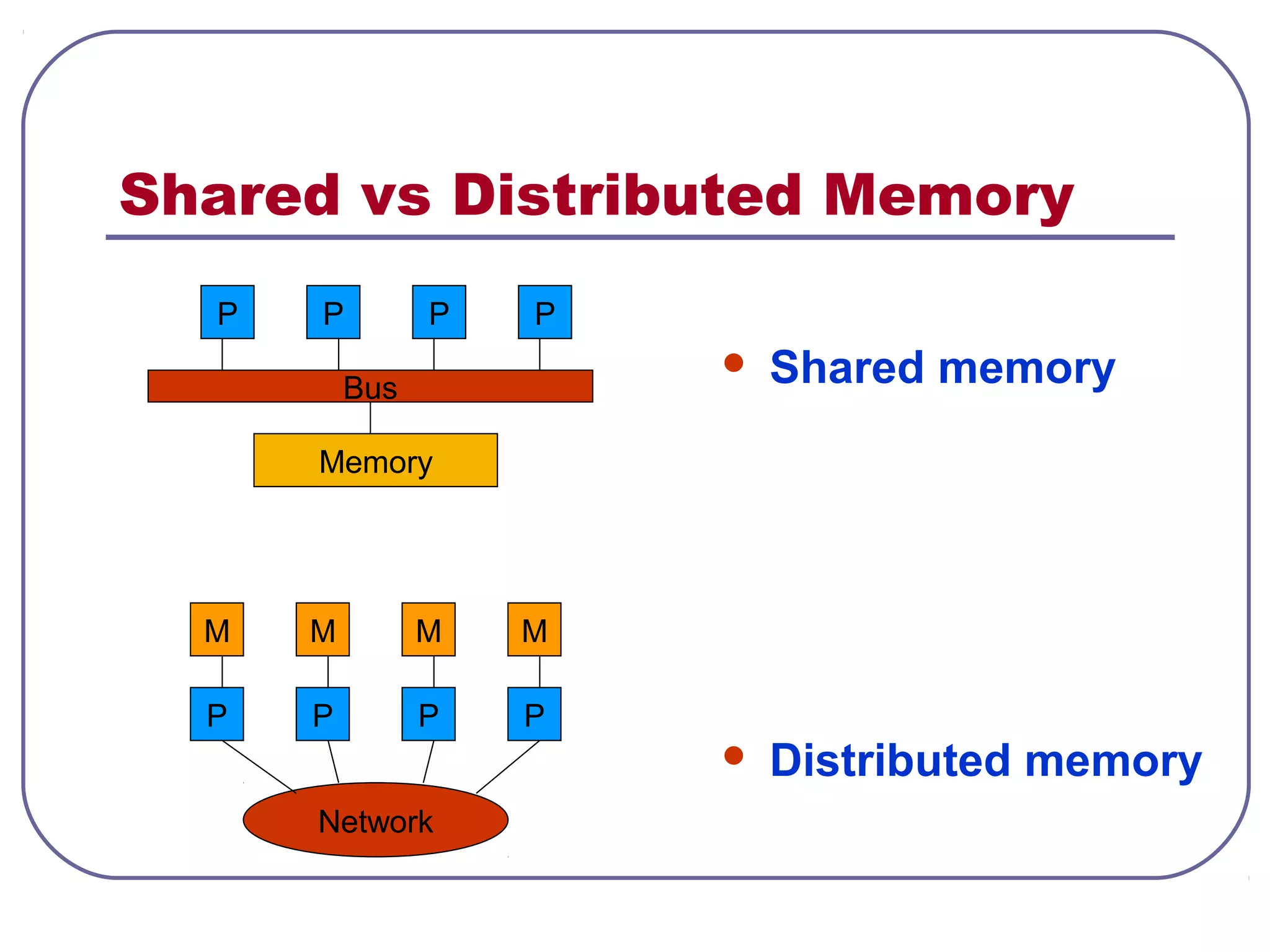

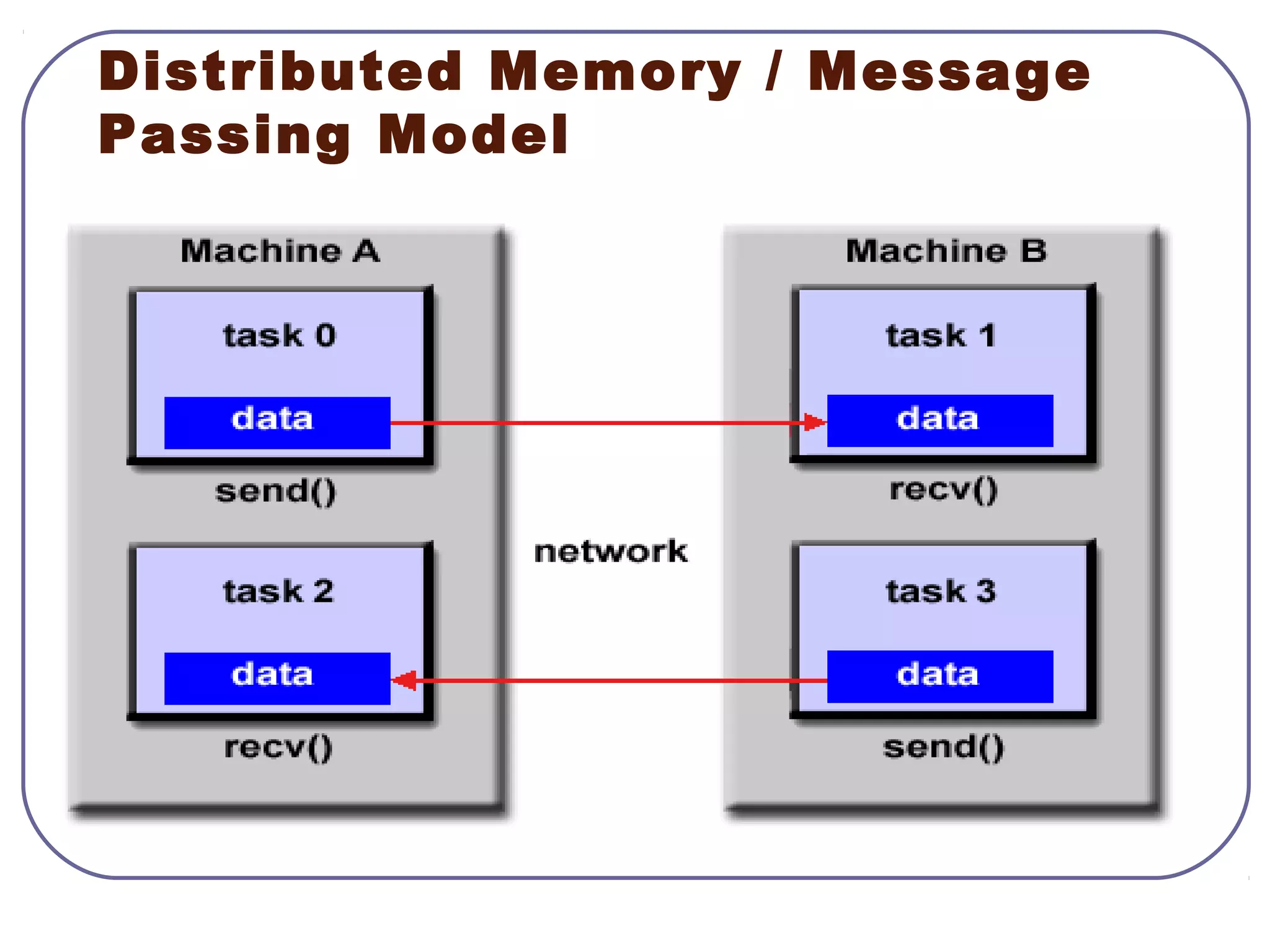

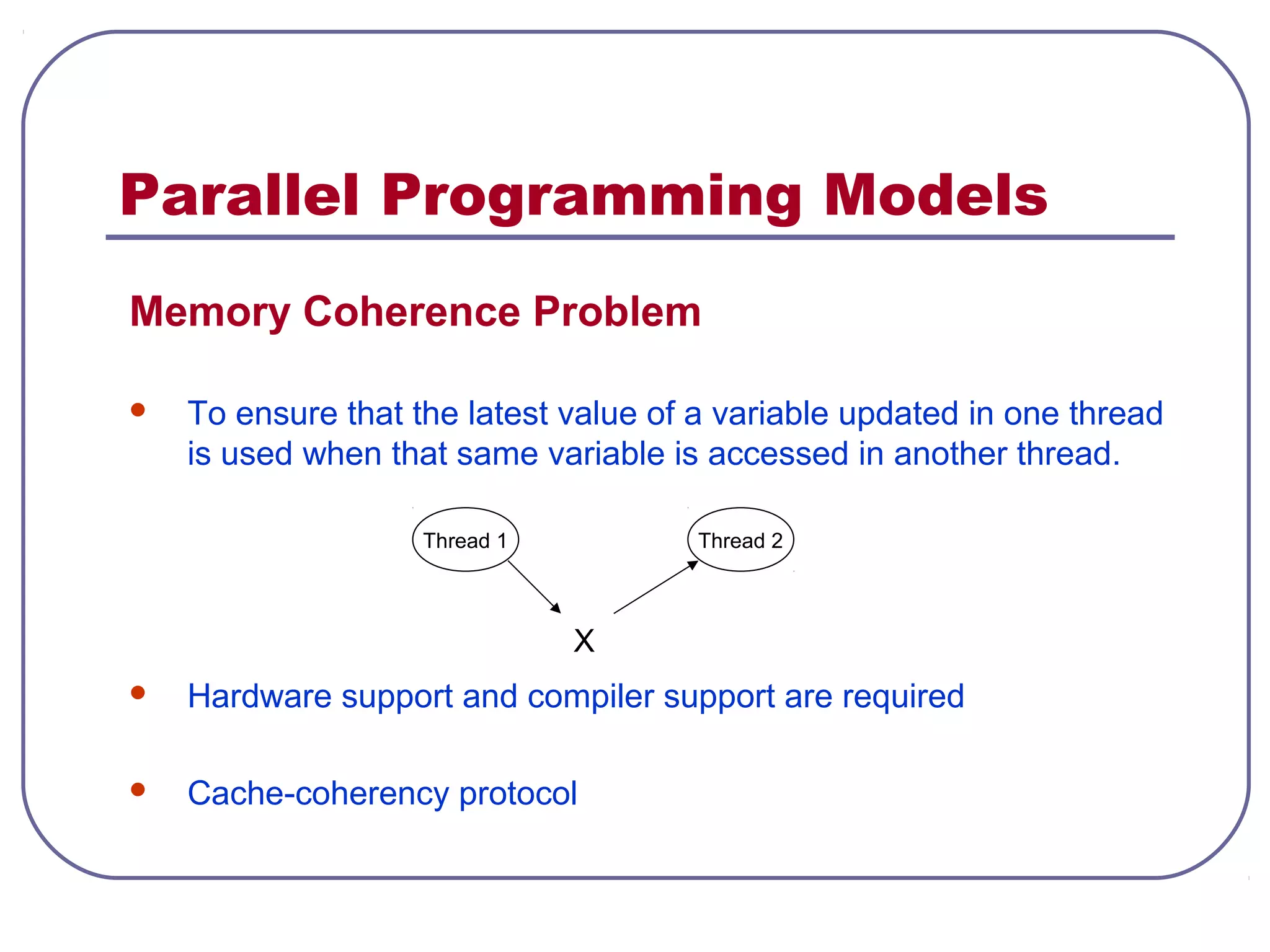

The document surveys several parallel programming models. It contrasts data parallelism, in which the same task runs concurrently on different data elements, with task parallelism, in which different tasks run concurrently. It also covers shared-memory and distributed-memory models, explicit and implicit parallelism, and common parallel programming tools such as MPI. The message-passing and data-parallel models are explained in more detail.
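To make the distinction between these models concrete, here is a minimal Python sketch. It is illustrative only and is not taken from the slides. Everything in it is an assumption made for the example: the function names (`data_parallel_squares`, `task_parallel_stats`, `message_passing_sum`, `worker`), the choice of squaring and summing as workloads, and the use of threads. In the data-parallel part, one operation is mapped over many elements. In the task-parallel part, different operations run side by side on the same data. In the message-passing part, workers interact only by sending and receiving messages on queues, loosely imitating MPI's send/receive style.

```python
from concurrent.futures import ThreadPoolExecutor
import queue
import threading


# --- Data parallelism: the SAME operation applied to different data elements ---
def square(x: int) -> int:
    return x * x


def data_parallel_squares(values: list[int], workers: int = 4) -> list[int]:
    # pool.map schedules one call of `square` per element across the pool;
    # results are returned in input order regardless of completion order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(square, values))


# --- Task parallelism: DIFFERENT operations run concurrently ---
def task_parallel_stats(values: list[int]) -> dict[str, int]:
    # Each entry is a distinct task; all of them read the same list,
    # which lives in memory shared by the threads (shared-memory model).
    tasks = {"sum": sum, "max": max, "min": min}
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = {name: pool.submit(fn, values) for name, fn in tasks.items()}
        return {name: fut.result() for name, fut in futures.items()}


# --- Message passing: workers interact only through send (put) / receive (get) ---
def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    while True:
        msg = inbox.get()          # blocking receive
        if msg is None:            # sentinel message: no more work
            break
        chunk_id, chunk = msg
        outbox.put((chunk_id, sum(chunk)))  # send partial result back


def message_passing_sum(values: list[int], n_workers: int = 3) -> int:
    inbox: queue.Queue = queue.Queue()
    outbox: queue.Queue = queue.Queue()
    threads = [threading.Thread(target=worker, args=(inbox, outbox))
               for _ in range(n_workers)]
    for t in threads:
        t.start()

    # Distribute the data as messages (a strided split across workers).
    chunks = [values[i::n_workers] for i in range(n_workers)]
    for chunk_id, chunk in enumerate(chunks):
        inbox.put((chunk_id, chunk))
    for _ in threads:
        inbox.put(None)            # one stop message per worker

    # Collect one partial sum per chunk, then combine (a simple "reduce").
    partials = [outbox.get() for _ in chunks]
    for t in threads:
        t.join()
    return sum(total for _, total in partials)


if __name__ == "__main__":
    print(data_parallel_squares([1, 2, 3, 4]))
    print(task_parallel_stats([3, 1, 4, 1, 5]))
    print(message_passing_sum(list(range(10))))
```

Threads were chosen so the sketch runs unchanged on any platform. In CPython, however, the global interpreter lock means CPU-bound work like this will not actually run faster, so the example shows the *structure* of each model rather than any speedup. For real parallel execution, one would typically swap in separate processes (for example, the `multiprocessing` module) or an MPI binding such as mpi4py. In those settings each worker has its own memory, and the message-passing pattern above becomes a true distributed-memory design.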