This document discusses virtual memory and demand paging. Some key points:

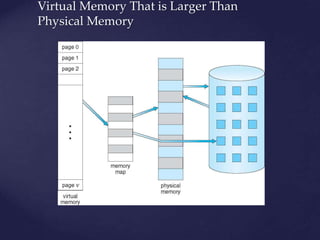

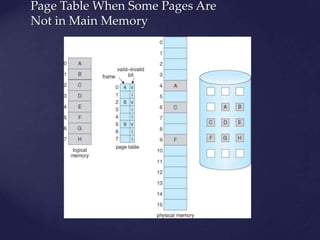

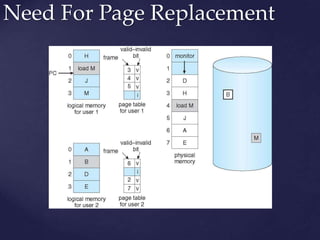

- Virtual memory separates logical memory from physical memory, so a process's logical address space can be larger than the physical memory actually available.
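A small translation sketch shows how logical and physical memory are kept separate. It assumes 4 KiB pages and a single-level page table held in a Python dict. `translate`, `PAGE_SIZE`, and `PageNotResident` are hypothetical names for illustration, not an OS or hardware API. Real MMUs perform this lookup in hardware, typically through a TLB and multi-level page tables.

```python
# A minimal sketch of virtual-to-physical address translation.
# Assumptions (hypothetical): 4 KiB pages and a single-level page table
# stored as a dict mapping page number -> frame number.

PAGE_SIZE = 4096                          # assumed page size in bytes
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 offset bits for 4 KiB pages


class PageNotResident(Exception):
    """Raised when a page has no physical frame (a page fault in a real system)."""


def translate(vaddr: int, page_table: dict[int, int]) -> int:
    """Translate a virtual address to a physical address."""
    page = vaddr >> OFFSET_BITS       # high bits select the page
    offset = vaddr & (PAGE_SIZE - 1)  # low bits are the offset within the page
    if page not in page_table:
        raise PageNotResident(page)
    frame = page_table[page]
    return (frame << OFFSET_BITS) | offset


# A 32-bit virtual address space holds 2**20 pages of 4 KiB,
# yet only three of them are backed by physical frames here.
page_table = {0: 5, 1: 2, 1023: 7}
print(hex(translate(0x1234, page_table)))  # prints 0x2234 (page 1 -> frame 2)
# translate(0x5000, page_table) would raise PageNotResident: page 5 is unmapped.
```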

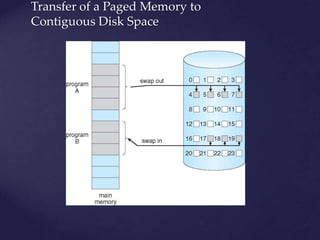

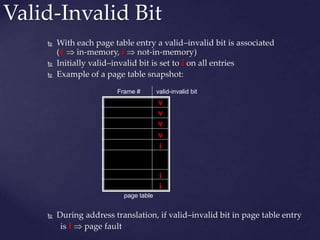

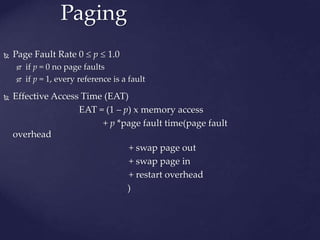

- Demand paging brings a page into memory only when it is first referenced, reducing I/O and memory usage compared with loading the entire program into memory at startup.
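To illustrate the savings, the sketch below counts page loads for a process that touches only a few pages of its image. The 100-page image, the reference string, and the function names are hypothetical, and memory is assumed to be large enough that no page is ever evicted.

```python
# A minimal sketch contrasting eager loading with demand paging.
# Assumptions (hypothetical): a program image of NUM_PAGES pages, a reference
# string of the pages the process actually touches, and unlimited frames.

NUM_PAGES = 100


def eager_load(num_pages: int) -> set[int]:
    """Load every page of the program up front."""
    return set(range(num_pages))


def demand_load(references: list[int]) -> tuple[set[int], int]:
    """Load a page only on its first reference; return resident pages and load count."""
    resident: set[int] = set()
    loads = 0
    for page in references:
        if page not in resident:  # first touch: bring the page in
            resident.add(page)
            loads += 1
    return resident, loads


# The process touches only 4 distinct pages of its 100-page image.
refs = [0, 1, 0, 2, 1, 0, 57, 2]
resident, loads = demand_load(refs)
print(len(eager_load(NUM_PAGES)), loads)  # prints "100 4"
```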

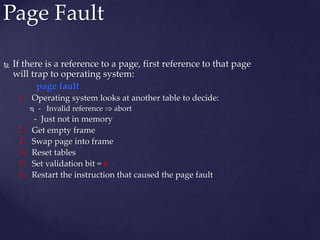

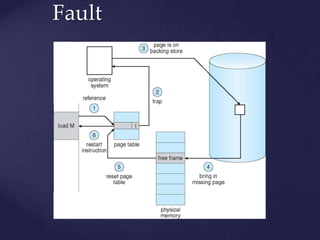

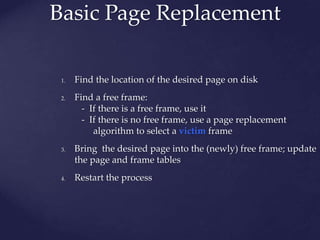

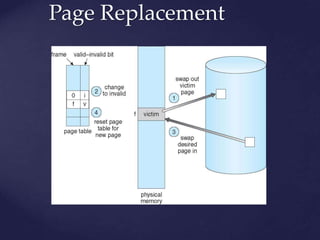

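- A page fault occurs when a process accesses a page that is not currently in memory (its page-table entry is marked invalid). The hardware traps to the OS, which finds an empty frame (or frees one through page replacement if none is empty), reads the page in from backing store, updates the page table, and restarts the faulting instruction.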
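The fault-handling path can be sketched as a toy pager. This is a simulation under stated assumptions, not how any particular kernel implements it: the "disk" is a dict, physical memory is a list of frames, `Pager`, `PageTableEntry`, `access`, and `handle_fault` are hypothetical names, and the sketch raises an error when no empty frame remains instead of choosing a victim.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    frame: int = -1      # physical frame number, meaningful only when valid
    valid: bool = False  # valid-invalid bit: True only while the page is resident


class Pager:
    """Toy demand pager (hypothetical): services faults by filling empty frames."""

    def __init__(self, backing_store, num_frames):
        self.backing_store = backing_store          # page number -> contents on "disk"
        self.memory = [None] * num_frames           # physical frames
        self.free_frames = list(range(num_frames))  # empty frames, lowest first
        self.page_table = {}                        # page number -> PageTableEntry
        self.faults = 0

    def access(self, page):
        entry = self.page_table.setdefault(page, PageTableEntry())
        if not entry.valid:
            self.handle_fault(page, entry)  # "trap" to the OS
        # Restart the access: the page is now resident.
        return self.memory[entry.frame]

    def handle_fault(self, page, entry):
        if page not in self.backing_store:
            raise MemoryError(f"invalid reference to page {page}")
        if not self.free_frames:
            # A real OS would run page replacement here; this sketch stops.
            raise RuntimeError("no empty frame available")
        self.faults += 1
        frame = self.free_frames.pop(0)                # take an empty frame
        self.memory[frame] = self.backing_store[page]  # "read" the page from disk
        entry.frame, entry.valid = frame, True         # update the page table


disk = {0: "code", 1: "data", 2: "stack"}
pager = Pager(disk, num_frames=4)
for p in [0, 1, 0, 2, 1]:
    pager.access(p)
print(pager.faults)  # prints 3: one fault per first touch of pages 0, 1, 2
```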

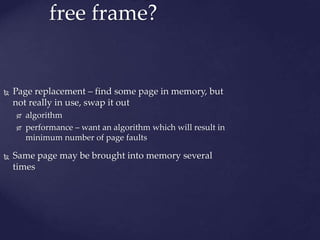

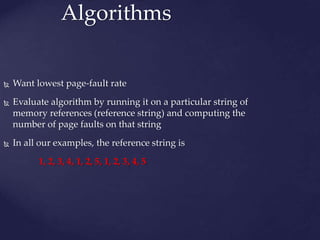

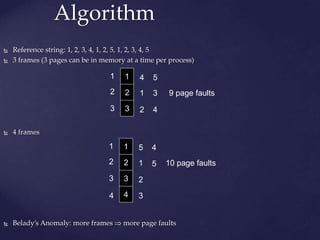

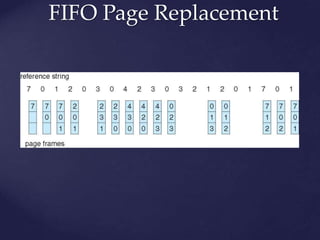

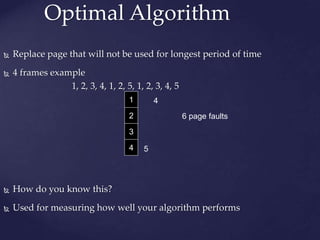

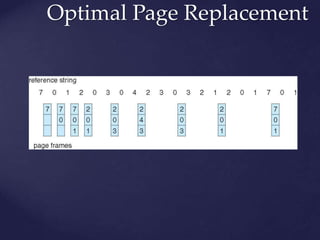

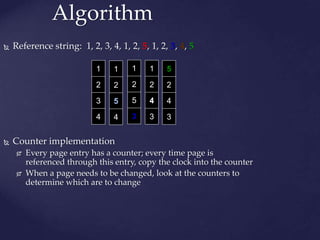

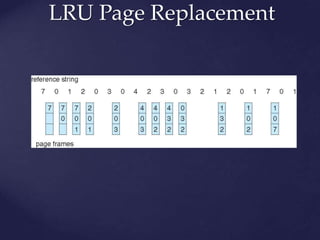

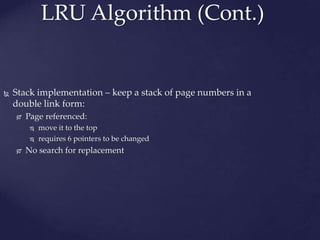

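- When a page must be brought in and no empty frame is available, a page replacement algorithm such as FIFO (evict the page that has been resident longest) or LRU (evict the page that has gone unused longest) selects a victim page to swap out. Only a modified (dirty) victim has to be written back to disk. Building replacement into the page-fault handler prevents physical memory from being over-allocated.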
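FIFO and LRU can be compared by counting page faults on a reference string. The functions below are a minimal simulation; their names and the reference string are illustrative assumptions. They track only which pages are resident and do not model dirty bits or write-back. LRU here is exact, using an `OrderedDict` as a recency list, whereas real systems usually approximate it with hardware reference bits.

```python
from collections import OrderedDict, deque


def fifo_faults(refs: list[int], num_frames: int) -> int:
    """Count page faults under FIFO replacement."""
    frames: set[int] = set()
    queue: deque[int] = deque()  # arrival order; leftmost is oldest
    faults = 0
    for page in refs:
        if page in frames:
            continue  # hit: FIFO order is unchanged
        faults += 1
        if len(frames) == num_frames:
            victim = queue.popleft()  # evict the page loaded earliest
            frames.remove(victim)
        frames.add(page)
        queue.append(page)
    return faults


def lru_faults(refs: list[int], num_frames: int) -> int:
    """Count page faults under LRU replacement."""
    frames: OrderedDict[int, None] = OrderedDict()  # least -> most recently used
    faults = 0
    for page in refs:
        if page in frames:
            frames.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1
        if len(frames) == num_frames:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = None
    return faults


refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
print(fifo_faults(refs, 3), lru_faults(refs, 3))  # prints "15 12"
```

With three frames, LRU incurs fewer faults than FIFO on this string because it retains recently used pages; for example, FIFO evicts page 0 immediately before it is referenced again.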