Transfer Learning: Introduction & Motivation

Adapting Neural Networks

Process

Transfer Learning

Transfer learning reuses the knowledge of a model trained on one task to perform a new task. It is a cheaper, faster way of adapting a neural network because it exploits the generalization properties of the features the network has already learned.

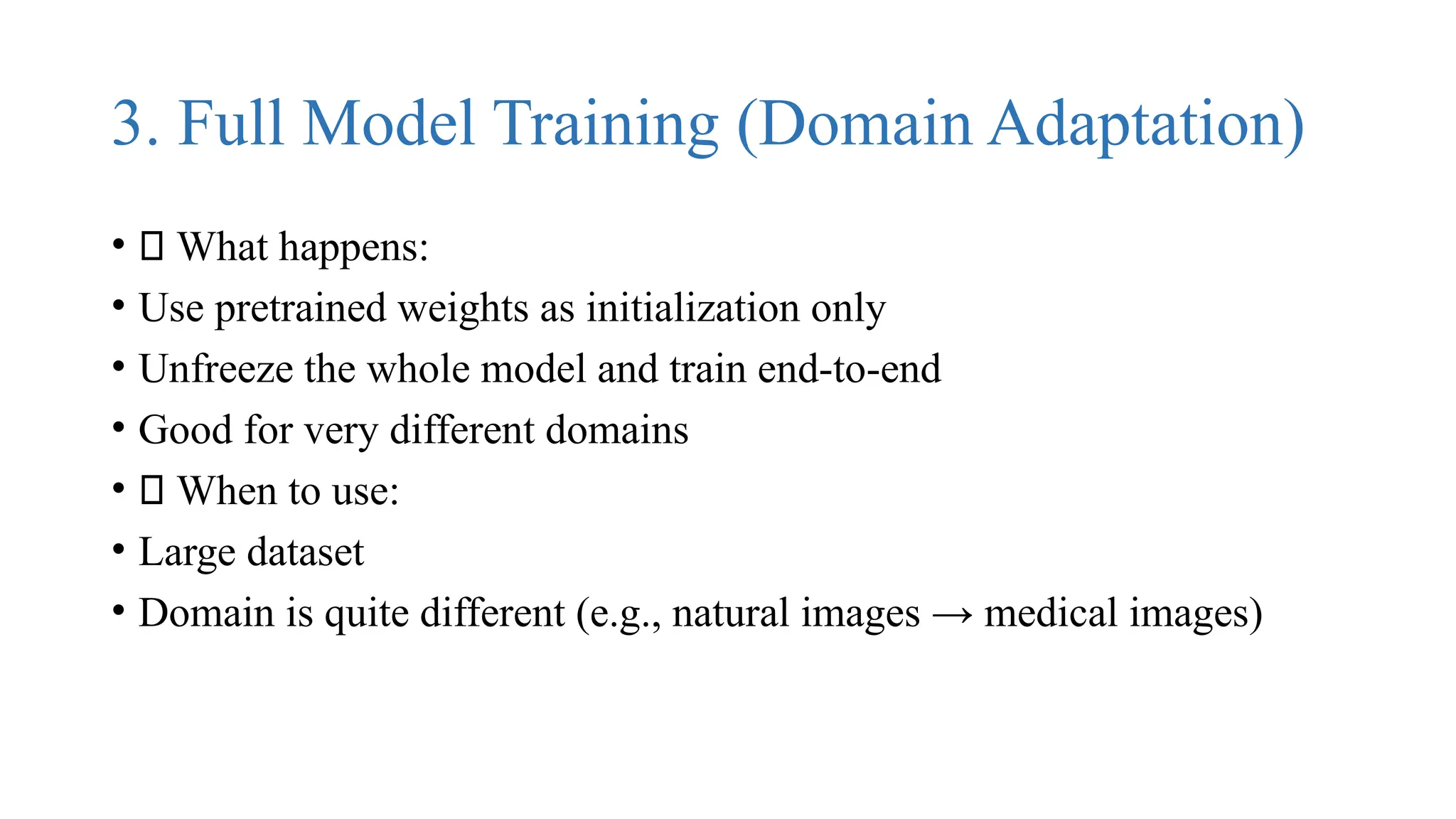

![Slide 19: Add Custom Classifier](https://image.slidesharecdn.com/introductionmotivation-250414142149-668ca54c/75/Introduction-to-transfer-learning-aster-way-of-adapting-a-neural-network-by-exploiting-their-generalization-properties-pptx-19-2048.jpg)

✅ 3. Add Custom Classifier

    from tensorflow.keras import layers, models

    # base_model is the pretrained convolutional base defined in the earlier steps
    model = models.Sequential([
        base_model,
        layers.Flatten(),
        layers.Dense(256, activation='relu'),
        layers.Dropout(0.5),
        layers.Dense(num_classes, activation='softmax')  # num_classes = your number of categories
    ])

• Flatten(): converts the feature maps into a single vector

• Dense(256): learns task-specific combinations of the extracted features

• Dropout(0.5): randomly drops half of the activations during training to reduce overfitting

• Final Dense layer: softmax output for multi-class classification
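To get a feel for the size of this classifier head, its parameter counts can be worked out by hand. The sketch below assumes 224×224×3 inputs, for which the VGG16 convolutional base produces 7×7×512 feature maps, and a hypothetical `num_classes = 5`; neither value comes from the slides.

```python
# Parameter count of the custom classifier head (illustrative assumptions).
# Assumed: VGG16 conv base on 224x224x3 inputs -> 7x7x512 feature maps.
feature_map_shape = (7, 7, 512)
num_classes = 5  # hypothetical; use your own number of categories


def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


# Flatten() has no parameters; it only reshapes 7x7x512 into one vector.
flat_size = 1
for dim in feature_map_shape:
    flat_size *= dim

hidden_params = dense_params(flat_size, 256)    # Dense(256, relu)
output_params = dense_params(256, num_classes)  # Dense(num_classes, softmax)
head_params = hidden_params + output_params     # Dropout adds no parameters

print(f"flattened vector length: {flat_size}")
print(f"Dense(256) parameters:   {hidden_params}")
print(f"output layer parameters: {output_params}")
print(f"total head parameters:   {head_params}")
```

Under these assumptions nearly all of the head's parameters sit in the first Dense layer, because Flatten() hands it a very long vector. That is one reason the Dropout layer is placed right after it.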

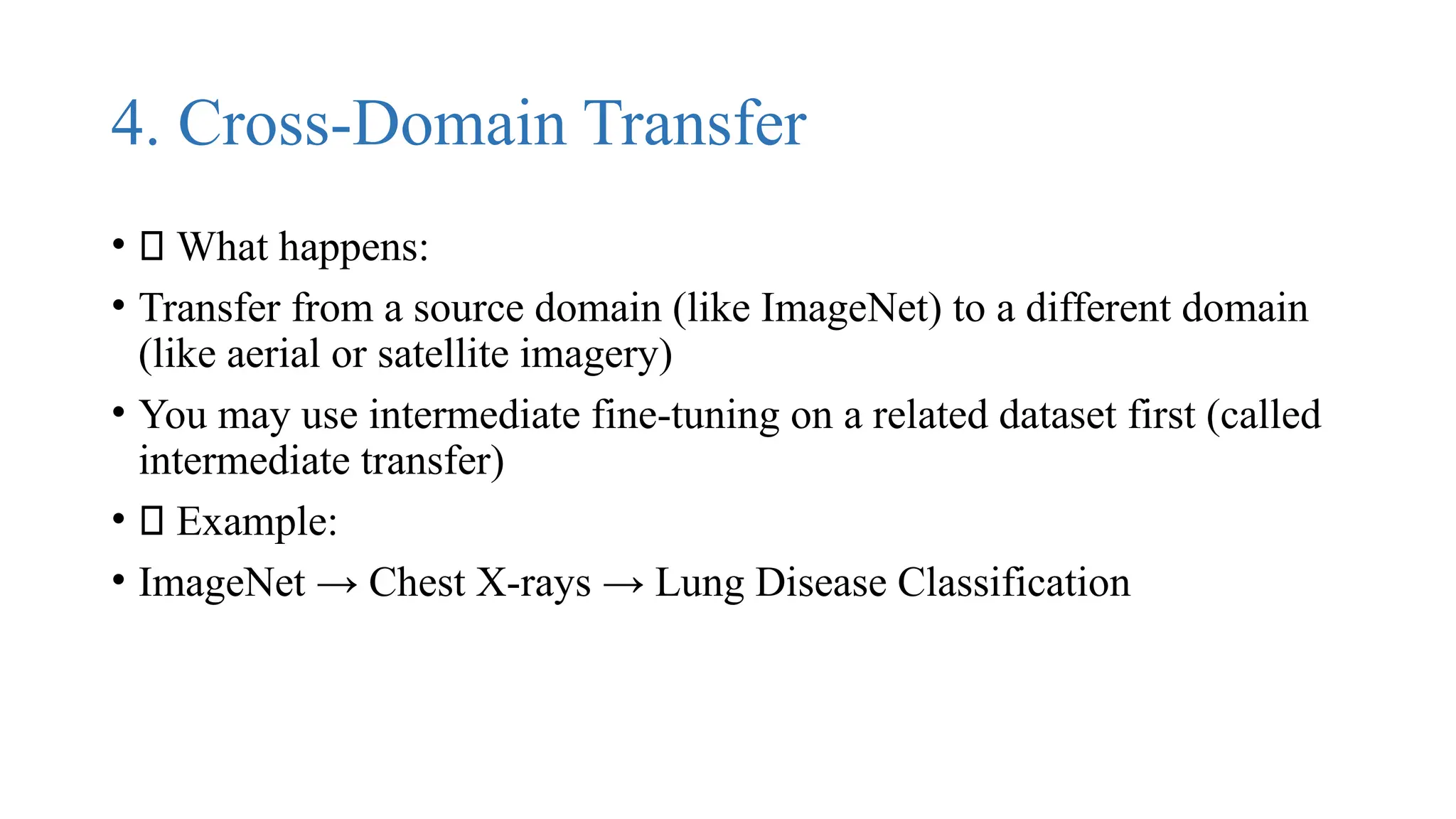

![Slide 20: Compile and Train](https://image.slidesharecdn.com/introductionmotivation-250414142149-668ca54c/75/Introduction-to-transfer-learning-aster-way-of-adapting-a-neural-network-by-exploiting-their-generalization-properties-pptx-20-2048.jpg)

✅ 4. Compile and Train

    model.compile(optimizer='adam',
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])

    model.fit(train_data, validation_data=val_data, epochs=10)

• Use the Adam optimizer with categorical cross-entropy loss, which expects one-hot encoded labels

• Passing validation_data reports loss and accuracy on the validation set after each epoch
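What the softmax output and the categorical cross-entropy loss compute can be shown without any framework. The following is a minimal pure-Python sketch, not Keras's implementation; the logits, the label, and the `eps` clipping value are hypothetical choices made for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(one_hot_label, probs, eps=1e-7):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(one_hot_label, probs))


# Hypothetical example: 3 classes, the true class is index 0.
logits = [2.0, 1.0, 0.1]
label = [1, 0, 0]

probs = softmax(logits)
loss = categorical_crossentropy(label, probs)

print("probabilities:", [round(p, 3) for p in probs])
print("loss:", round(loss, 3))
```

With a one-hot label the loss reduces to the negative log of the probability assigned to the true class, so training pushes that probability toward 1. If the labels are integer class indices rather than one-hot vectors, Keras provides `sparse_categorical_crossentropy` for that case.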

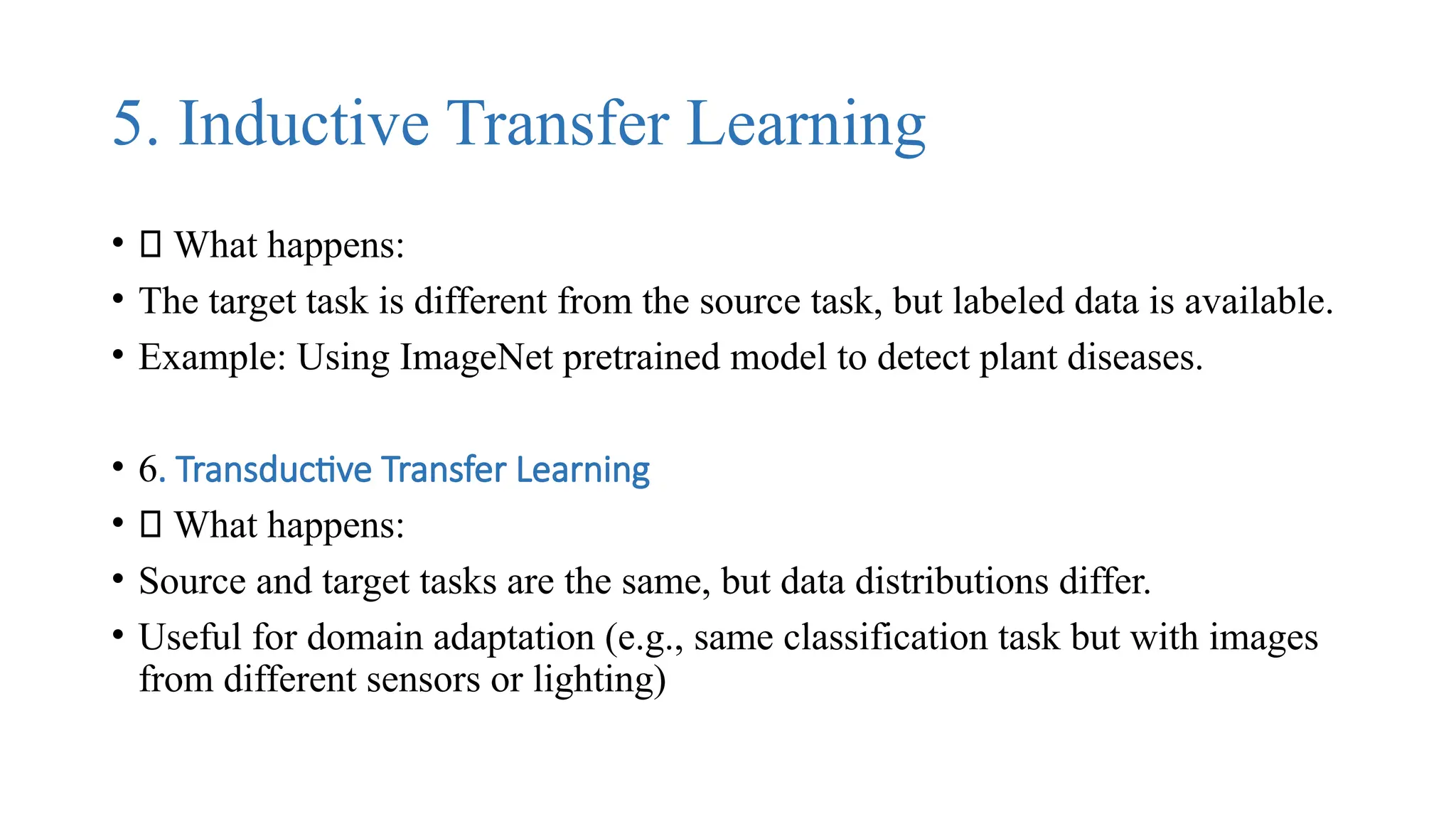

![Slide 22: Unfreeze Some VGG16 Layers](https://image.slidesharecdn.com/introductionmotivation-250414142149-668ca54c/75/Introduction-to-transfer-learning-aster-way-of-adapting-a-neural-network-by-exploiting-their-generalization-properties-pptx-22-2048.jpg)

✅ 5. Unfreeze Some VGG16 Layers (e.g., last 4 layers)

    for layer in base_model.layers[-4:]:  # Unfreeze last 4 layers
        layer.trainable = True

• Retrains the last few layers to adapt high-level features to your dataset

• Use a very small learning rate, and recompile after changing trainable so the change takes effect:

    from tensorflow.keras.optimizers import Adam

    model.compile(optimizer=Adam(learning_rate=1e-5),
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
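It helps to see exactly which layers a slice like `layers[-4:]` selects. The sketch below imitates the freeze/unfreeze logic on stand-in objects instead of a real Keras model. `FakeLayer` is a hypothetical helper, the layer names follow the Keras VGG16 naming for a base built without the top classifier, and the input layer's name is a placeholder.

```python
from dataclasses import dataclass


@dataclass
class FakeLayer:
    """Stand-in for a Keras layer: just a name and a trainable flag."""
    name: str
    trainable: bool = True


def vgg16_base_layer_names():
    """Layer names of the VGG16 conv base (input layer name is a placeholder)."""
    convs_per_block = [2, 2, 3, 3, 3]
    names = ["input"]
    for block, n_convs in enumerate(convs_per_block, start=1):
        names += [f"block{block}_conv{i}" for i in range(1, n_convs + 1)]
        names.append(f"block{block}_pool")
    return names


layers = [FakeLayer(name) for name in vgg16_base_layer_names()]

# Feature-extraction phase: freeze the whole base.
for layer in layers:
    layer.trainable = False

# Fine-tuning phase: unfreeze only the last 4 layers.
for layer in layers[-4:]:
    layer.trainable = True

unfrozen = [layer.name for layer in layers if layer.trainable]
print(unfrozen)
```

Under these assumptions the last four layers are the three convolutions of block 5 plus its pooling layer, so the slice covers one block, and only the three convolutions carry weights to update. In real Keras code, if the base was frozen earlier with `base_model.trainable = False`, that flag probably has to be set back to `True` (with the earlier layers re-frozen one by one) before the per-layer flags take effect.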

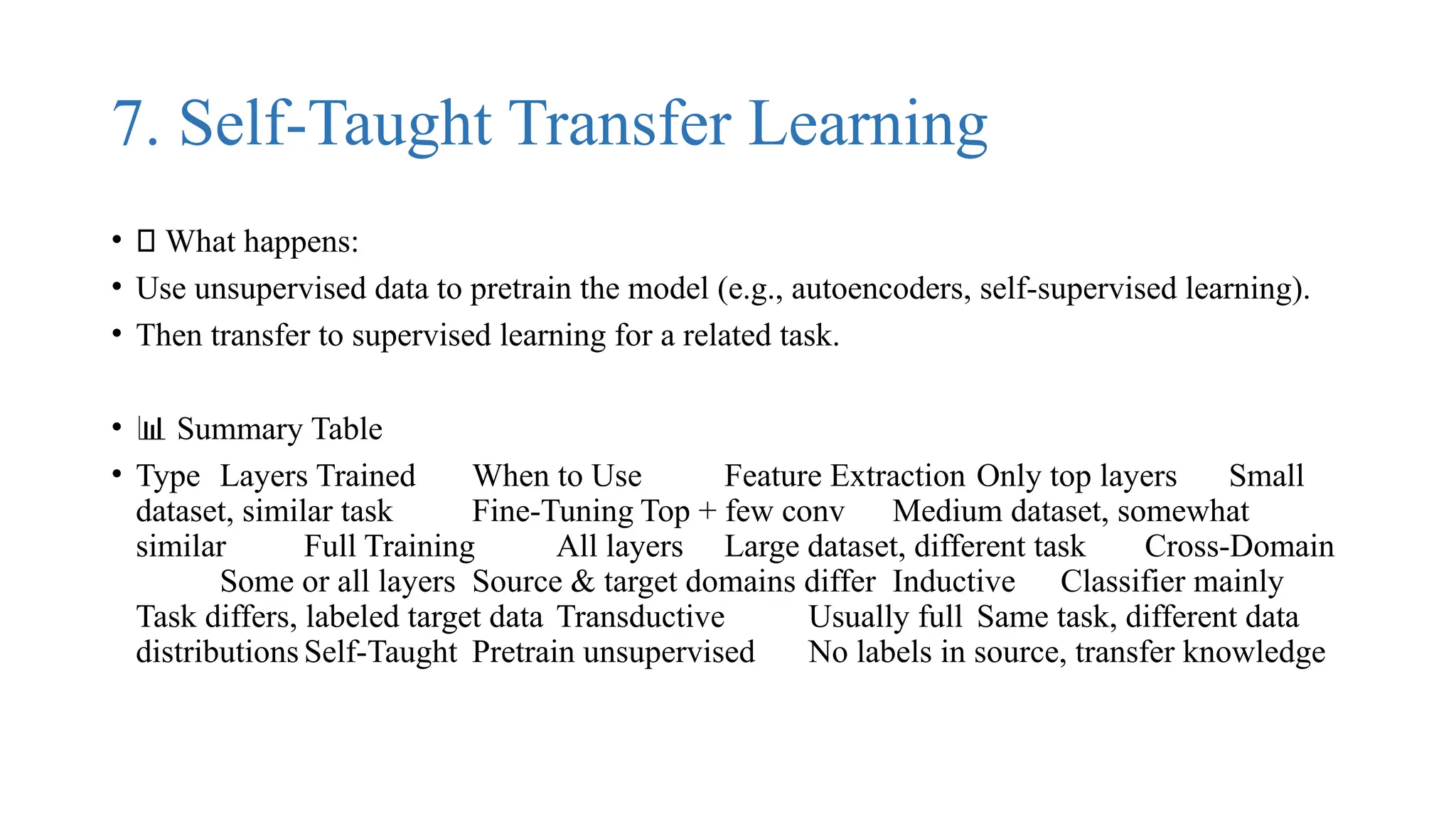

![Slide 28: Fine-Tuning](https://image.slidesharecdn.com/introductionmotivation-250414142149-668ca54c/75/Introduction-to-transfer-learning-aster-way-of-adapting-a-neural-network-by-exploiting-their-generalization-properties-pptx-28-2048.jpg)

2. Fine-Tuning

🔹 What happens:

• Start with a pretrained model.

• Unfreeze some of the deeper layers (usually the last few blocks).

• Retrain both the classifier and the unfrozen conv layers with a low learning rate.

✅ When to use:

• Moderate or large dataset

• Your new task is somewhat similar to the original one but needs adaptation

Example:

    for layer in base_model.layers[-10:]:
        layer.trainable = True
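A fixed slice such as `layers[-10:]` does not necessarily line up with block boundaries. The sketch below compares that slice with selecting whole blocks by name, assuming the base is VGG16 with Keras-style layer names. `layers_in_last_blocks` is a hypothetical helper, not a Keras function, and the input layer's name is a placeholder.

```python
def vgg16_base_layer_names():
    """Layer names of the VGG16 conv base (input layer name is a placeholder)."""
    convs_per_block = [2, 2, 3, 3, 3]
    names = ["input"]
    for block, n_convs in enumerate(convs_per_block, start=1):
        names += [f"block{block}_conv{i}" for i in range(1, n_convs + 1)]
        names.append(f"block{block}_pool")
    return names


def layers_in_last_blocks(names, n_blocks):
    """Return the layer names that belong to the last n_blocks 'blockN' groups."""
    blocks = []
    for name in names:
        prefix = name.split("_")[0]
        if prefix.startswith("block") and prefix not in blocks:
            blocks.append(prefix)
    keep = set(blocks[-n_blocks:]) if n_blocks > 0 else set()
    return [name for name in names if name.split("_")[0] in keep]


names = vgg16_base_layer_names()
by_slice = names[-10:]                      # what layers[-10:] would select
by_block = layers_in_last_blocks(names, 2)  # whole blocks 4 and 5

print("layers[-10:] starts at:", by_slice[0])
print("last 2 blocks start at:", by_block[0])
```

With these names the ten-layer slice begins partway through block 3, while selecting the last two blocks yields eight layers starting at the first convolution of block 4. Either choice can work; selecting by block simply makes the intent ("unfreeze the last few blocks") explicit.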