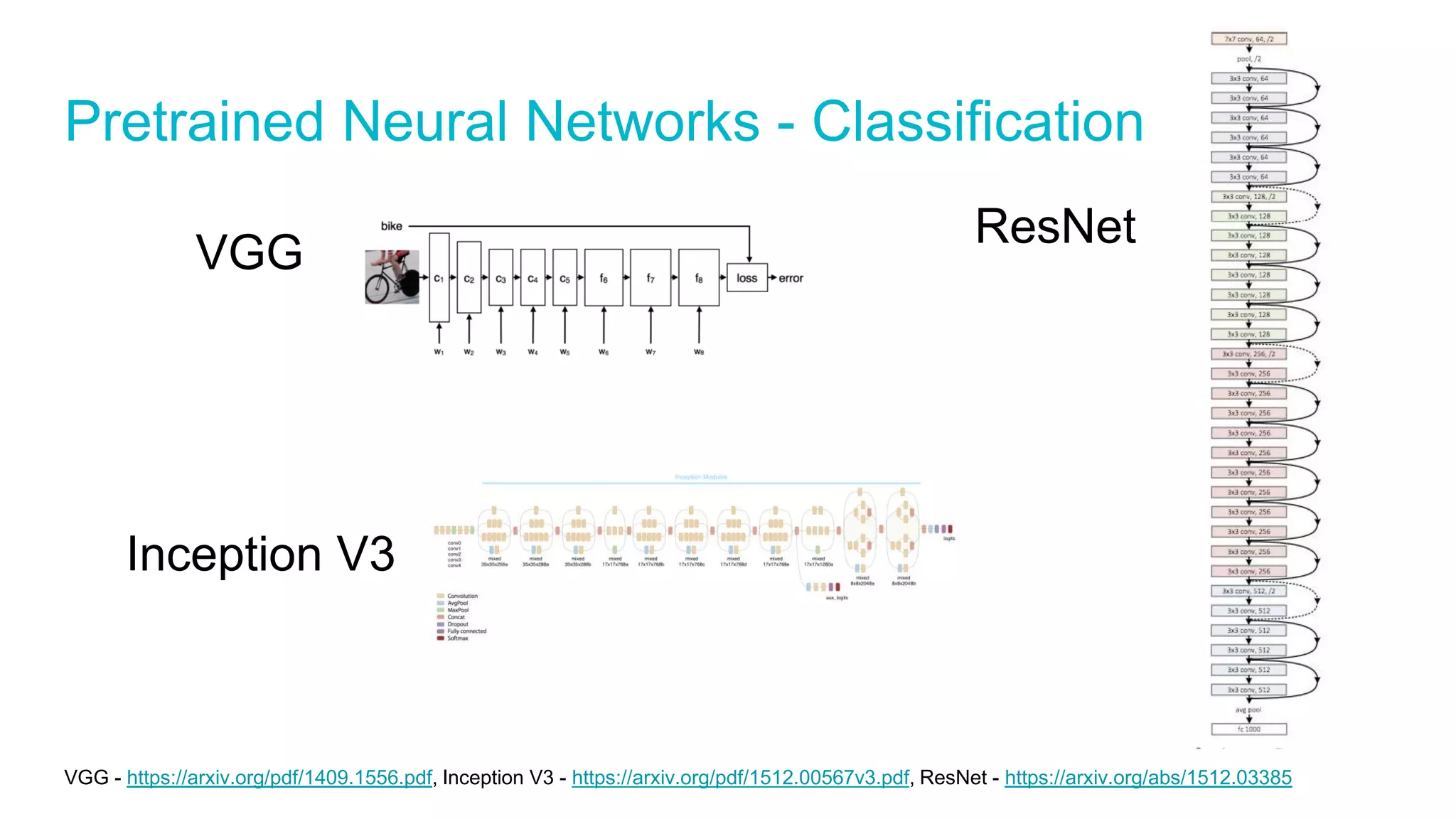

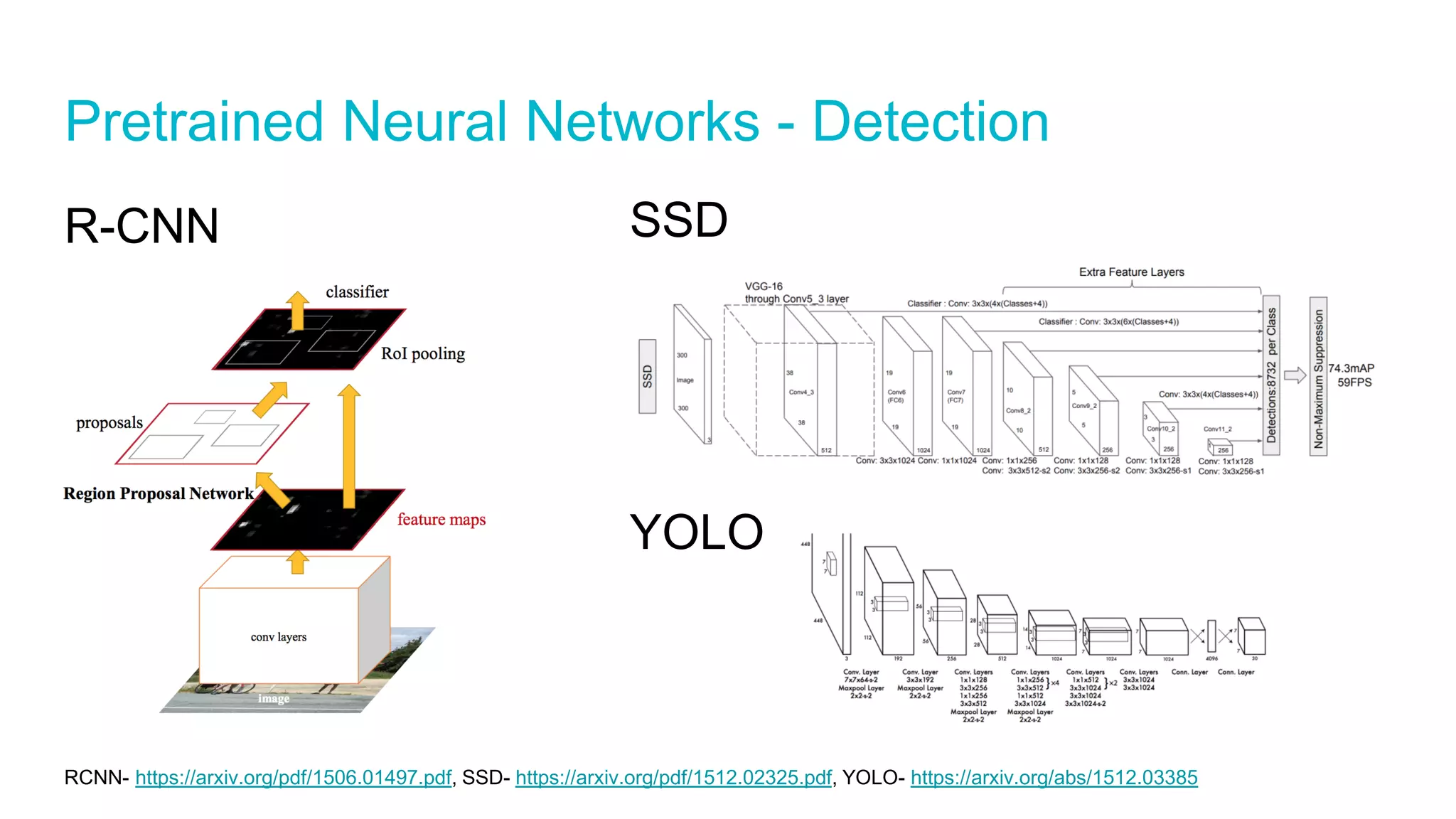

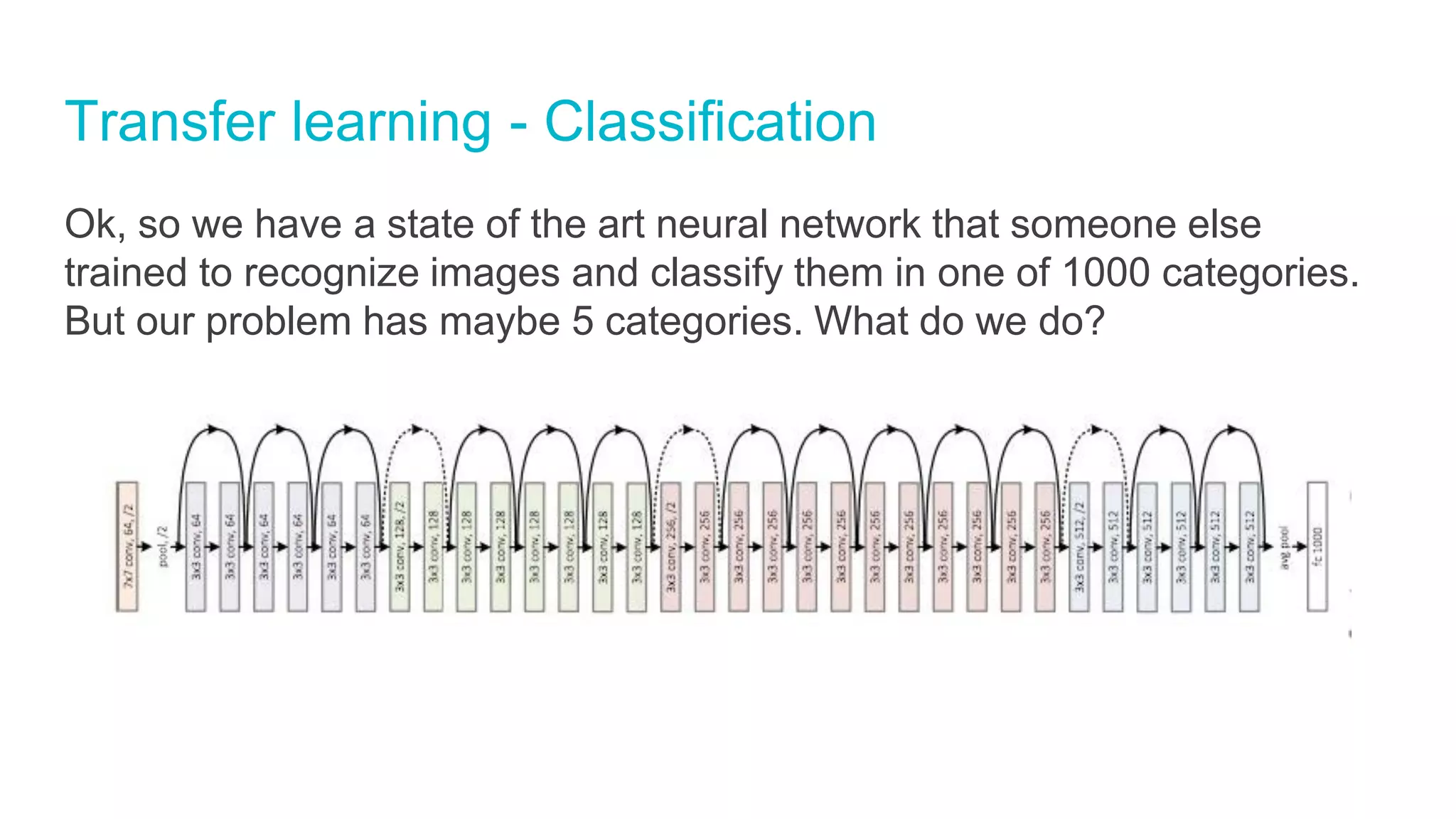

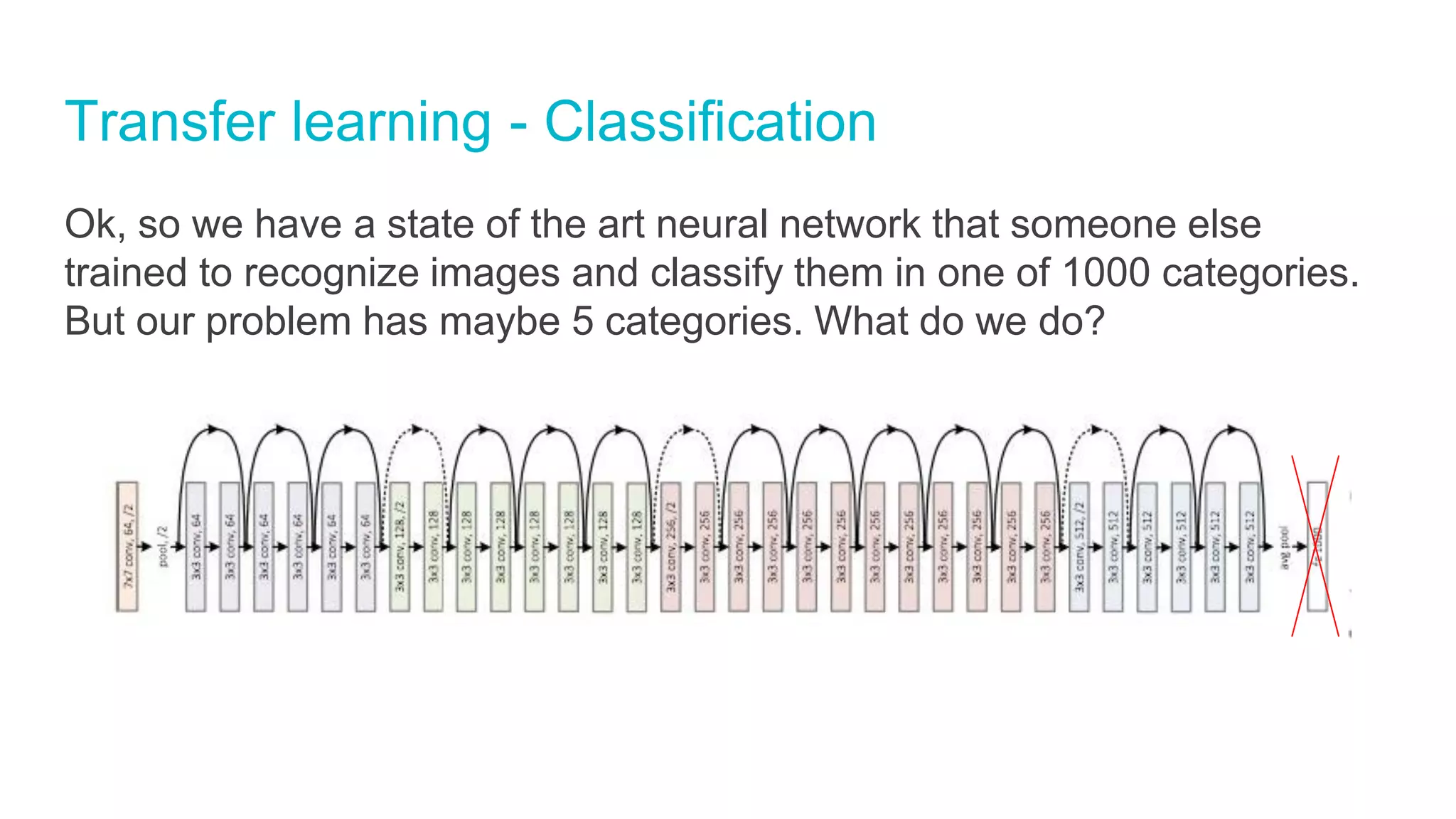

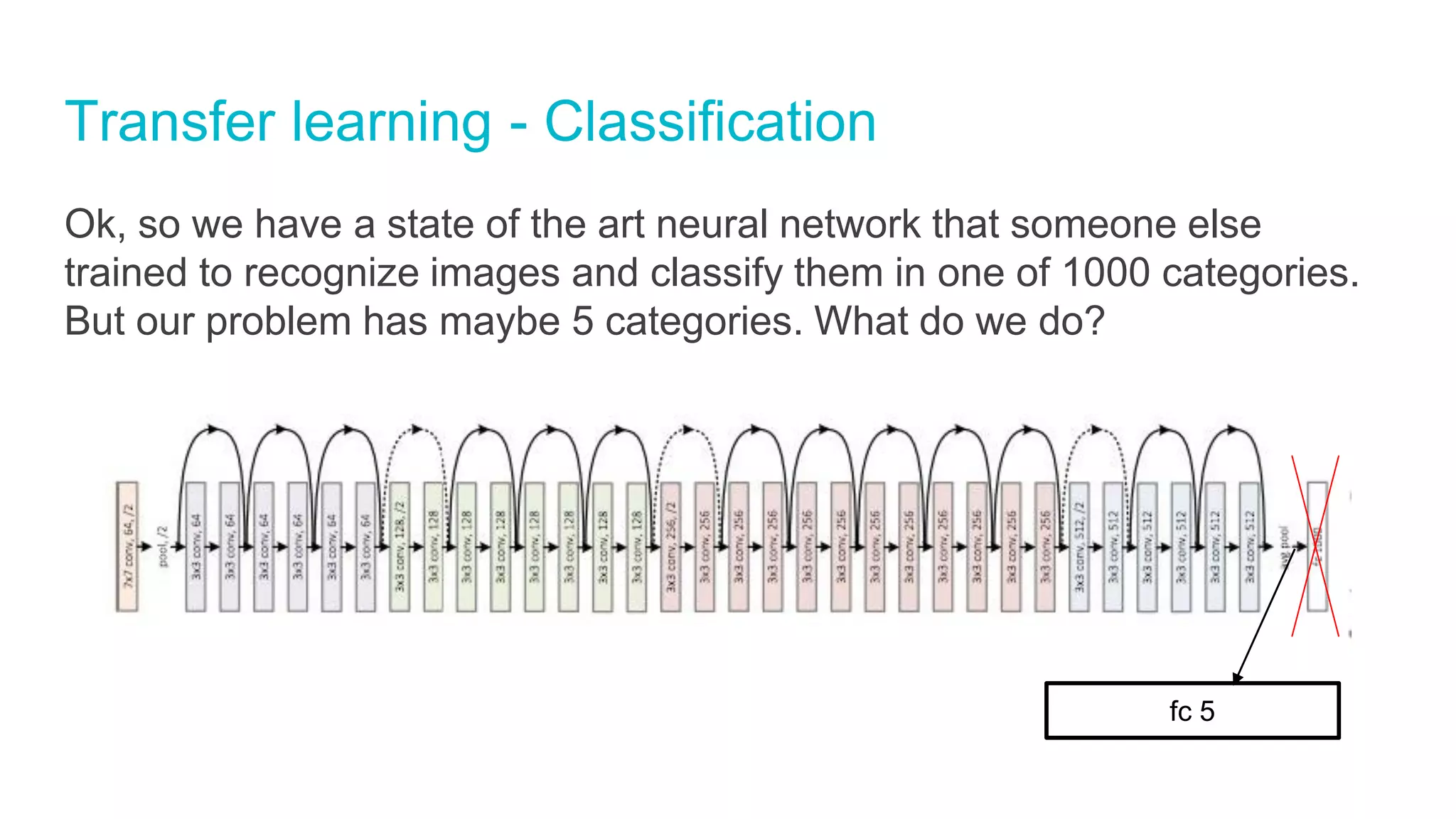

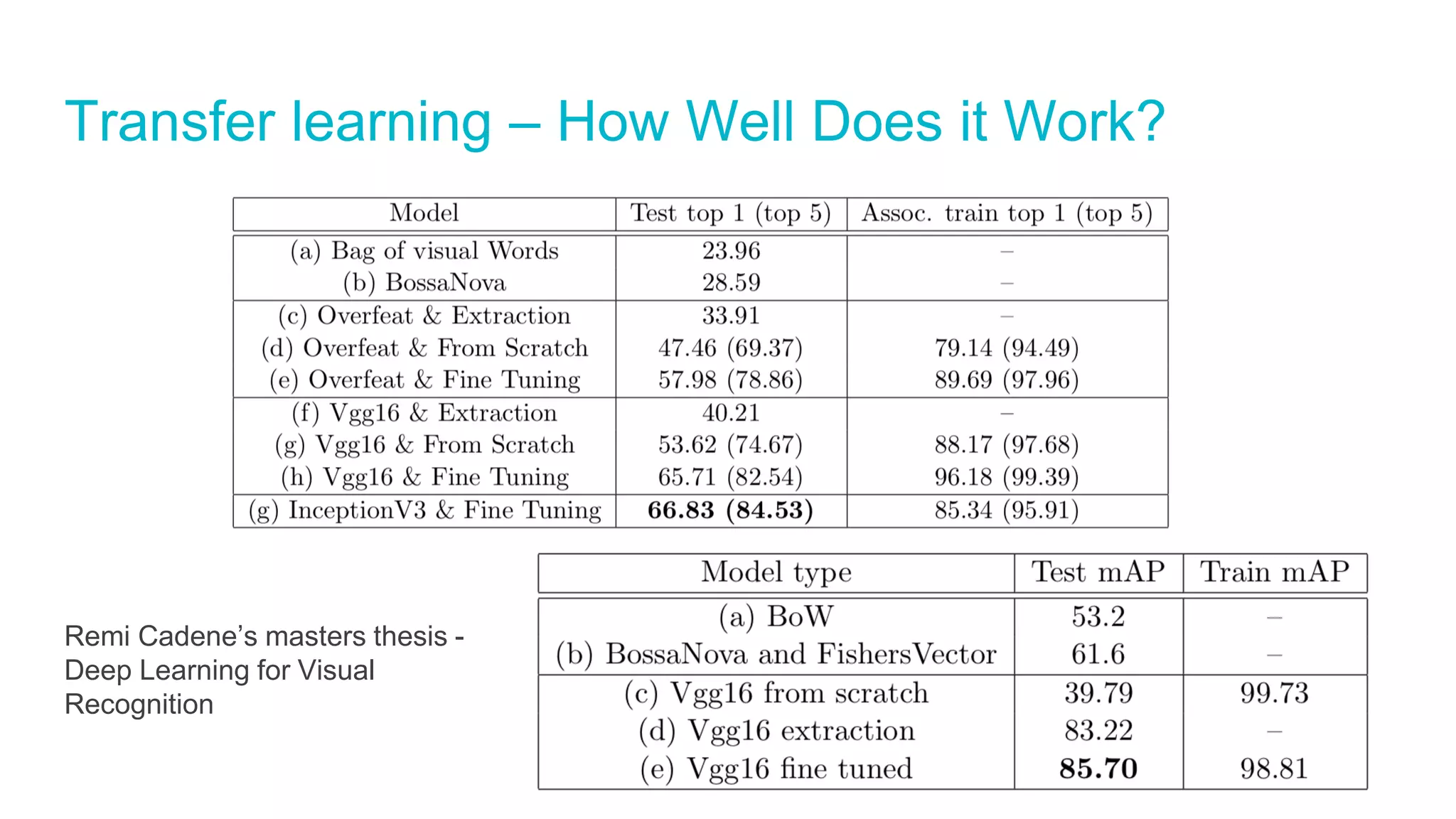

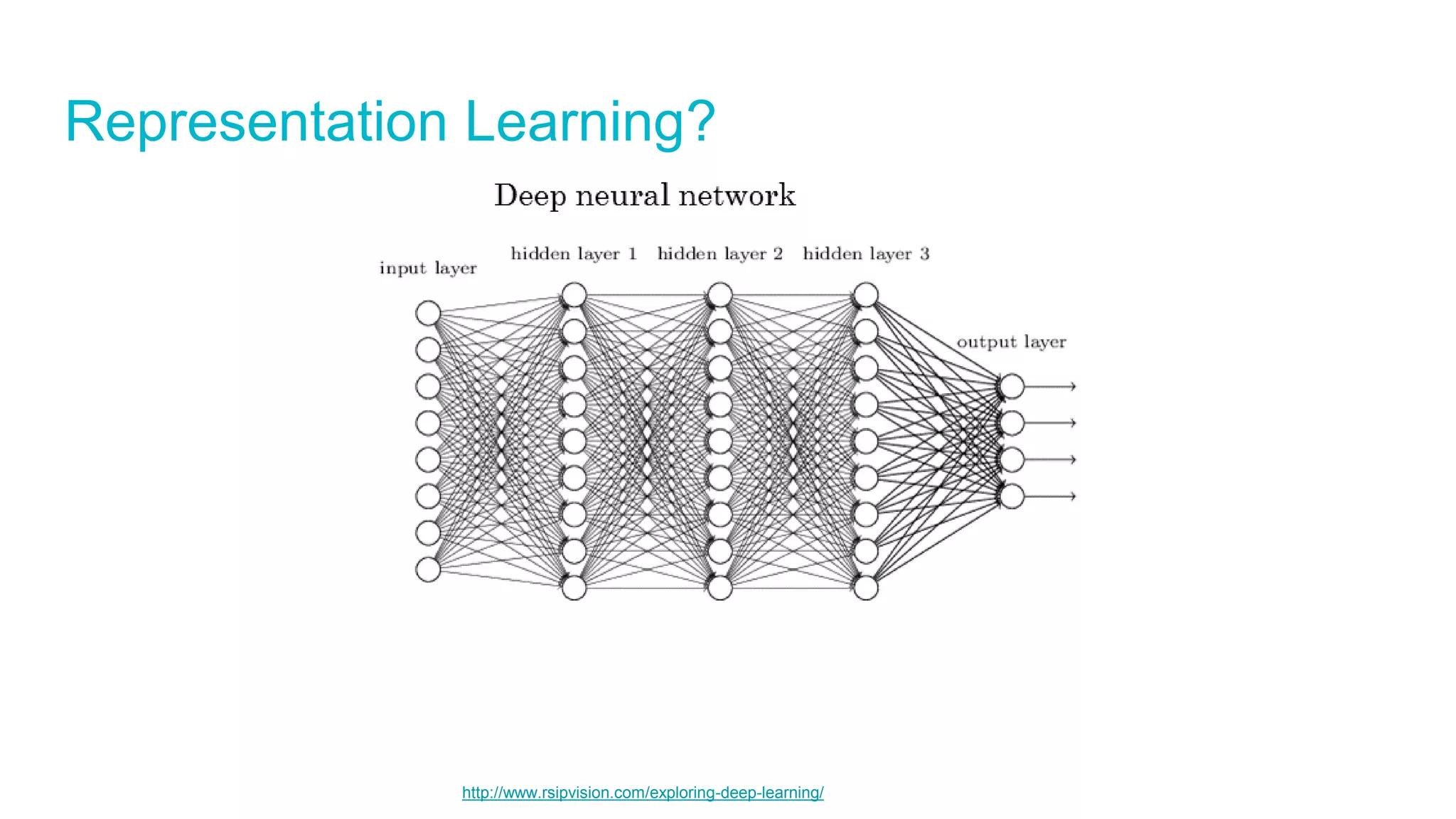

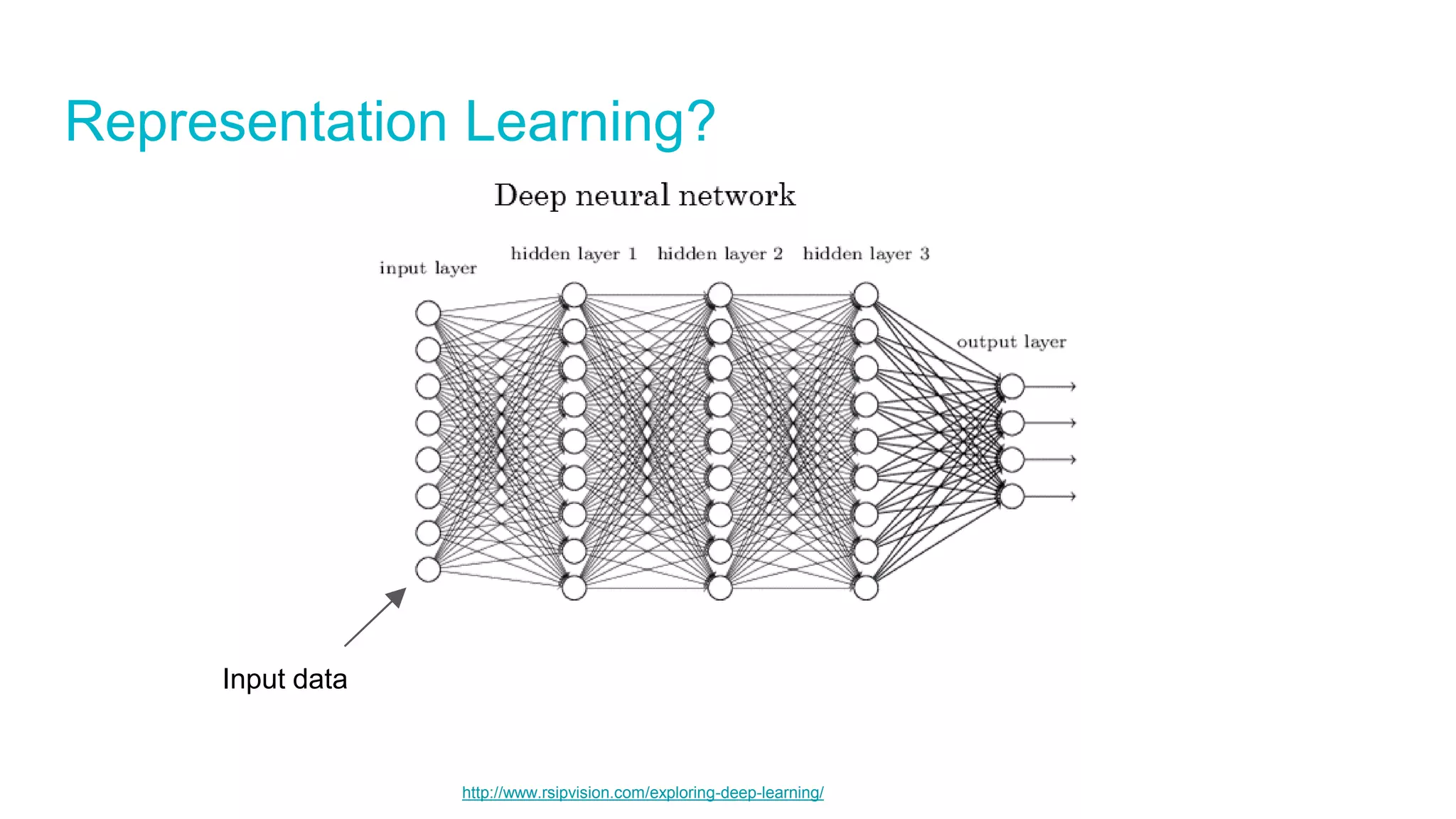

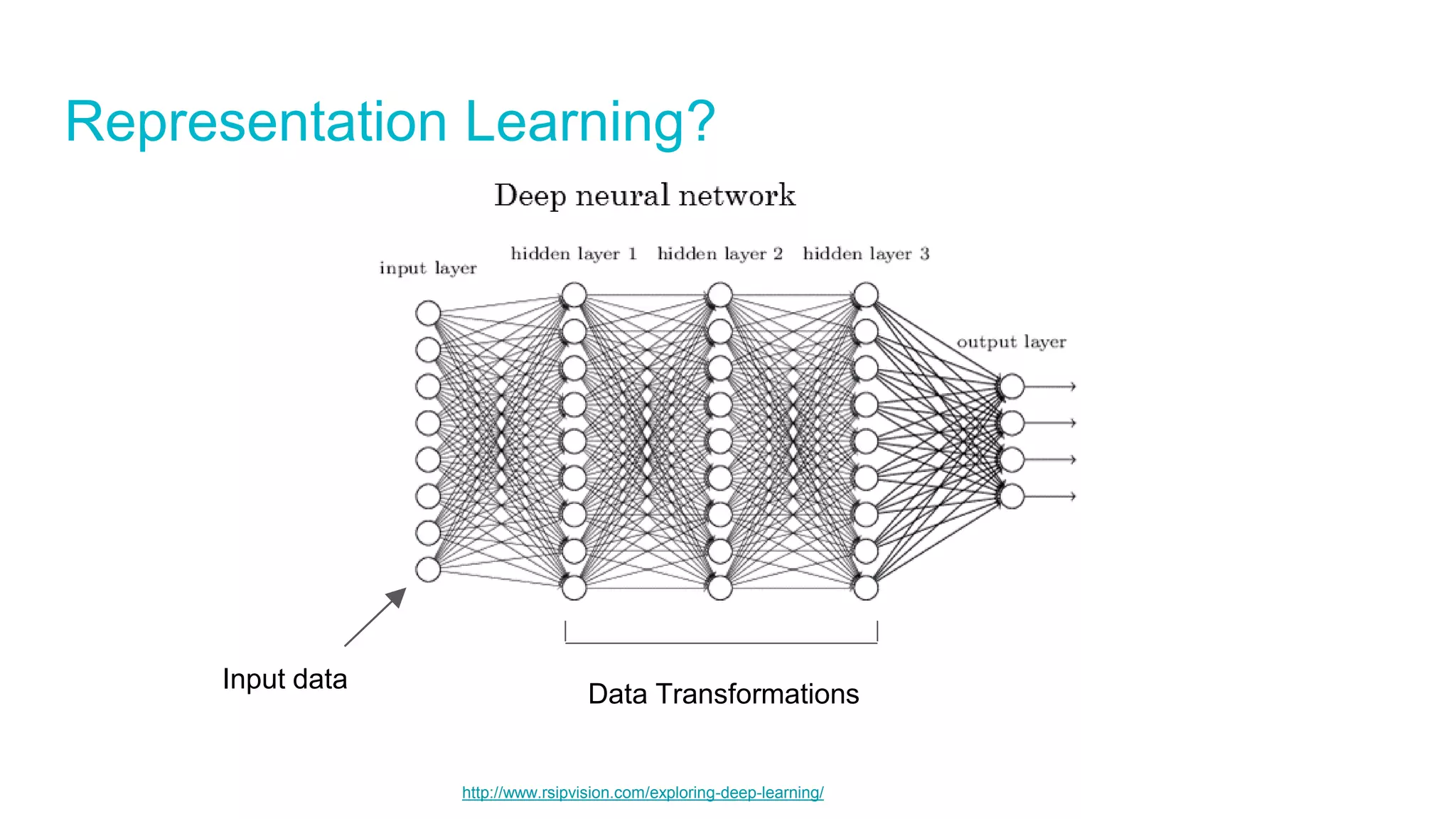

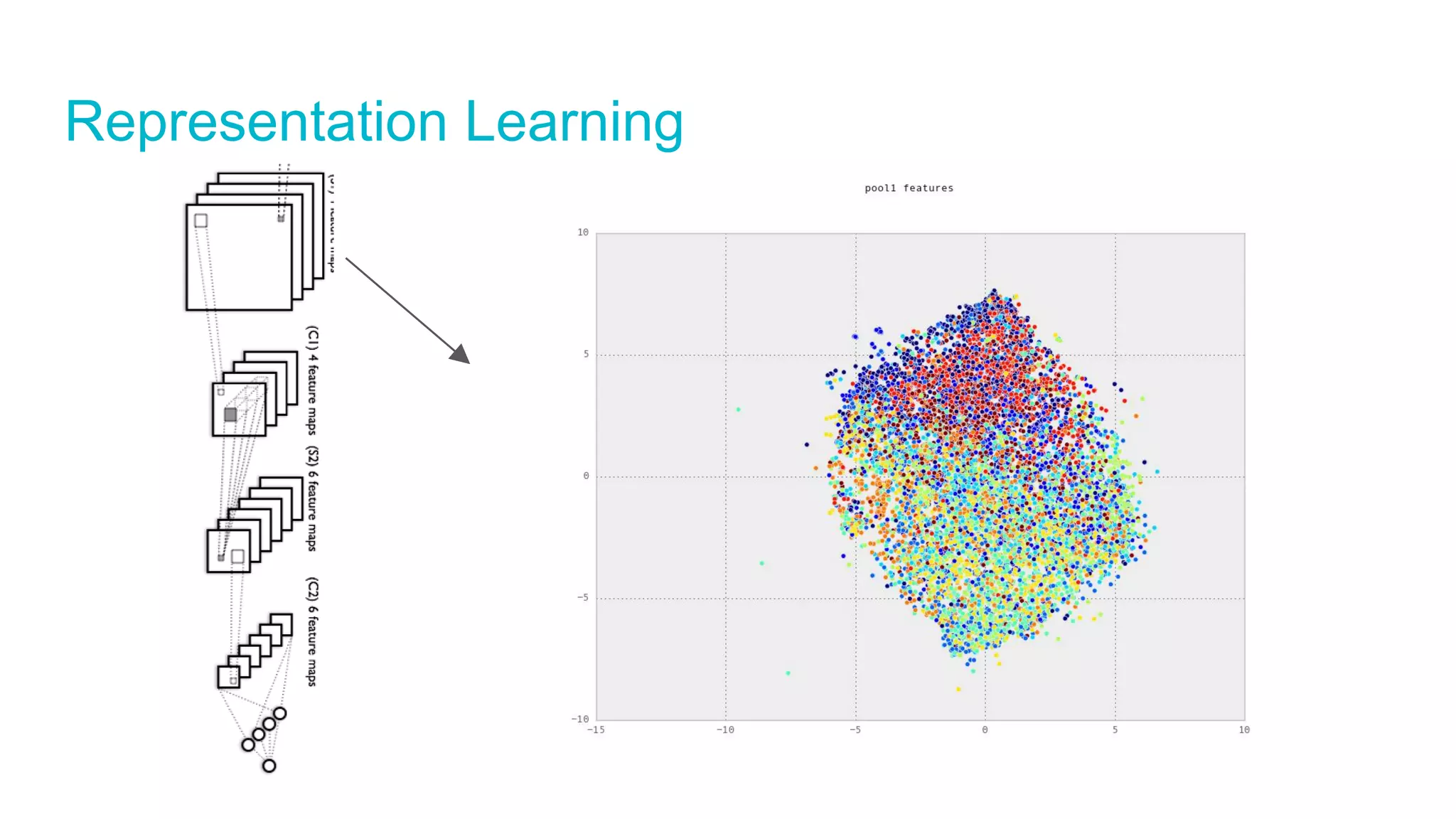

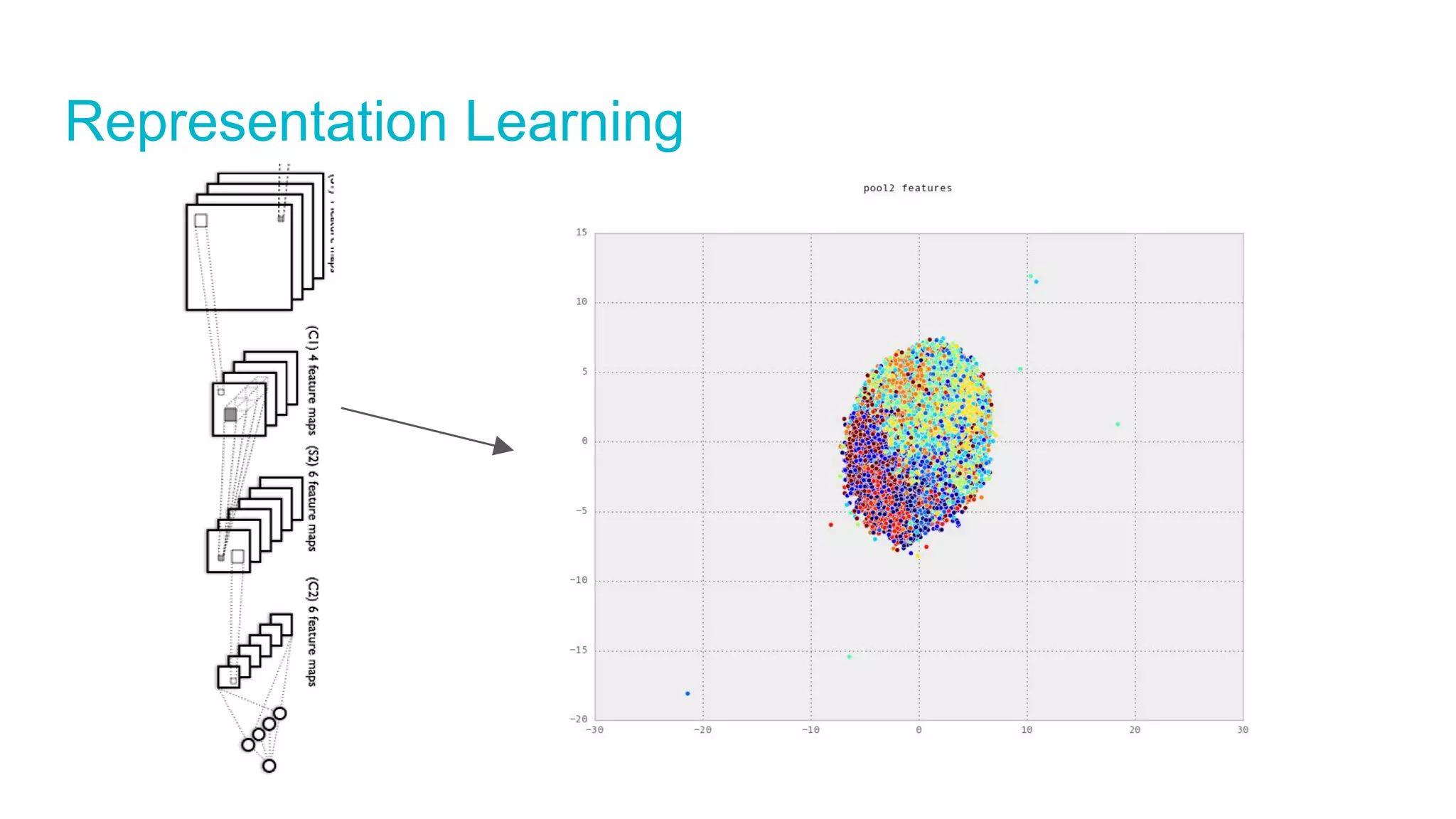

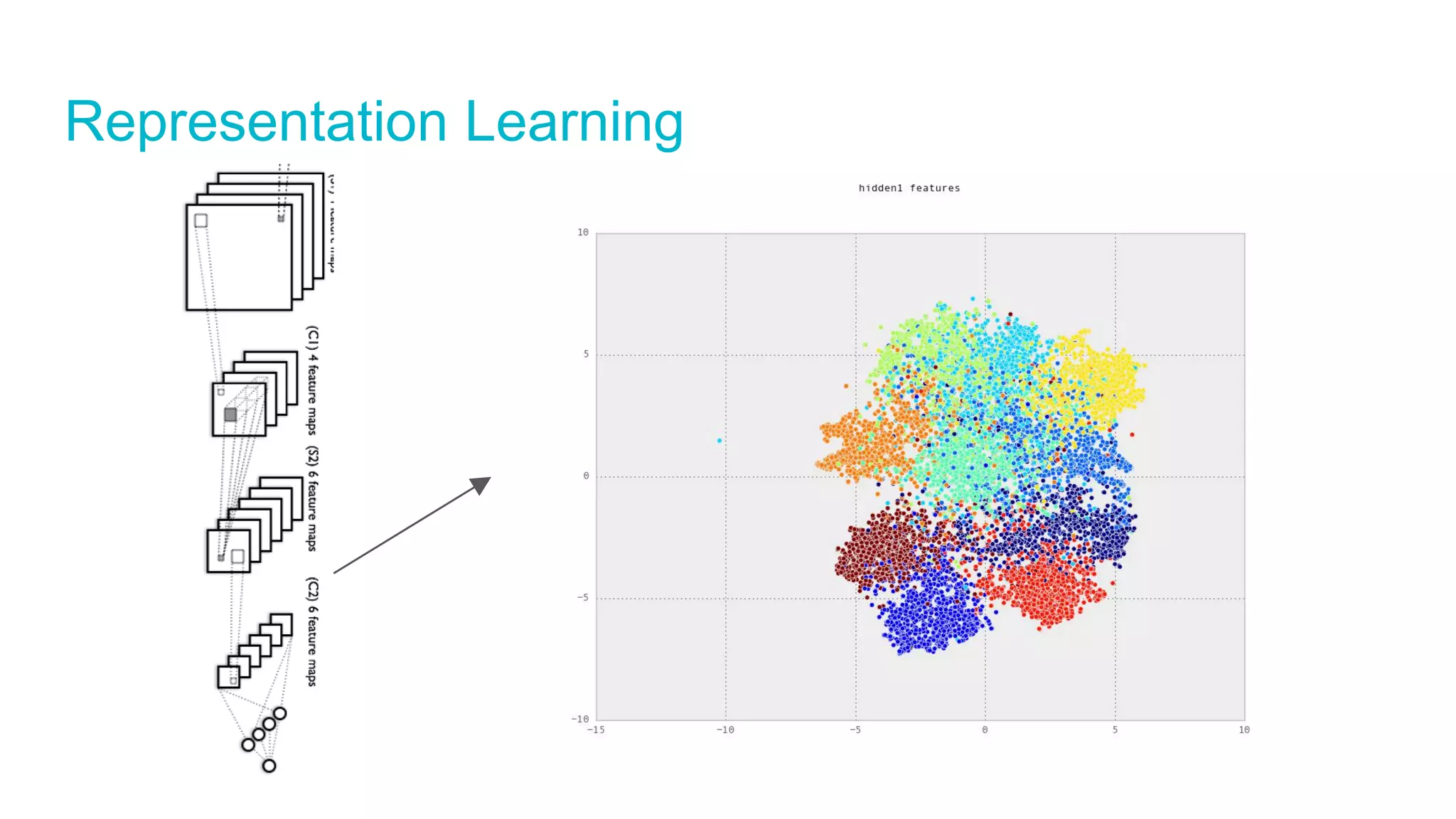

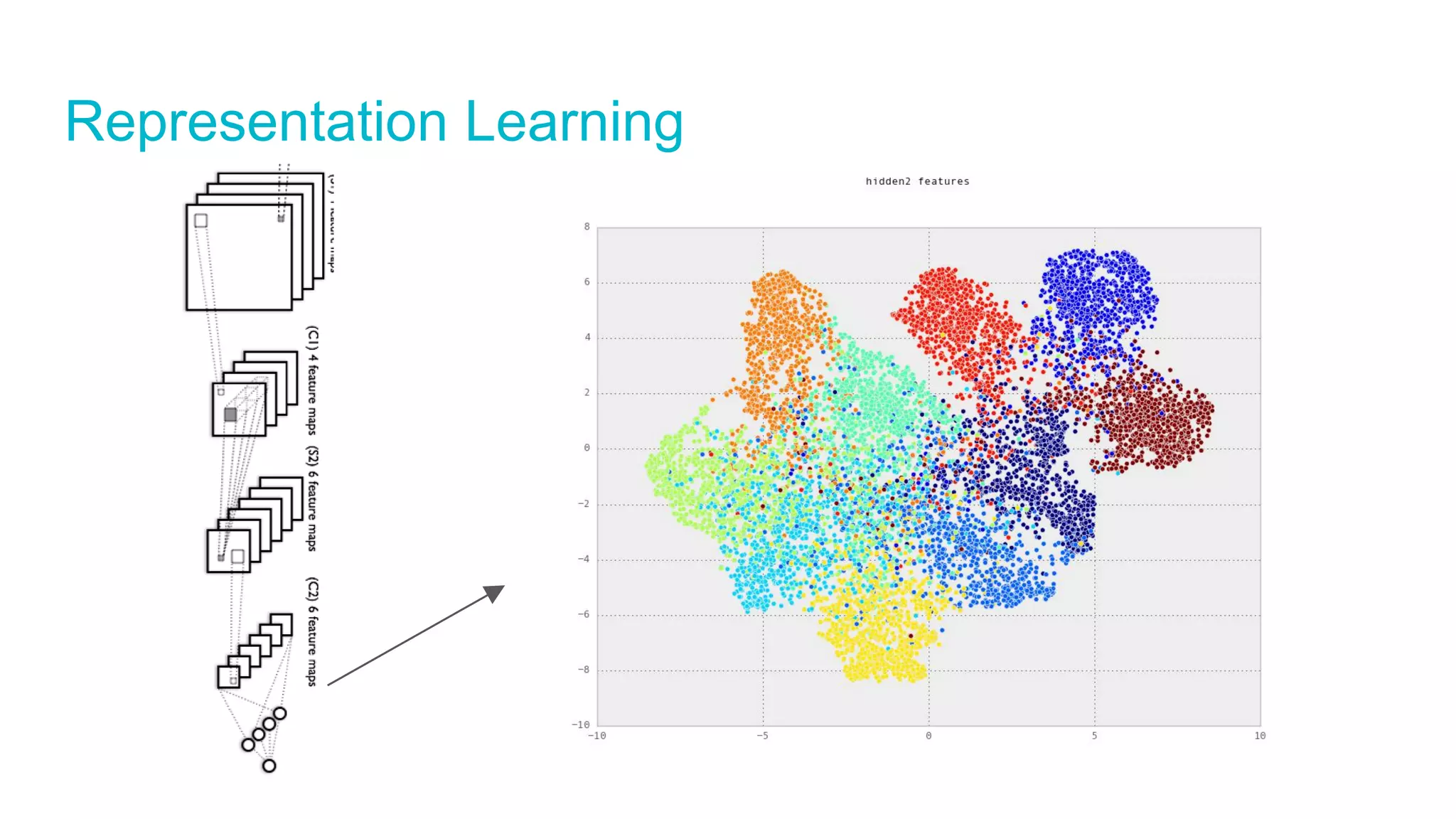

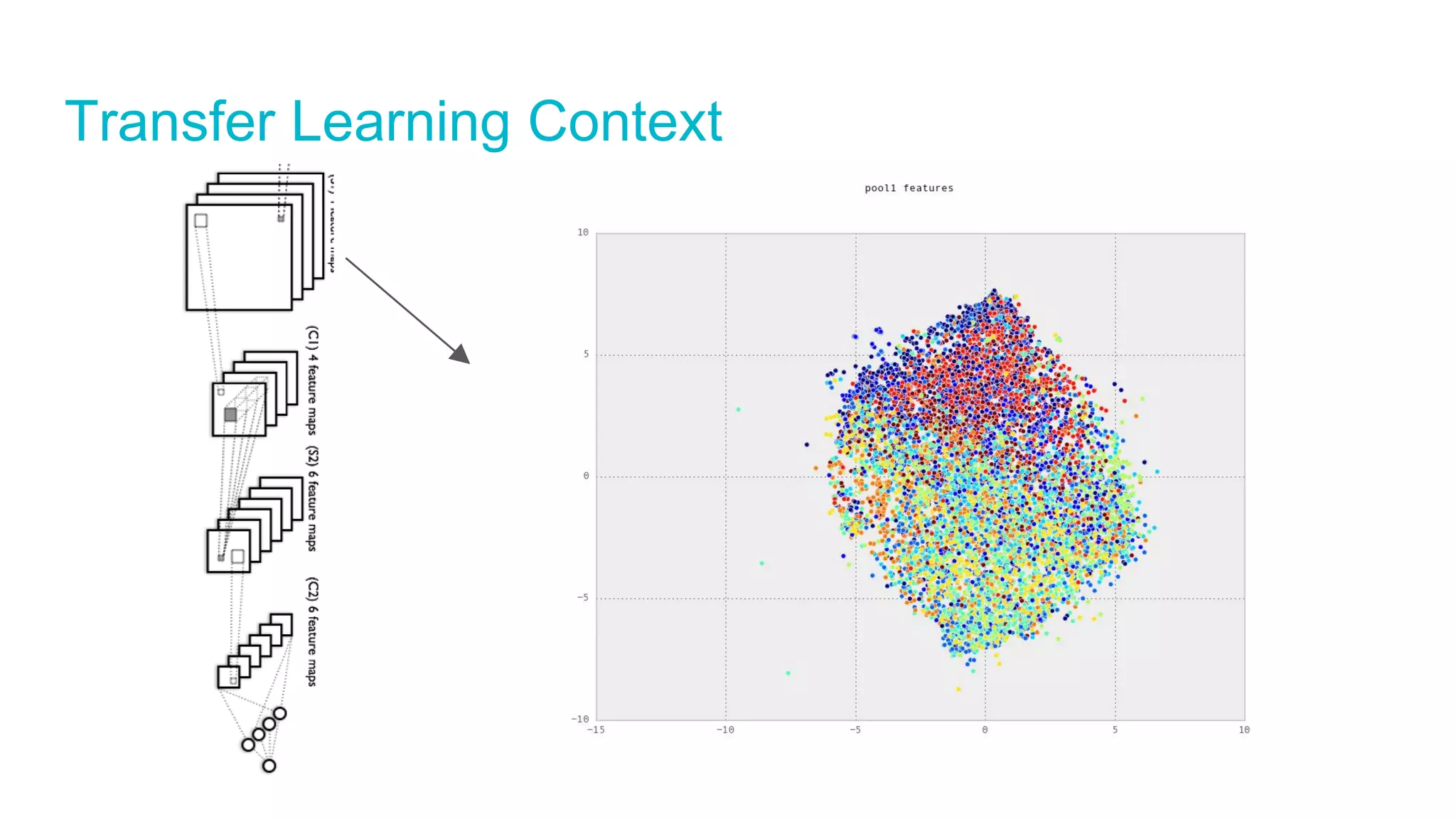

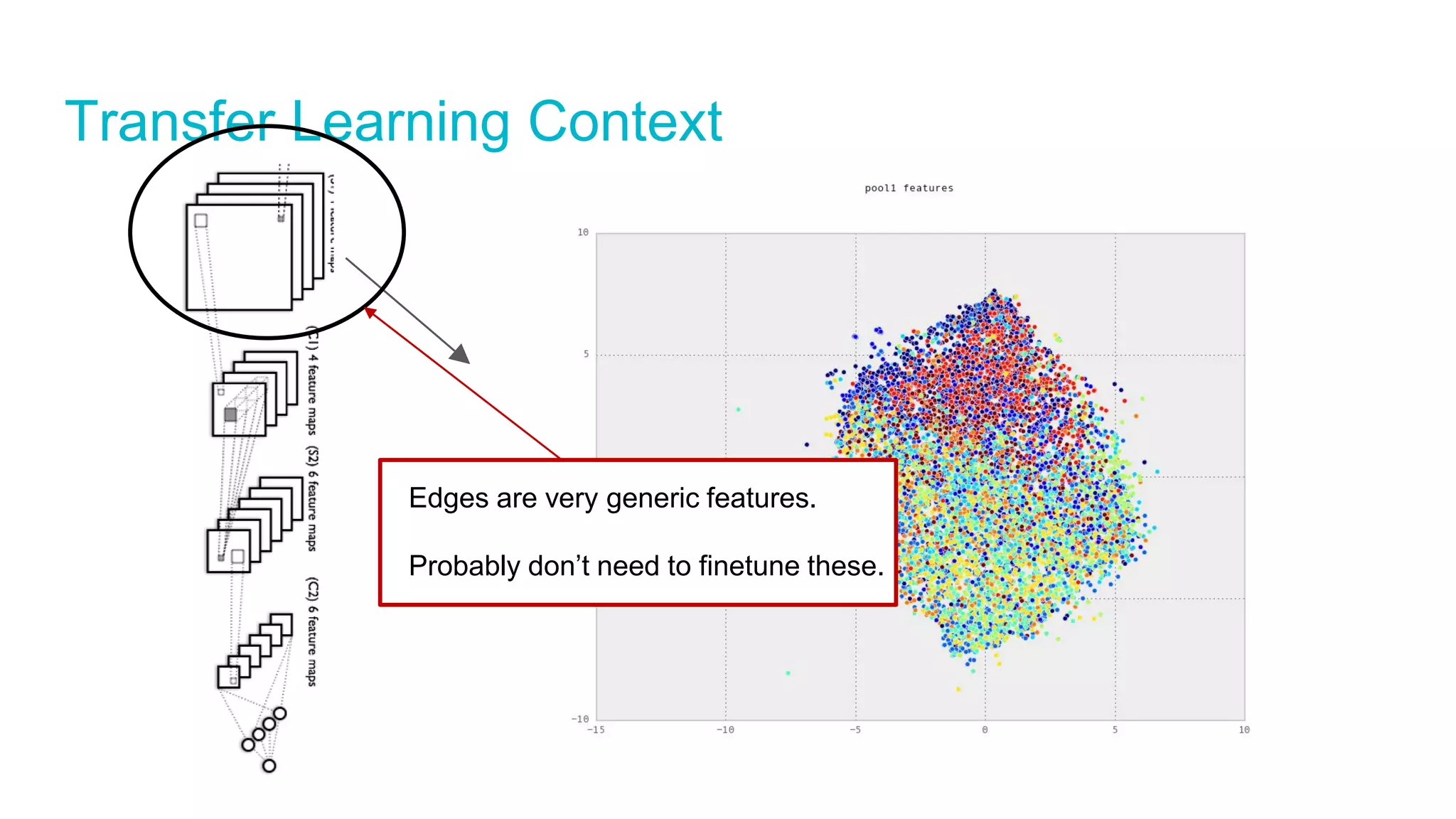

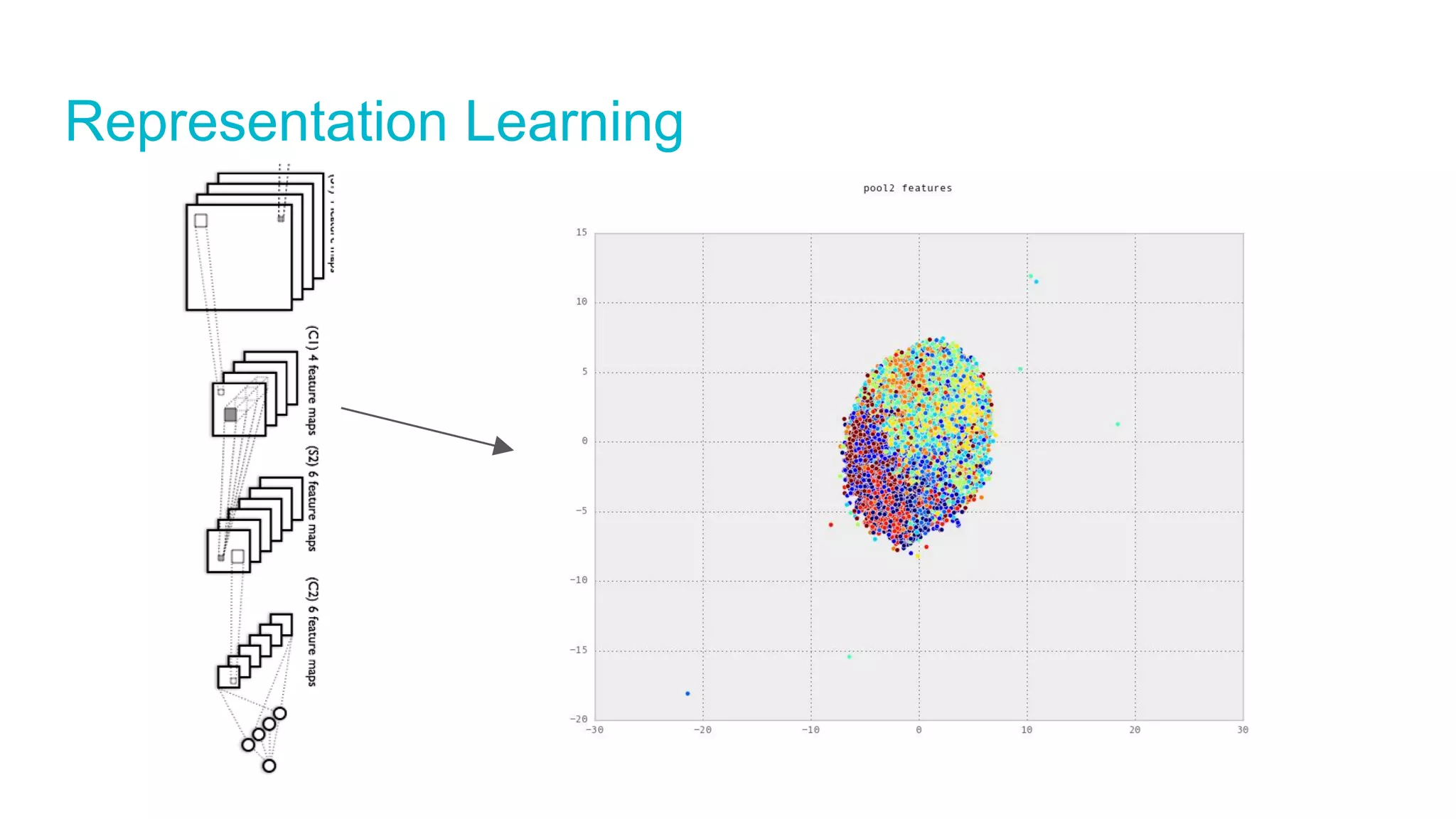

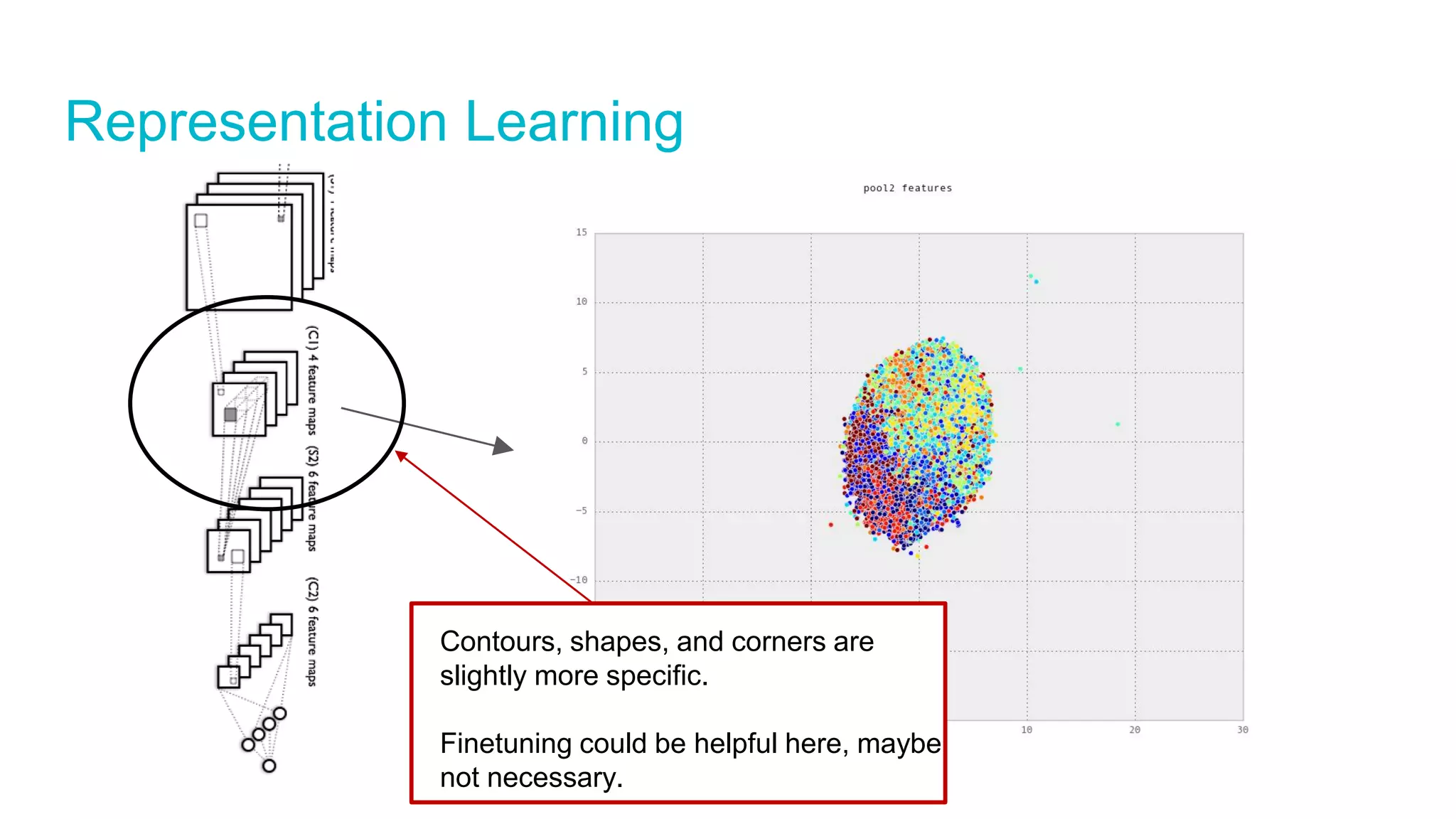

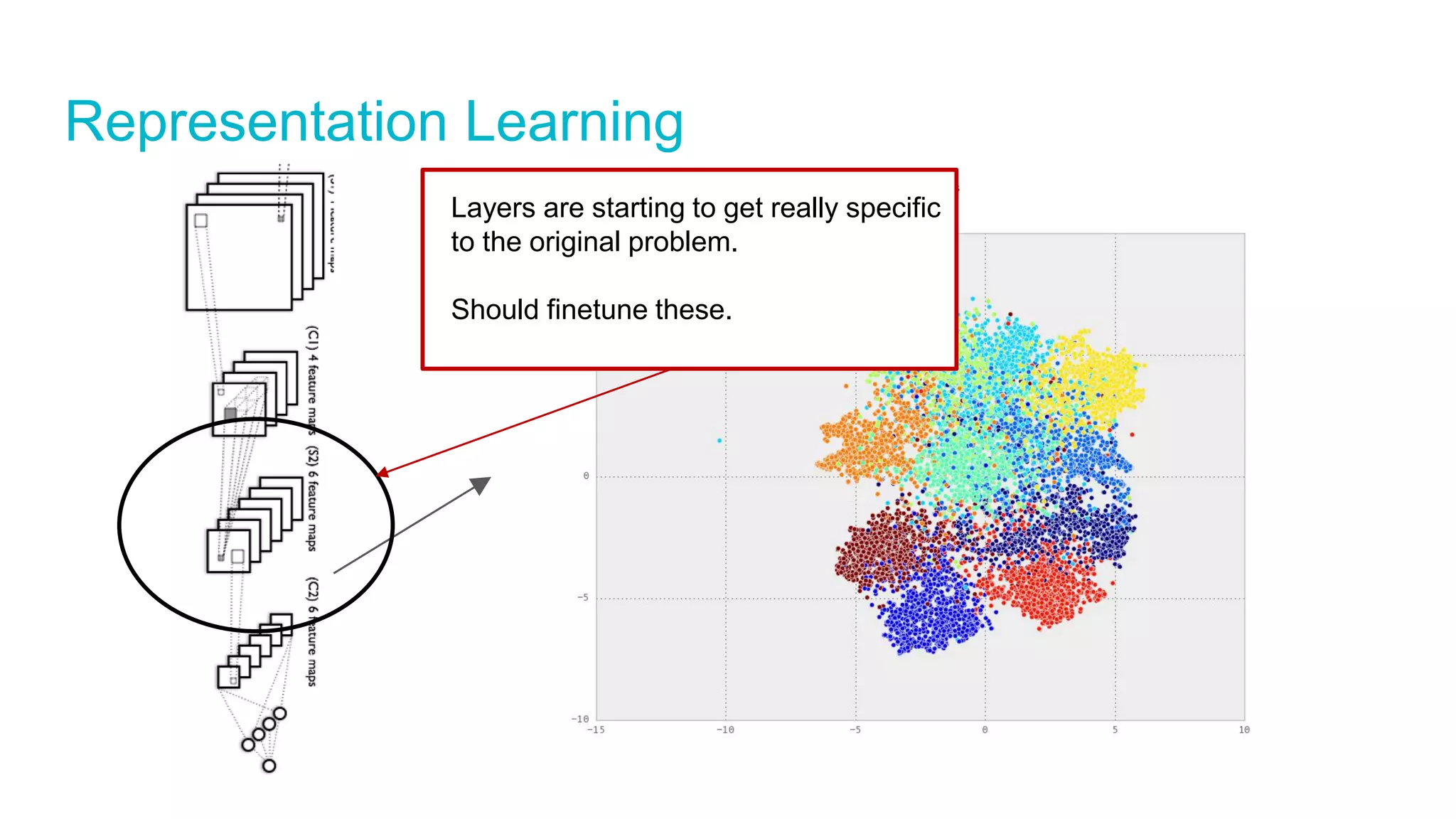

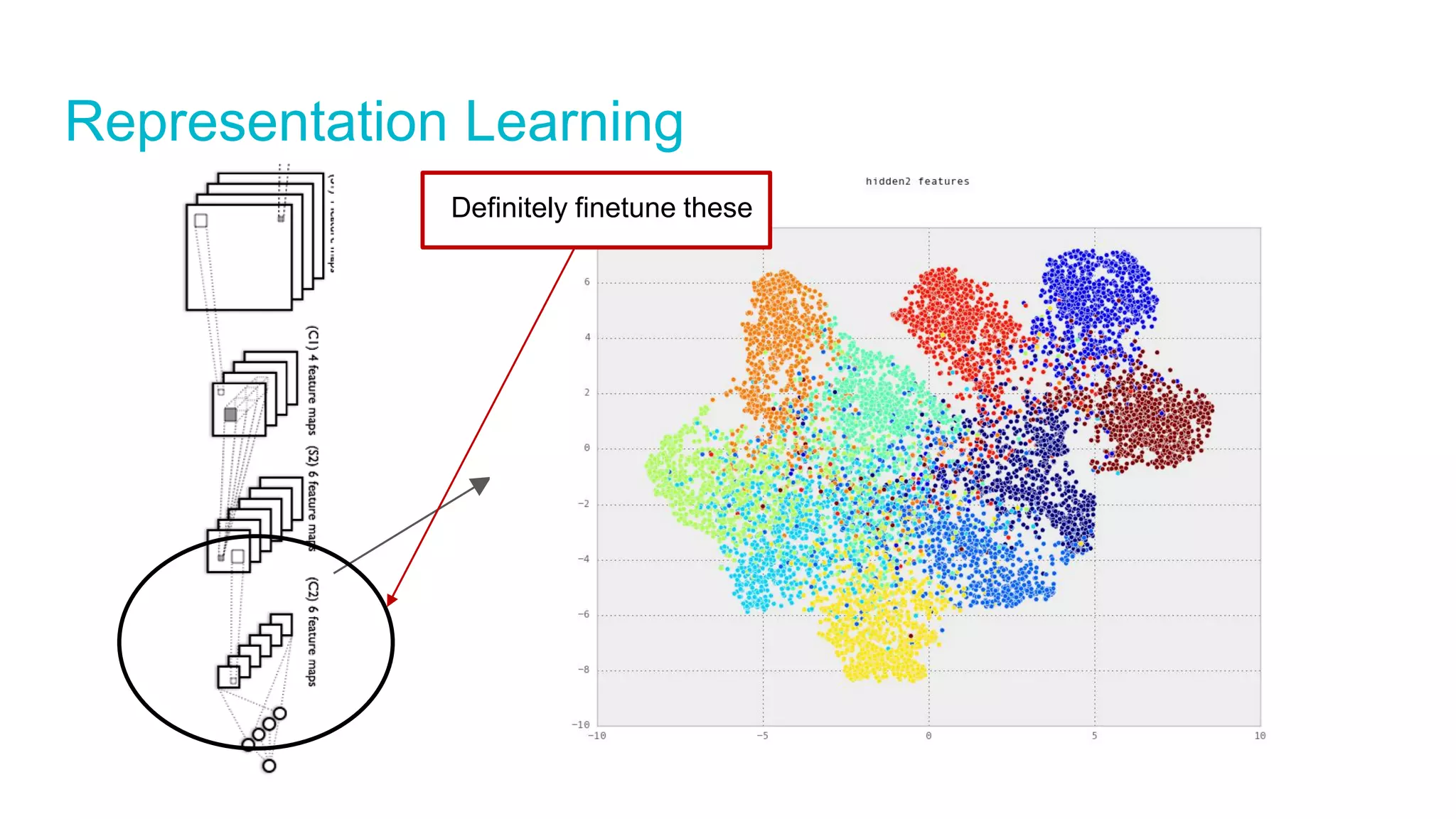

The document explains transfer learning: adapting a pre-trained neural network to a new dataset, which is especially useful when training data for the new problem is limited. It outlines how to apply transfer learning to both classification and detection tasks, with emphasis on two techniques, feature extraction and fine-tuning. It also links to relevant research and to further resources for different programming frameworks.
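The difference between feature extraction and fine-tuning can be illustrated with a small sketch. This is not code from the slides. It uses only the Python standard library, and every name in it (`make_backbone`, `features`, `train`, the toy `data`) is hypothetical. A fixed random tanh layer stands in for a pre-trained network, which in practice would be loaded from a framework's model zoo. Under those assumptions, feature extraction trains only a new output layer on top of frozen features, while fine-tuning also updates the backbone weights with a smaller learning rate.

```python
import math
import random


def make_backbone(seed=0, in_dim=2, hidden=8):
    """Stand-in for a pre-trained network: one fixed tanh layer (assumption)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in range(in_dim)] for _ in range(hidden)]
    b = [rng.uniform(-1, 1) for _ in range(hidden)]
    return W, b


def features(backbone, x):
    """Forward pass through the backbone layer."""
    W, b = backbone
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bi)
            for row, bi in zip(W, b)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(backbone, data, epochs=200, lr=0.5, fine_tune=False, backbone_lr=0.01):
    """Train a new logistic head; optionally fine-tune the backbone as well."""
    # Work on copies so the "pre-trained" weights passed in are never mutated.
    W = [row[:] for row in backbone[0]]
    b = backbone[1][:]
    head_w = [0.0] * len(W)  # new task-specific layer, trained from scratch
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            h = features((W, b), x)
            p = sigmoid(sum(hw * hi for hw, hi in zip(head_w, h)) + head_b)
            err = p - y  # gradient of the log-loss with respect to the logit
            if fine_tune:
                # Backpropagate through tanh and take a small backbone step.
                for j in range(len(W)):
                    g = err * head_w[j] * (1.0 - h[j] ** 2)
                    for i in range(len(x)):
                        W[j][i] -= backbone_lr * g * x[i]
                    b[j] -= backbone_lr * g
            # The head is updated in both modes.
            for j in range(len(head_w)):
                head_w[j] -= lr * err * h[j]
            head_b -= lr * err
    return (W, b), head_w, head_b


# Tiny made-up binary dataset for the "new" task.
data = [((1.0, 1.0), 1), ((-1.0, -1.0), 0), ((1.5, 0.5), 1), ((-0.5, -1.5), 0)]

pretrained = make_backbone()
frozen_backbone, _, _ = train(pretrained, data, fine_tune=False)
tuned_backbone, _, _ = train(pretrained, data, fine_tune=True)

print("feature extraction left backbone unchanged:", frozen_backbone == pretrained)
print("fine-tuning modified backbone:", tuned_backbone != pretrained)
```

The backbone learning rate is deliberately smaller than the head's. This is a common choice when fine-tuning, since the pre-trained weights are assumed to be a good starting point already and large updates risk erasing what they encode.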