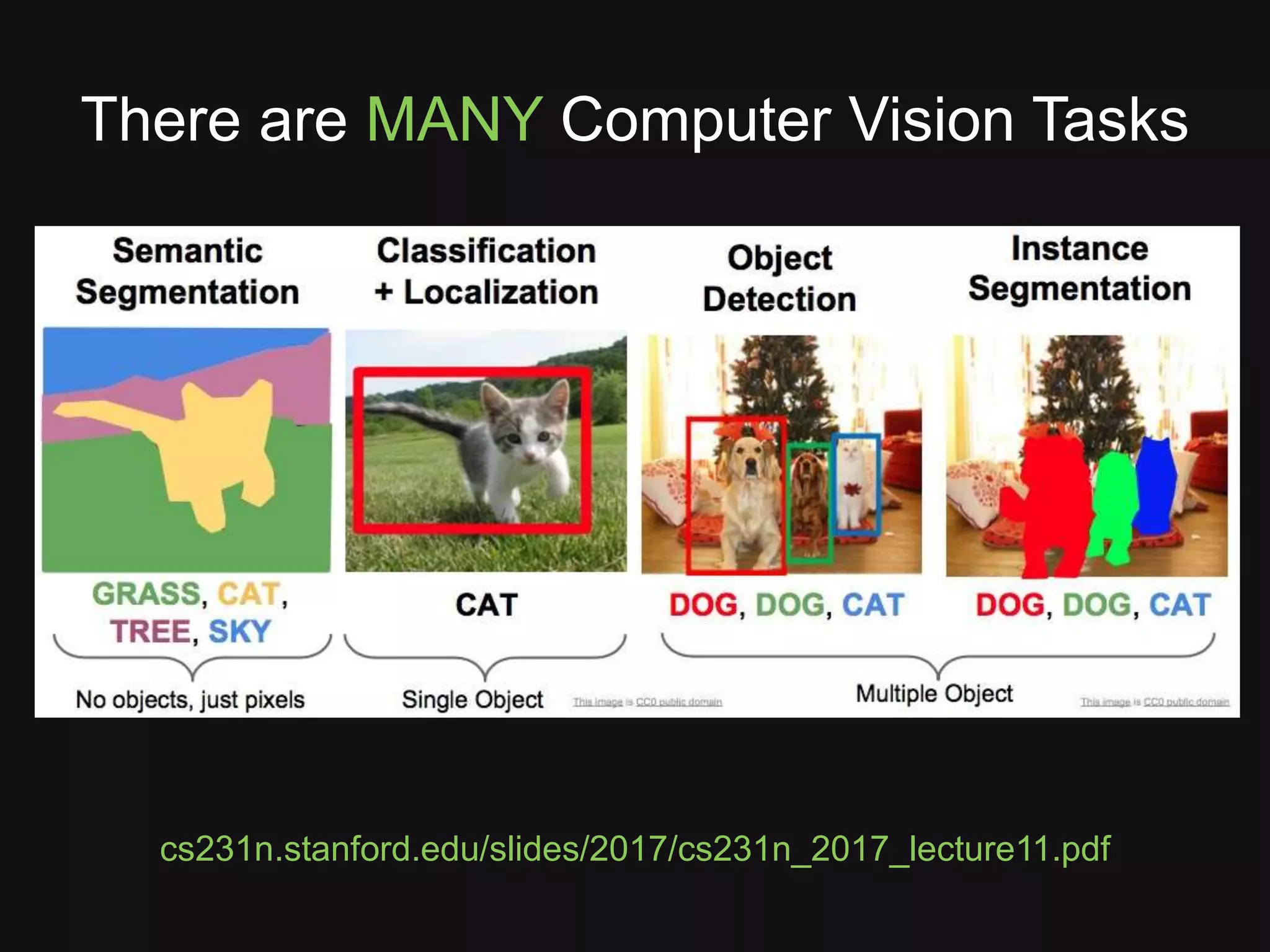

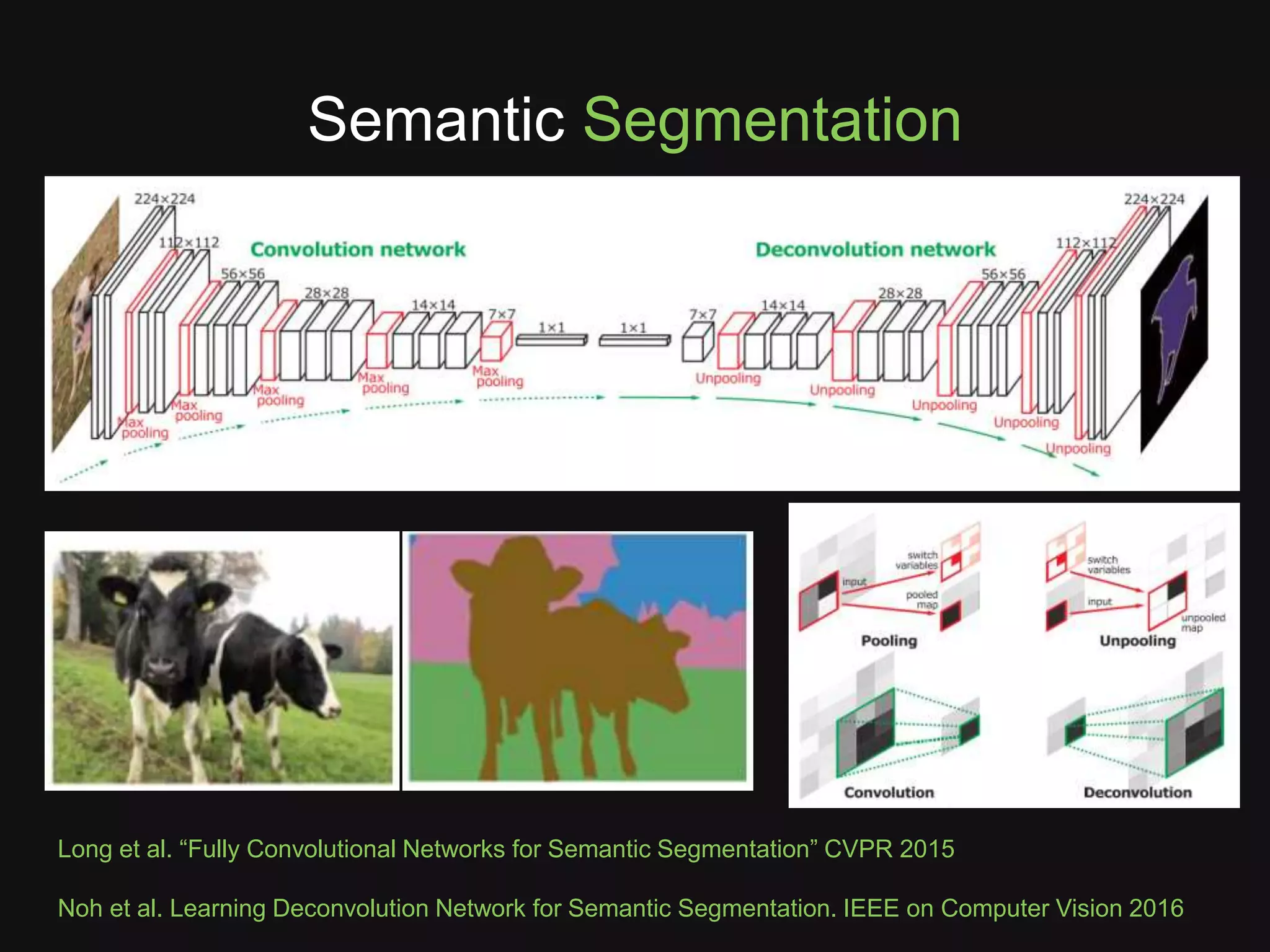

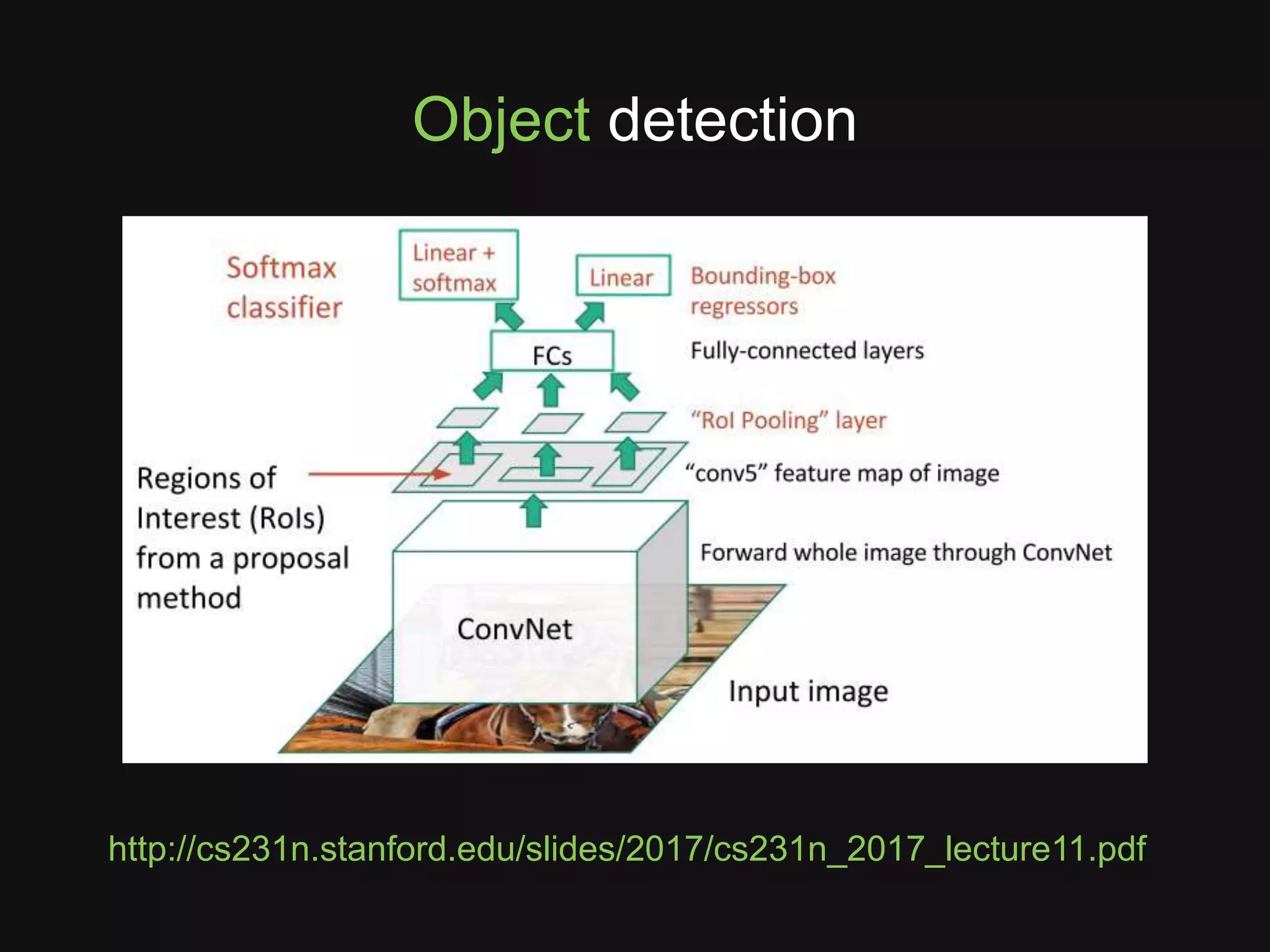

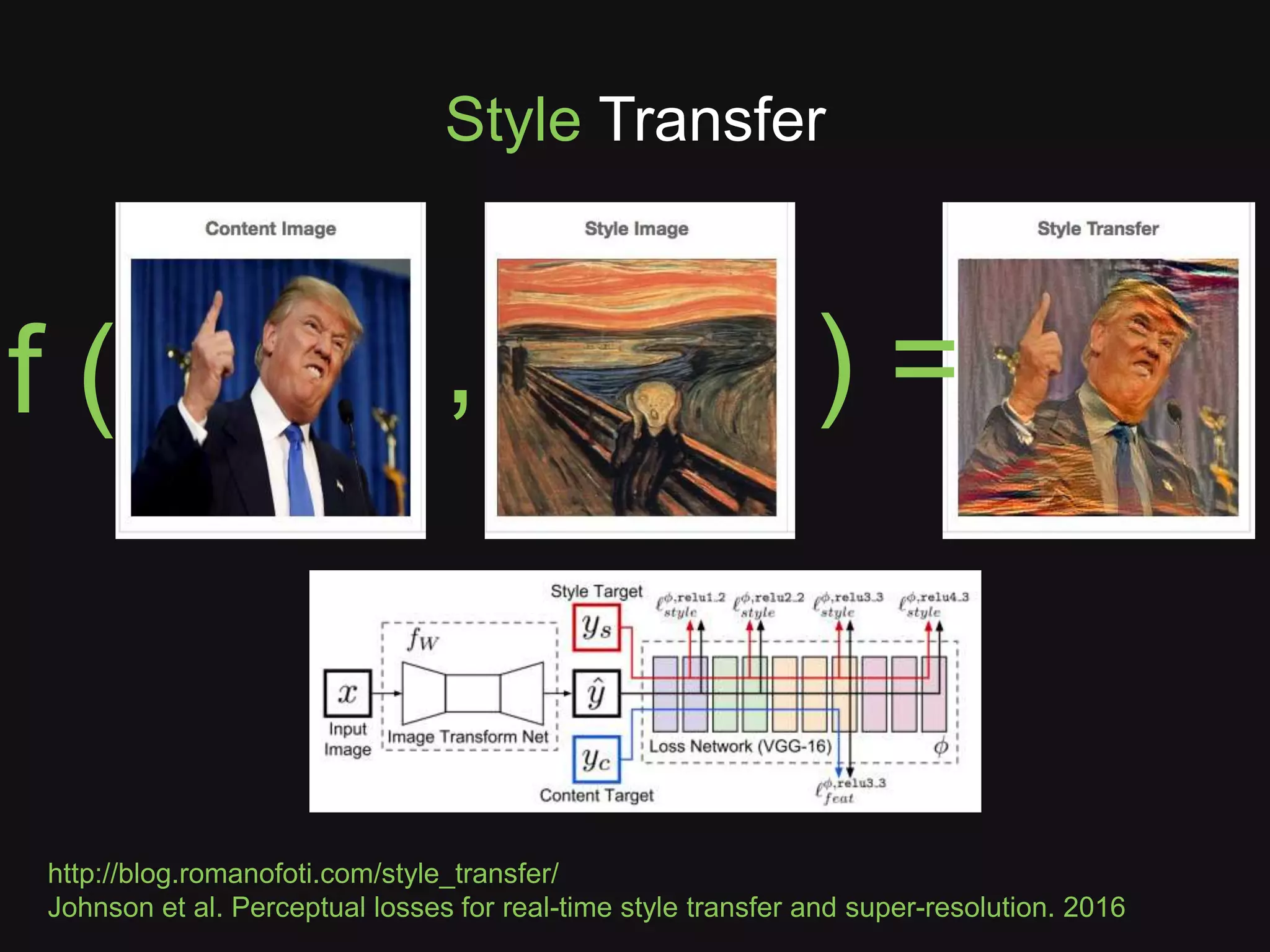

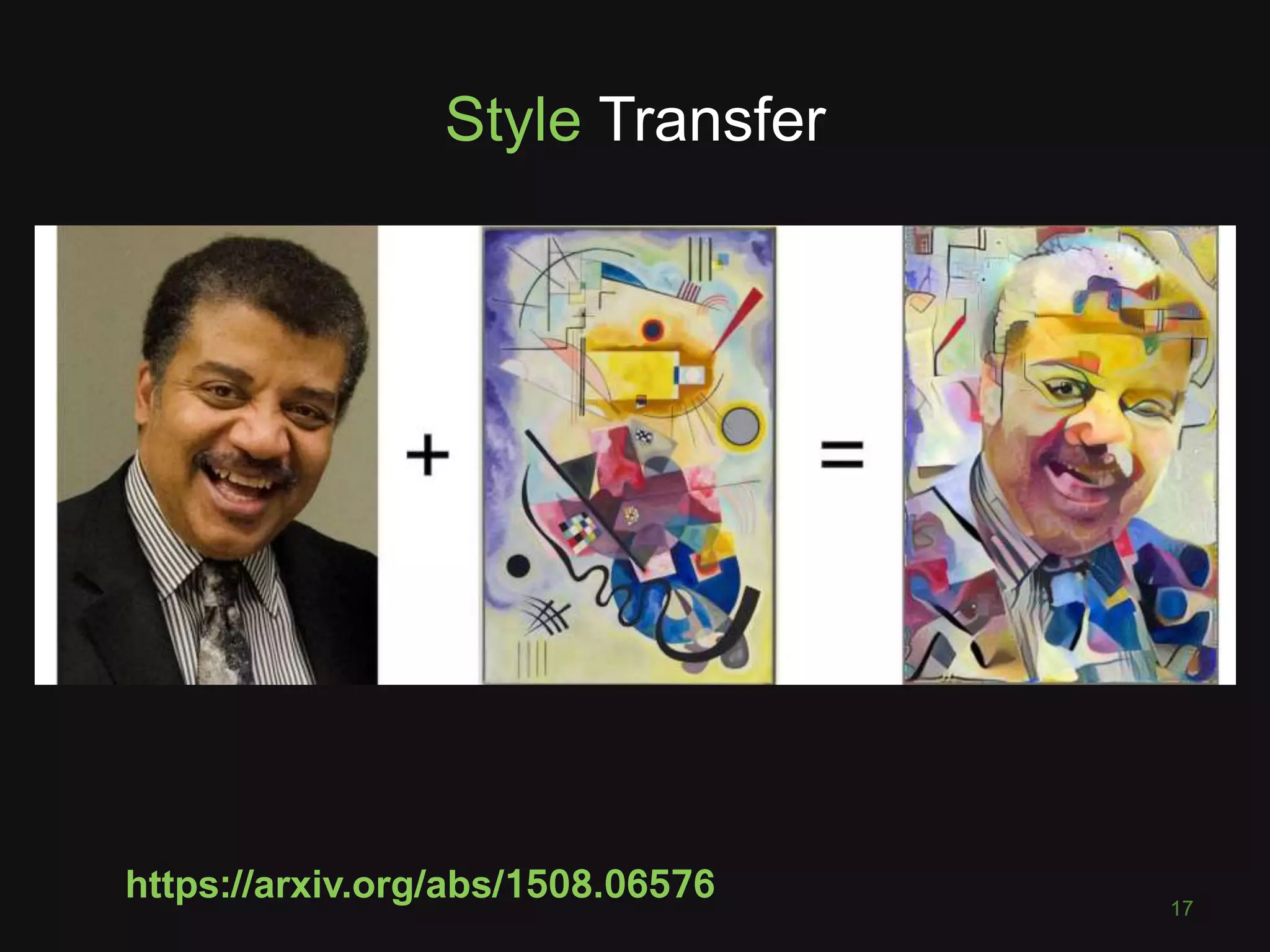

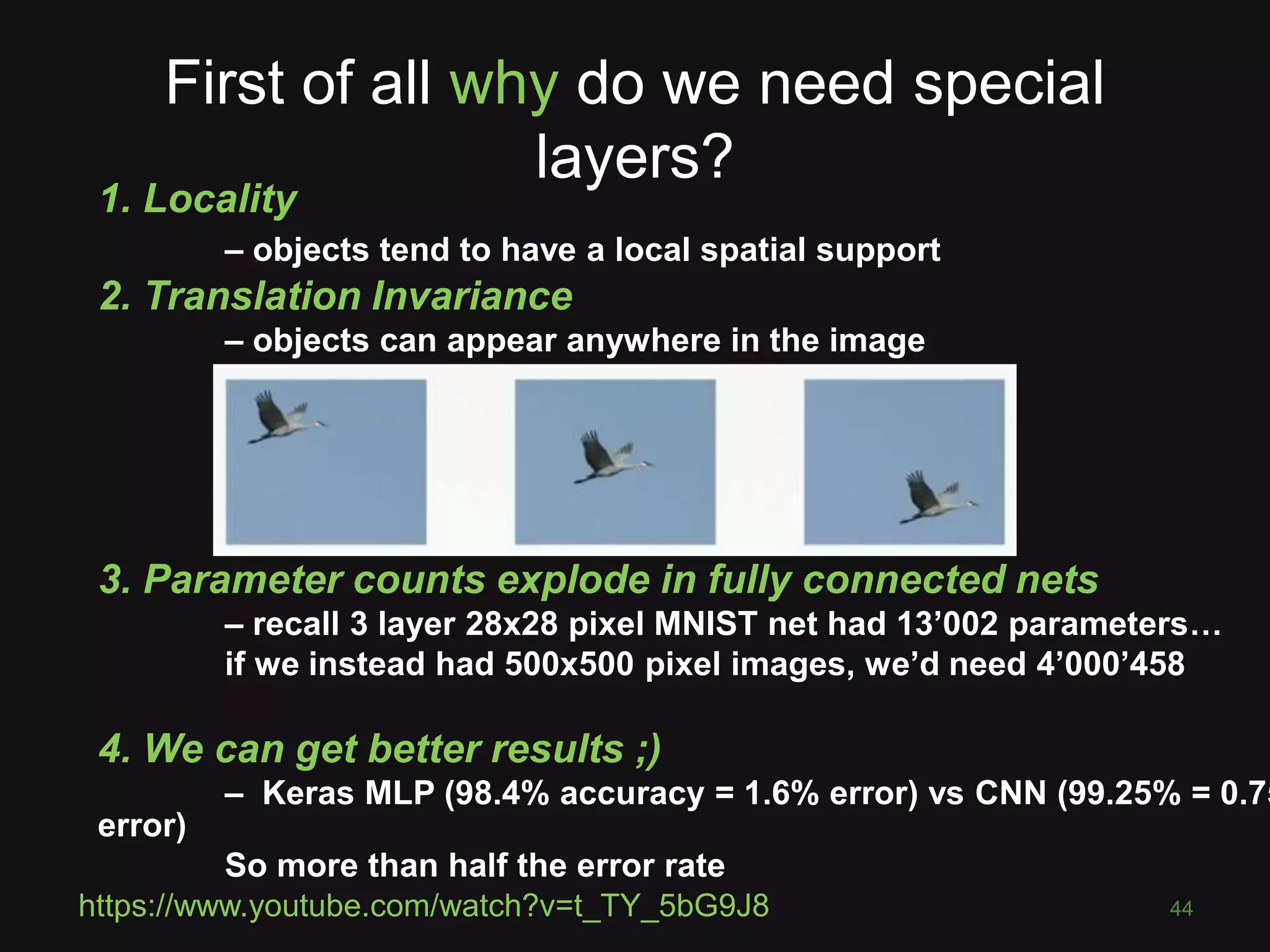

This document discusses deep learning for computer vision tasks. It begins with an overview of image classification using convolutional neural networks (CNNs) and how they have achieved superhuman performance on ImageNet. It then covers the key layers and concepts in CNNs, including convolutions, max pooling, and transfer learning to new problems. Finally, it discusses more advanced computer vision tasks that CNNs have been applied to, such as semantic segmentation, style transfer, visual question answering, and combining images with other data sources.

![What is a neuron?

• 3 inputs [x1,x2,x3]

• 3 weights [w1,w2,w3]

• 1 bias term b

• Element-wise multiply and sum: inputs & weights

• Add bias term

• Apply activation function f

• Output of neuron is just a number (like the in](https://image.slidesharecdn.com/pyconde17-171025091553/75/Deep-Learning-for-Computer-Vision-PyconDE-2017-24-2048.jpg)
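The steps listed on this slide can be sketched in a few lines of Python. This is a minimal illustration rather than code from the talk: the function names `sigmoid` and `neuron`, the example values, and the choice of sigmoid as the activation function f are all assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic sigmoid, used here as an example activation function f."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Element-wise multiply and sum: inputs & weights
    z = sum(x * w for x, w in zip(inputs, weights))
    # Add bias term
    z += bias
    # Apply activation function f; the result is a single number
    return activation(z)

# Hypothetical example values (not taken from the slides)
x = [1.0, 2.0, 3.0]   # 3 inputs
w = [0.5, -1.0, 0.5]  # 3 weights
b = 0.0               # 1 bias term
output = neuron(x, w, b)
```

With these values the weighted sum is exactly zero, so the sigmoid returns 0.5. Any other activation can be swapped in through the `activation` argument.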

![What is an Activation Function?

Sigmoid Tanh ReLU

Nonlinearities … “squashing functions” … transform neuron’s output

NB: sigmoid output in [0,1]](https://image.slidesharecdn.com/pyconde17-171025091553/75/Deep-Learning-for-Computer-Vision-PyconDE-2017-25-2048.jpg)
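The three activation functions named on this slide are short enough to write out directly. The following is a sketch using only the Python standard library; the function names and the sample inputs are assumptions for illustration, and the sigmoid is written in a branch form purely to avoid overflow for large negative inputs.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: maps any real number into the interval (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form that avoids overflow in exp() for very negative z
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Hyperbolic tangent: maps any real number into the interval (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Rectified linear unit: zero for negative inputs, identity otherwise."""
    return max(0.0, z)

# Hypothetical sample inputs to compare the three functions side by side
for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  tanh={tanh(z):+.4f}  relu={relu(z):.1f}")
```

Sigmoid and tanh bound their output on both sides, whereas ReLU only clips negative values to zero and is unbounded above.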

![What is a Convolutional Neural Network?

“like an ordinary neural network but with special types of layers that work well on images”

(math works on numbers)

• Pixel = 3 colour channels (R, G, B)

• Pixel intensity ∈[0,255]

• Image has width w and height h

• Therefore image is w x h x 3 numbers](https://image.slidesharecdn.com/pyconde17-171025091553/75/Deep-Learning-for-Computer-Vision-PyconDE-2017-42-2048.jpg)
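To make the "w x h x 3 numbers" point concrete, here is a small sketch that builds an image as nested Python lists and counts its values. The helper names `make_image` and `flatten` and the 4 x 2 example size are hypothetical, chosen only for illustration. Note that array libraries commonly store the row (height) axis first, which this sketch mirrors.

```python
def make_image(width, height, colour=(255, 0, 0)):
    """Build an image as rows of pixels; each pixel is [R, G, B] in [0, 255]."""
    for value in colour:
        if not 0 <= value <= 255:
            raise ValueError("pixel intensity must be in [0, 255]")
    # Outer list: rows (height); inner list: pixels in a row (width)
    return [[list(colour) for _ in range(width)] for _ in range(height)]

def flatten(image):
    """Return every channel value of every pixel as one flat list of numbers."""
    return [value for row in image for pixel in row for value in pixel]

# Hypothetical tiny image: 4 pixels wide, 2 pixels high, solid red
w, h = 4, 2
image = make_image(w, h)
numbers = flatten(image)
total = len(numbers)  # equals w * h * 3
```

For the 4 x 2 example, `total` is 24, which is the full set of numbers a network receives for that image.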

![Softmax

Convert scores ∈ ℝ to probabilities ∈ [0,1]

Final output prediction = highest probability class](https://image.slidesharecdn.com/pyconde17-171025091553/75/Deep-Learning-for-Computer-Vision-PyconDE-2017-65-2048.jpg)
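The softmax step can be sketched as follows. This is an illustrative version using only the standard library, not code from the talk: the function names, the class labels, and the score values are assumptions. Subtracting the maximum score before exponentiating is a common numerical-stability trick and does not change the result.

```python
import math

def softmax(scores):
    """Convert real-valued scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(scores, labels):
    """Return the label with the highest probability, and that probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical class labels and raw scores (not from the slides)
labels = ["cat", "dog", "bird"]
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
label, prob = predict(scores, labels)
```

Because softmax preserves the ordering of the scores, the predicted class is always the one with the highest raw score; the probabilities add a measure of how decisive that choice is.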