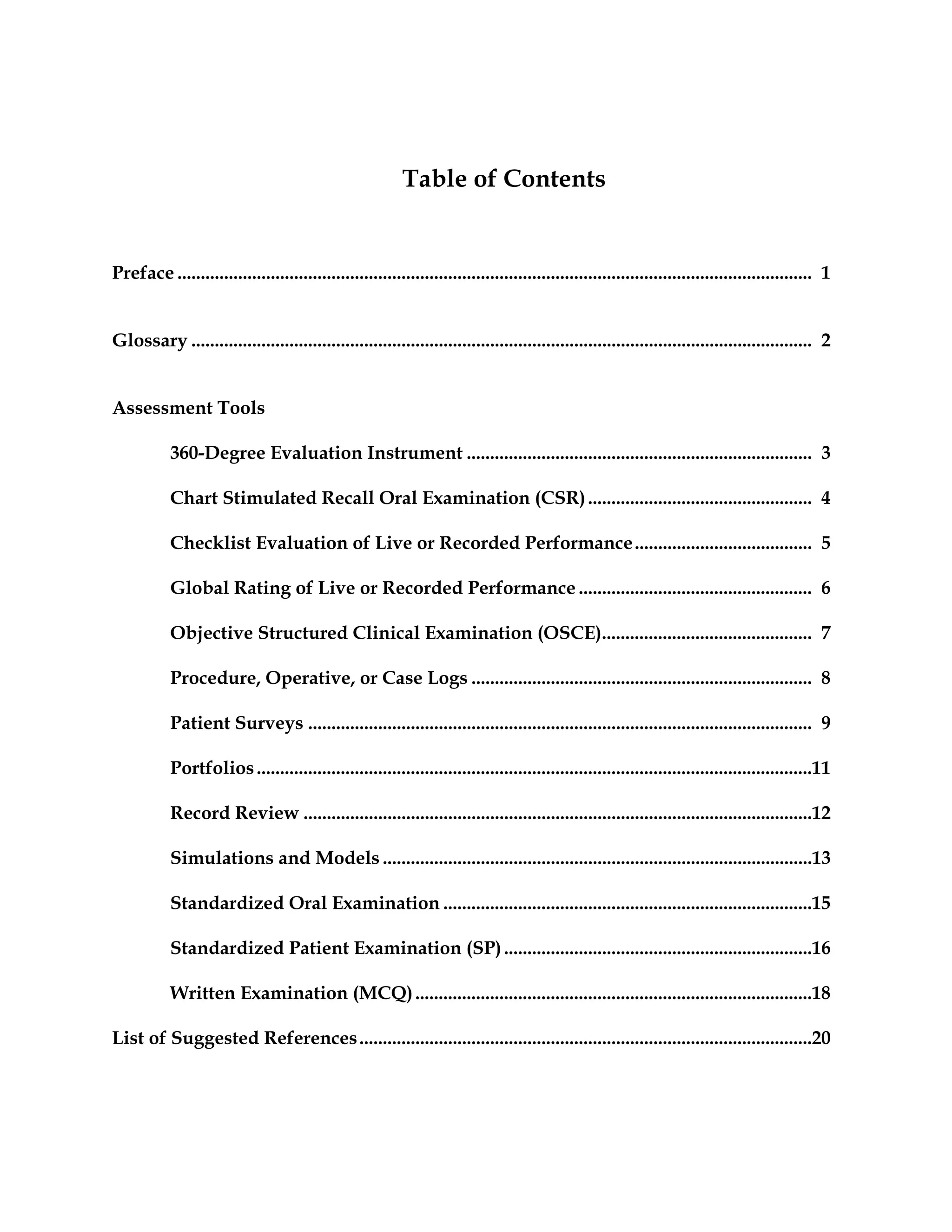

This document describes the Toolbox of Assessment Methods, created through a joint initiative of the Accreditation Council for Graduate Medical Education (ACGME) and the American Board of Medical Specialties (ABMS). For each assessment method that can be used to evaluate residents, it gives a brief description and covers how the method is used, its psychometric qualities, and its feasibility. The toolbox is intended to help medical educators select and develop evaluation techniques for residency programs and assess resident competencies.