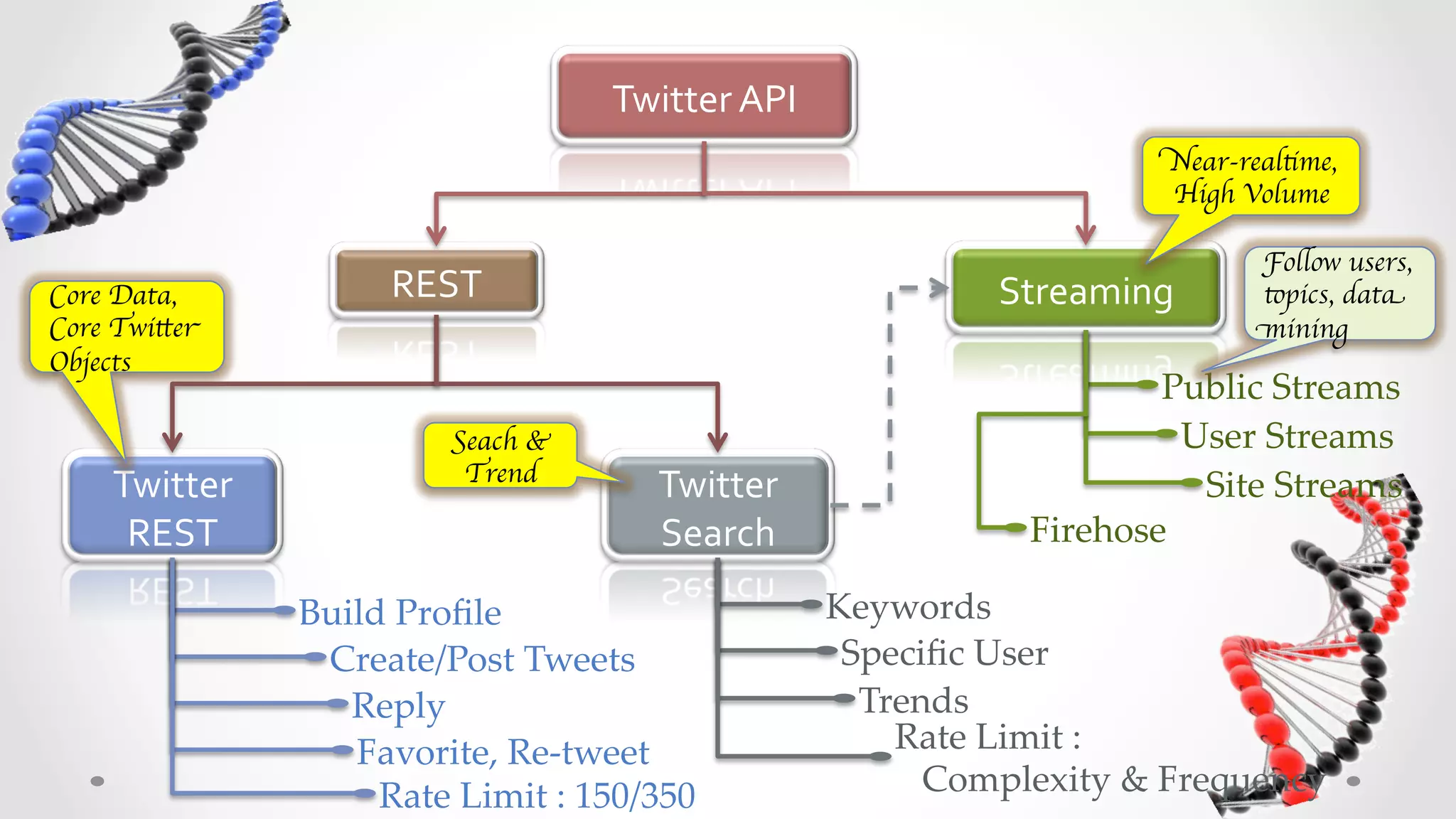

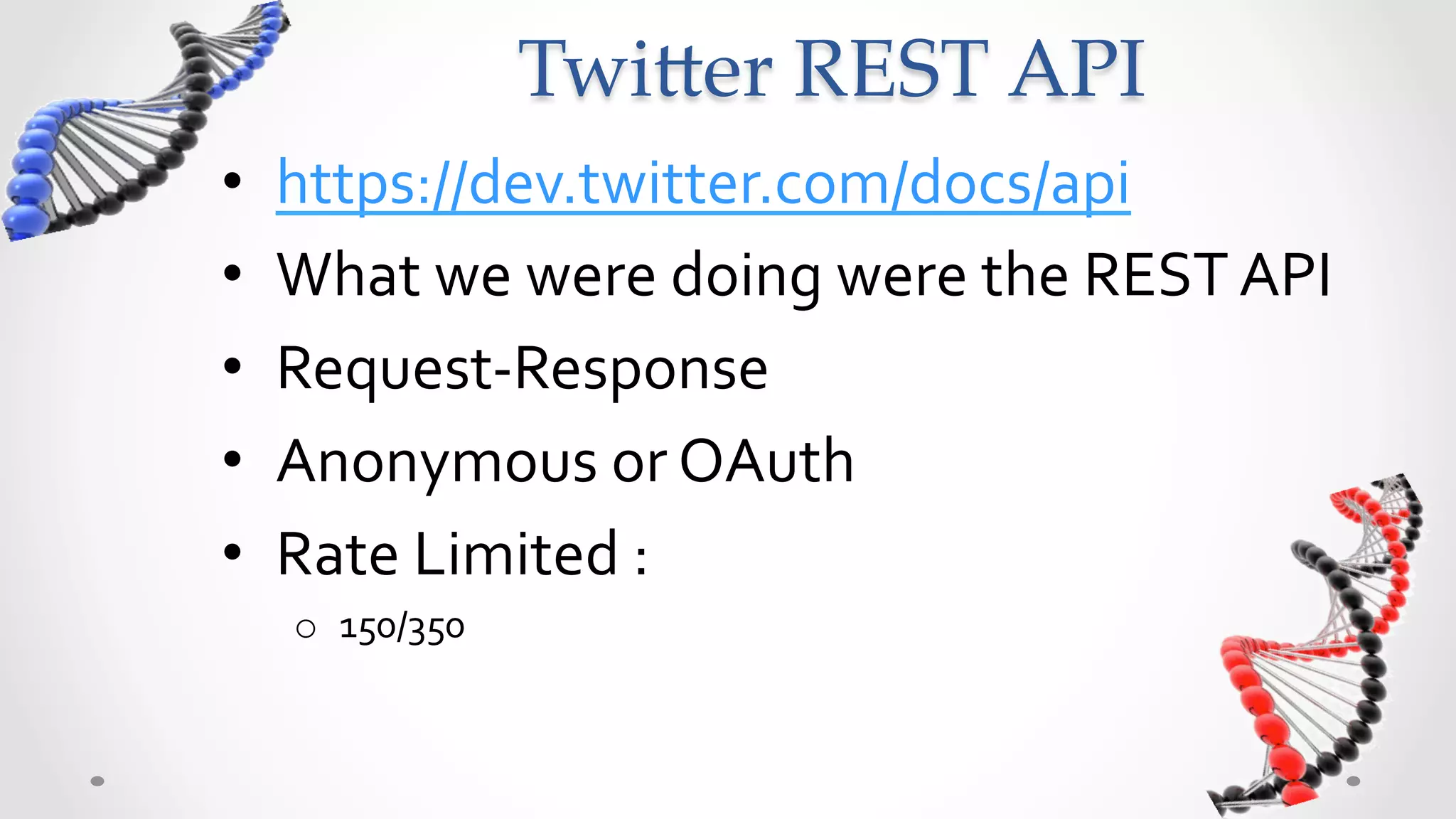

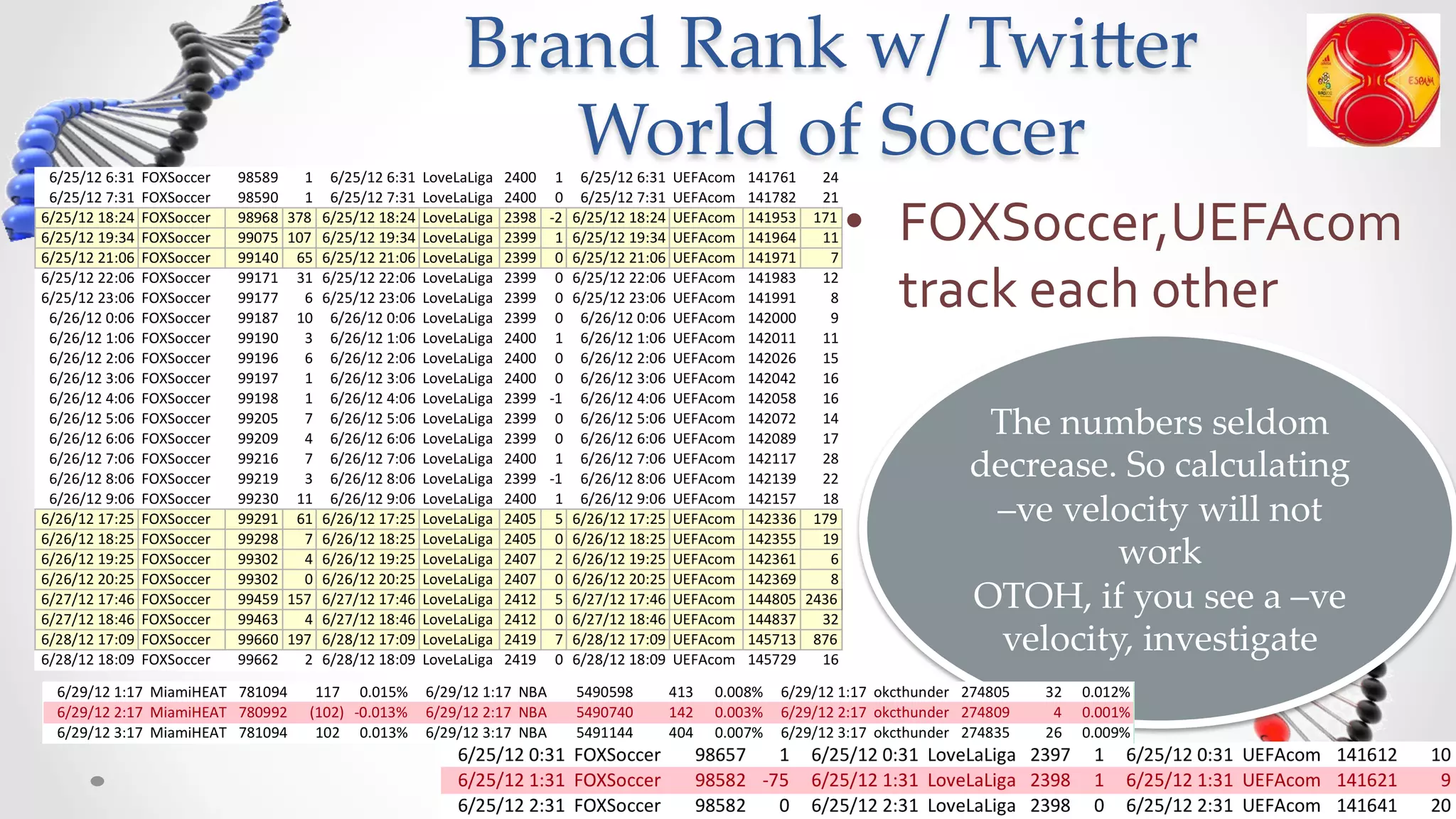

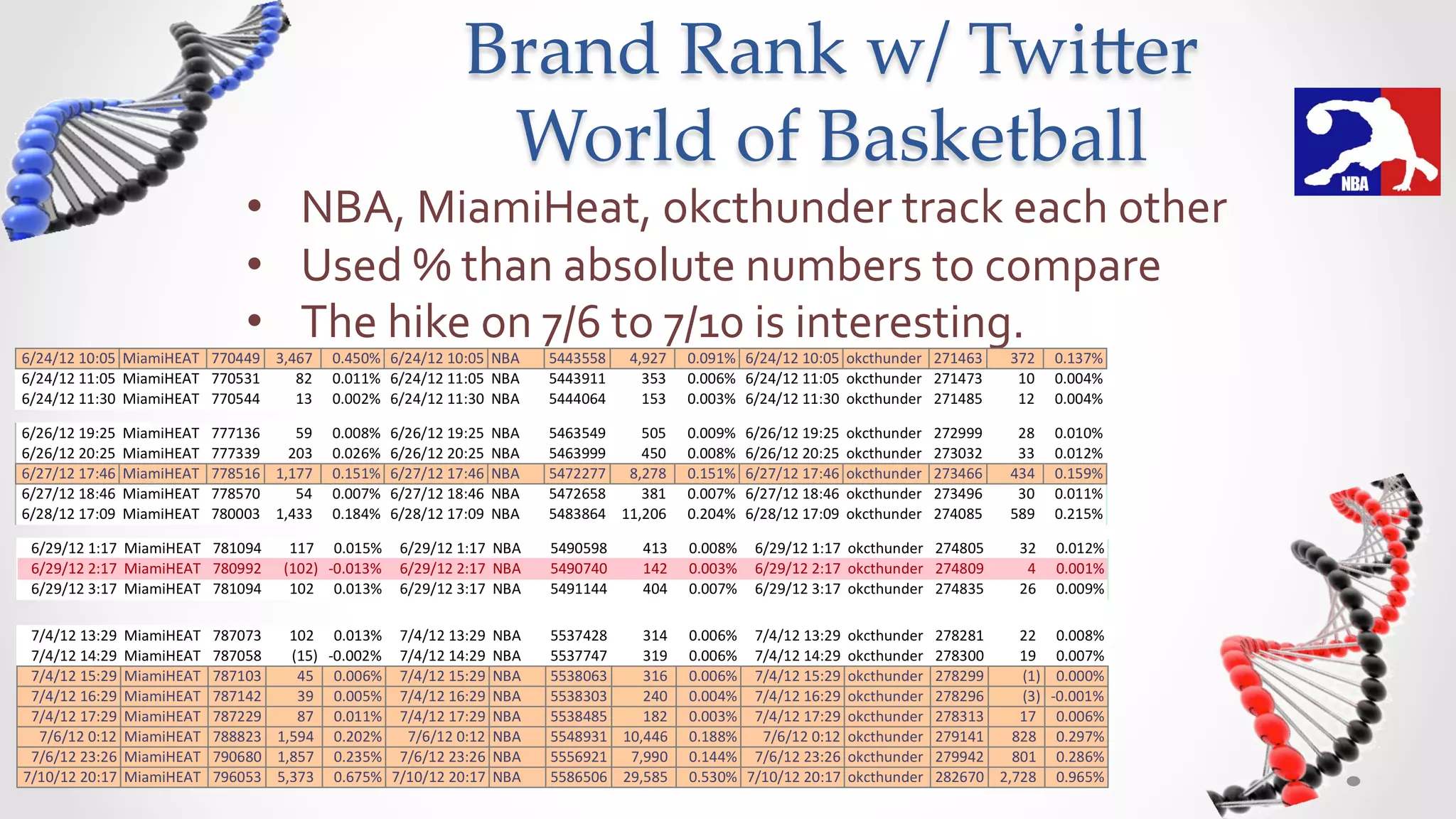

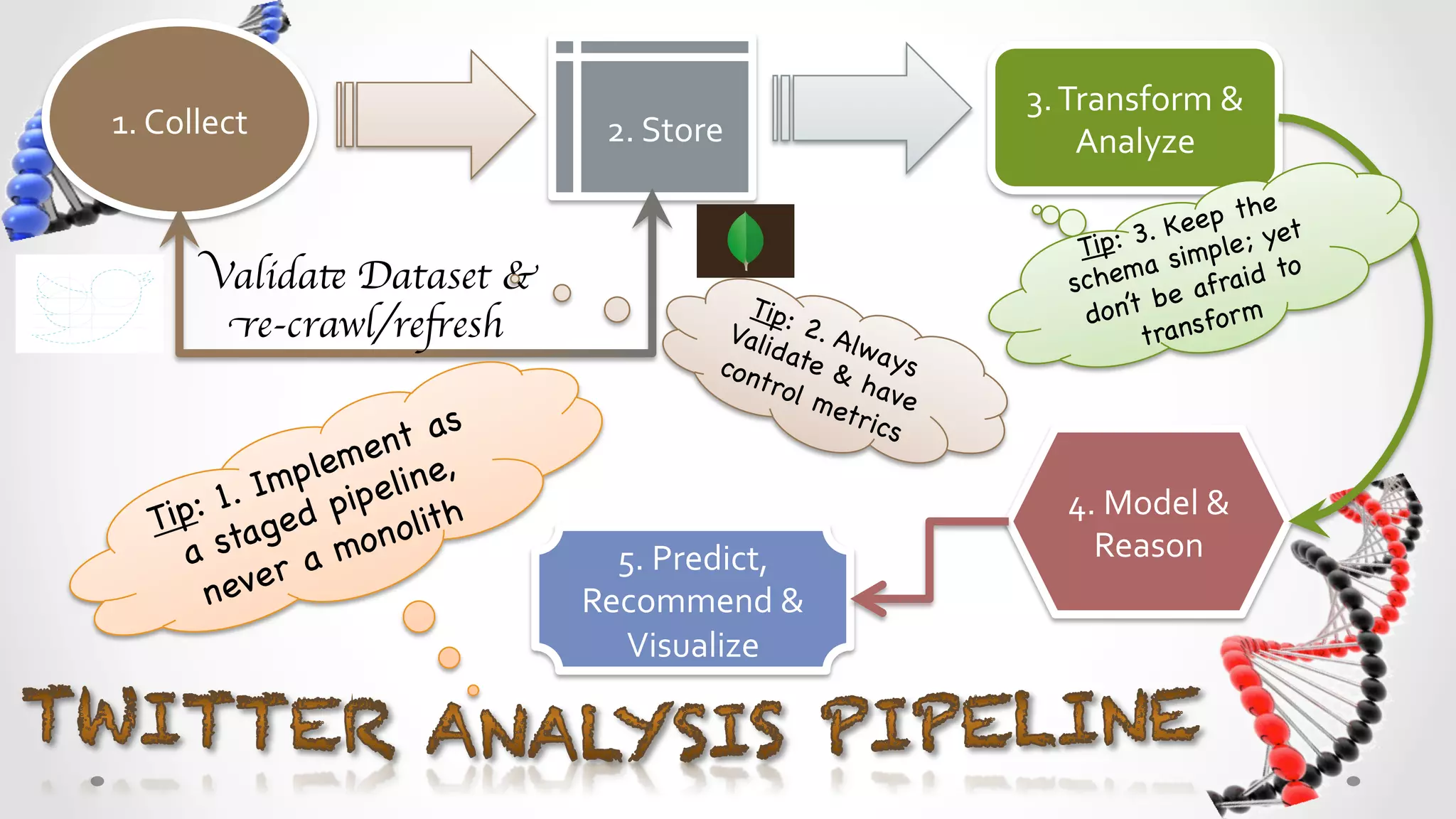

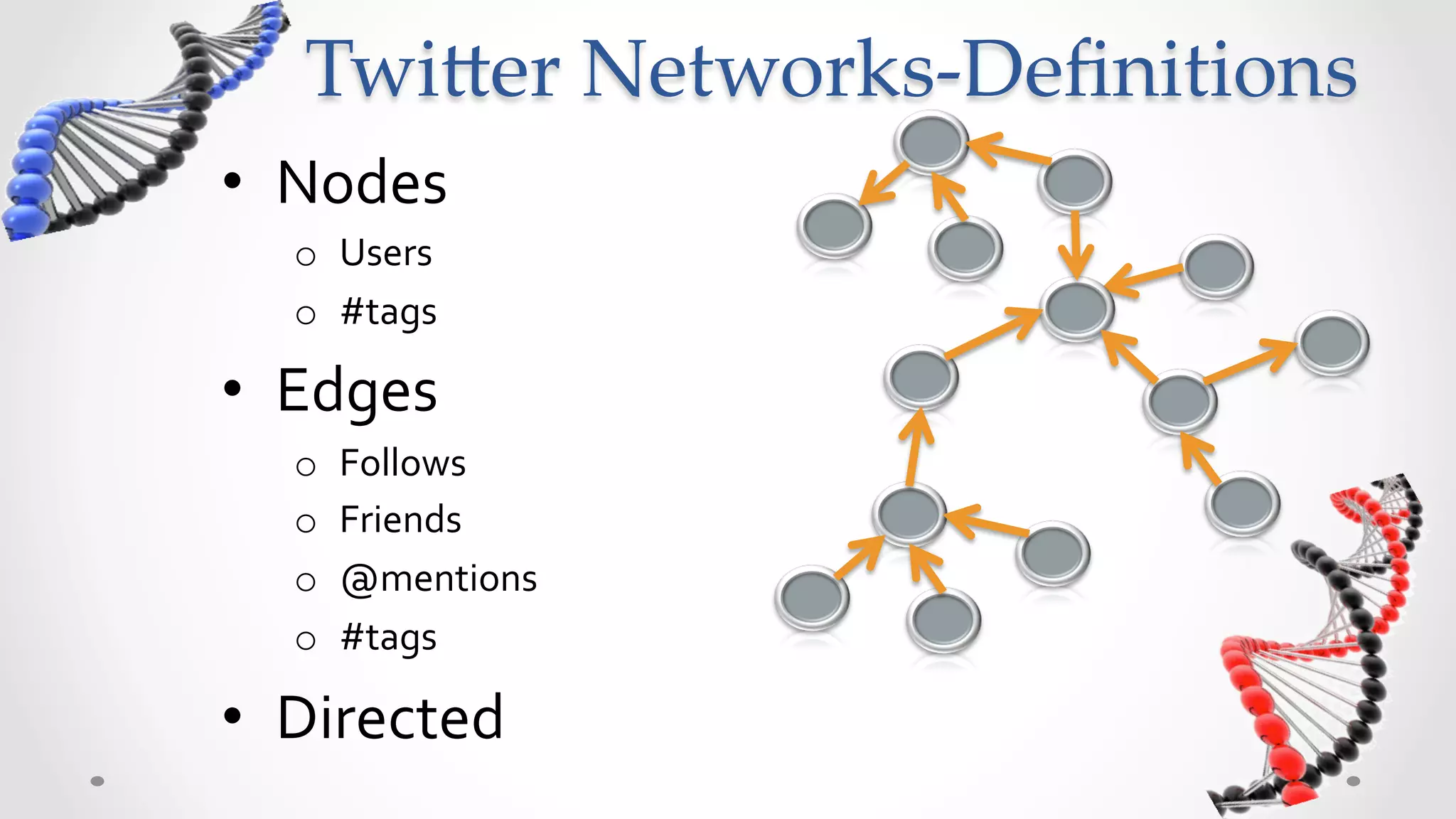

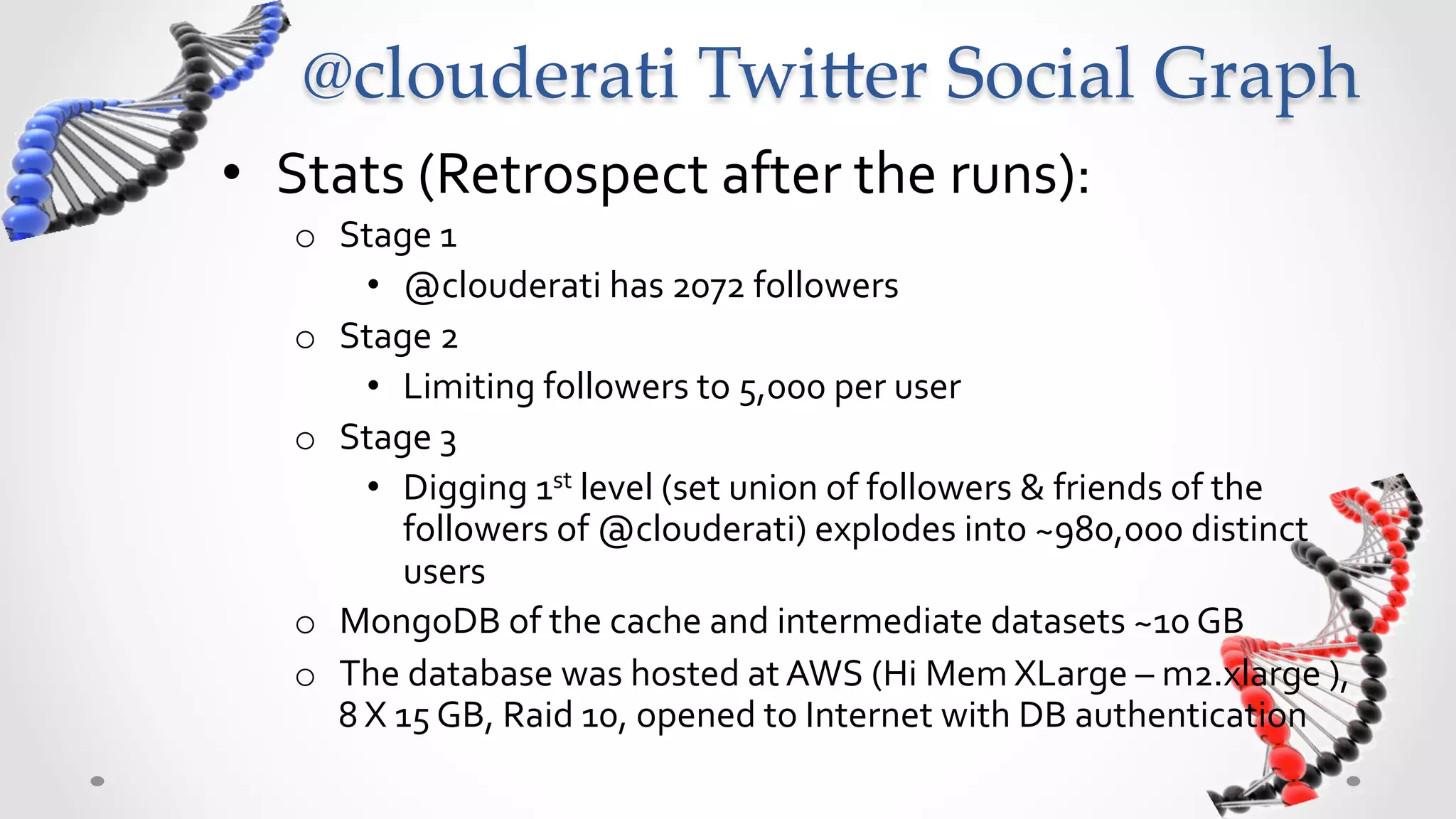

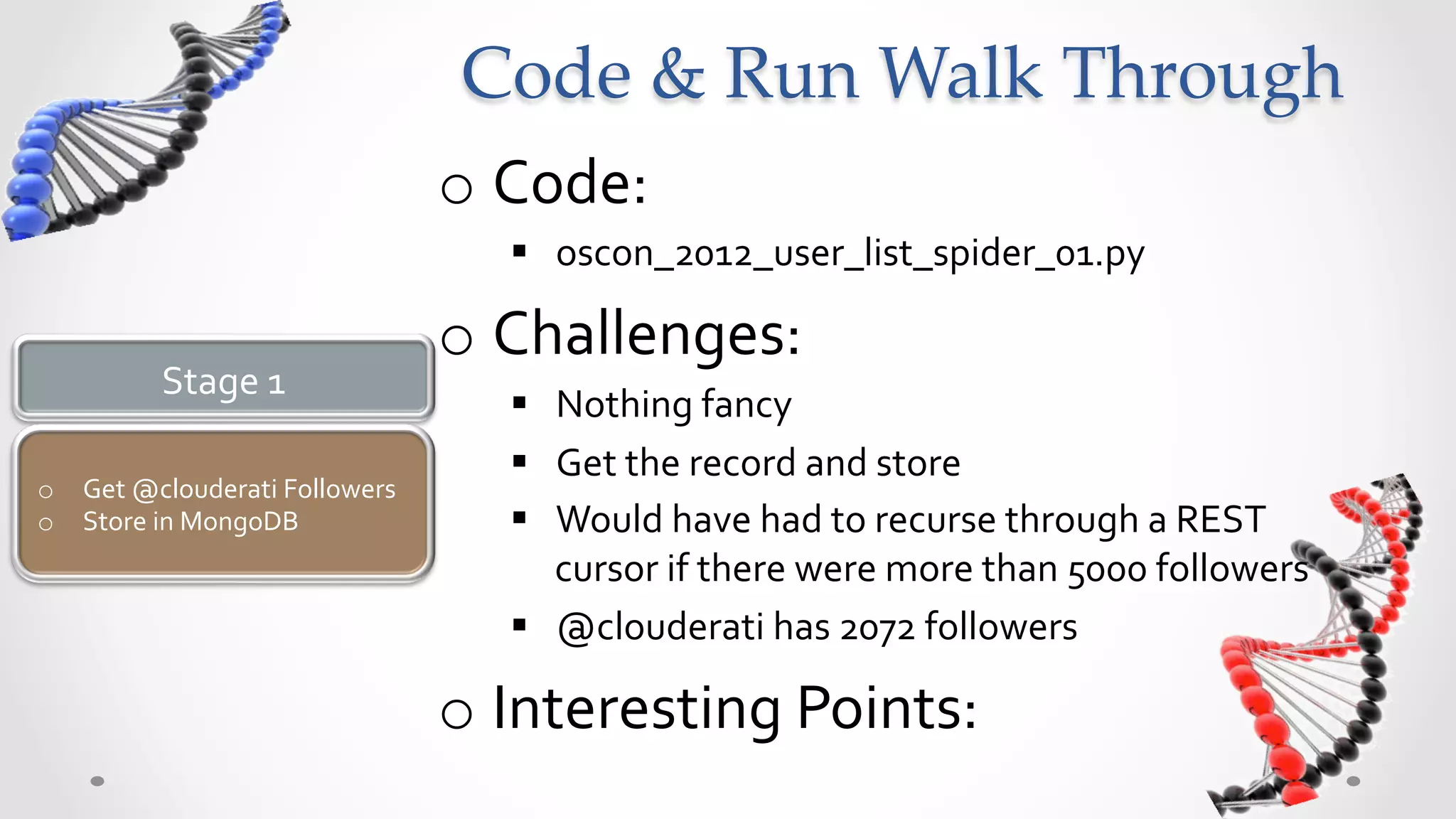

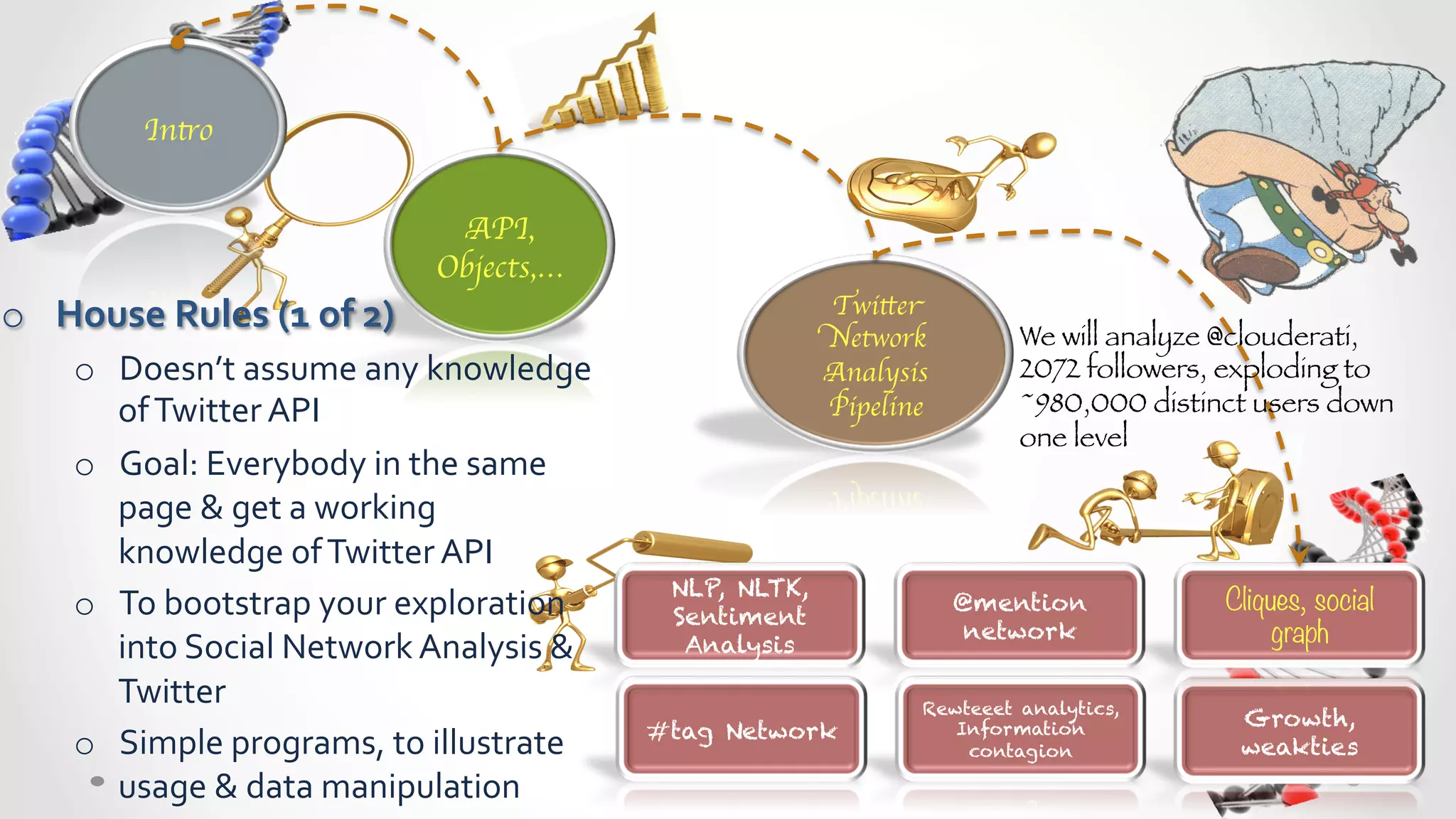

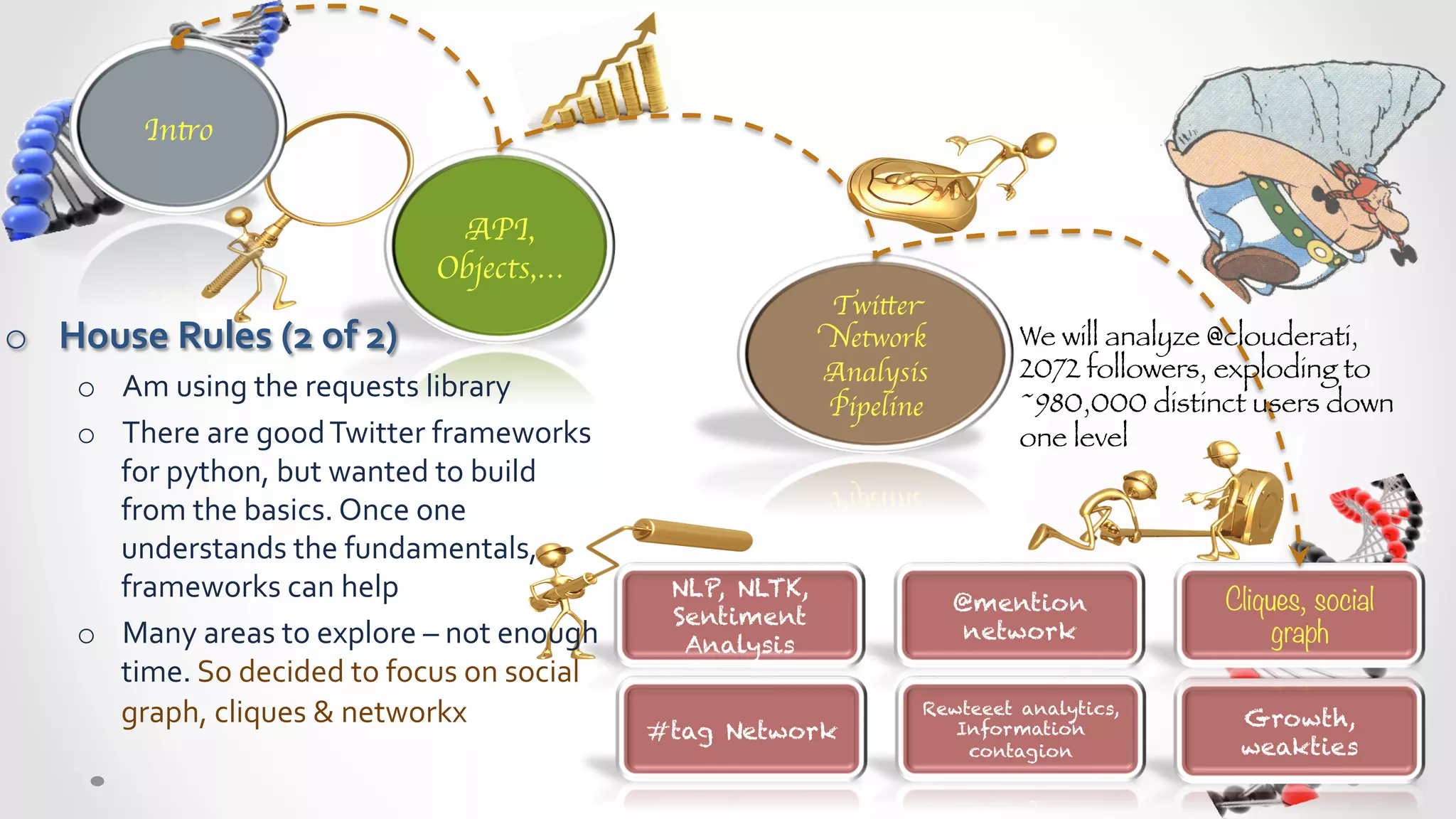

This presentation covers analyzing social networks and Twitter data with Python. It introduces the Twitter network of the user @clouderati, which includes 2072 followers, and covers mentions, hashtags, retweets, and constructing a social graph to analyze cliques and networks. It also offers tips for working with the Twitter APIs and building scalable social media analysis pipelines in Python.

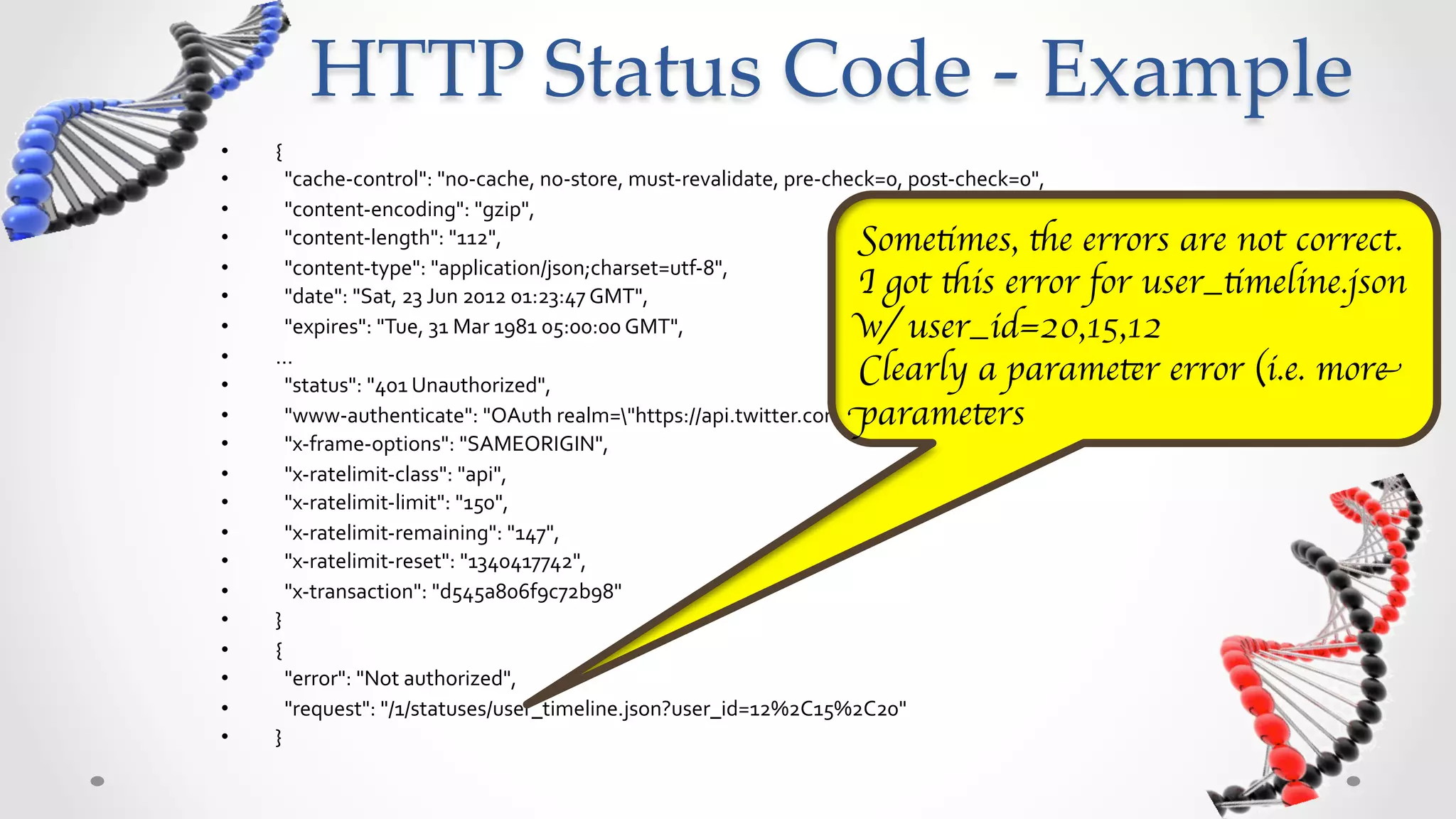

![HTTP Status Code -‐‑ Example

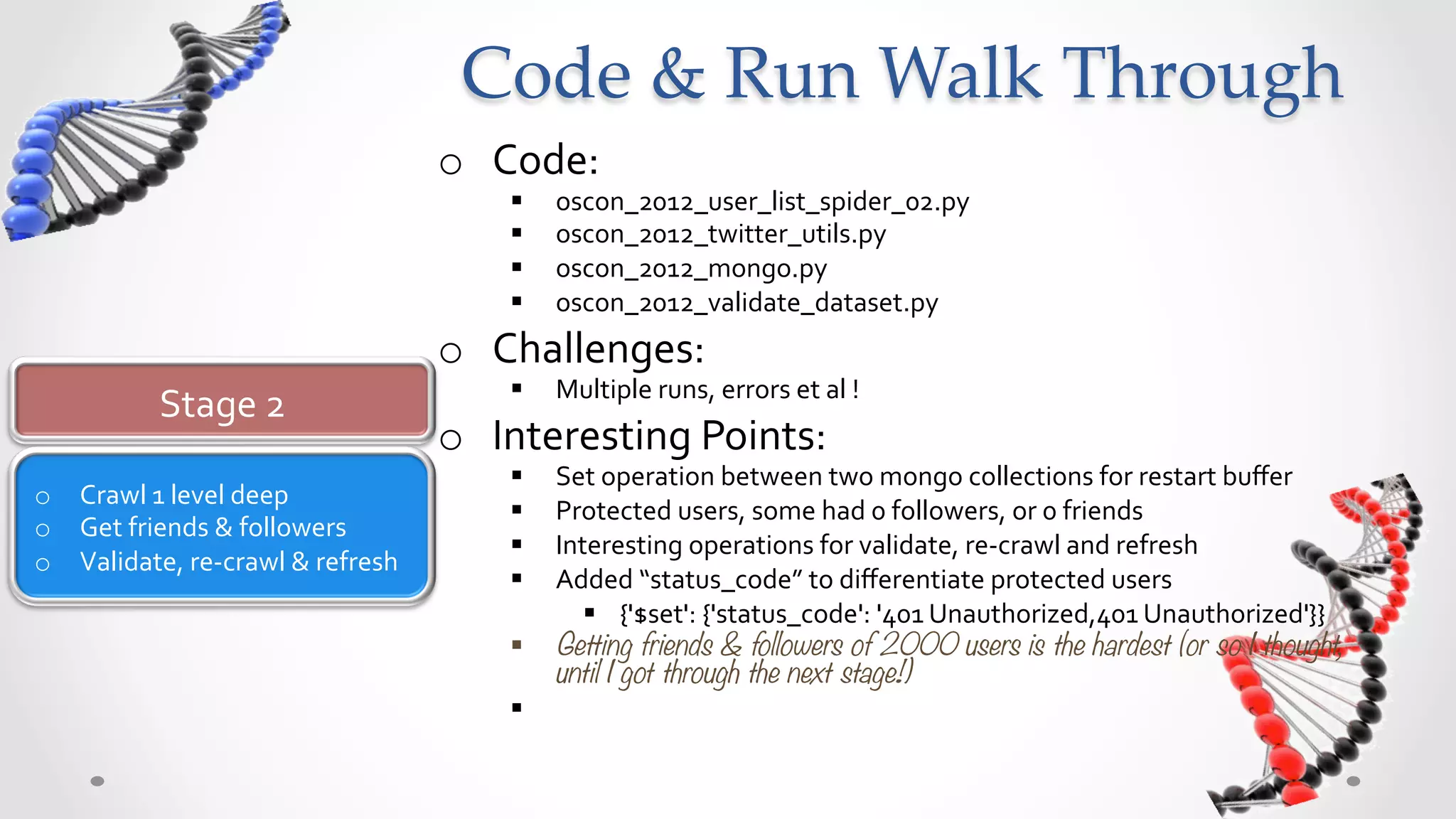

• {

•

"cache-‐control":

"no-‐cache,

max-‐age=300",

•

"content-‐encoding":

"gzip",

•

"content-‐length":

"91",

•

"content-‐type":

"application/json;

charset=utf-‐8",

•

"date":

"Sat,

23

Jun

2012

00:06:56

GMT",

•

"expires":

"Sat,

23

Jun

2012

00:11:56

GMT",

•

"server":

"tfe",

•

…

•

"status":

"401

Unauthorized",

•

"vary":

"Accept-‐Encoding",

•

"www-‐authenticate":

"OAuth

realm="https://api.twitter.com"",

•

•

"x-‐ratelimit-‐class":

"api",

"x-‐ratelimit-‐limit":

"0",

Detailed

error

•

"x-‐ratelimit-‐remaining":

"0",

message

in

JSON

!

•

"x-‐ratelimit-‐reset":

"1340413616",

•

"x-‐runtime":

"0.01997"

I

like

this

• }

• {

•

"errors":

[

•

{

•

"code":

53,

•

"message":

"Basic

authentication

is

not

supported"

•

}

•

]

• }](https://image.slidesharecdn.com/oscon-2012-37-120708013058-phpapp01/75/The-Art-of-Social-Media-Analysis-with-Twitter-Python-43-2048.jpg)
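The response on this slide can be handled programmatically. The following is a minimal sketch, not the presenter's code: it assumes the headers and body have already been received as a dictionary and a JSON string (the values are transcribed from the slide, with the elided headers left out), and `summarize_error` is a hypothetical helper name introduced here for illustration.

```python
import json
from datetime import datetime, timezone

# Values transcribed from the slide; headers elided on the slide are omitted.
headers = {
    "status": "401 Unauthorized",
    "x-ratelimit-remaining": "0",
    "x-ratelimit-reset": "1340413616",
}
body = '{"errors": [{"code": 53, "message": "Basic authentication is not supported"}]}'


def summarize_error(headers, body):
    """Hypothetical helper: collect the status code, the JSON error
    details, and the rate-limit reset time from one response."""
    # The "status" header holds the numeric code followed by the reason phrase.
    status_code = int(headers["status"].split()[0])
    # The body carries a list of {"code", "message"} objects under "errors".
    errors = json.loads(body).get("errors", [])
    # The reset header is a Unix timestamp in seconds.
    reset = datetime.fromtimestamp(int(headers["x-ratelimit-reset"]), tz=timezone.utc)
    return {
        "status_code": status_code,
        "errors": [(e["code"], e["message"]) for e in errors],
        "rate_limit_reset": reset,
    }


summary = summarize_error(headers, body)
print(summary["status_code"], summary["errors"], summary["rate_limit_reset"].isoformat())
```

Reading both the status line and the JSON body is the point of the slide: the status says the request was unauthorized, while the body explains why.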

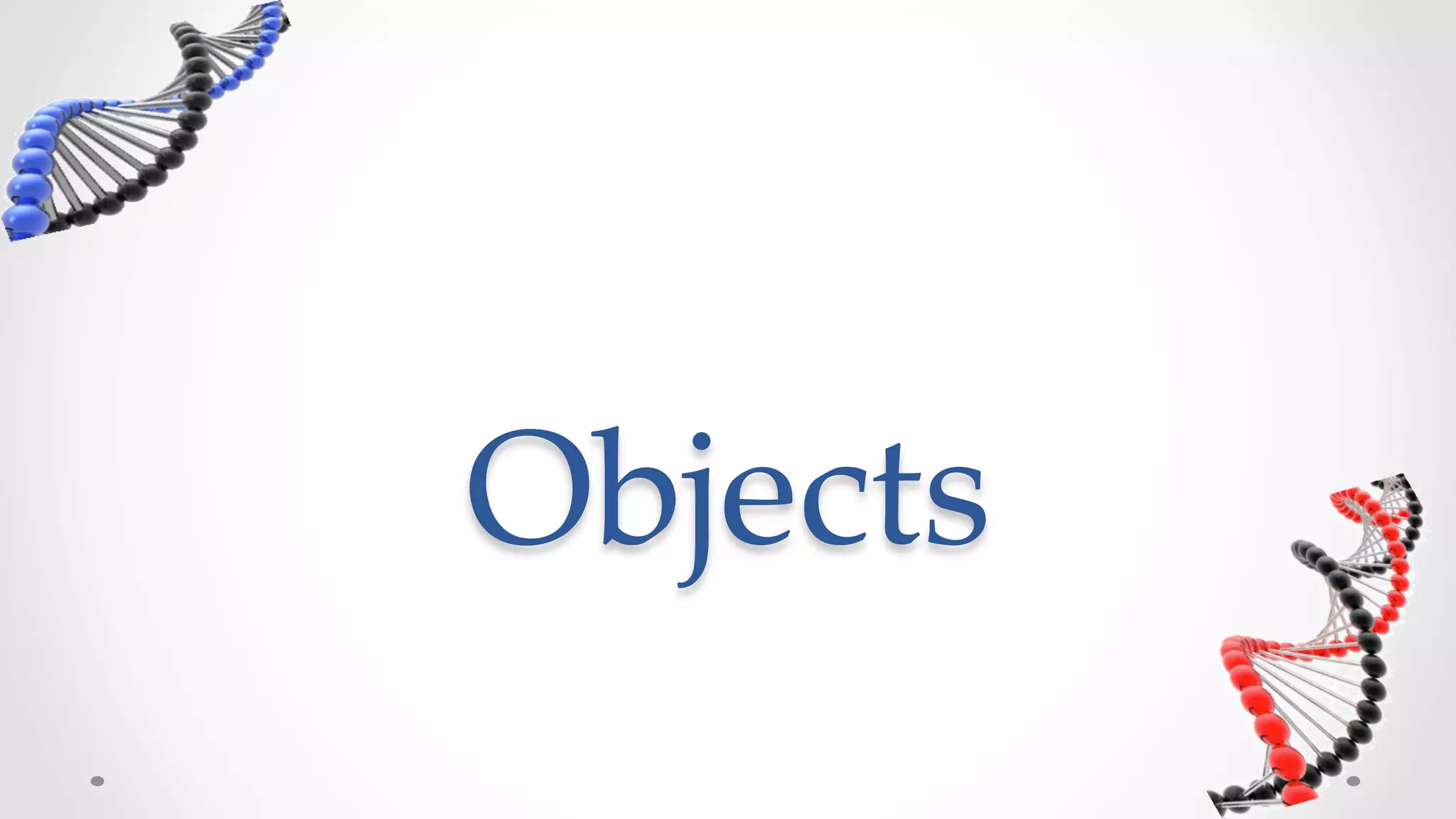

![HTTP Status Code – Confusing Example

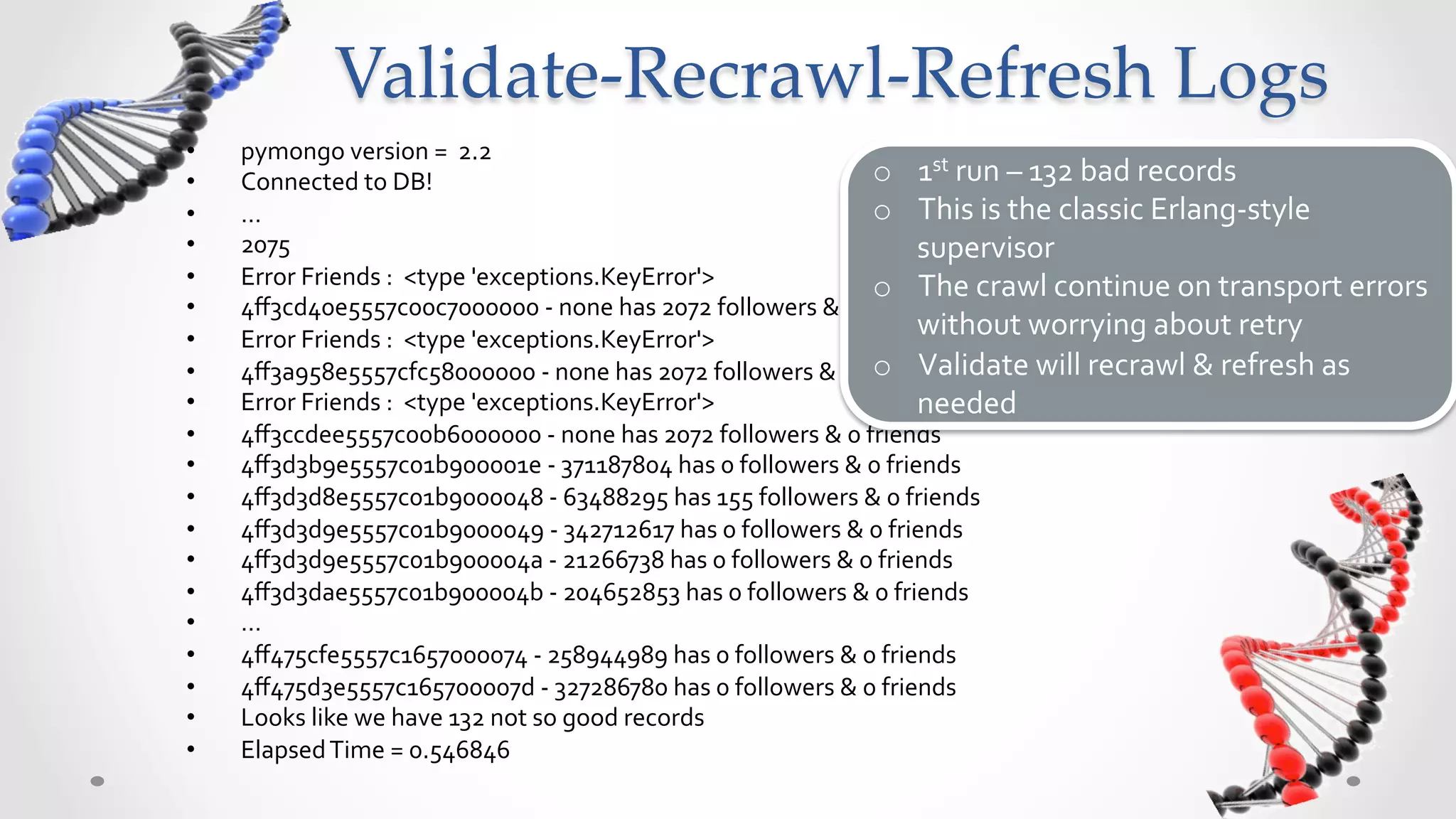

• {

• GET

https://api.twitter.com/1/users/lookup.json?

• …

screen_nme=twitterapi,twitter&include_entities=

•

"pragma":

"no-‐cache",

true

•

"server":

"tfe",

•

…

• Spelling

Mistake

•

"status":

"404

Not

Found",

o Should

be

screen_name

•

…

• But

confusing

error

!

• }

• {

• Should

be

406

Not

Acceptable

or

413

Too

Long

,

•

"errors":

[

showing

parameter

error

•

{

•

"code":

34,

•

"message":

"Sorry,

that

page

does

not

exist"

•

}

•

]

• }](https://image.slidesharecdn.com/oscon-2012-37-120708013058-phpapp01/75/The-Art-of-Social-Media-Analysis-with-Twitter-Python-44-2048.jpg)
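Because a misspelled parameter comes back as a plain 404, it can help to catch the typo on the client before the request is sent. The sketch below is one possible approach, not something shown in the deck: `KNOWN_PARAMS` is an assumed list of accepted parameter names for this endpoint, and `build_lookup_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.twitter.com/1/users/lookup.json"

# Assumption: the parameter names this endpoint accepts. Adjust to the
# actual API documentation before relying on this list.
KNOWN_PARAMS = {"screen_name", "user_id", "include_entities"}


def build_lookup_url(params):
    """Hypothetical helper: reject unknown parameter names locally
    instead of waiting for an unhelpful 404 from the server."""
    unknown = sorted(set(params) - KNOWN_PARAMS)
    if unknown:
        raise ValueError(f"unknown parameter(s): {', '.join(unknown)}")
    return BASE_URL + "?" + urlencode(params)


# The misspelled request from the slide is rejected before any network call.
try:
    build_lookup_url({"screen_nme": "twitterapi,twitter", "include_entities": "true"})
except ValueError as exc:
    error_text = str(exc)
    print(error_text)

# The corrected spelling produces a URL (urlencode percent-encodes the comma).
url = build_lookup_url({"screen_name": "twitterapi,twitter", "include_entities": "true"})
print(url)
```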

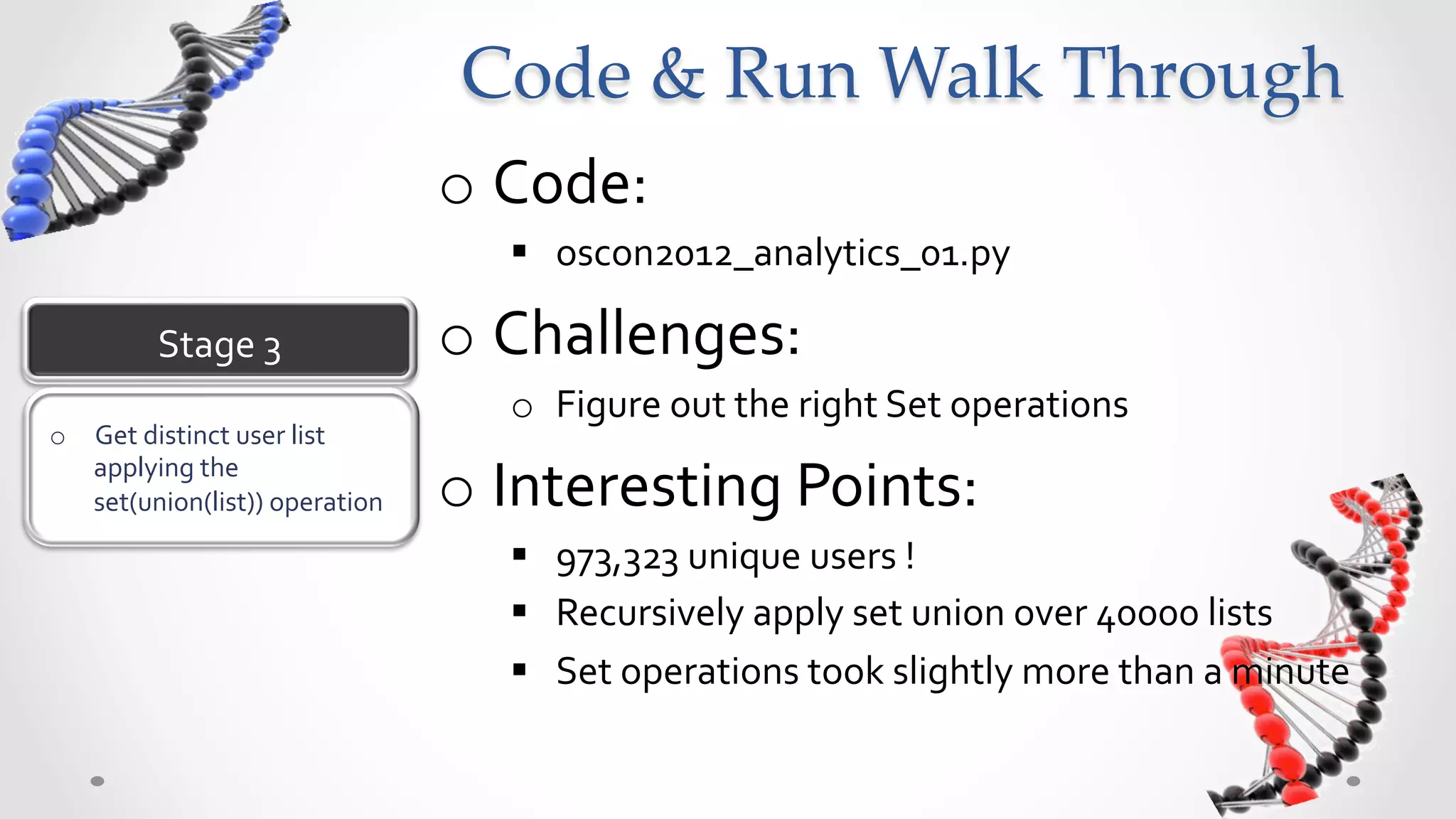

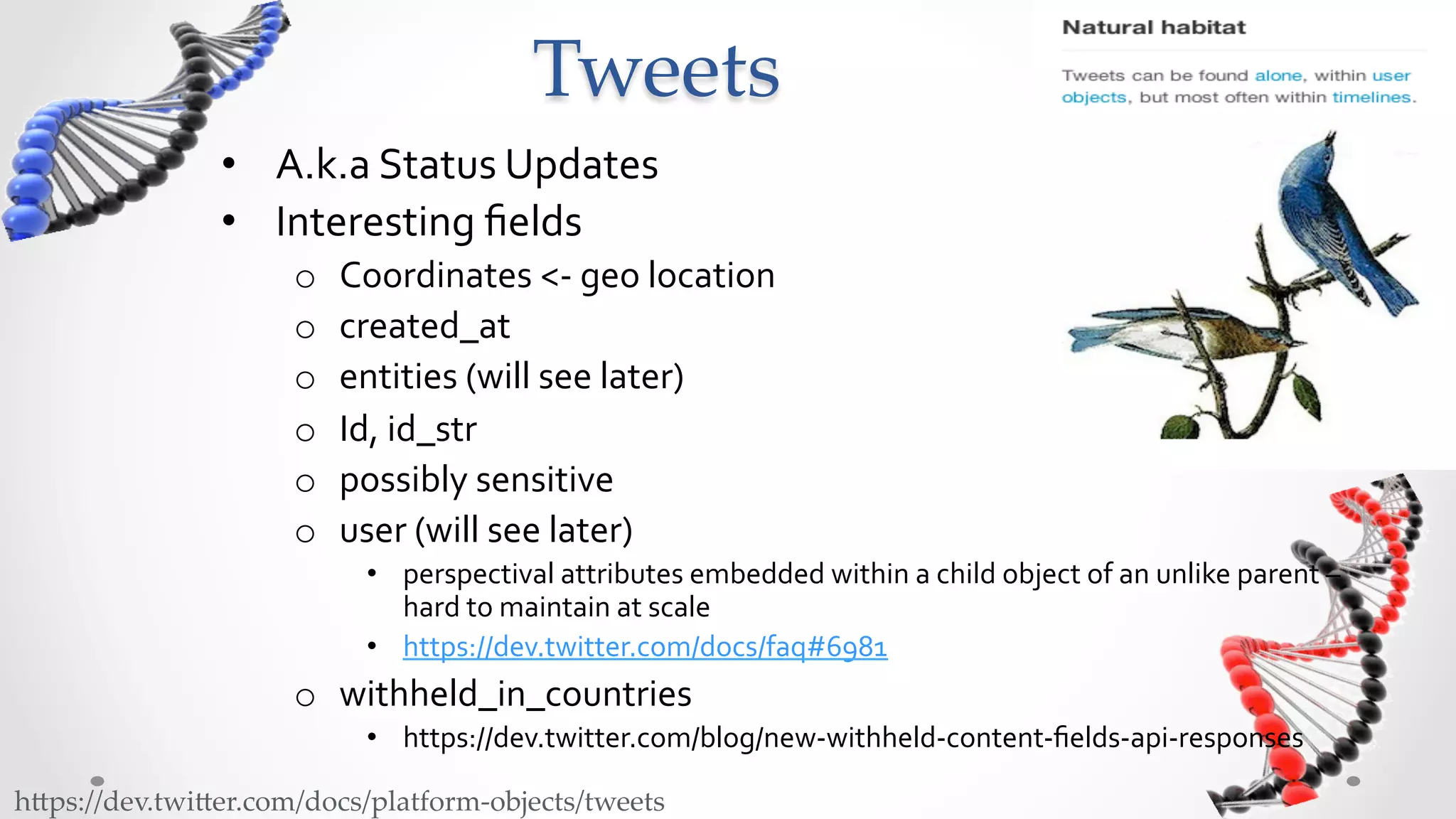

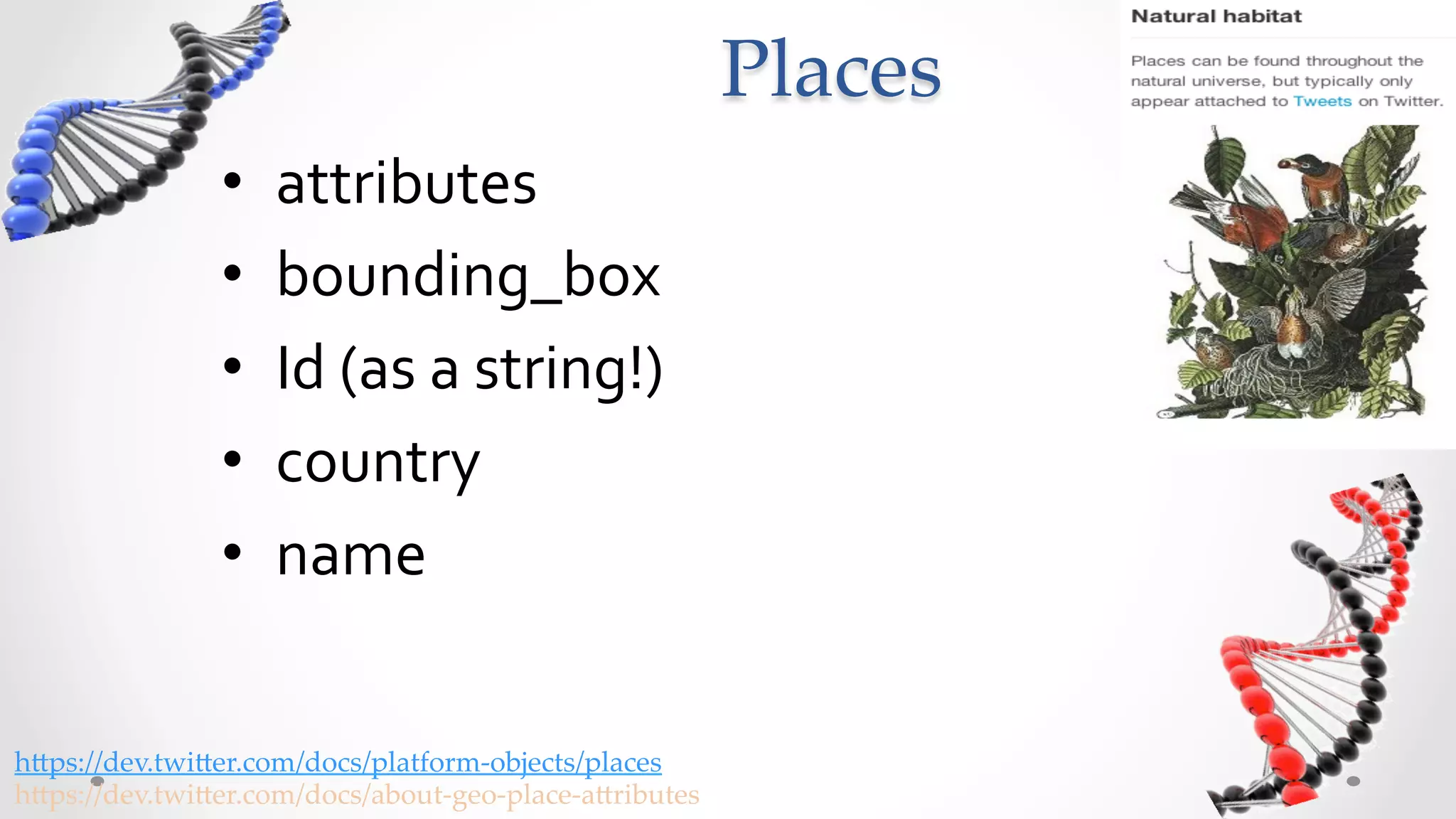

![Places](https://image.slidesharecdn.com/oscon-2012-37-120708013058-phpapp01/75/The-Art-of-Social-Media-Analysis-with-Twitter-Python-56-2048.jpg)

• Can search for tweets near a place like so:

• Get latlong of conv center [45.52929,-122.66289]

o Tweets near that place

• Tweets near San Jose [37.395715,-122.102308]

• We will not see further here. But very useful
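The deck does not go further into place search, so the following is only an illustrative sketch of the "tweets near that place" idea, done locally rather than through the API. It assumes tweets have already been collected with a latitude/longitude pair attached; the sample tweets, the `coords` field name, and both helper functions are hypothetical. The two coordinates are the ones given on the slide.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) pairs in degrees."""
    lat1, lon1 = map(radians, a)
    lat2, lon2 = map(radians, b)
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(h))


def tweets_near(tweets, center, radius_km):
    """Keep only tweets whose coordinates fall within radius_km of center."""
    return [t for t in tweets if haversine_km(t["coords"], center) <= radius_km]


CONV_CENTER = (45.52929, -122.66289)   # from the slide
SAN_JOSE = (37.395715, -122.102308)    # from the slide

# Made-up sample data for illustration only.
sample = [
    {"text": "at the conference", "coords": (45.5300, -122.6630)},
    {"text": "far away", "coords": SAN_JOSE},
]

nearby = tweets_near(sample, CONV_CENTER, radius_km=5)
print([t["text"] for t in nearby])
```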