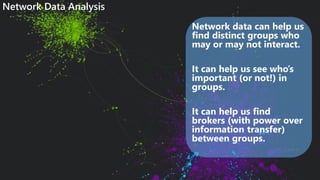

This document discusses the collection and analysis of social media data: its nature, its value, and three ways of obtaining it (database dumps, APIs, and web scraping). It highlights the challenge of how representative social media data are, and surveys analysis techniques including text analysis, topic modeling, and network analysis. It also covers survival analysis as applied to social media behavior and lists Python packages for carrying out these tasks.