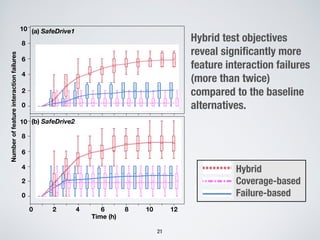

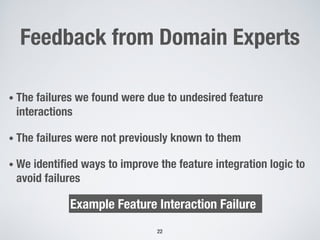

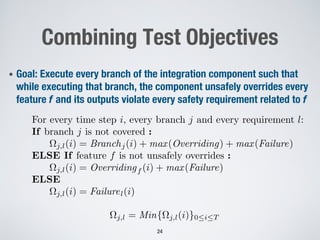

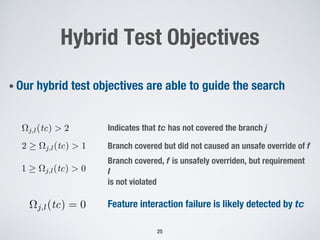

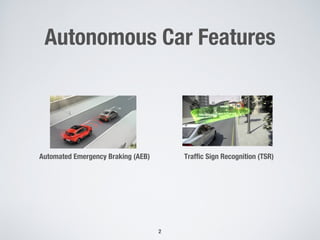

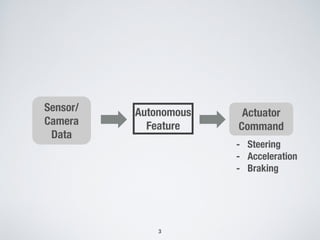

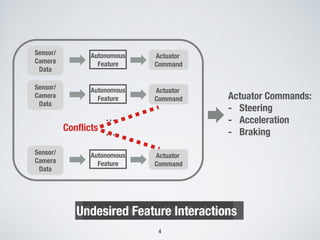

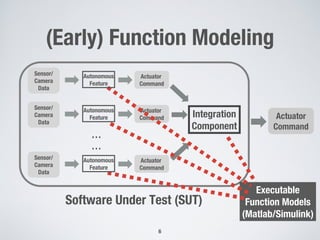

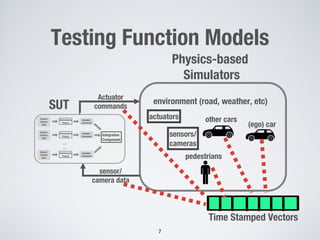

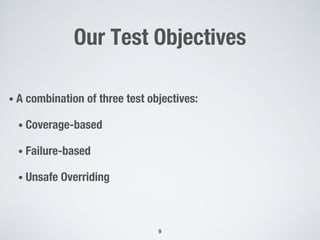

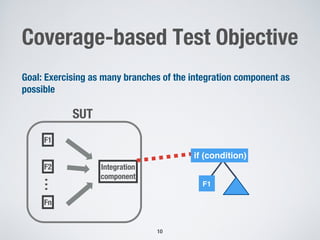

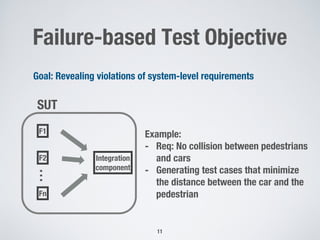

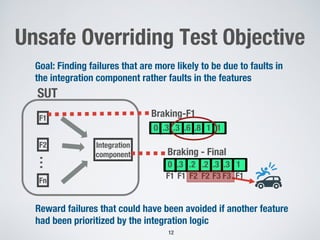

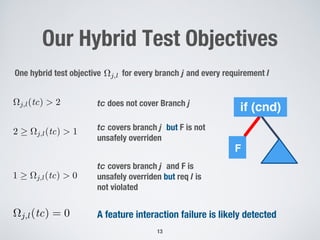

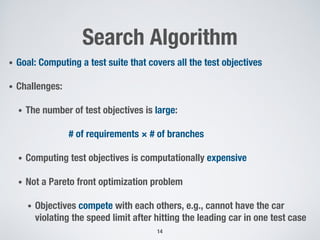

This document proposes a search-based testing approach that automatically detects undesired feature interactions in self-driving systems during early development stages. It defines hybrid test objectives that combine coverage-based, failure-based, and unsafe-overriding criteria. A tailored many-objective search algorithm then generates test cases that satisfy these objectives. An empirical evaluation on two industrial case-study systems found that the hybrid objectives revealed significantly more feature interaction failures than the baseline objectives. Domain experts confirmed that the identified failures were previously unknown and suggested ways to improve the feature integration logic.
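To make the idea of a hybrid objective concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each simulated test run yields three non-negative distances: a branch distance for one integration-logic branch, a distance to an unsafe override of a feature's output, and a distance to violating one requirement. The sketch layers them so that coverage is pursued first, then unsafe overriding, then failure. The names `Observation`, `hybrid_objective`, and the `d / (d + 1)` normalization are hypothetical choices, not the exact formulation used in the study.

```python
from dataclasses import dataclass


def normalize(d: float) -> float:
    """Map a non-negative distance into [0, 1) so that the layers cannot overlap."""
    return d / (d + 1.0)


@dataclass
class Observation:
    # Hypothetical per-run measurements; each is 0 once its condition holds.
    branch_distance: float    # 0 when the targeted integration-logic branch is executed
    override_distance: float  # 0 when a feature's output is unsafely overridden
    failure_distance: float   # 0 when the targeted requirement is violated


def hybrid_objective(obs: Observation) -> float:
    """Value to minimize; 0 means branch covered, unsafe override seen, requirement violated."""
    if obs.branch_distance > 0:
        # Branch not yet covered: value lies in (2, 3)
        return 2.0 + normalize(obs.branch_distance)
    if obs.override_distance > 0:
        # Branch covered, but no unsafe override yet: value lies in (1, 2)
        return 1.0 + normalize(obs.override_distance)
    # Branch covered and unsafe override observed: value lies in [0, 1)
    return normalize(obs.failure_distance)


print(hybrid_objective(Observation(3.0, 5.0, 5.0)))  # 2.75
print(hybrid_objective(Observation(0.0, 0.0, 1.0)))  # 0.5
```

Because each layer is confined to its own interval, any test that covers the branch scores better than any test that does not, which is the kind of ordering a hybrid objective of this shape is meant to enforce.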

![MOSA: Many-Objective Search-based Test Generation. Objective 1 vs. Objective 2: not all (non-dominated) solutions are optimal for the purpose of testing (Panichella et al., ICST 2015)](https://image.slidesharecdn.com/ase2018-shiva-180905140756/85/Testing-Autonomous-Cars-for-Feature-Interaction-Failures-using-Many-Objective-Search-15-320.jpg)
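The point of this slide can be checked with a small sketch of Pareto dominance for minimized objectives. Treating each objective as a separate test target (lower is better; 0 would mean the target is reached), the Python below filters a population of two-objective values down to its non-dominated set. The sample values are invented for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """a dominates b (minimization): no worse on every objective, strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points: List[Point]) -> List[Point]:
    """Keep the points that no other point dominates (a point never dominates itself)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective values (distance to target 1, distance to target 2) for five tests
points: List[Point] = [(0.1, 0.9), (0.3, 0.4), (0.5, 0.5), (0.8, 0.05), (0.6, 0.2)]
print(non_dominated(points))  # [(0.1, 0.9), (0.3, 0.4), (0.8, 0.05), (0.6, 0.2)]
```

Four of the five points survive, yet a compromise such as `(0.3, 0.4)` is not the closest to reaching either target, which is why the non-dominated set alone is a weak guide for test generation.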

![MOSA: Many-Objective Search-based Test Generation. Objective 1 vs. Objective 2: not all (non-dominated) solutions are optimal for the purpose of testing; these points are better than others (Panichella et al., ICST 2015)](https://image.slidesharecdn.com/ase2018-shiva-180905140756/85/Testing-Autonomous-Cars-for-Feature-Interaction-Failures-using-Many-Objective-Search-16-320.jpg)
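The "better" points are the ones closest to reaching some individual target. MOSA captures this with a preference criterion: for every target that is still uncovered, the test with the lowest value for that target is preferred and ranked ahead of the ordinary non-dominated fronts. The Python sketch below illustrates only that selection step, with invented sample values. The function name `preferred_front` is hypothetical, and the first-index tie-breaking is a simplification assumed here (the published algorithm breaks ties by preferring the shorter test).

```python
from typing import List, Sequence, Set

# Hypothetical objective values for five tests; lower is better, 0 = target reached
tests: List[Sequence[float]] = [(0.1, 0.9), (0.3, 0.4), (0.5, 0.5), (0.8, 0.05), (0.6, 0.2)]


def preferred_front(population: List[Sequence[float]], uncovered: Set[int]) -> List[int]:
    """Indices of tests that have the lowest value for at least one uncovered objective."""
    best: Set[int] = set()
    for obj in sorted(uncovered):
        # min() keeps the first index among ties; MOSA proper would prefer the shorter test
        best.add(min(range(len(population)), key=lambda i: population[i][obj]))
    return sorted(best)


print(preferred_front(tests, {0, 1}))  # [0, 3]
print(preferred_front(tests, {1}))     # [3]
```

Only the two extreme points are promoted, while the compromise solutions that merely avoid being dominated are left for the regular ranking.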