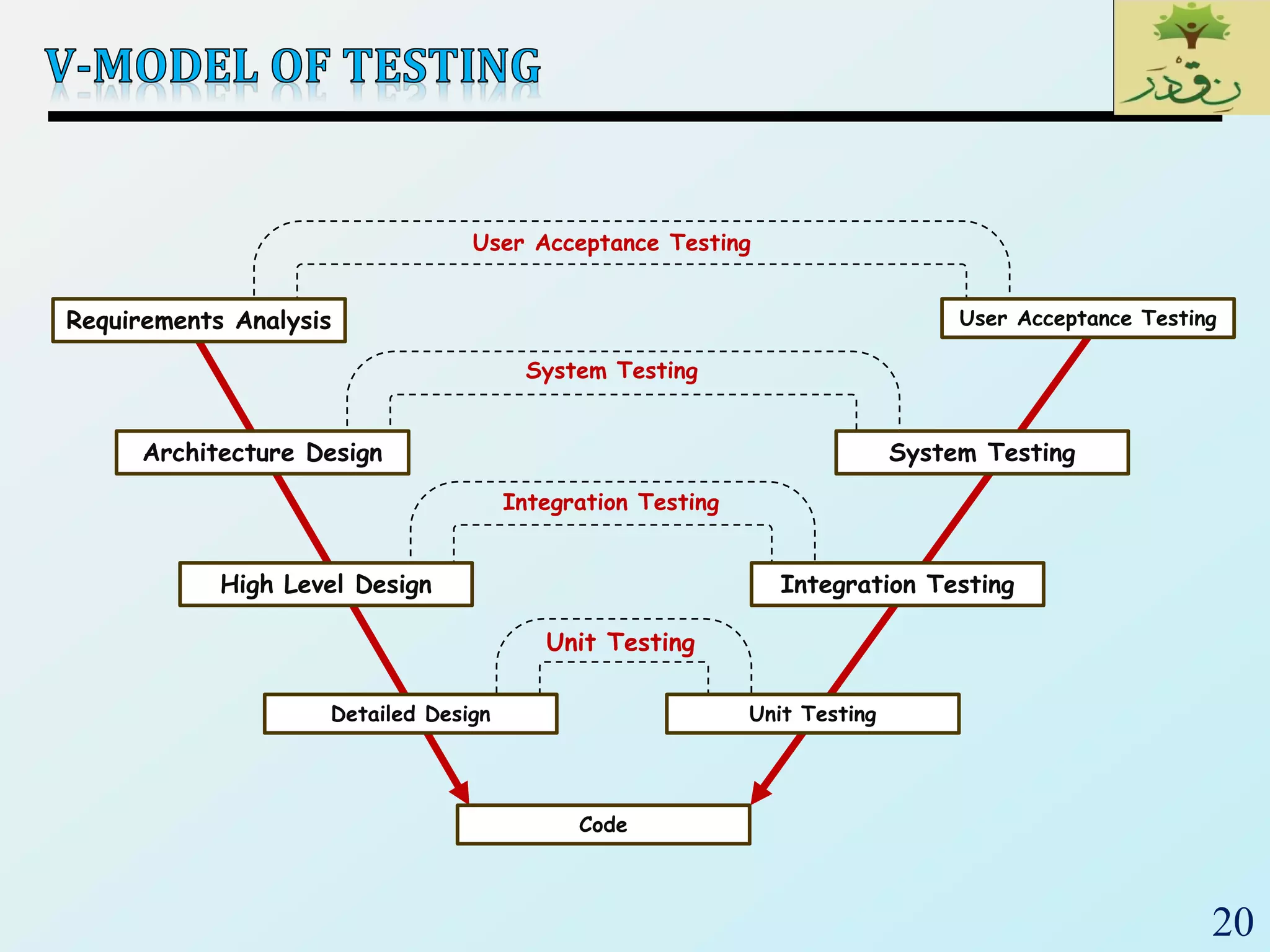

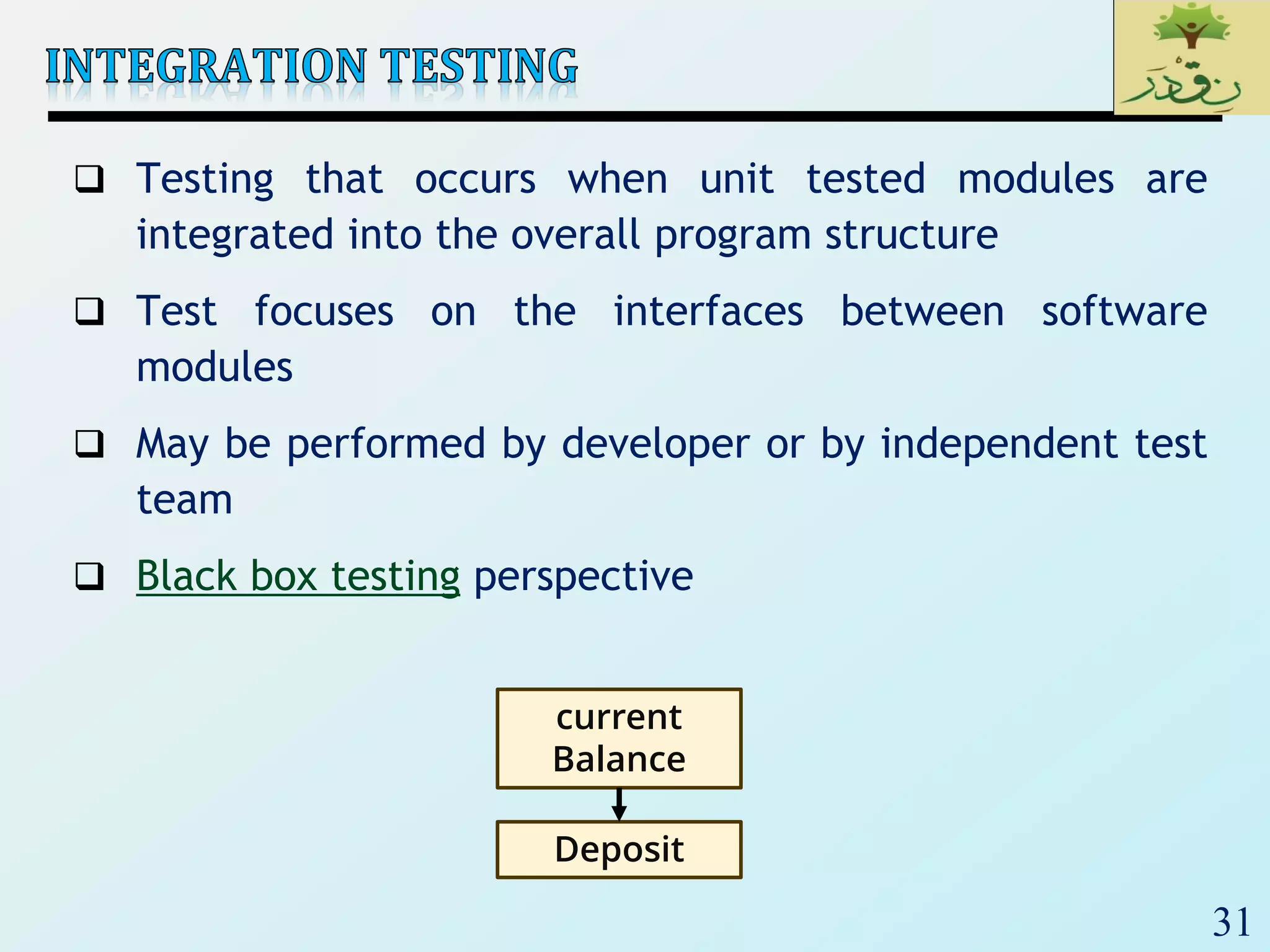

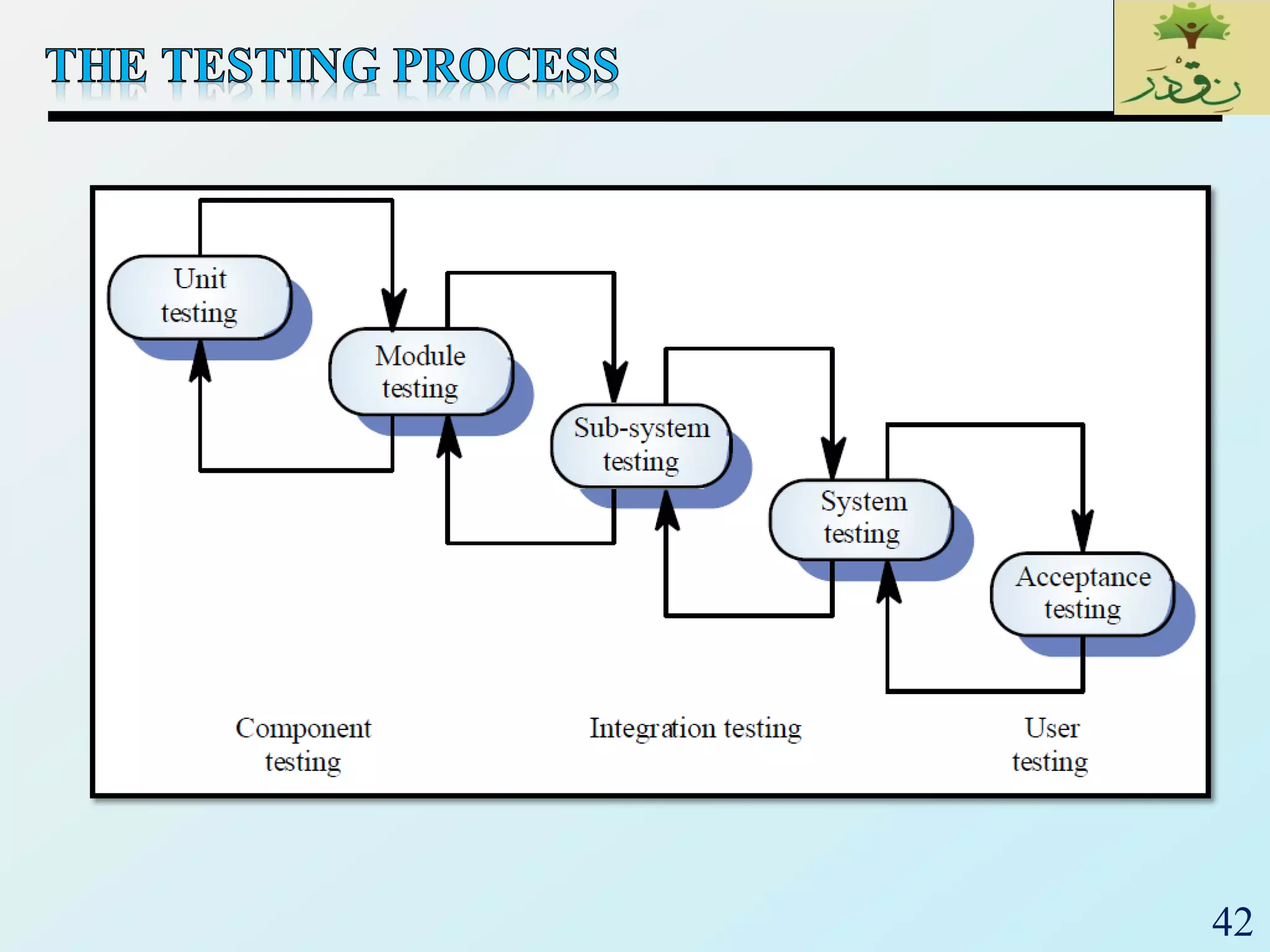

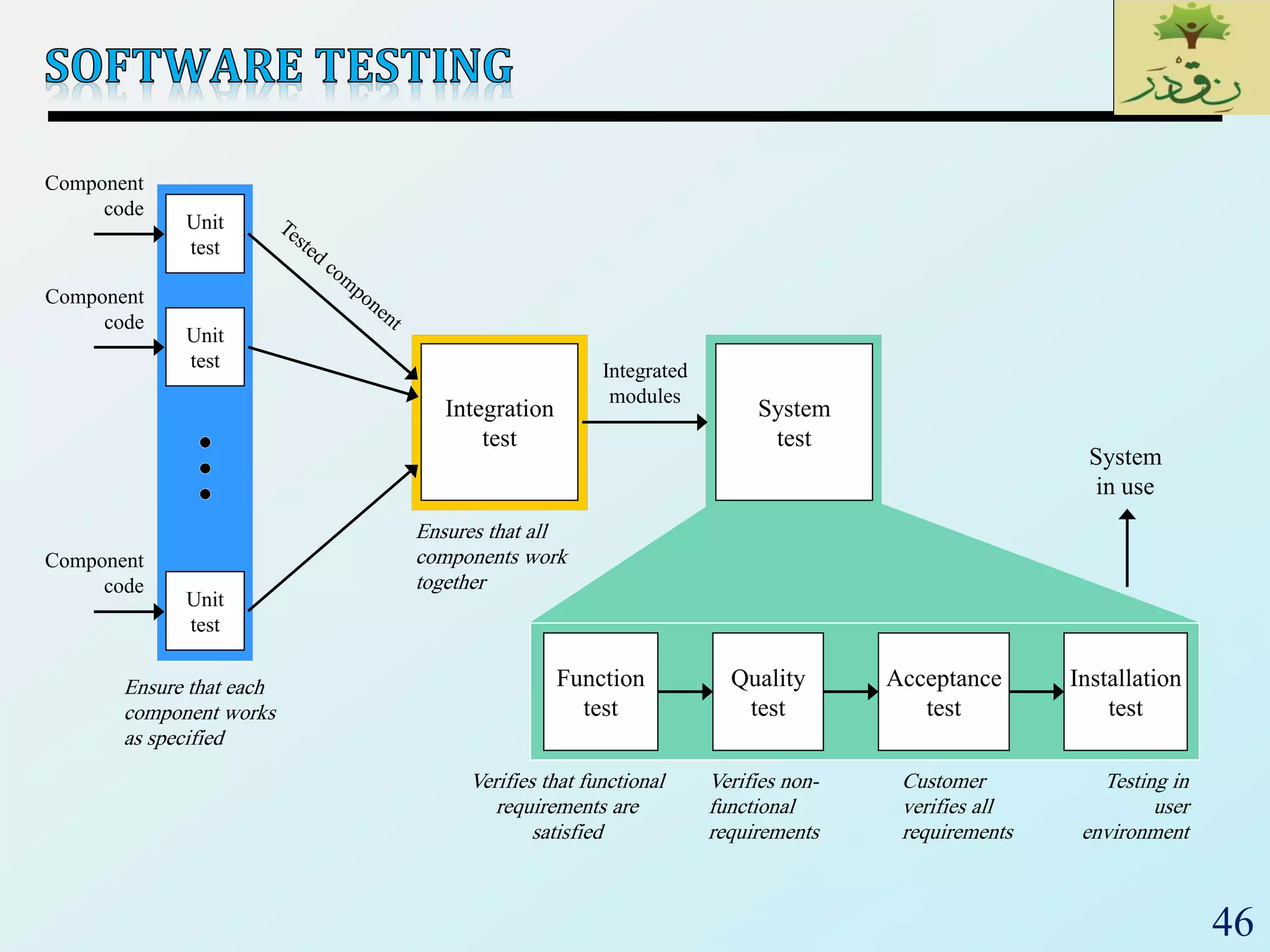

1. The document discusses four levels of software testing: unit, integration, system, and acceptance testing. Unit testing exercises individual program units in isolation, while integration testing exercises modules assembled into subsystems.

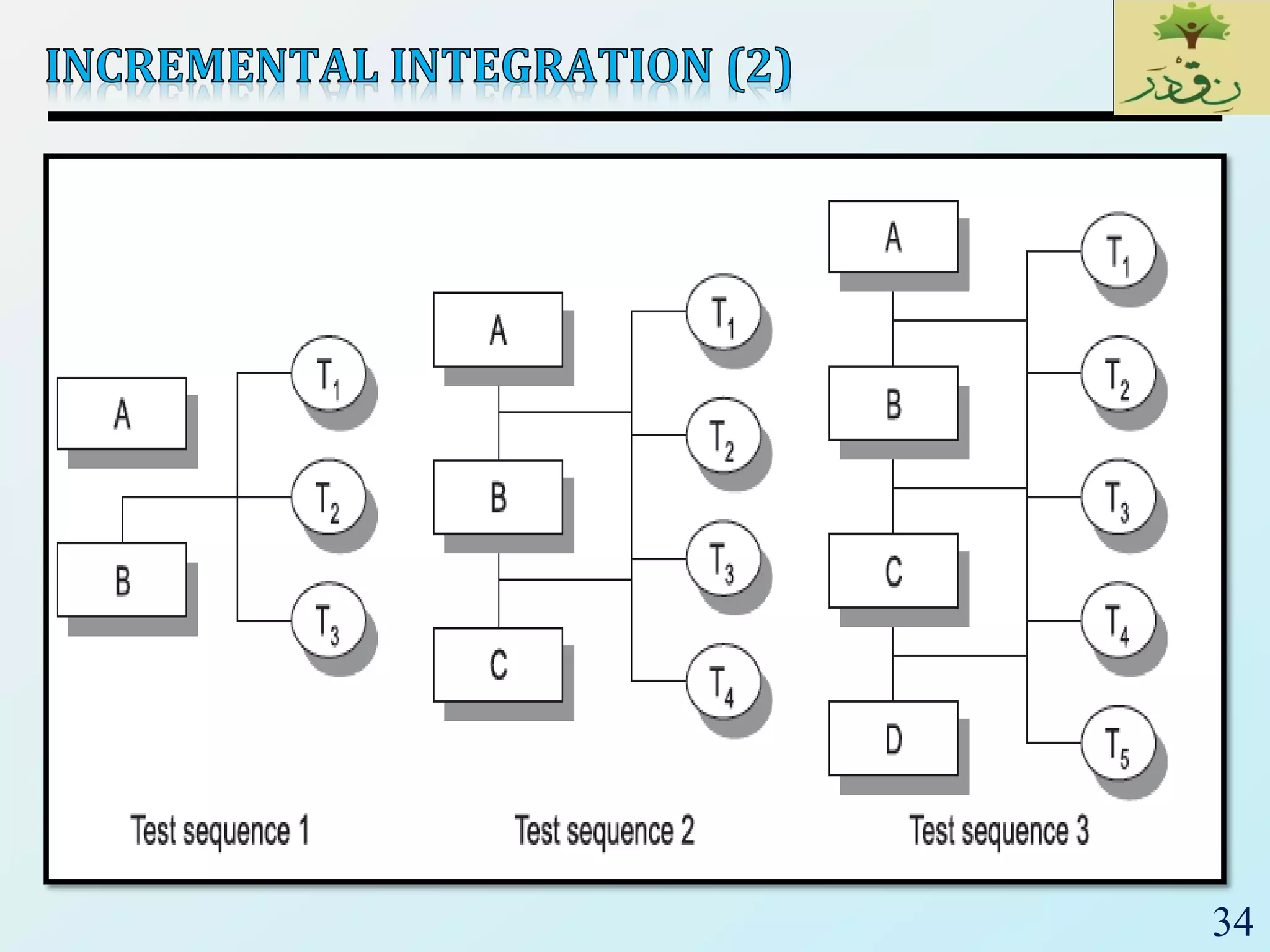

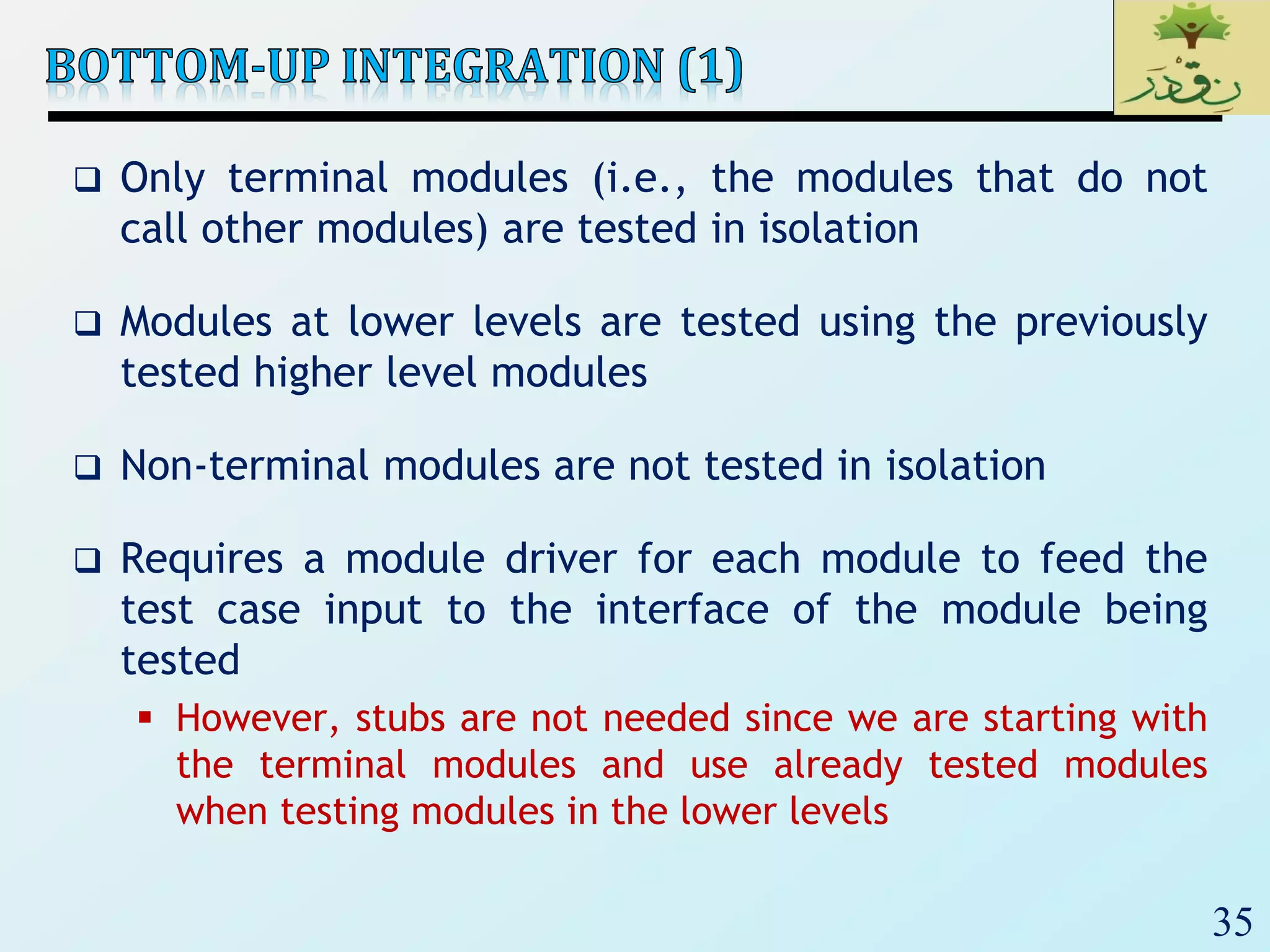

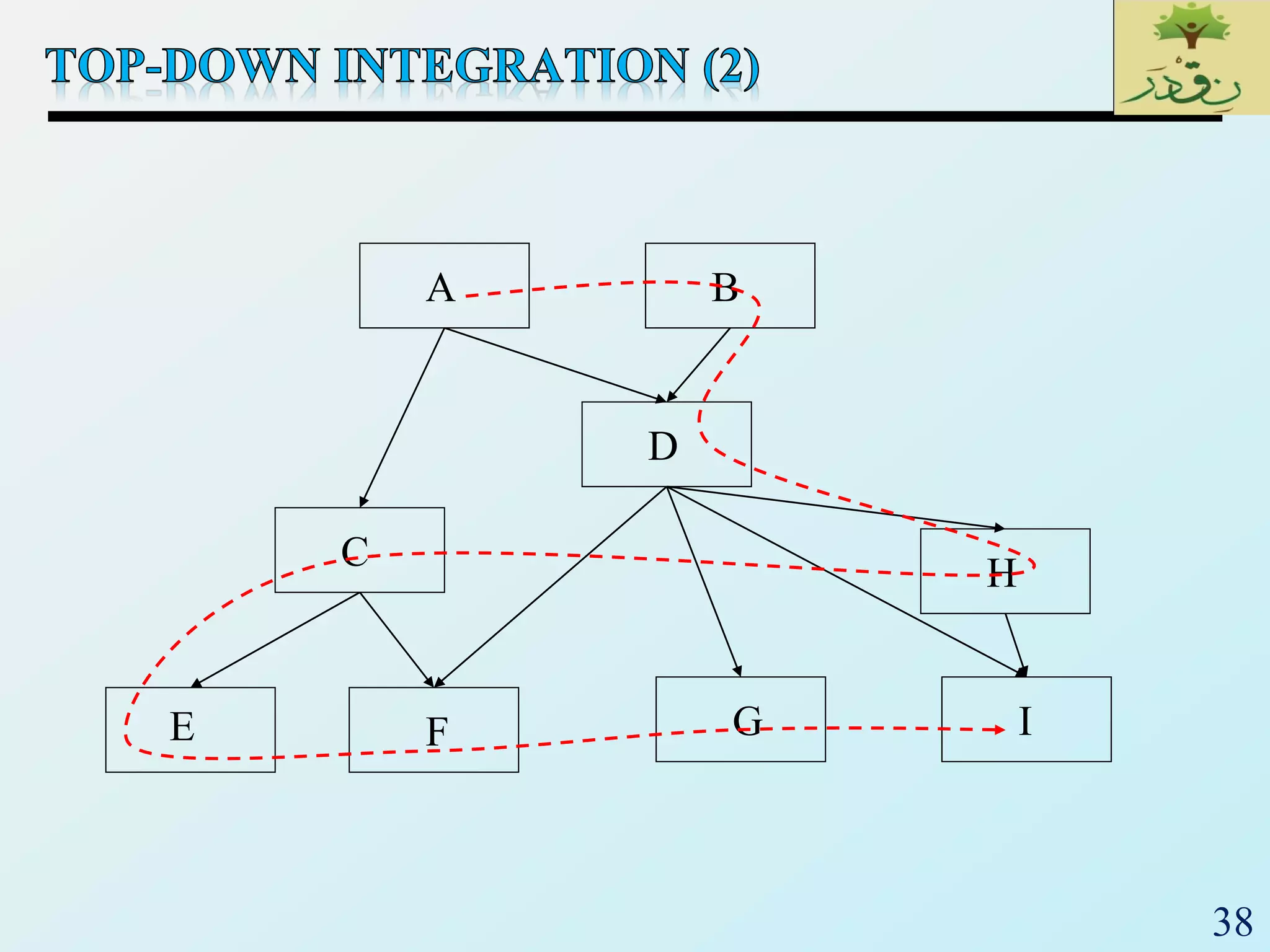

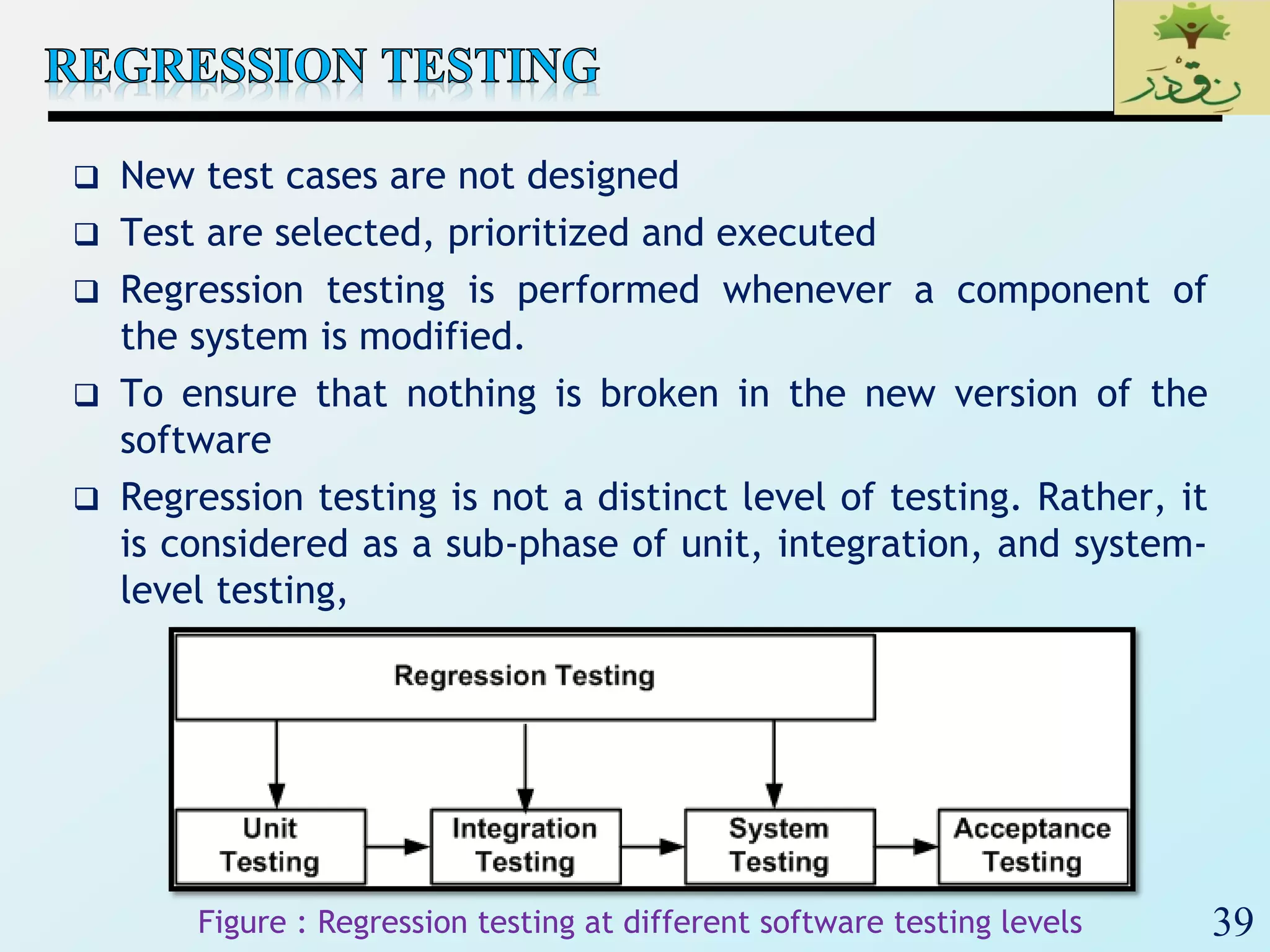

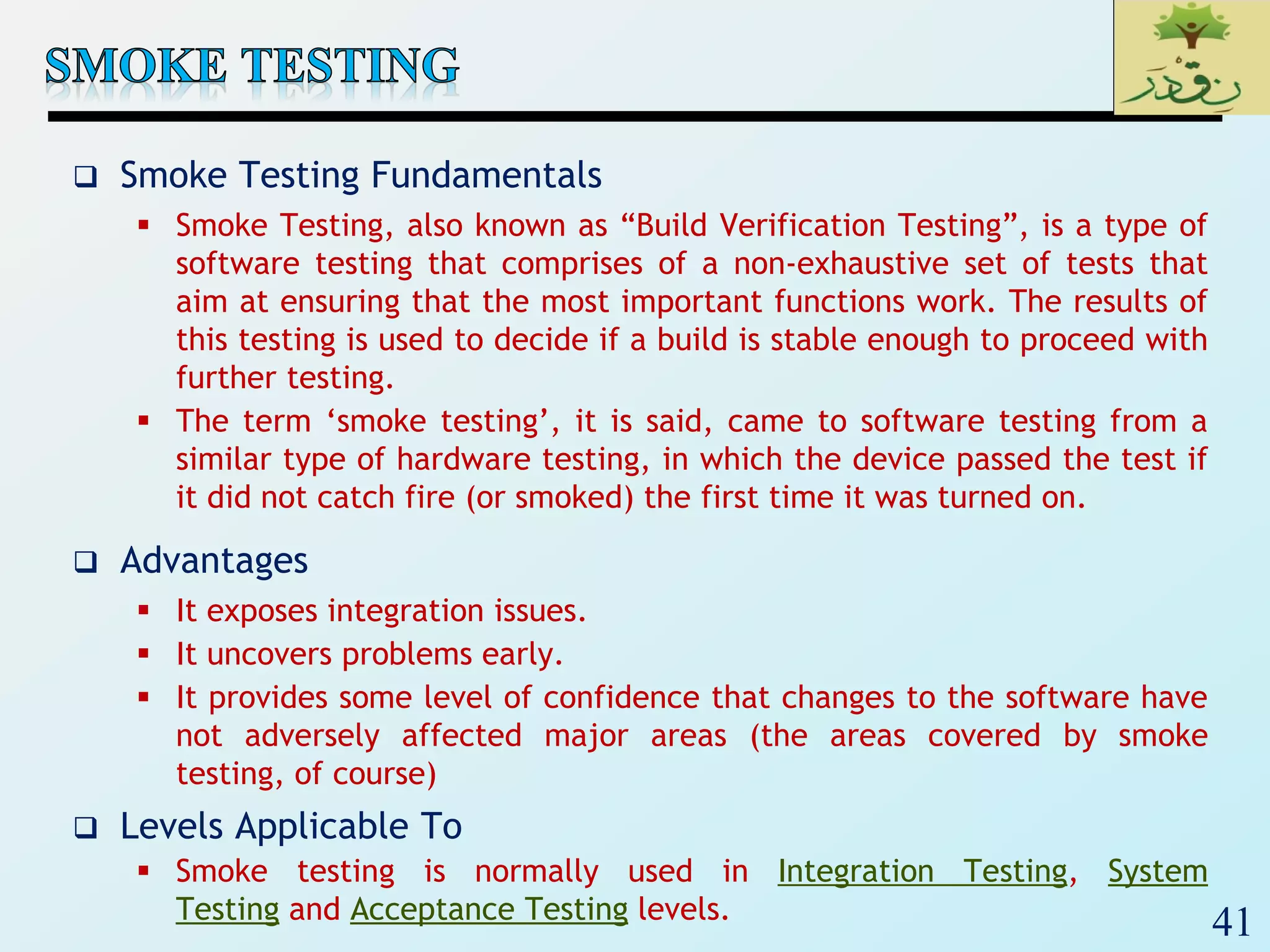

2. The document then gives examples of integration testing strategies (incremental, bottom-up, and top-down) and discusses regression testing. It also defines smoke testing and explains its purpose at the integration, system, and acceptance testing levels.

3. Finally, the document emphasizes the importance of system and acceptance testing to verify functional and non-functional requirements and ensure the system can operate as intended in a real environment.
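The difference between testing a unit in isolation and testing assembled modules can be sketched as follows. This is a minimal illustration only: `parse_amount`, `total`, and both test classes are hypothetical names that do not appear in the slides. The unit test replaces the parser with a stub so that only the summing logic is exercised, while the integration test runs both functions together.

```python
import unittest


def parse_amount(text: str) -> int:
    """Hypothetical low-level unit: convert one text field to an integer."""
    return int(text.strip())


def total(lines, parse=parse_amount) -> int:
    """Hypothetical higher-level unit: sum the parsed value of every line."""
    return sum(parse(line) for line in lines)


class TotalUnitTest(unittest.TestCase):
    def test_total_in_isolation(self):
        # The stub stands in for the real parser, so a failure here
        # can only come from the summing logic in total().
        self.assertEqual(total(["a", "b"], parse=lambda _: 1), 2)


class TotalIntegrationTest(unittest.TestCase):
    def test_total_with_real_parser(self):
        # Both units are assembled; this also checks that they agree
        # on the format of the data passed between them.
        self.assertEqual(total([" 3", "4 "]), 7)
```

Saved to a file, the tests can be run with `python -m unittest <file>`.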

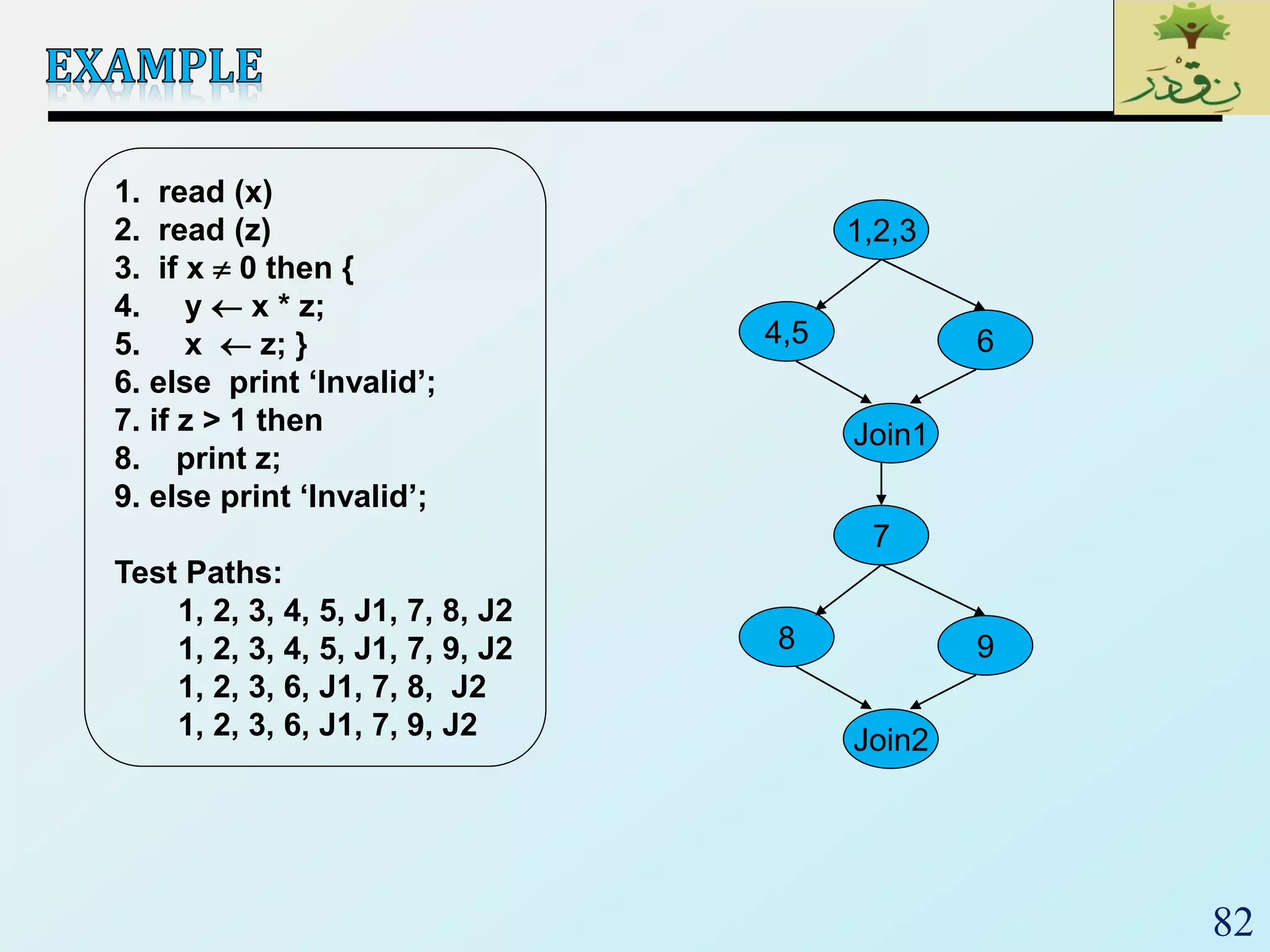

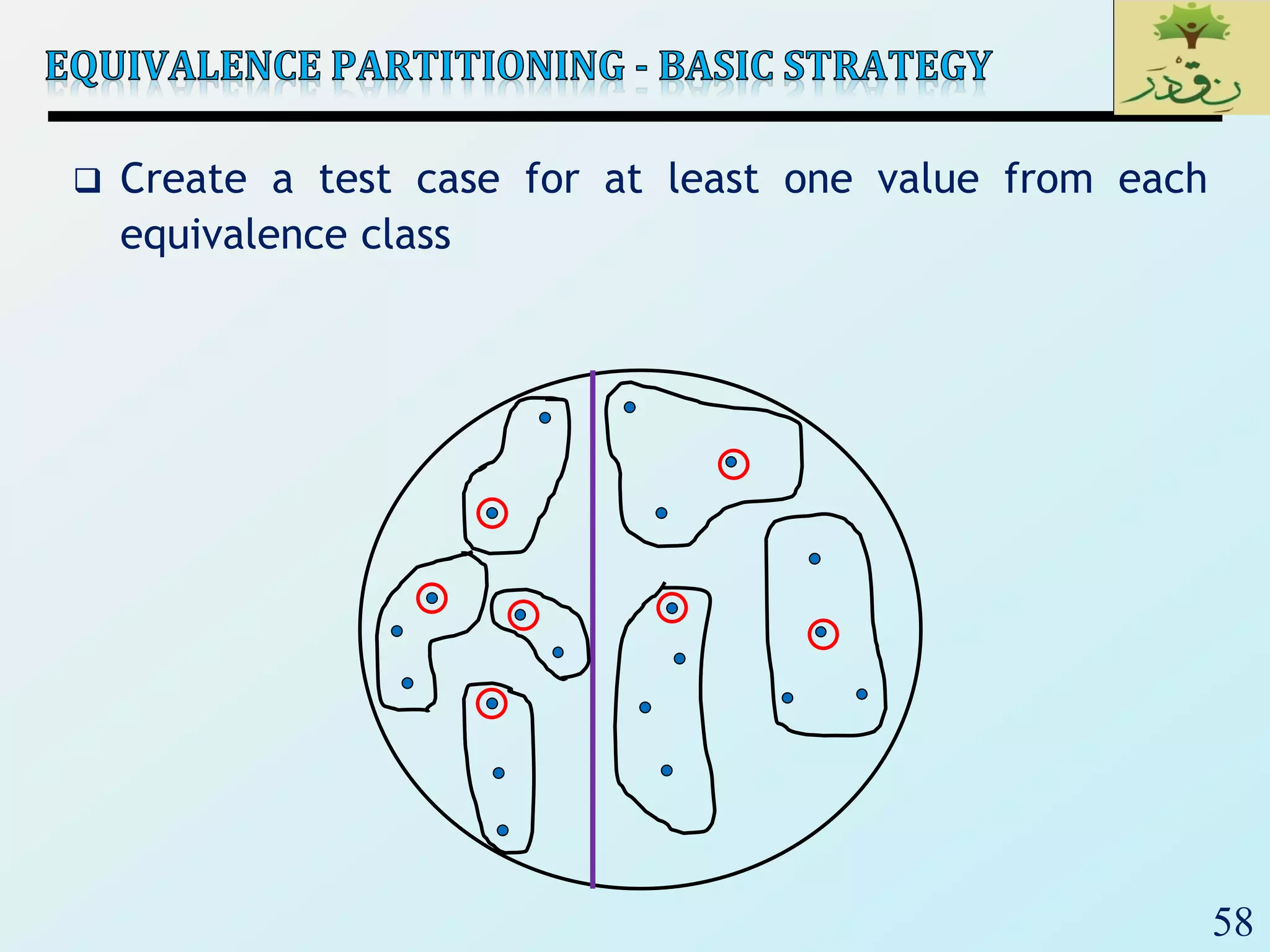

![60

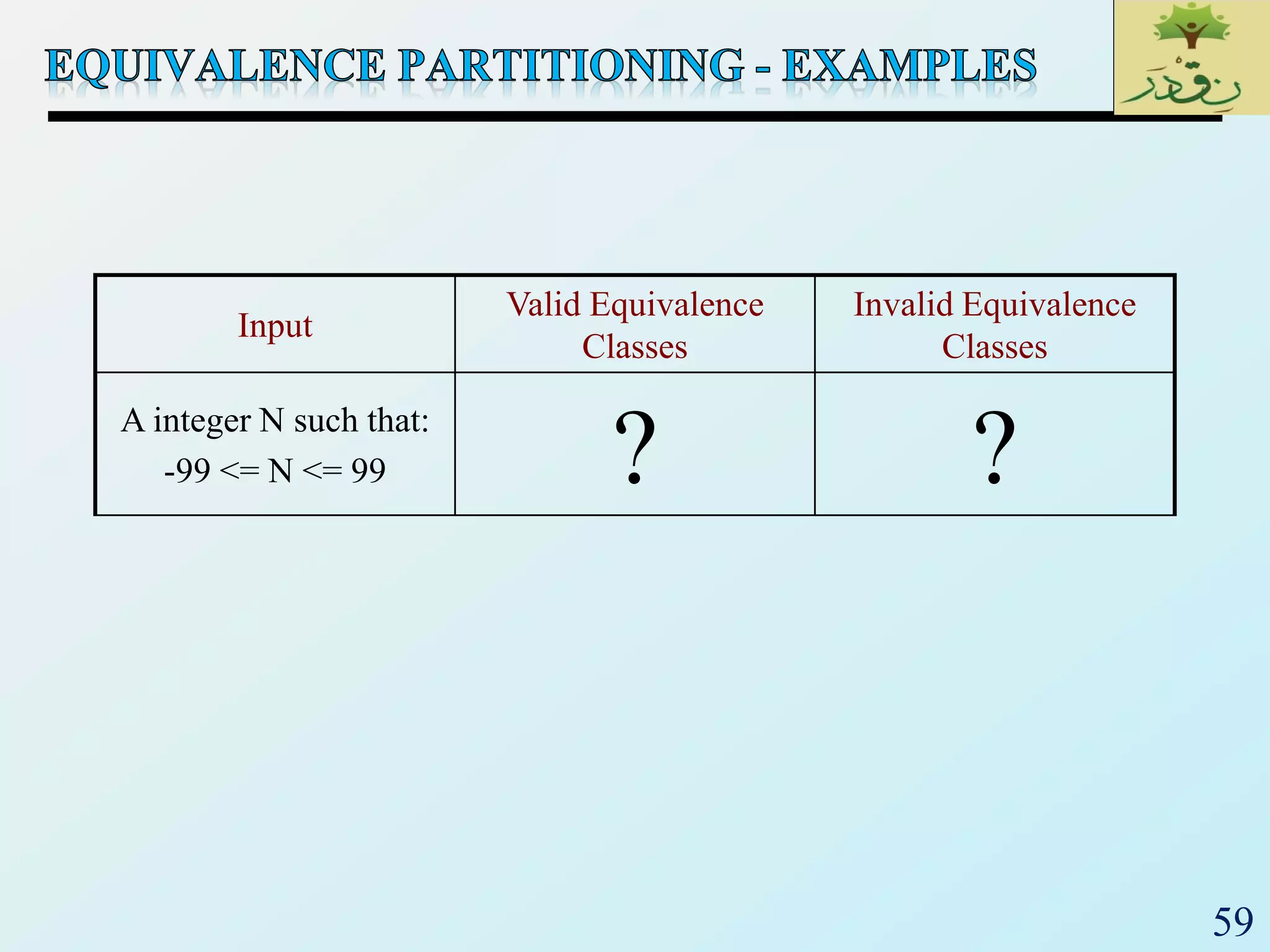

Input

Valid Equivalence

Classes

Invalid Equivalence

Classes

A integer N such that:

-99 <= N <= 99

[-99, -10]

[-9, -1]

0

[1, 9]

[10, 99]

?](https://image.slidesharecdn.com/se2018lec19softwaretesting-180322170944/75/SE2018_Lec-19_-Software-Testing-60-2048.jpg)

![61

Input

Valid Equivalence

Classes

Invalid Equivalence

Classes

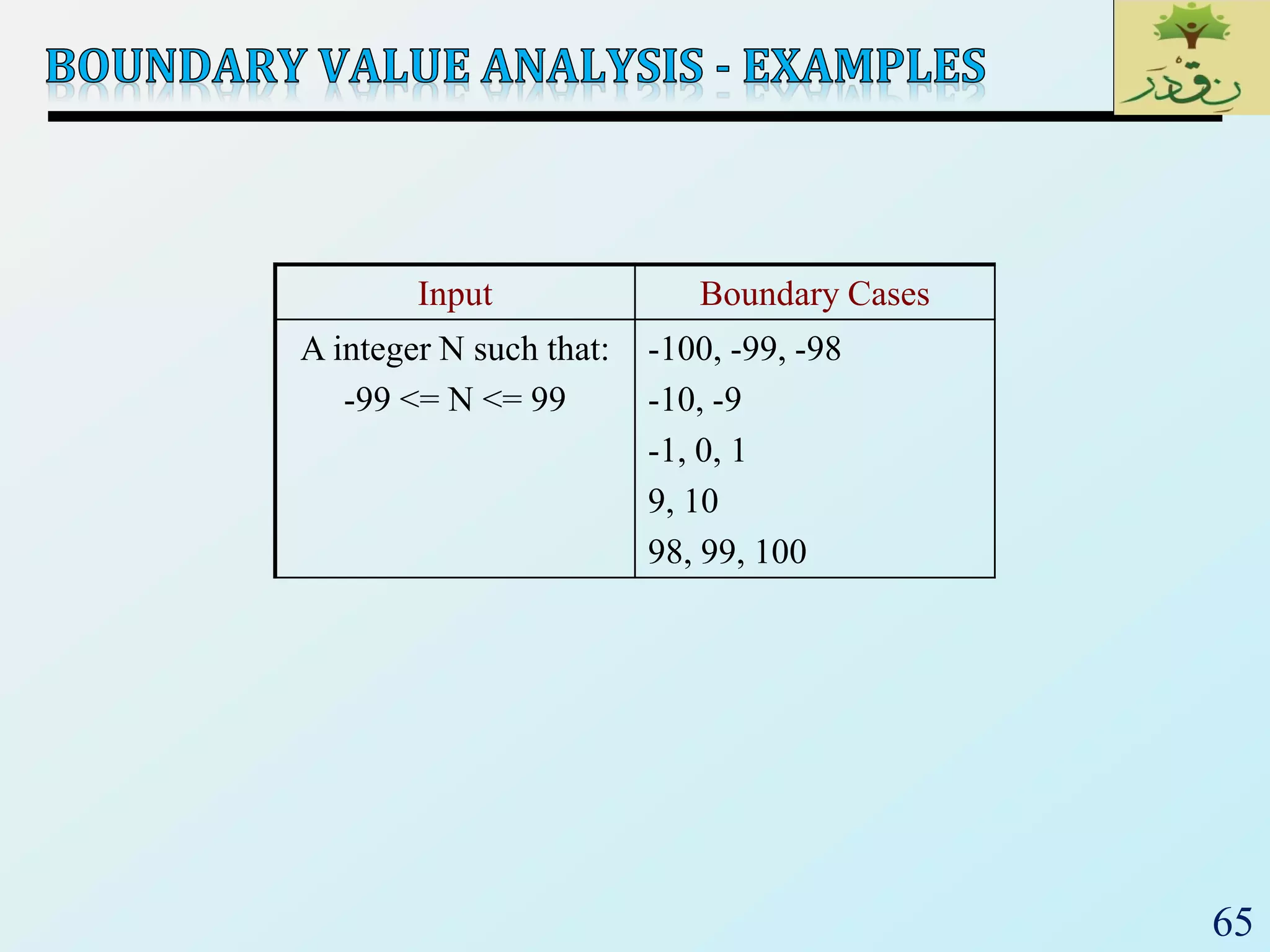

A integer N such that:

-99 <= N <= 99

[-99, -10]

[-9, -1]

0

[1, 9]

[10, 99]

< -99

> 99

Malformed numbers

{12-, 1-2-3, …}

Non-numeric strings

{junk, 1E2, $13}

Empty value](https://image.slidesharecdn.com/se2018lec19softwaretesting-180322170944/75/SE2018_Lec-19_-Software-Testing-61-2048.jpg)
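The equivalence classes listed for the integer input N can be turned into a small set of test cases by picking one representative per class. The sketch below is an illustration under stated assumptions, not code from the slides: the function `classify` and the table `REPRESENTATIVES` are hypothetical, and the rule used to separate "malformed numbers" from "non-numeric strings" (only digits and minus signs versus any other character) is an assumption chosen so that the slide's own examples land in the classes the slide assigns them.

```python
import re


def classify(raw: str) -> str:
    """Return the equivalence class that the raw input text falls into."""
    text = raw.strip()
    if text == "":
        return "invalid: empty value"
    # Assumption: any character other than a digit or '-' makes it non-numeric.
    if not re.fullmatch(r"[0-9-]+", text):
        return "invalid: non-numeric string"
    # Only digits and '-' remain; anything that is not an optional
    # leading '-' followed by digits is treated as malformed.
    if not re.fullmatch(r"-?[0-9]+", text):
        return "invalid: malformed number"
    n = int(text)
    if n < -99:
        return "invalid: < -99"
    if n > 99:
        return "invalid: > 99"
    if n <= -10:
        return "valid: [-99, -10]"
    if n <= -1:
        return "valid: [-9, -1]"
    if n == 0:
        return "valid: 0"
    if n <= 9:
        return "valid: [1, 9]"
    return "valid: [10, 99]"


# One or more representative inputs per class; the invalid examples
# "12-", "1-2-3", "junk", "1E2" and "$13" are taken from the slide.
REPRESENTATIVES = {
    "-42": "valid: [-99, -10]",
    "-5": "valid: [-9, -1]",
    "0": "valid: 0",
    "7": "valid: [1, 9]",
    "55": "valid: [10, 99]",
    "-100": "invalid: < -99",
    "100": "invalid: > 99",
    "12-": "invalid: malformed number",
    "1-2-3": "invalid: malformed number",
    "junk": "invalid: non-numeric string",
    "1E2": "invalid: non-numeric string",
    "$13": "invalid: non-numeric string",
    "": "invalid: empty value",
}

for raw, expected in REPRESENTATIVES.items():
    assert classify(raw) == expected, (raw, classify(raw), expected)
```

Because every value in a class is expected to be handled the same way, one representative per class is usually enough; the range endpoints (-99, -10, -9, -1, 1, 9, 10, 99) are natural extra inputs to add alongside these.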