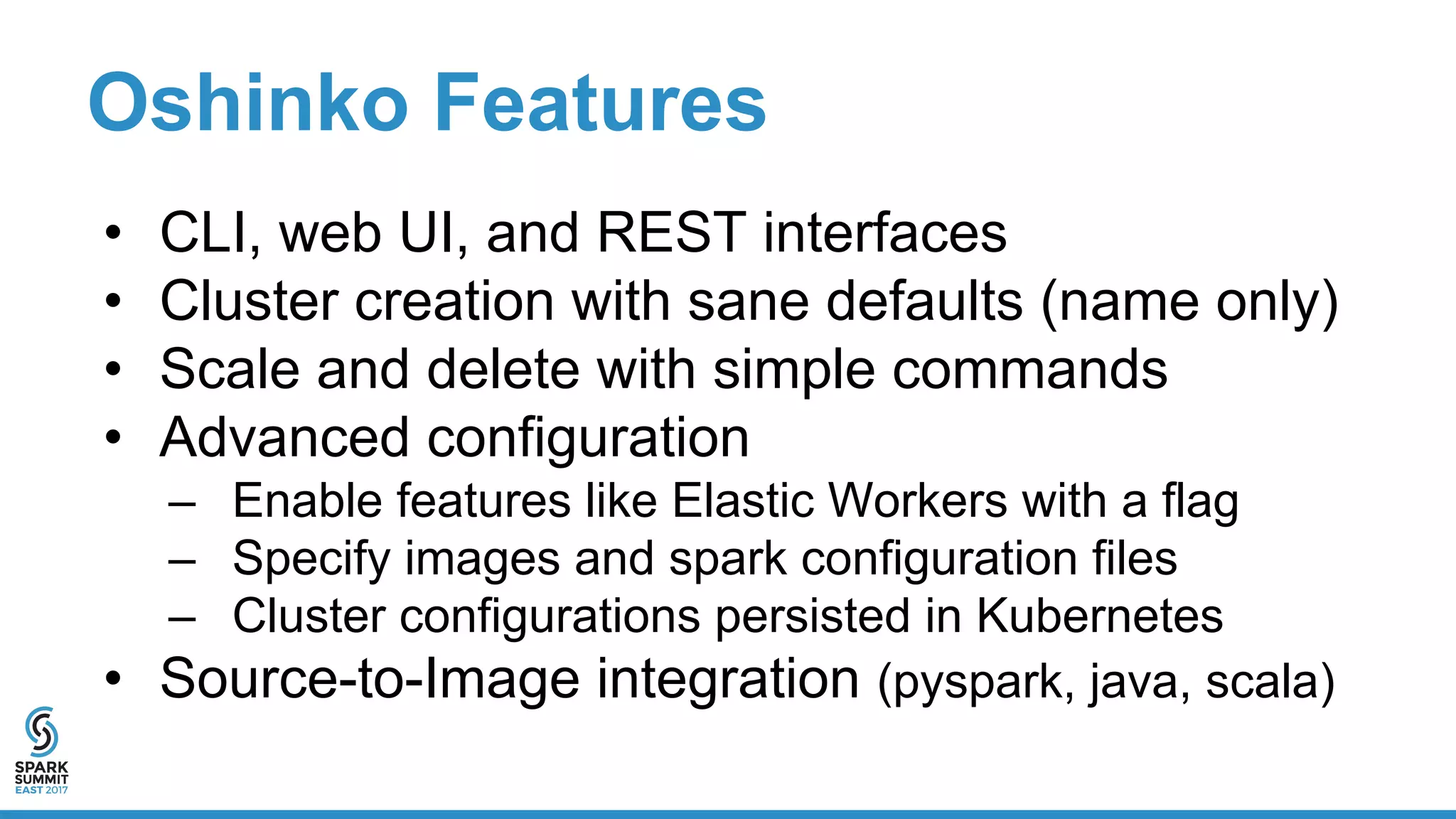

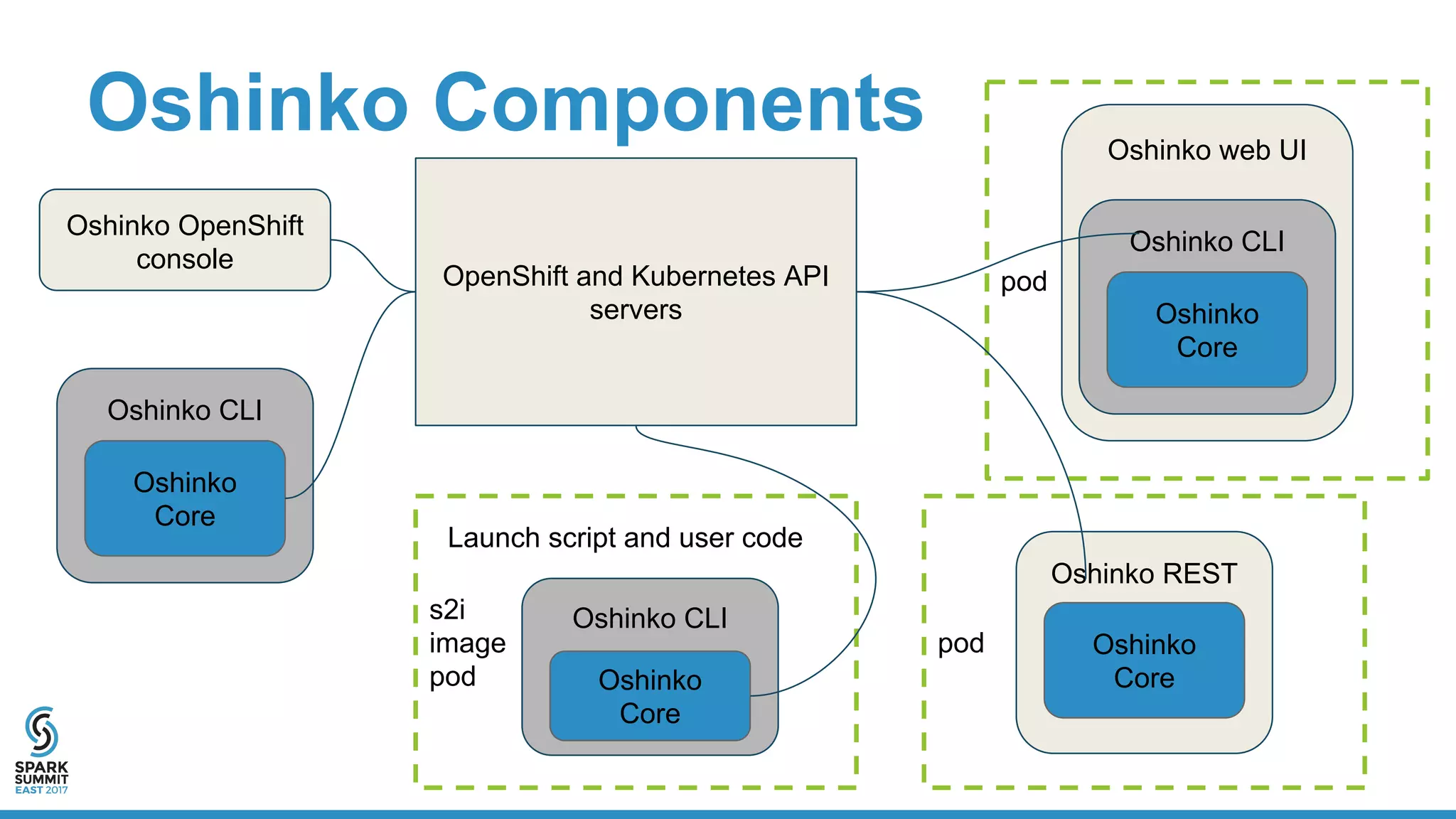

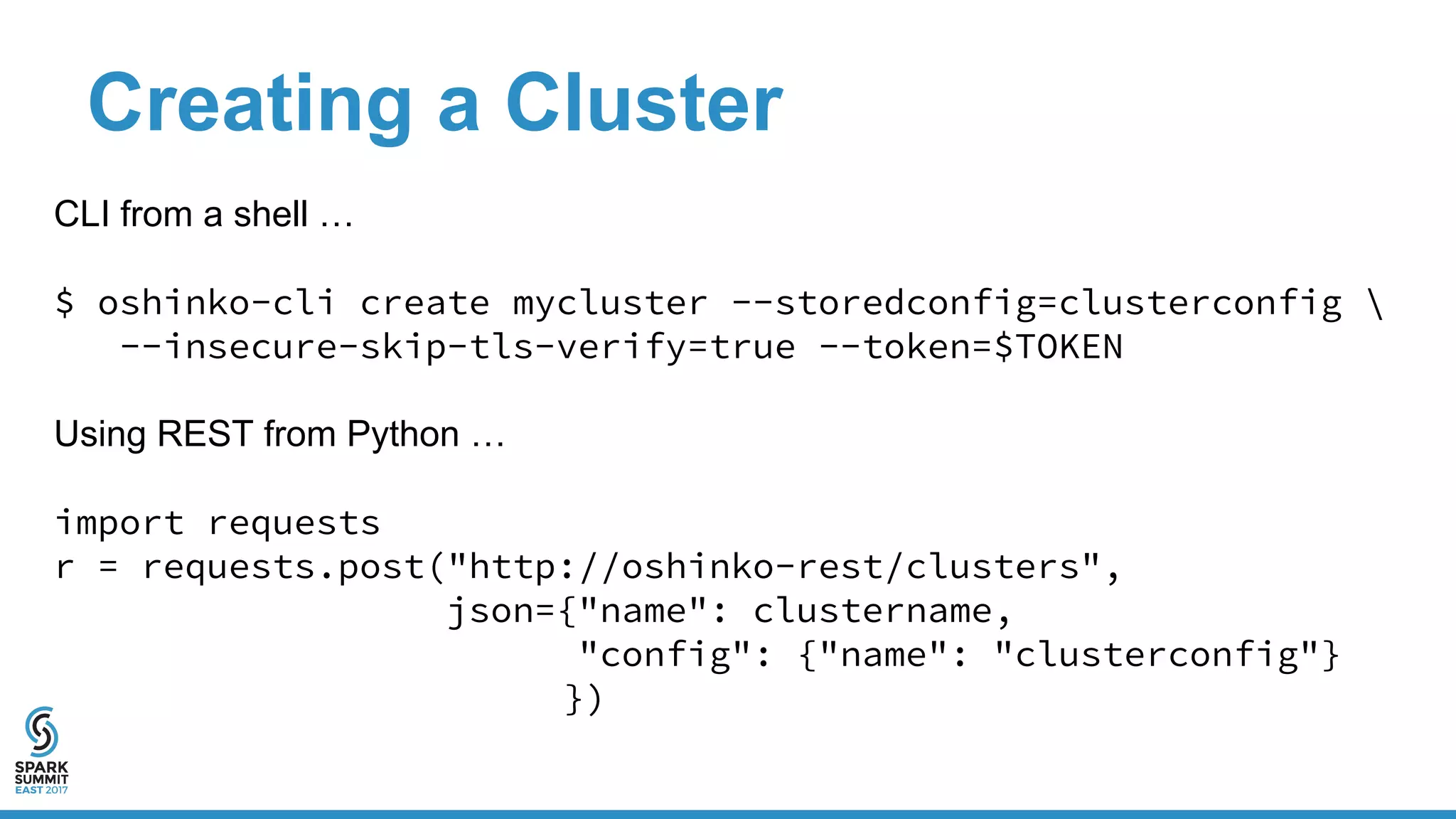

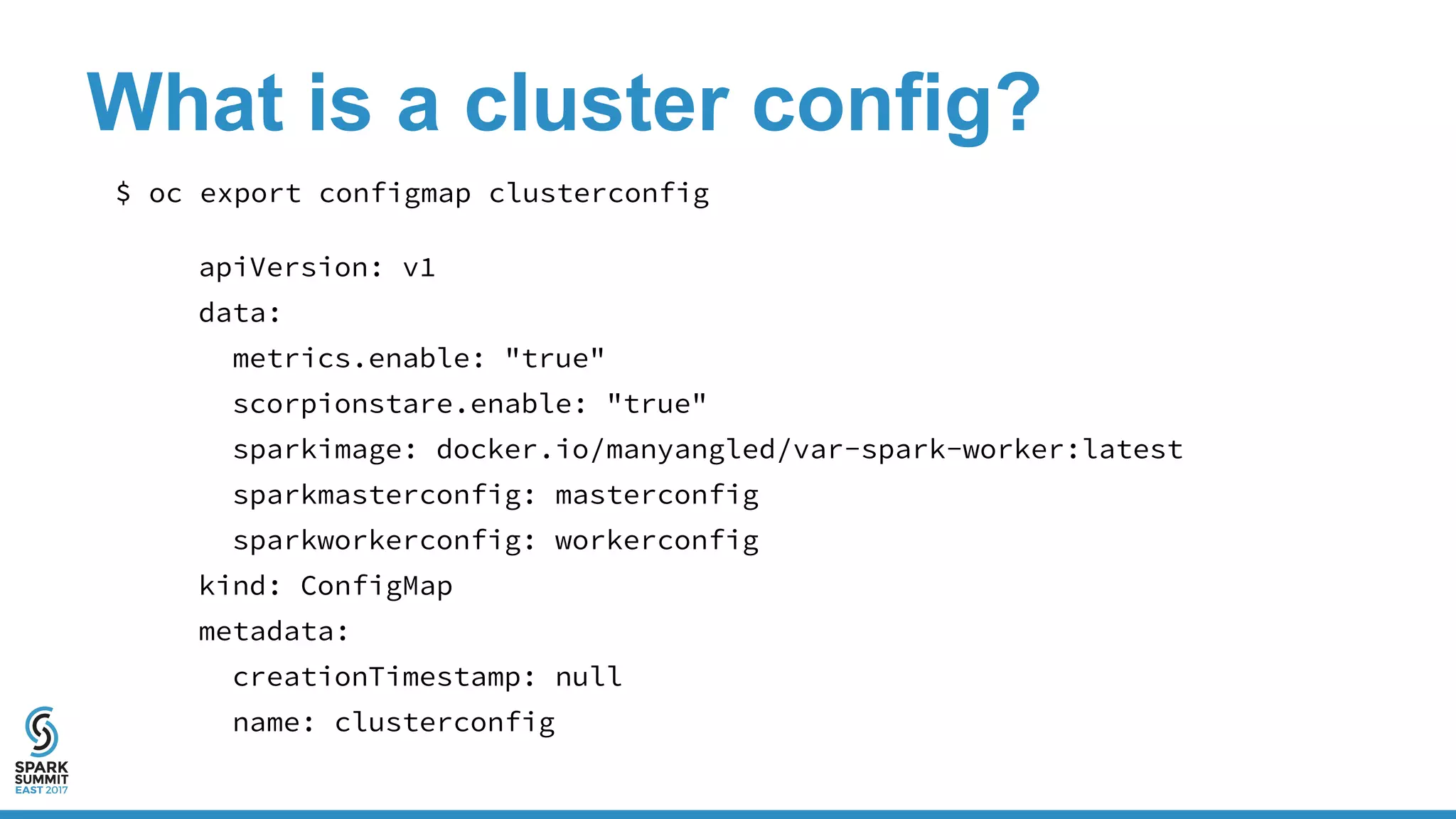

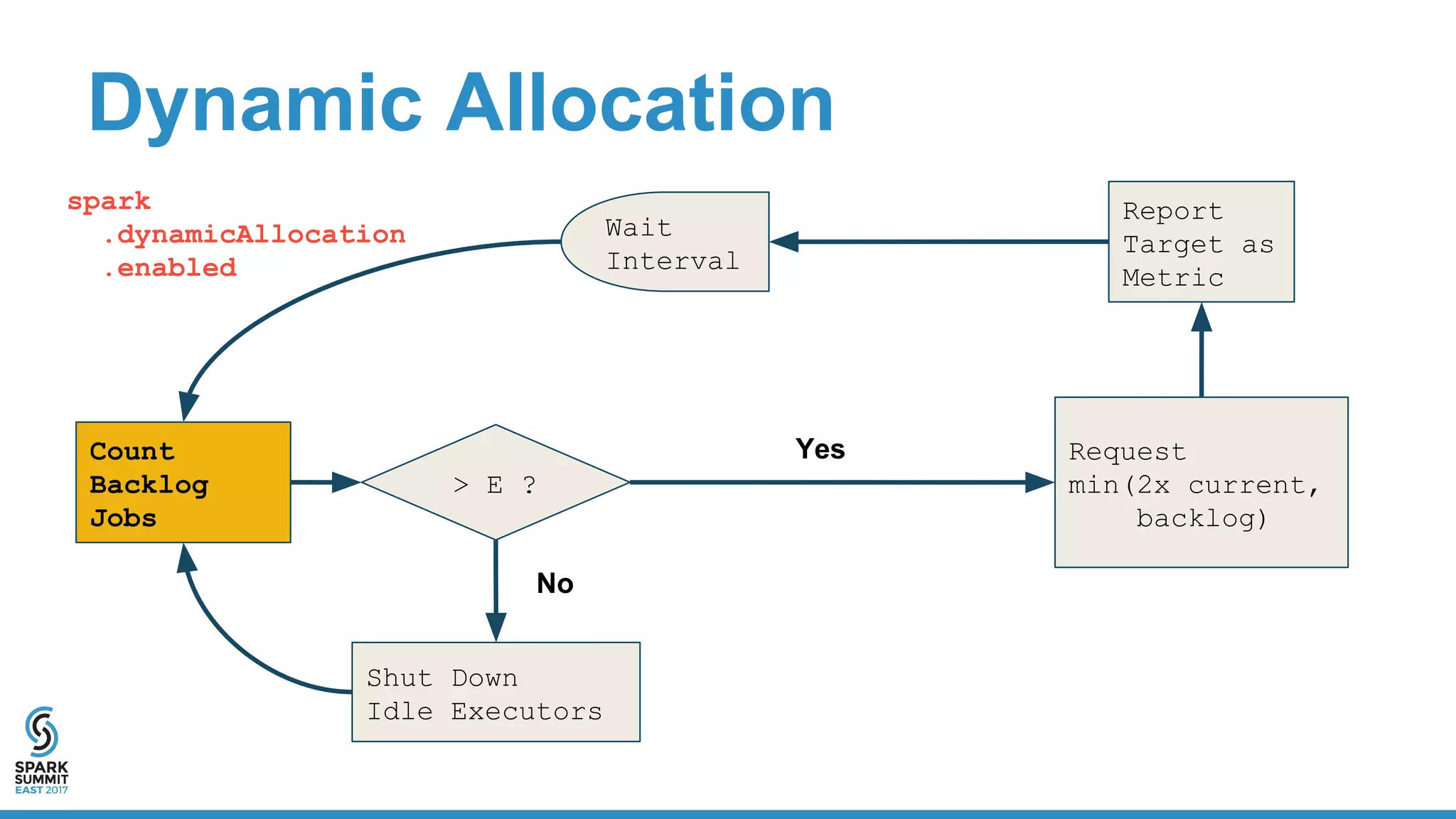

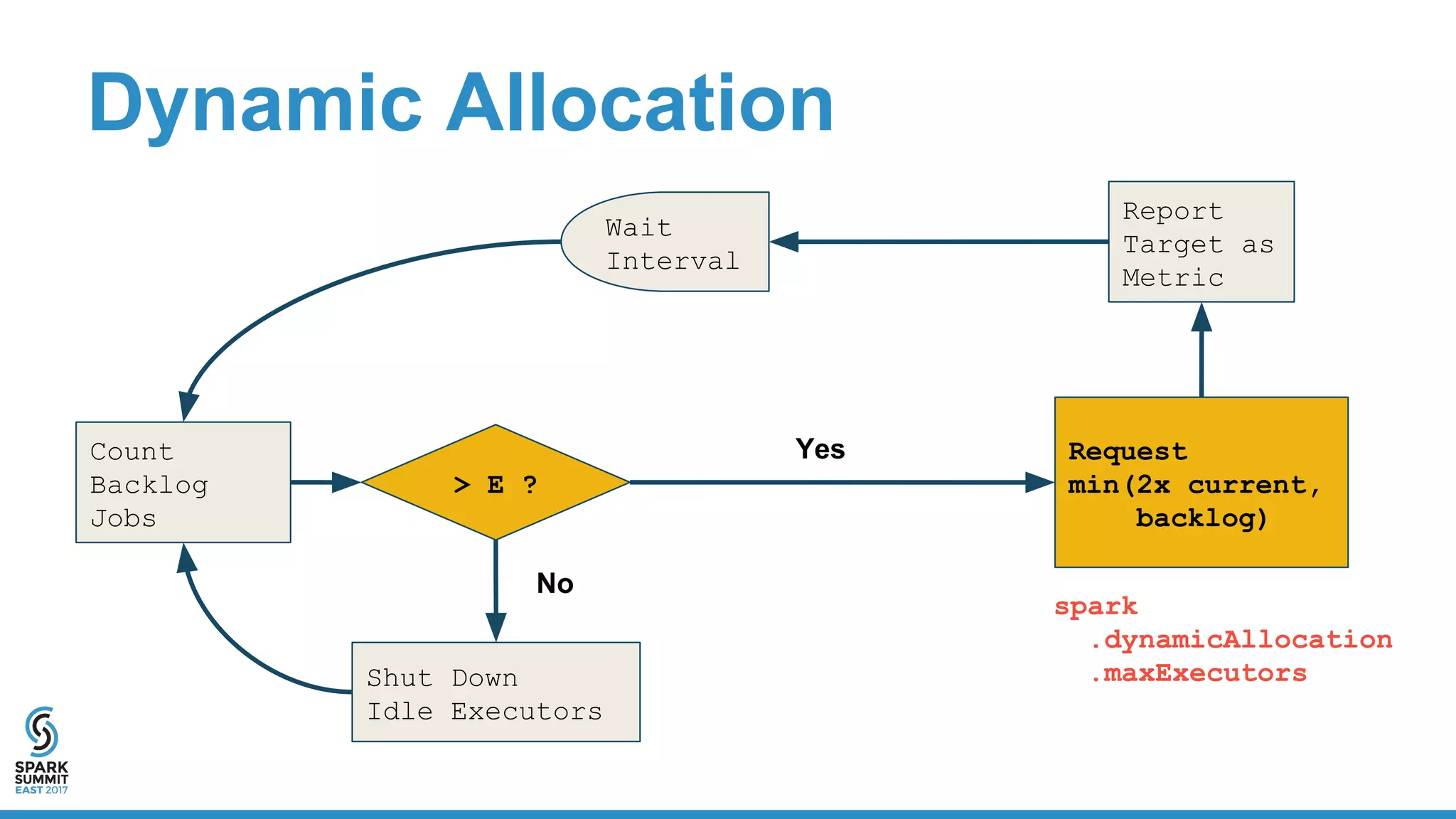

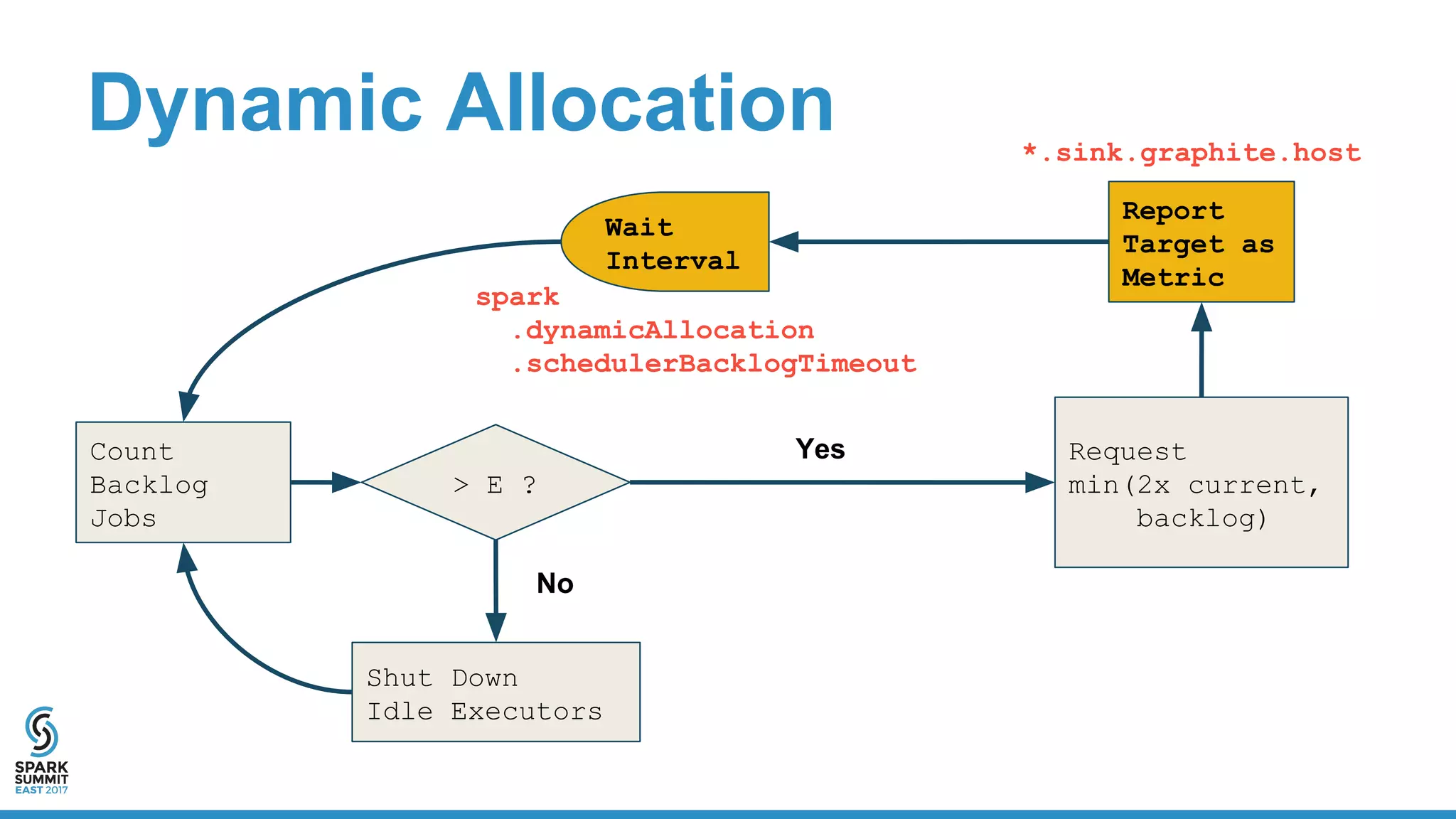

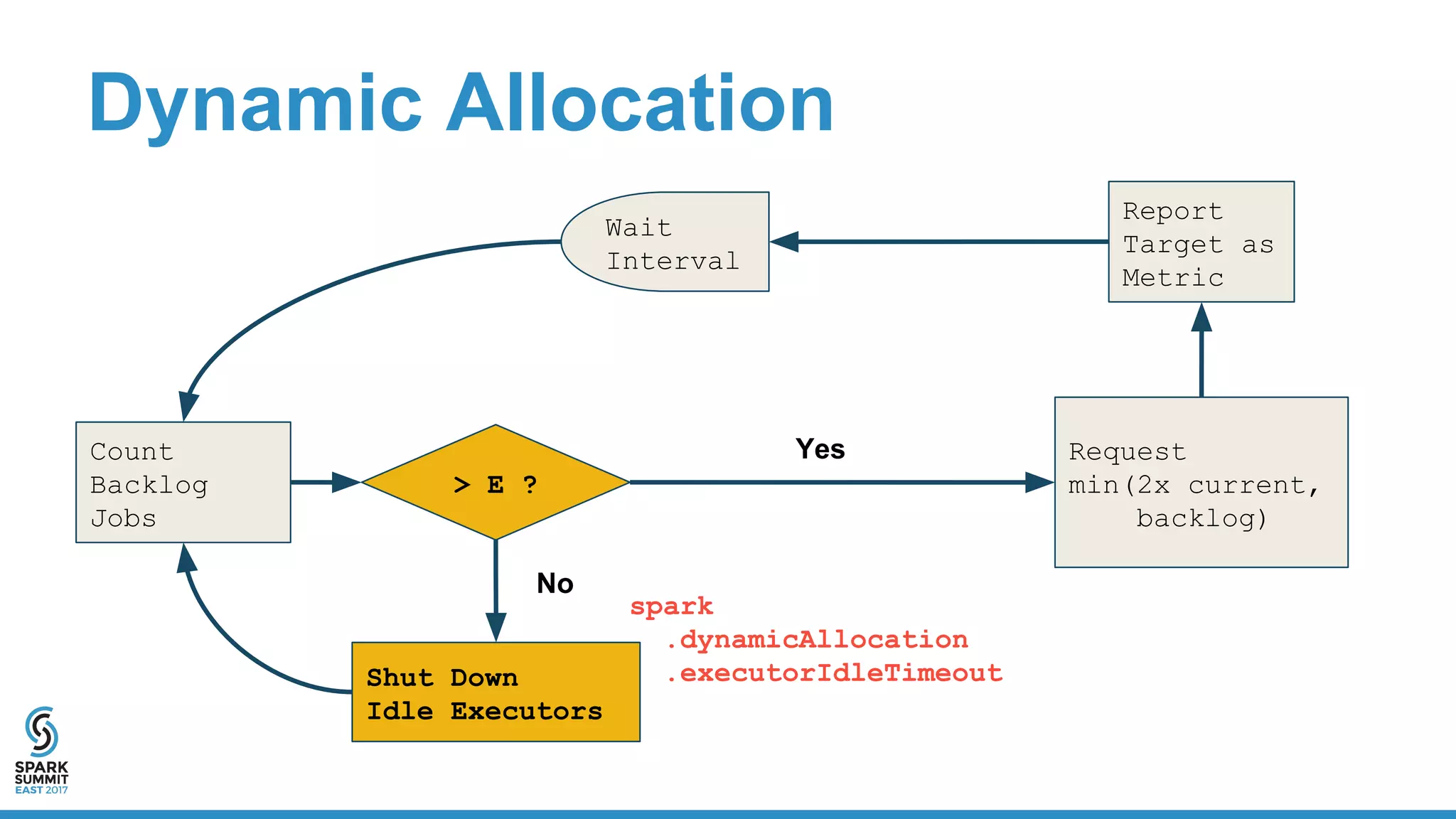

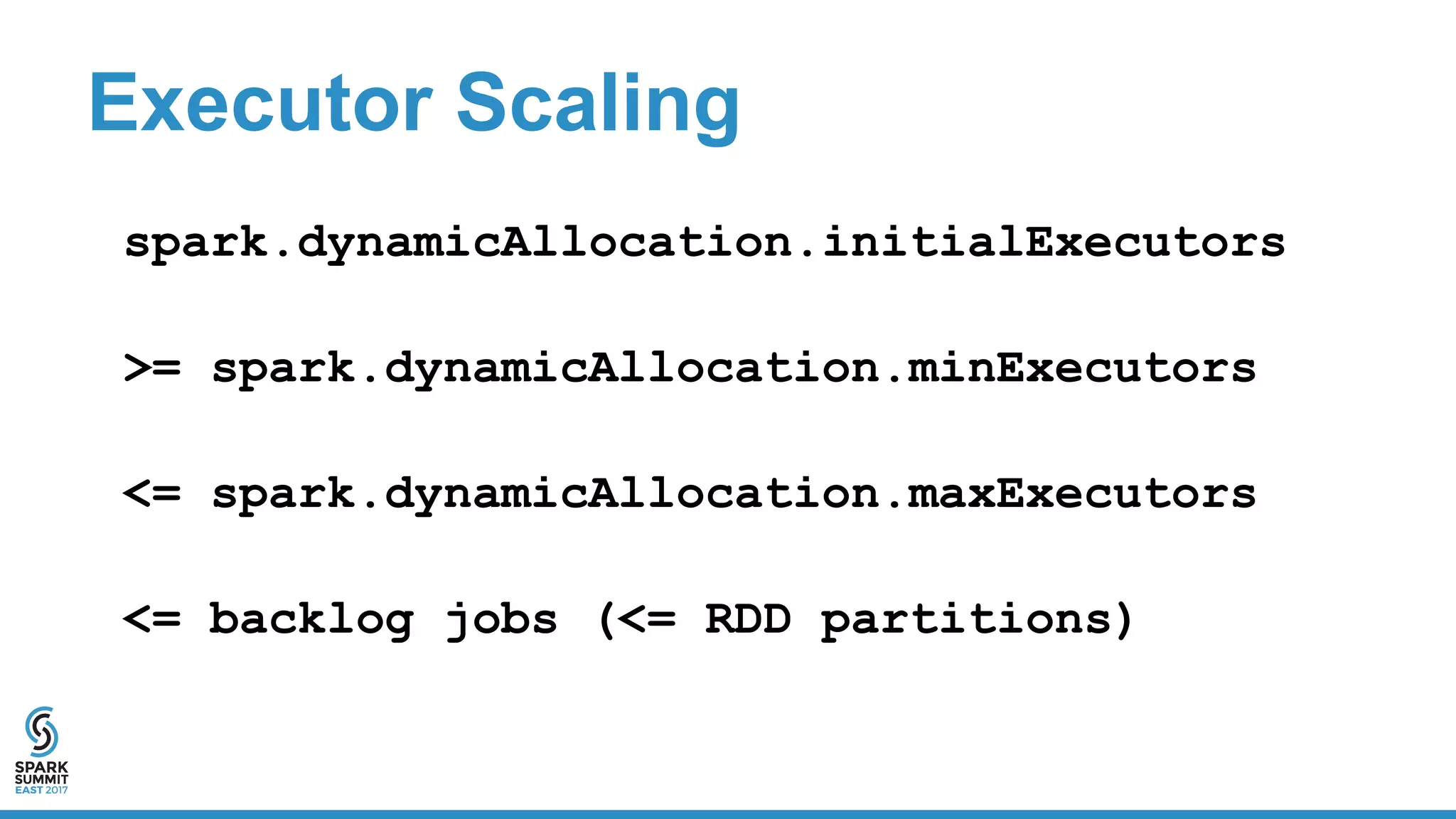

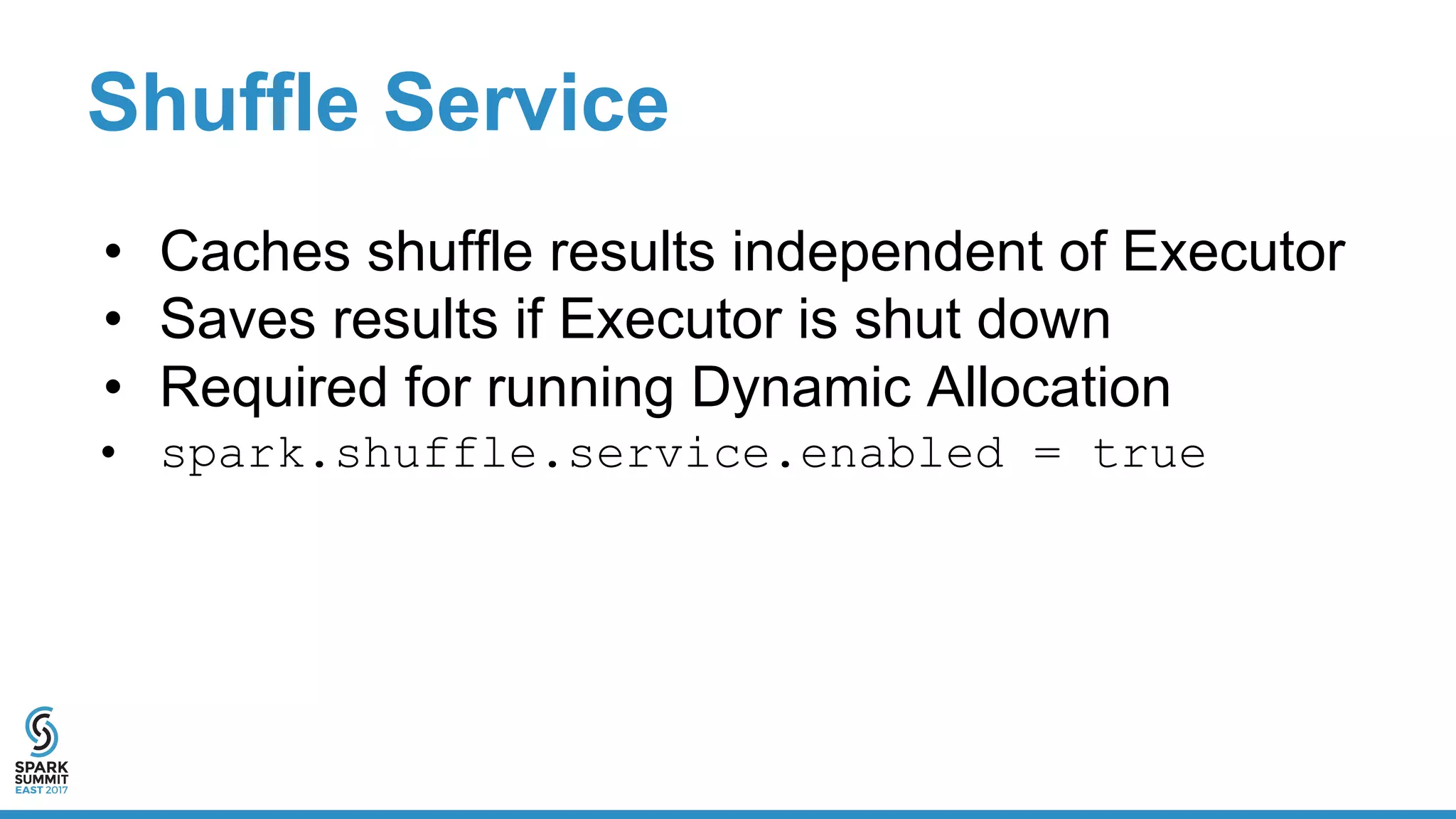

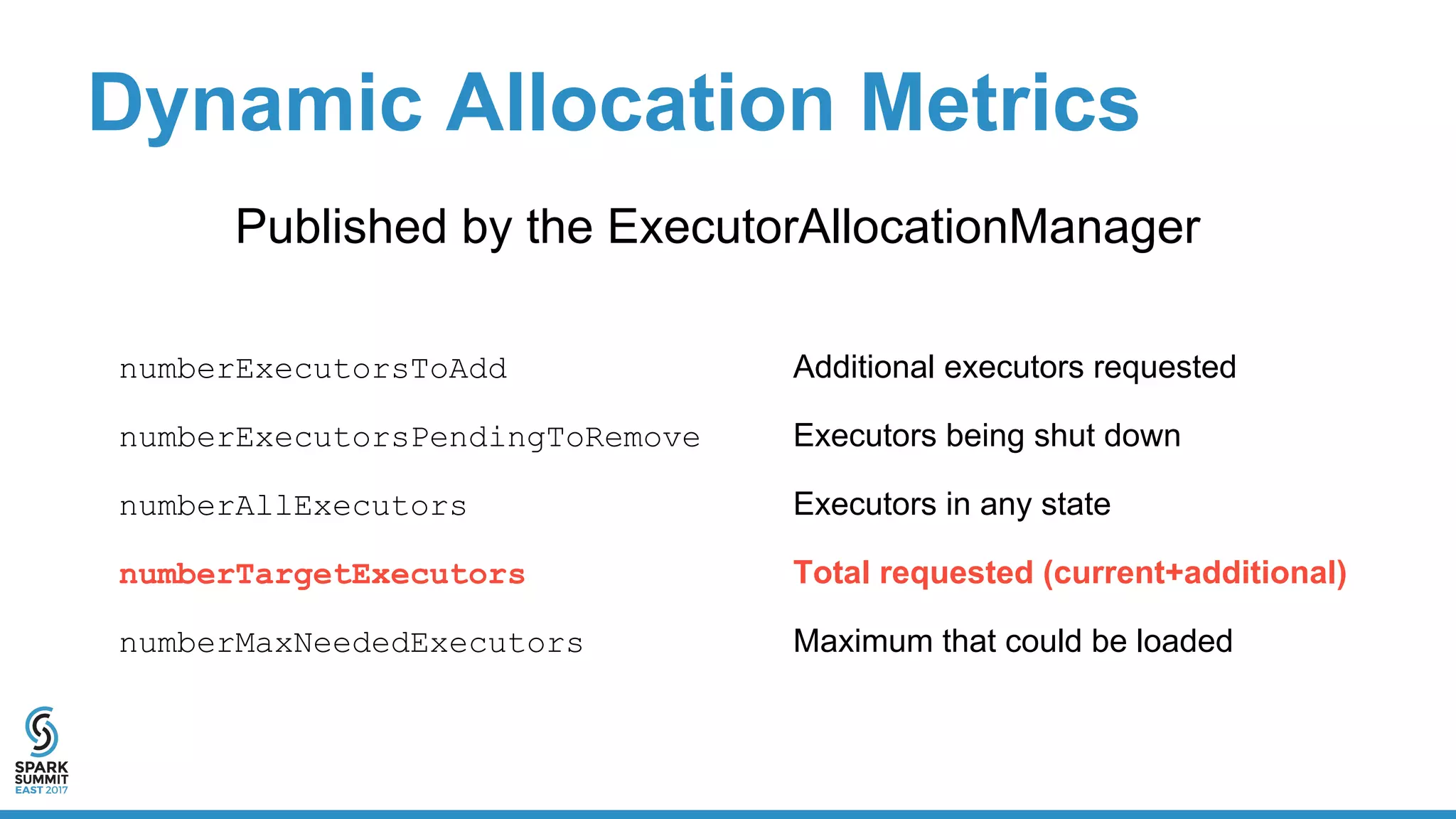

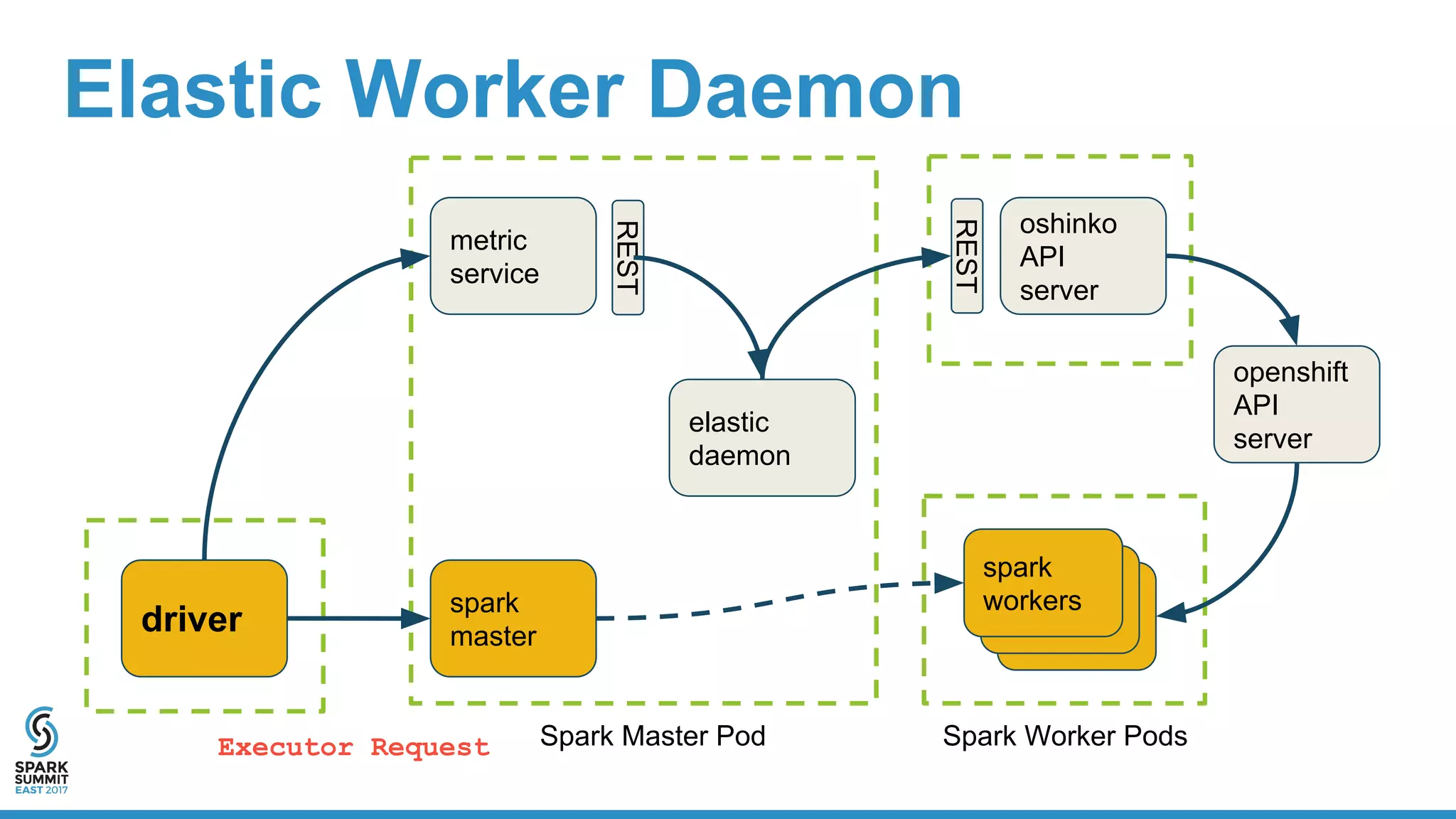

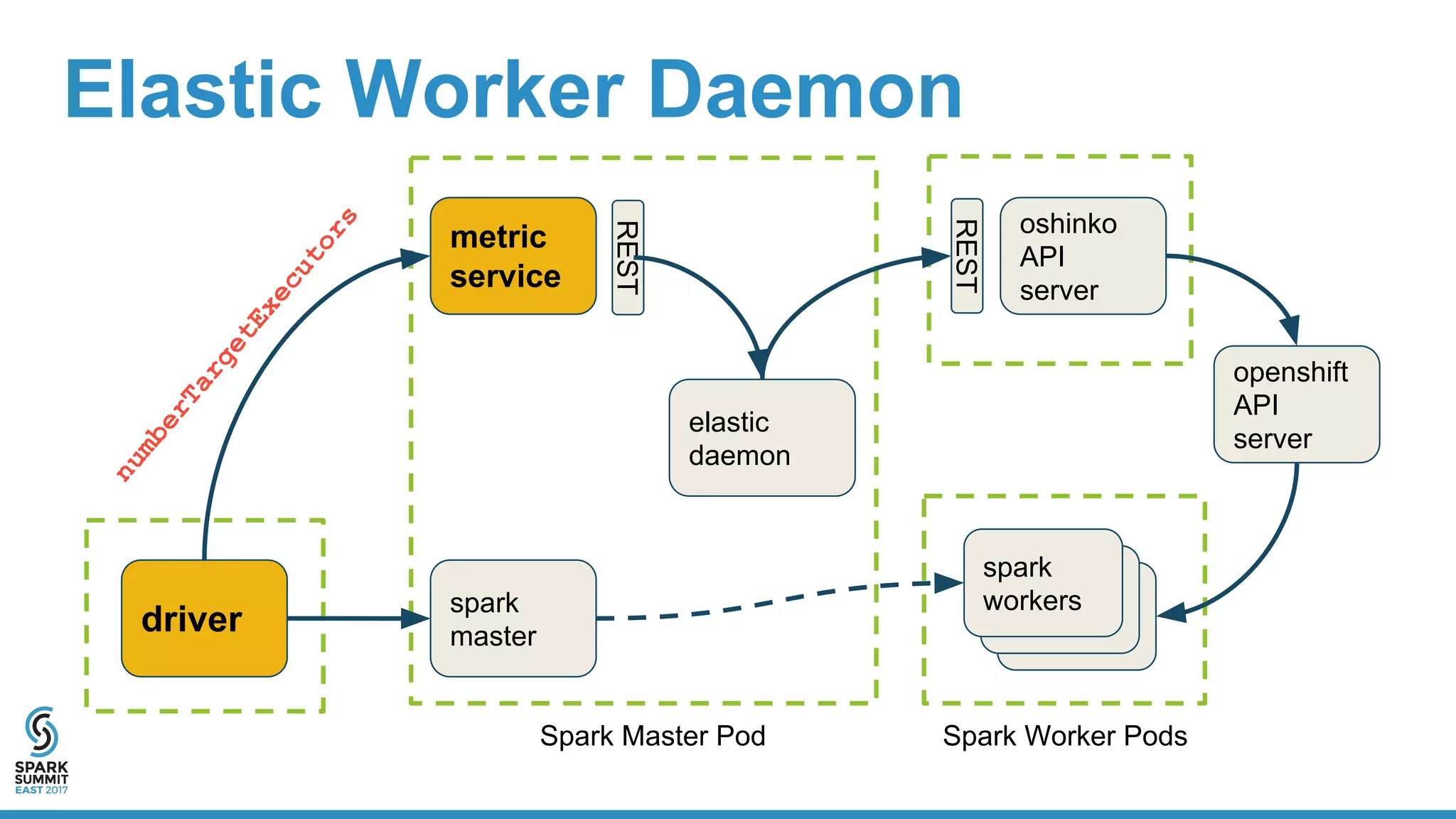

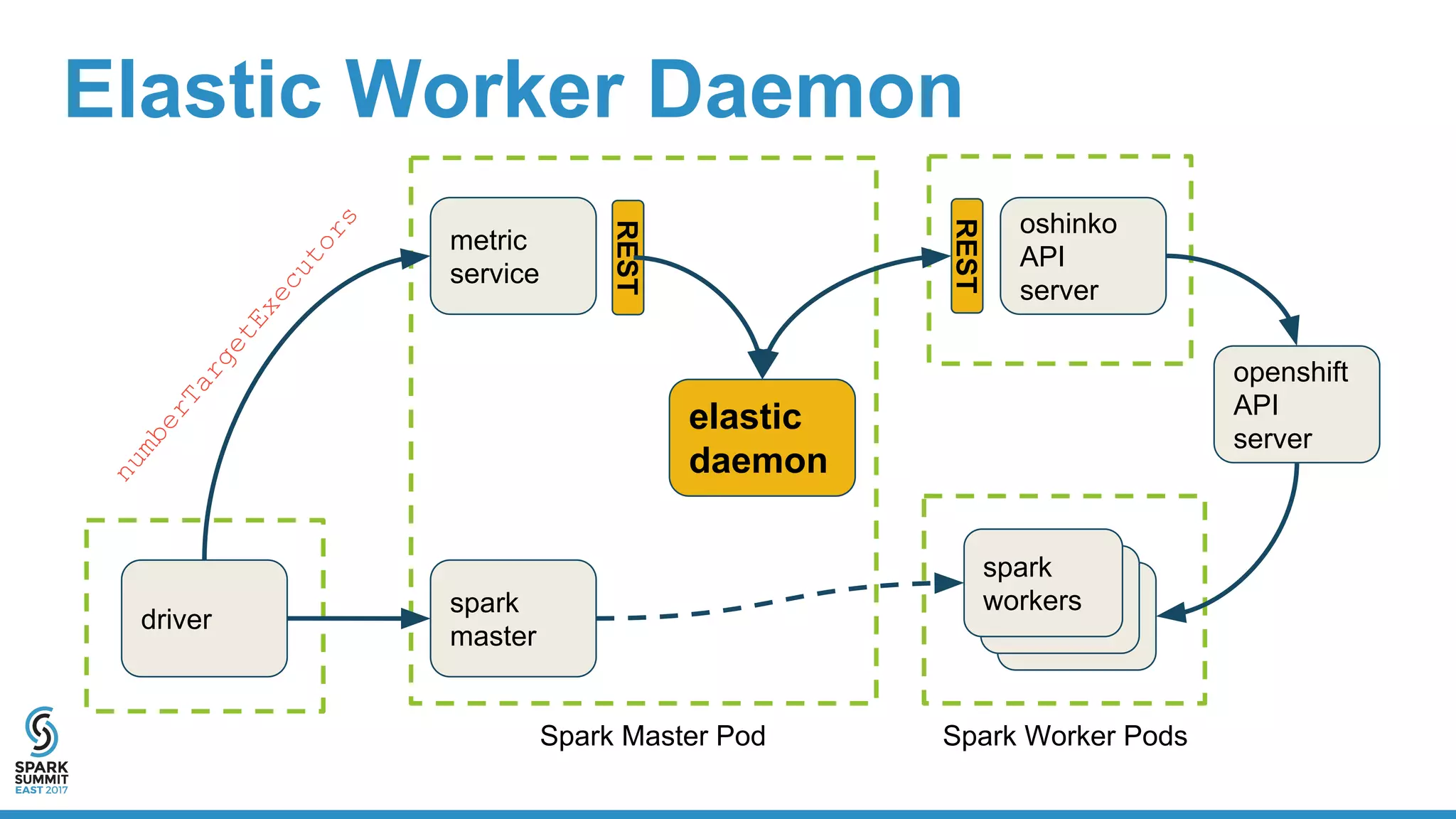

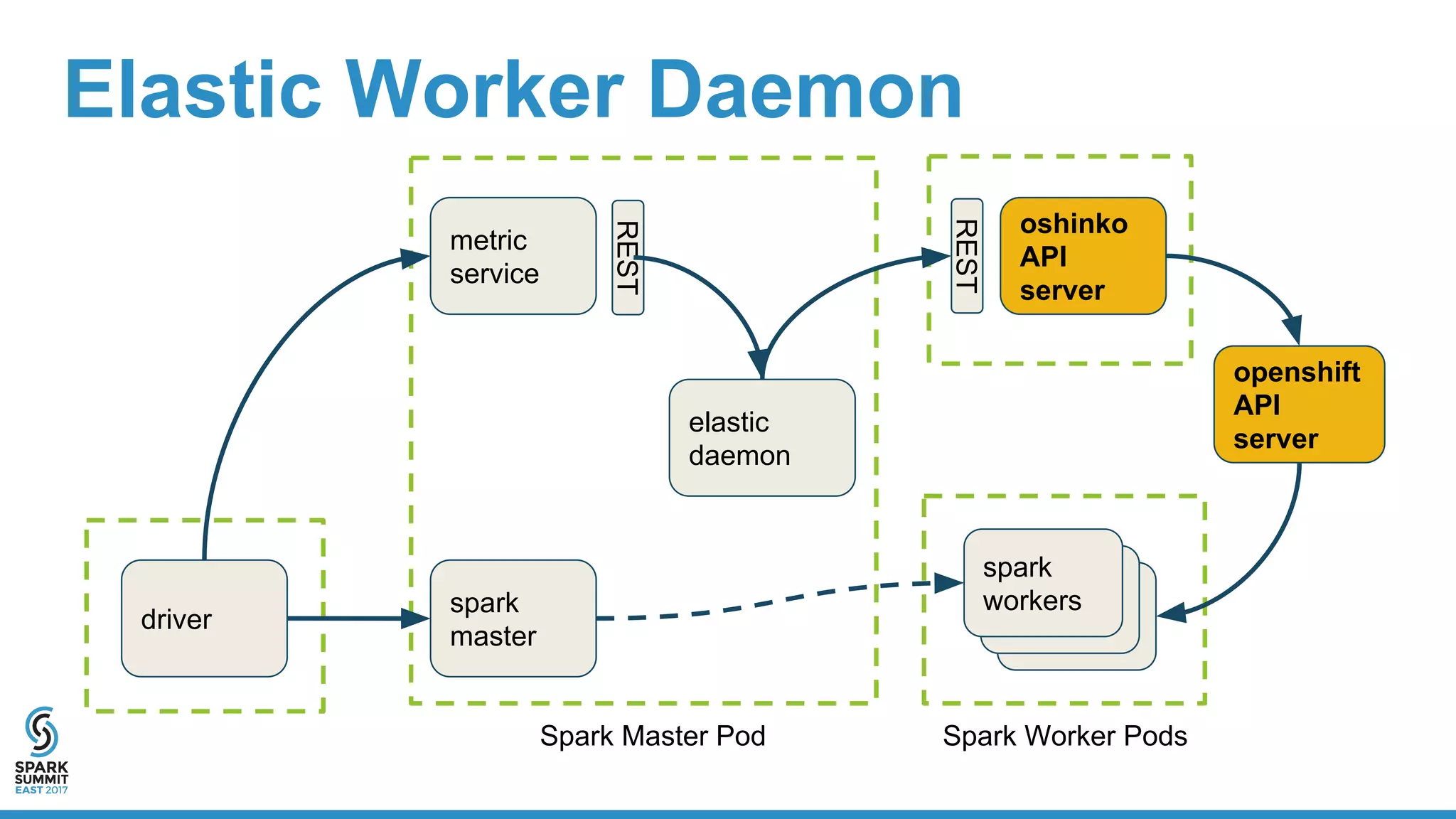

The presentation discusses integrating Apache Spark with container technologies such as Docker and Kubernetes to improve cluster management. It introduces Oshinko, a tool that simplifies deploying Spark applications in containers and offers features such as elastic worker management and a streamlined cluster creation process. It also covers dynamic allocation metrics and the benefits of containerizing Spark: efficient resource usage and easier scaling.